This is the official implementation for the papers *Active Learning on a Budget: Opposite Strategies Suit High and Low Budgets* and *Active Learning Through a Covering Lens*.

This code implements TypiClust, ProbCover, and DCoM: simple and effective low-budget active learning methods.

Arxiv link, Twitter Post link, Blog Post link

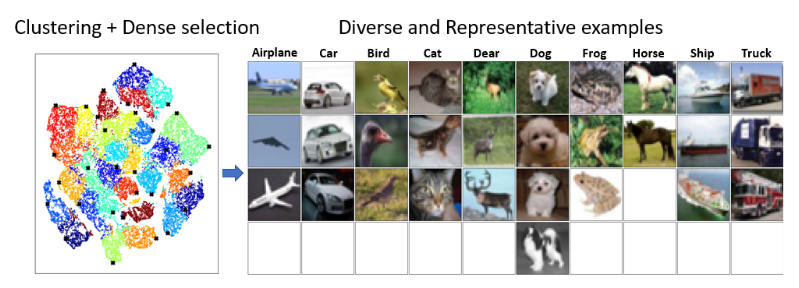

TypiClust first employs a representation learning method, then clusters the data into K clusters, and selects the most Typical (Dense) sample from every cluster. In other words, TypiClust selects samples from dense and diverse regions of the data distribution.
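The selection step described above can be sketched in a few lines. This is a minimal, dependency-free illustration and not the repository's implementation: it assumes the embeddings and cluster labels have already been computed, and it assumes typicality is measured as the inverse of the mean distance to the `k` nearest neighbors within the same cluster. The names `typicality` and `select_typical` are hypothetical.

```python
import math
from collections import defaultdict


def typicality(index, candidates, points, k):
    """Inverse mean distance from points[index] to its k nearest candidates.

    Assumption: "typical" means lying in a dense region, so a small mean
    distance to the nearest neighbors yields a high score.
    """
    dists = sorted(
        math.dist(points[index], points[j]) for j in candidates if j != index
    )
    nearest = dists[:k]
    if not nearest:
        return 0.0  # singleton cluster: no neighbors to compare against
    return 1.0 / (sum(nearest) / len(nearest) + 1e-12)


def select_typical(points, labels, k=2):
    """Return one index per cluster: the member with the highest typicality."""
    clusters = defaultdict(list)
    for idx, label in enumerate(labels):
        clusters[label].append(idx)

    selected = []
    for label in sorted(clusters):
        members = clusters[label]
        best = max(members, key=lambda i: typicality(i, members, points, k))
        selected.append(best)
    return selected


# Toy data: two clusters, each with a tight core and one outlier.
points = [
    (0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (5.0, 5.0),
    (10.0, 10.0), (10.1, 10.0), (10.0, 10.1), (20.0, 20.0),
]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
print(select_typical(points, labels, k=2))
```

On this toy data the point at the center of each tight core is picked, and the outliers are never selected, which mirrors the "dense and diverse" behavior described above.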

Selection of 30 samples on CIFAR-10:

Selection of 10 samples from a GMM:

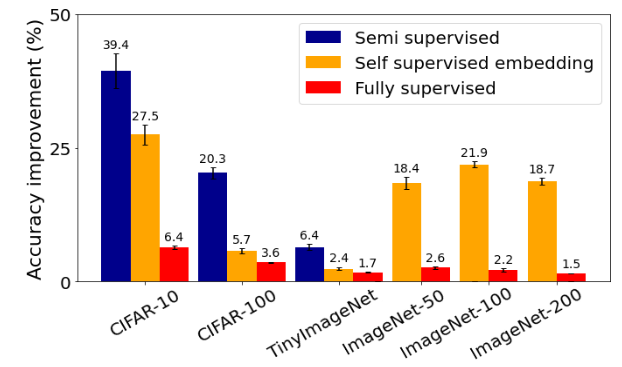

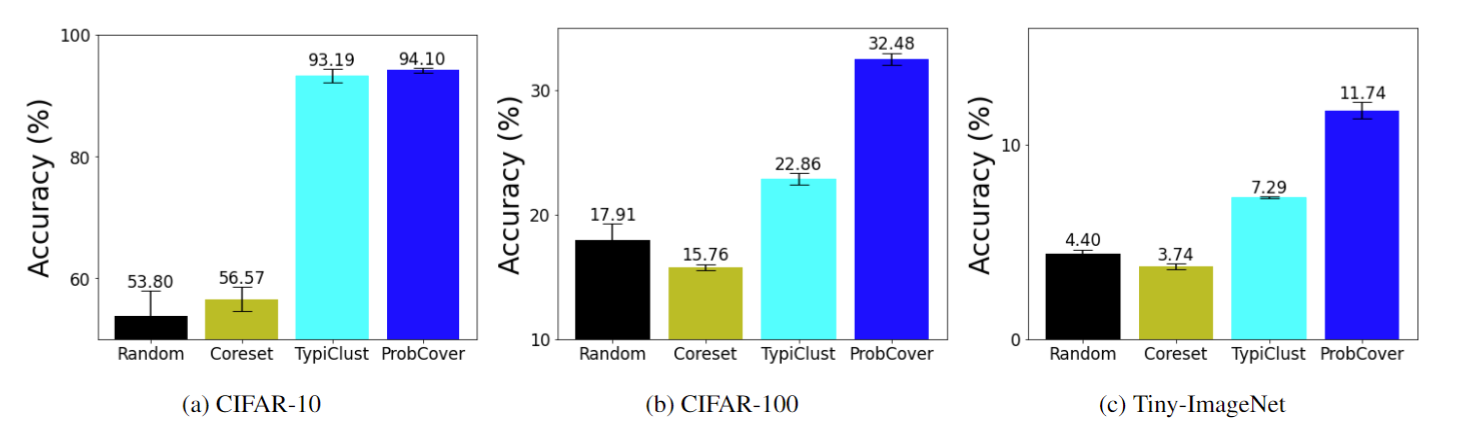

TypiClust Results summary


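ProbCover also uses a representation learning method. Then, a ball of radius δ is placed around every point, and the subset of b balls that covers the most points is selected; the ball centers are the samples chosen for labeling.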
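As a rough illustration of the covering idea behind ProbCover, the sketch below places a ball of fixed radius around every embedded point and greedily picks the centers whose balls cover the most still-uncovered points. It is a simplified, dependency-free sketch and not the repository's code: the function name `probcover_select`, the parameter names `delta` and `budget`, and the brute-force distance computation are all assumptions made for readability.

```python
import math


def probcover_select(points, delta, budget):
    """Greedily pick up to `budget` ball centers that cover the most points.

    Returns the selected indices and the set of indices left uncovered.
    """
    n = len(points)
    # covers[i] holds the indices of all points inside the delta-ball
    # centered at point i (the point itself included).
    covers = [
        {j for j in range(n) if math.dist(points[i], points[j]) <= delta}
        for i in range(n)
    ]

    uncovered = set(range(n))
    selected = []
    for _ in range(budget):
        candidates = [i for i in range(n) if i not in selected]
        if not candidates:
            break
        # Choose the center whose ball covers the most uncovered points.
        best = max(candidates, key=lambda i: len(covers[i] & uncovered))
        selected.append(best)
        uncovered -= covers[best]
    return selected, uncovered


# Toy 1-D embeddings: a group of three, a pair, and an isolated point.
points = [(0.0,), (1.0,), (2.0,), (10.0,), (11.0,), (20.0,)]
selected, uncovered = probcover_select(points, delta=1.0, budget=2)
print(selected, uncovered)
```

With a budget of two, the sketch first takes the middle of the group of three, then one point of the pair, and leaves the isolated point uncovered. The brute-force pairwise distances are quadratic in the number of points, so this form is only suitable for small examples.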

Unfolding selection of ProbCover

ProbCover results in the Semi-Supervised training framework

DCoM employs a representation learning approach. Initially, a

Illustration of DCoM's

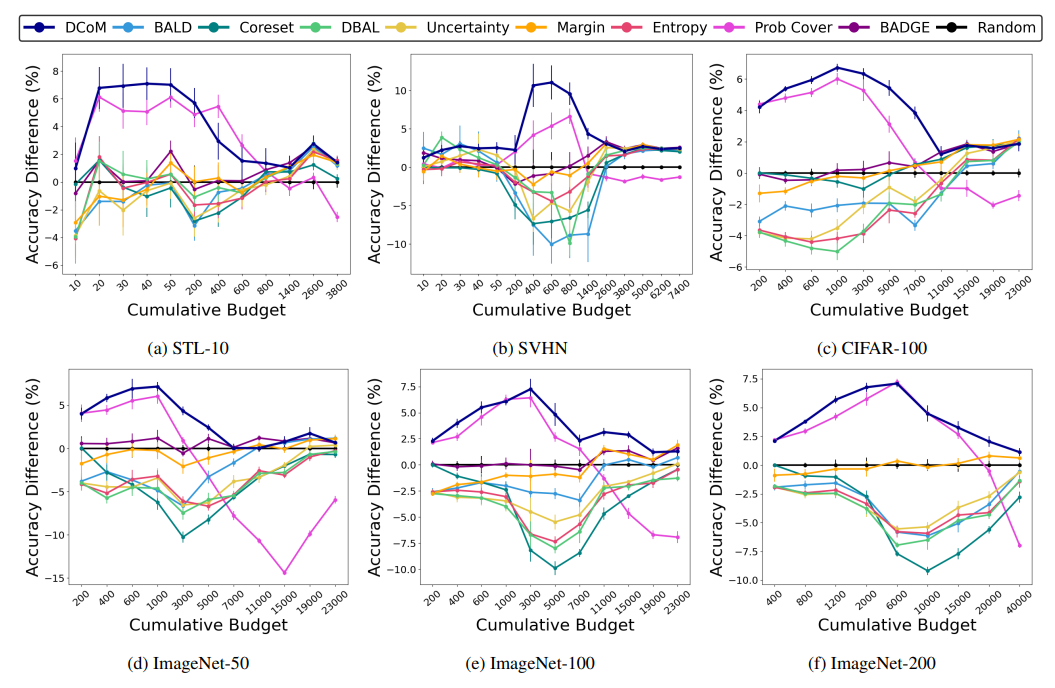

DCoM results in the Supervised training framework

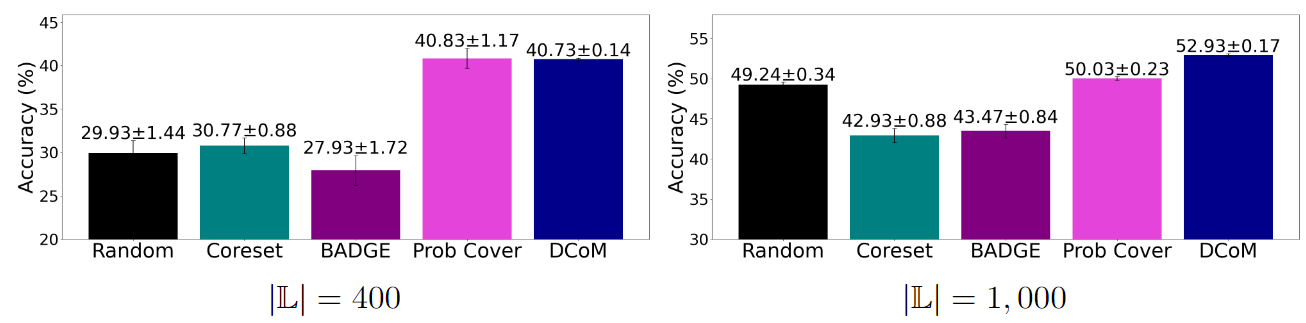

DCoM results in the Semi-Supervised training framework

Please see USAGE for brief instructions on installation and basic usage examples.

This repository makes use of two other repositories: SCAN and Deep-AL. Please consider citing their work and ours:

@article{hacohen2022active,
  title={Active learning on a budget: Opposite strategies suit high and low budgets},
  author={Hacohen, Guy and Dekel, Avihu and Weinshall, Daphna},
  journal={arXiv preprint arXiv:2202.02794},
  year={2022}
}

@article{yehudaActiveLearningCovering2022,
  title={Active Learning Through a Covering Lens},
  author={Yehuda, Ofer and Dekel, Avihu and Hacohen, Guy and Weinshall, Daphna},
  journal={arXiv preprint arXiv:2205.11320},
  year={2022}
}

@article{mishal2024dcom,
  title={DCoM: Active Learning for All Learners},
  author={Mishal, Inbal and Weinshall, Daphna},
  journal={arXiv preprint arXiv:2407.01804},
  year={2024}
}

This toolkit is released under the MIT license. Please see the LICENSE file for more information.