
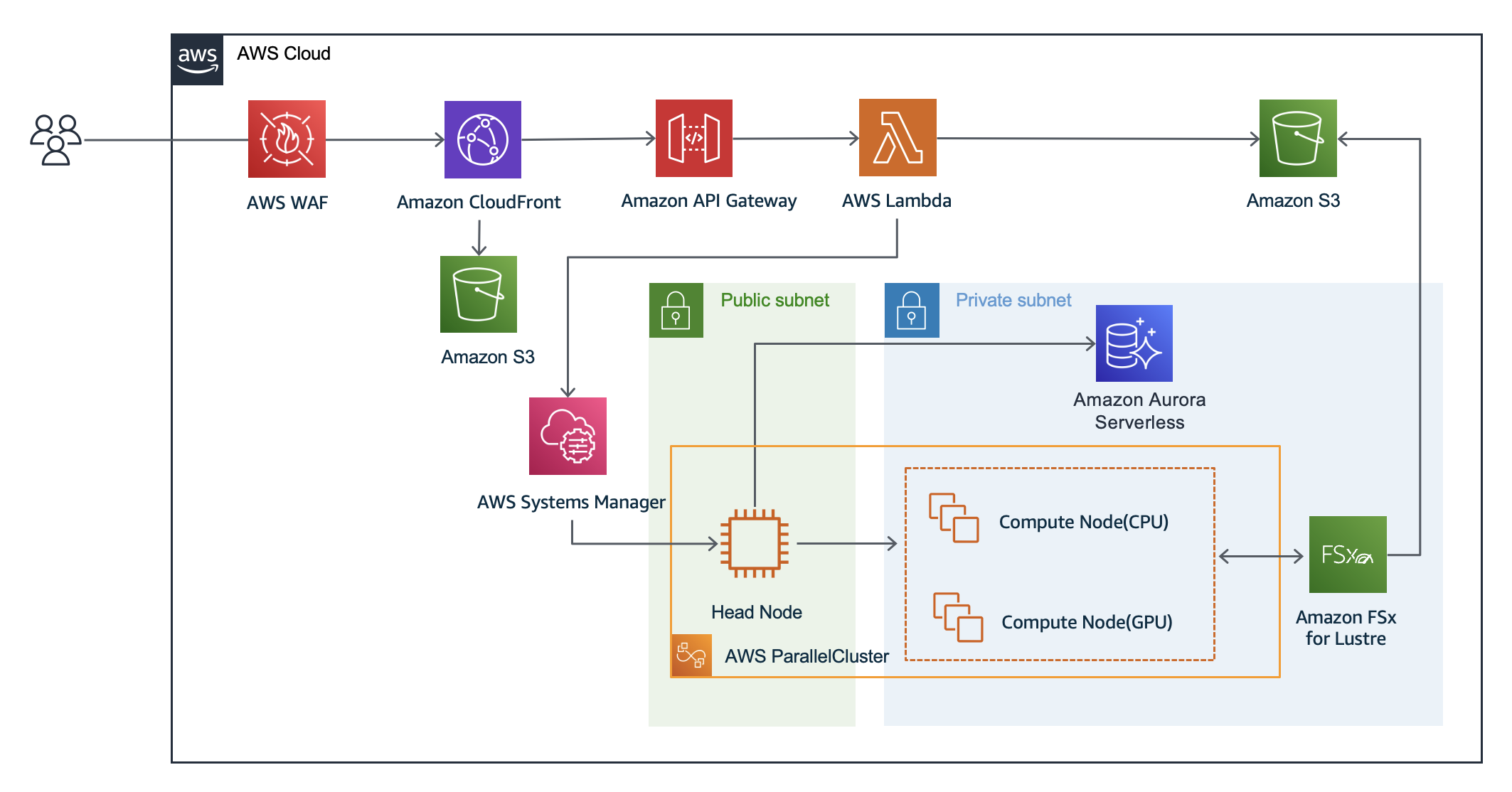

AlphaFold2 Webapp on AWS provides a web frontend that lets users run AlphaFold2 or ColabFold through a GUI. In addition, administrators can easily build an AlphaFold2 or ColabFold analysis environment with the AWS CDK. For more information, see the AWS HPC Blog post "Running protein structure prediction at scale using a web interface for researchers".

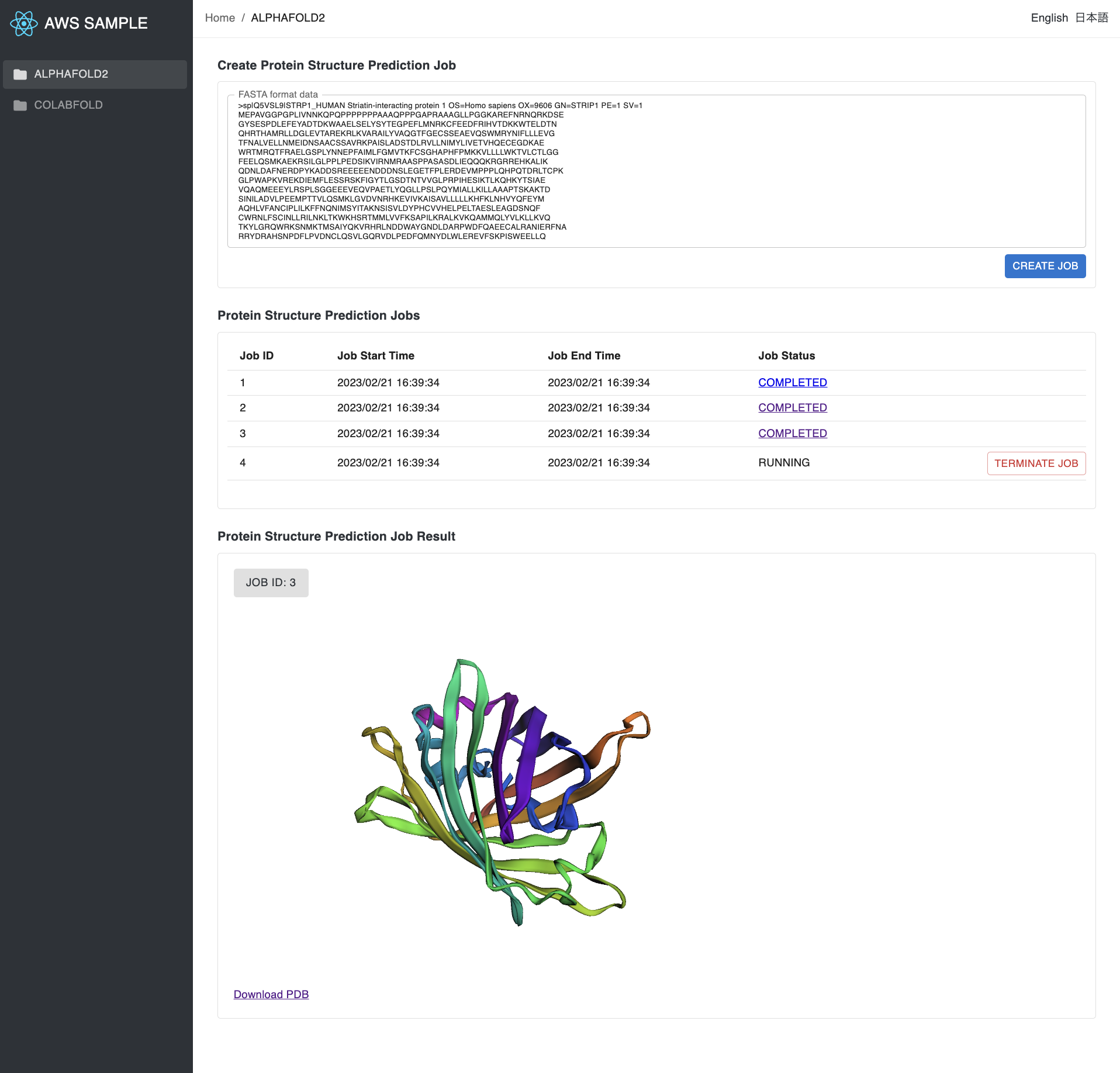

NOTE: The frontend has two tabs, AlphaFold2 and ColabFold, each with its own page. However, only one of them works. If the HeadNode specified during frontend setup belongs to an AlphaFold2 cluster, only the AlphaFold2 page works; if it belongs to a ColabFold cluster, only the ColabFold page works.

NOTE: We recommend that you set up your development environment with AWS Cloud9 by following the steps in the next section. To use your own environment instead, install the following:

- Install the AWS CLI and set up its configuration and credentials

- Install Python 3

- Install Node.js LTS

- Install Docker Desktop

NOTE: We recommend that you create your AWS Cloud9 environment in the us-east-1 (N. Virginia) Region.

NOTE: If you create an AWS Cloud9 environment using the following commands, the prerequisites above (AWS CLI, Python, Node.js, and Docker) are pre-configured in Cloud9.

- Launch AWS CloudShell and run the following commands.

git clone https://github.com/aws-samples/cloud9-setup-for-prototyping

cd cloud9-setup-for-prototyping

- To assign an Elastic IP to Cloud9, open the `params.json` file with `vim params.json`. Change the `attach_eip` option, which follows `"volume_size": 128`, from `"attach_eip": false` to `"attach_eip": true`.
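If you would rather not edit the file interactively, you can script the same change. The sketch below is minimal and assumes that `params.json` holds a single JSON object with an `attach_eip` key. `enable_elastic_ip` is a hypothetical helper and is not part of the setup repository. Rewriting the file this way also normalizes its indentation.

```python
import json
from pathlib import Path


def enable_elastic_ip(path: str = "params.json") -> dict:
    """Set "attach_eip" to true in a params file and return the updated dict.

    Hypothetical helper: assumes the file holds a single JSON object.
    Other keys (such as "volume_size") are left unchanged.
    """
    params_file = Path(path)
    params = json.loads(params_file.read_text())
    params["attach_eip"] = True
    # Rewrite the whole file with two-space indentation and a trailing newline.
    params_file.write_text(json.dumps(params, indent=2) + "\n")
    return params
```

Run `enable_elastic_ip()` from the `cloud9-setup-for-prototyping` directory to update `params.json` in place.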

- Launch the Cloud9 environment `cloud9-for-prototyping`.

./bin/bootstrap

NOTE: When the bootstrap process completes, the Elastic IP assigned to Cloud9 is displayed on the screen. Copy this IP for future reference.

Elastic IP: 127.0.0.1 (example)

- Open the AWS Cloud9 console, and open the environment named `cloud9-for-prototyping`.

- On the menu bar at the top of the AWS Cloud9 IDE, choose Window > New Terminal, or use an existing terminal window.

- In the terminal window, enter the following.

git clone https://github.com/aws-samples/alphafold-protein-structure-prediction-with-frontend-app.git

- Go to the `alphafold-protein-structure-prediction-with-frontend-app` directory.

cd alphafold-protein-structure-prediction-with-frontend-app/

NOTE: The following commands use the us-east-1 (N. Virginia) Region.

NOTE: We recommend that you use the Cloud9 environment for the following steps.

- Before you deploy the backend CDK stack, you need to build the frontend. In the terminal window of the Cloud9 IDE, enter the following.

## Build the frontend CDK stack

cd app

npm install

npm run build

- Change the value of `c9Eip` in `provisioning/bin/provisioning.ts` to the Elastic IP address that was assigned to Cloud9 in the steps above.

-const c9Eip = 'your-cloud9-ip'

+const c9Eip = 'xx.xx.xx.xx'

- After the modification, deploy the backend CDK stack.

cd ../provisioning

npm install

npx cdk bootstrap

## Set up the network, database, and storage

npx cdk deploy Alphafold2ServiceStack --require-approval never

cd ../

- When the above command finishes, you will see output like the following.

- If you miss these outputs, you can find them on the Outputs tab of the stack named `Alphafold2ServiceStack` in the AWS CloudFormation console.

Output:

Alphafold2ServiceStack.AuroraCredentialSecretArn = arn:aws:secretsmanager:us-east-1:123456789012:secret:AuroraCredentialSecretxxxyyyzzz

Alphafold2ServiceStack.AuroraPasswordArn = arn:aws:secretsmanager:us-east-1:123456789012:secret:AuroraPasswordxxxyyyzzz

Alphafold2ServiceStack.ExportsOutputRefHpcBucketxxxyyyzzz = alphafold2servicestack-hpcbucketxxxyyyzzz

Alphafold2ServiceStack.FsxFileSystemId = fs-xxxyyyzzz

Alphafold2ServiceStack.GetSSHKeyCommand = aws ssm get-parameter --name /ec2/keypair/key-xxxyyyzzz --region us-east-1 --with-decryption --query Parameter.Value --output text > ~/.ssh/keypair-alphafold2.pem

...
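Later steps reuse these values (for example, `GetSSHKeyCommand` below). If you paste the `Output:` lines into a file or a string, a small parser can pick them out. The sketch below is minimal and assumes that each output appears on a single line in the `Stack.OutputKey = value` form shown above. `parse_cdk_outputs` is a hypothetical helper.

```python
from typing import Dict


def parse_cdk_outputs(text: str) -> Dict[str, Dict[str, str]]:
    """Parse 'Stack.OutputKey = value' lines into {stack: {key: value}}.

    Hypothetical helper; lines without ' = ' or without a 'Stack.' prefix
    (such as 'Output:' or '...') are skipped.
    """
    outputs: Dict[str, Dict[str, str]] = {}
    for line in text.splitlines():
        if " = " not in line:
            continue
        name, value = line.split(" = ", 1)
        stack, dot, key = name.strip().partition(".")
        if not dot:
            continue  # not in 'Stack.Key' form
        outputs.setdefault(stack, {})[key] = value.strip()
    return outputs


# Placeholder values copied from the example output above.
pasted = """Output:
Alphafold2ServiceStack.FsxFileSystemId = fs-xxxyyyzzz
Alphafold2ServiceStack.GetSSHKeyCommand = aws ssm get-parameter --name /ec2/keypair/key-xxxyyyzzz --region us-east-1 --with-decryption --query Parameter.Value --output text > ~/.ssh/keypair-alphafold2.pem
..."""

stack_outputs = parse_cdk_outputs(pasted)["Alphafold2ServiceStack"]
print(stack_outputs["FsxFileSystemId"])  # fs-xxxyyyzzz
```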

- From the outputs above, copy the value of `Alphafold2ServiceStack.GetSSHKeyCommand` (`aws ssm get-parameter ...`) and enter it in the Cloud9 terminal. This command fetches the private key and saves it to Cloud9.

aws ssm get-parameter --name /ec2/keypair/key-{your key ID} --region us-east-1 --with-decryption --query Parameter.Value --output text > ~/.ssh/keypair-alphafold2.pem

## change the access mode of private key

chmod 600 ~/.ssh/keypair-alphafold2.pem

- Now that the backend is built, the next step is to create a cluster for protein structure prediction.

- In the Cloud9 IDE terminal, enter the following.

- You can modify `config.yml` to choose an instance type that suits your workload.

## Install AWS ParallelCluster CLI

pip3 install aws-parallelcluster==3.7.2 --user

## Set the default region

export AWS_DEFAULT_REGION=us-east-1

## Generate a configuration file for a ParallelCluster cluster

npx ts-node provisioning/hpc/alphafold2/config/generate-template.ts

## Create a ParallelCluster cluster

pcluster create-cluster --cluster-name hpccluster --cluster-configuration provisioning/hpc/alphafold2/config/config.yml

For ColabFold:

npx ts-node provisioning/hpc/colabfold/config/generate-template.ts

pcluster create-cluster --cluster-name hpccluster --cluster-configuration provisioning/hpc/colabfold/config/config.yml
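The AlphaFold2 and ColabFold variants above differ only in the directory under `provisioning/hpc/`. To illustrate, a hypothetical helper can derive both commands from that directory name and print them for review (a dry run) before you paste them into the terminal. `cluster_setup_commands` is not part of the repository.

```python
import shlex
from typing import List

# Hypothetical helper (not part of the repository): the AlphaFold2 and
# ColabFold variants in this README differ only in the provisioning/hpc/ subdirectory.
SUPPORTED_APPS = ("alphafold2", "colabfold")


def cluster_setup_commands(app: str, cluster_name: str = "hpccluster") -> List[List[str]]:
    """Return the template-generation and cluster-creation commands for one app."""
    if app not in SUPPORTED_APPS:
        raise ValueError(f"app must be one of {SUPPORTED_APPS}, got {app!r}")
    config_dir = f"provisioning/hpc/{app}/config"
    return [
        ["npx", "ts-node", f"{config_dir}/generate-template.ts"],
        [
            "pcluster", "create-cluster",
            "--cluster-name", cluster_name,
            "--cluster-configuration", f"{config_dir}/config.yml",
        ],
    ]


# Dry run: print the commands for review instead of executing them.
for command in cluster_setup_commands("colabfold"):
    print(shlex.join(command))
```

Printing the commands instead of running them keeps the sketch free of side effects. To execute one, pass its list to `subprocess.run(command, check=True)` from the repository root.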

- You can check the cluster creation status using the following command.

pcluster list-clusters

Output:

{

"clusters": [

{

"clusterName": "hpccluster",

## Wait until CREATE_COMPLETE

"cloudformationStackStatus": "CREATE_COMPLETE",

...

- Create a web frontend and connect it to the cluster created in the previous step.

- In the terminal of the Cloud9 IDE, enter the following.

## Get the instance ID of the cluster's HeadNode

pcluster describe-cluster -n hpccluster | grep -A 5 headNode | grep instanceId

Output:

"instanceId": "i-{your_headnode_instanceid}",

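`pcluster` prints JSON, so you can parse the output of `pcluster list-clusters` and `pcluster describe-cluster -n hpccluster` directly instead of using `grep` (for example, after saving each to a file). The sketch below is minimal. The helper names are hypothetical, and the field layout is assumed from the outputs shown above: a `clusters` array whose entries have `clusterName` and `cloudformationStackStatus`, and a `headNode` object that contains `instanceId`.

```python
import json
from typing import Optional


def cluster_status(list_clusters_json: str, cluster_name: str = "hpccluster") -> Optional[str]:
    """Return cloudformationStackStatus for cluster_name, or None if it is not listed."""
    for cluster in json.loads(list_clusters_json).get("clusters", []):
        if cluster.get("clusterName") == cluster_name:
            return cluster.get("cloudformationStackStatus")
    return None


def head_node_instance_id(describe_cluster_json: str) -> str:
    """Return headNode.instanceId (assumed layout of `pcluster describe-cluster` JSON)."""
    return json.loads(describe_cluster_json)["headNode"]["instanceId"]


# Trimmed, illustrative documents; real output contains more fields.
listed = '{"clusters": [{"clusterName": "hpccluster", "cloudformationStackStatus": "CREATE_COMPLETE"}]}'
described = '{"headNode": {"instanceId": "i-0123456789abcdef0"}}'

if cluster_status(listed) == "CREATE_COMPLETE":
    print(head_node_instance_id(described))  # i-0123456789abcdef0
```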
- In `provisioning/bin/provisioning.ts`, change the value of `ssmInstanceId` to the instance ID you fetched in the previous step.

-const ssmInstanceId = 'your-headnode-instanceid'

+const ssmInstanceId = 'i-{your_headnode_instanceid}'

- In `provisioning/bin/provisioning.ts`, change the value of `allowIp4Ranges` to the IPv4 address ranges (in CIDR notation) that are allowed to connect to the frontend.

-const allowIp4Ranges = ['your-global-ip-v4-cidr']

+const allowIp4Ranges = ['xx.xx.xx.xx/xx']

- After the modification, deploy the frontend CDK stack.

## Deploy the frontend CDK stack

cd ~/environment/alphafold-protein-structure-prediction-with-frontend-app/provisioning

npx cdk deploy FrontendStack --require-approval never

- When the command above finishes, you will see outputs similar to the following.

- The value of `CloudFrontWebDistributionEndpoint` is the URL of the frontend environment.

- If you miss these outputs, you can find them on the Outputs tab of the stack named `FrontendStack` in the AWS CloudFormation console.

Output:

FrontendStack.ApiGatewayEndpoint = https://xxxyyyzzz.execute-api.us-east-1.amazonaws.com/api

FrontendStack.ApiRestApiEndpointXXYYZZ = https://xxxyyyzzz.execute-api.us-east-1.amazonaws.com/api/

FrontendStack.CloudFrontWebDistributionEndpoint = xxxyyyzzz.cloudfront.net
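Only clients whose IP addresses fall within `allowIp4Ranges` are meant to reach this frontend. A sketch like the following can help you double-check the ranges you entered against your own global IP address. `is_allowed` is a hypothetical helper. It assumes `allowIp4Ranges` contains IPv4 CIDR strings like the `'xx.xx.xx.xx/xx'` placeholder. It only does the address arithmetic and does not query AWS for the deployed rules.

```python
import ipaddress
from typing import Iterable


def is_allowed(client_ip: str, allow_ip4_ranges: Iterable[str]) -> bool:
    """Return True if client_ip lies inside any CIDR range in allow_ip4_ranges.

    Hypothetical helper. Raises ValueError for entries that are not valid IPv4
    CIDR blocks, e.g. an unedited placeholder such as 'your-global-ip-v4-cidr',
    or a block with host bits set such as '203.0.113.5/24' (strict parsing).
    """
    address = ipaddress.IPv4Address(client_ip)
    networks = [ipaddress.IPv4Network(cidr) for cidr in allow_ip4_ranges]
    return any(address in network for network in networks)


# Documentation-range addresses used as stand-ins for real values.
print(is_allowed("203.0.113.10", ["203.0.113.0/24"]))  # True
print(is_allowed("198.51.100.7", ["203.0.113.0/24"]))  # False
```

Strict parsing is a design choice of this sketch. It flags a range such as `203.0.113.5/24`, which may have been meant as either `203.0.113.5/32` or `203.0.113.0/24`.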

## SSH login to ParallelCluster's HeadNode using private key

export AWS_DEFAULT_REGION=us-east-1

pcluster ssh --cluster-name hpccluster -i ~/.ssh/keypair-alphafold2.pem

- Once you are logged in to the HeadNode of the ParallelCluster cluster, install AlphaFold2.

bash /fsx/alphafold2/scripts/bin/app_install.sh

- Create a database for AlphaFold2. This process may take about 12 hours to complete. Once the job has started, it is safe to close the terminal.

nohup bash /fsx/alphafold2/scripts/bin/setup_database.sh &

For ColabFold:

bash /fsx/colabfold/scripts/bin/app_install.sh

sbatch /fsx/colabfold/scripts/setupDatabase.bth

- If you are logged out of the ParallelCluster's HeadNode, log in again.

## SSH login to ParallelCluster's HeadNode using private key

export AWS_DEFAULT_REGION=us-east-1

pcluster ssh --cluster-name hpccluster -i ~/.ssh/keypair-alphafold2.pem

- Before testing the backend, make sure the dataset download has completed.

tail /fsx/alphafold2/job/log/setup_database.out -n 8

Output:

Download Results:

gid |stat|avg speed |path/URI

======+====+===========+=======================================================

dcfd44|OK | 66MiB/s|/fsx/alphafold2/database/pdb_seqres/pdb_seqres.txt

Status Legend:

(OK):download completed.

All data downloaded.
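To check for completion from a script (for example, in a polling loop) instead of reading the log yourself, you can look for the final `All data downloaded.` line. The sketch below is minimal and assumes the setup script keeps writing that message at the end of `setup_database.out`. `database_download_finished` is a hypothetical helper.

```python
from pathlib import Path

# Final line printed by the download script, as shown in the output above.
COMPLETION_MARKER = "All data downloaded."


def database_download_finished(log_path: str, tail_lines: int = 8) -> bool:
    """Return True if the last tail_lines lines of the log contain the marker.

    Hypothetical helper mirroring `tail -n 8`; returns False if the log does
    not exist yet.
    """
    path = Path(log_path)
    if not path.exists():
        return False
    lines = path.read_text(errors="replace").splitlines()
    return any(COMPLETION_MARKER in line for line in lines[-tail_lines:])
```

For example, `database_download_finished("/fsx/alphafold2/job/log/setup_database.out")` returns `True` once the marker appears near the end of the log.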

- Submit a job from the ParallelCluster HeadNode with the following commands.

## Fetch the FASTA file of your choice (e.g. Q5VSL9)

wget -q -P /fsx/alphafold2/job/input/ https://rest.uniprot.org/uniprotkb/Q5VSL9.fasta

## Start the job using CLI

python3 /fsx/alphafold2/scripts/job_create.py Q5VSL9.fasta

For ColabFold:

wget -q -P /fsx/colabfold/job/input/ https://rest.uniprot.org/uniprotkb/Q5VSL9.fasta

python3 /fsx/colabfold/scripts/job_create.py Q5VSL9.fasta
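`job_create.py` takes the FASTA file as its input, so it can be worth confirming that a downloaded file is well formed before you submit it. The sketch below is minimal. `read_fasta` and `check_fasta` are hypothetical helpers that are not part of the repository. They check only basic structure: a `>` header followed by alphabetic sequence lines. They do not know which residue codes or how many sequences `job_create.py` accepts.

```python
from typing import List, Optional, Tuple


def read_fasta(text: str) -> List[Tuple[str, str]]:
    """Parse FASTA text into (header, sequence) pairs; sequence lines are joined."""
    records: List[Tuple[str, str]] = []
    header: Optional[str] = None
    chunks: List[str] = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith(">"):
            if header is not None:
                records.append((header, "".join(chunks)))
            header, chunks = line[1:].strip(), []
        elif header is None:
            raise ValueError("sequence data appears before the first '>' header")
        else:
            chunks.append(line)
    if header is not None:
        records.append((header, "".join(chunks)))
    return records


def check_fasta(text: str) -> int:
    """Raise ValueError on obvious structural problems; return the record count."""
    records = read_fasta(text)
    if not records:
        raise ValueError("no FASTA records found")
    for header, sequence in records:
        # "".isalpha() is False, so empty sequences are rejected too.
        if not sequence.isalpha():
            raise ValueError(f"record {header!r} has an empty or non-alphabetic sequence")
    return len(records)
```

For example, `check_fasta(open("/fsx/alphafold2/job/input/Q5VSL9.fasta").read())` returns the number of records, or raises `ValueError` if the file looks malformed.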

- Check the job status with the following command.

squeue

Output:

## While running a job

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

1 queue-cpu setupDat ubuntu CF 0:03 1 queue-cpu-dy-x2iedn16xlarge-1

## Once all the jobs finished

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)
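When you script around job submission (for example, waiting until the database setup job has finished), you can parse the table that `squeue` prints into records. The sketch below is minimal and assumes the default column layout shown above. `parse_squeue` is a hypothetical helper, and it handles values containing spaces only in the last column. In the example, `ST` is the Slurm job state code; `CF` means the job is configuring, that is, waiting for its compute node to start.

```python
from typing import Dict, List


def parse_squeue(output: str) -> List[Dict[str, str]]:
    """Turn default `squeue` output into one {column: value} dict per job.

    Hypothetical helper. Columns are whitespace-separated; the last column
    keeps any remaining text, since NODELIST(REASON) can contain spaces.
    """
    lines = [line for line in output.splitlines() if line.strip()]
    if not lines:
        return []
    columns = lines[0].split()
    return [dict(zip(columns, line.split(None, len(columns) - 1))) for line in lines[1:]]


running = """JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)
1 queue-cpu setupDat ubuntu CF 0:03 1 queue-cpu-dy-x2iedn16xlarge-1"""
finished = "JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)"

jobs = parse_squeue(running)
print(jobs[0]["ST"], jobs[0]["NAME"])  # CF setupDat
print(len(parse_squeue(finished)) == 0)  # True: only the header, so no jobs remain
```

On the HeadNode, the text can come from `subprocess.run(["squeue"], capture_output=True, text=True, check=True).stdout`. An empty result means all jobs have finished.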

- Open the frontend URL that you obtained when you deployed the frontend CDK stack.

- If you forgot the URL, you can find it as the value of `CloudFrontWebDistributionEndpoint` on the Outputs tab of the stack named `FrontendStack` in the AWS CloudFormation console. It looks like `xxxyyyzzz.cloudfront.net`.

- From the frontend, you can submit a job, list recent jobs, cancel a job, and visualize the result of the job.

When you are done trying out this sample, remove the resources to avoid incurring additional costs. Run the following commands from the Cloud9 terminal.

- First, delete your ParallelCluster cluster.

## Delete the dataset files from HeadNode

export AWS_DEFAULT_REGION=us-east-1

pcluster ssh --cluster-name hpccluster -i ~/.ssh/keypair-alphafold2.pem

rm -fr /fsx/alphafold2/database/

logout

## Delete the cluster

export AWS_DEFAULT_REGION=us-east-1

pcluster delete-cluster -n hpccluster

- Delete the CDK stacks.

## Check the name of the CDK stacks (for frontend and backend) and destroy them

cd ~/environment/alphafold-protein-structure-prediction-with-frontend-app/provisioning

npx cdk list

npx cdk destroy FrontendStack

npx cdk destroy GlobalStack

npx cdk destroy Alphafold2ServiceStack

- Lastly, remove the Cloud9 development environment and the Elastic IP attached to Cloud9 from the AWS Management Console.