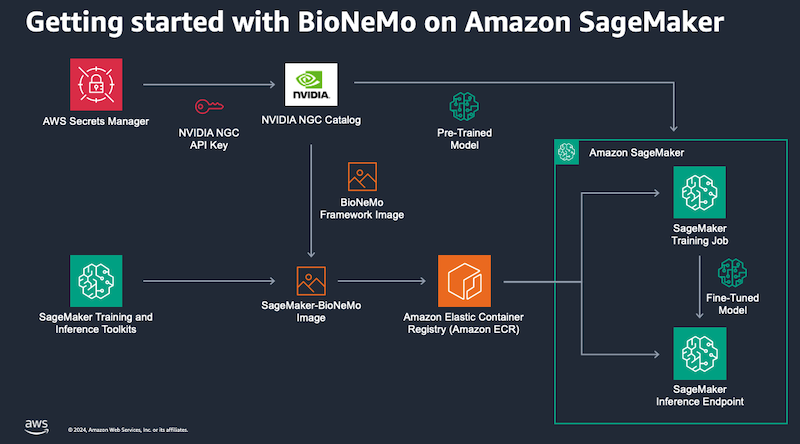

Code examples for running NVIDIA BioNeMo inference and training on Amazon SageMaker.

Proteins are complex biomolecules that carry out most of the essential functions in cells, from metabolism to cellular signaling and structure. A deep understanding of protein structure and function is critical for advancing fields like personalized medicine, biomanufacturing, and synthetic biology.

Recent advances in natural language processing (NLP) have enabled breakthroughs in computational biology through the development of protein language models (pLMs). Similar to how word tokens are the building blocks of sentences in NLP models, amino acids are the building blocks that make up protein sequences. When exposed to millions of protein sequences during training, pLMs develop attention patterns that represent the evolutionary relationships between amino acids. This learned representation of primary sequence can then be fine-tuned to predict protein properties and higher-order structure.

At re:Invent 2023, NVIDIA announced that its BioNeMo generative AI platform for drug discovery will now be available on AWS services including Amazon SageMaker, AWS ParallelCluster, and the upcoming NVIDIA DGX Cloud on AWS. BioNeMo provides pre-trained large language models, data loaders, and optimized training frameworks to help speed up target identification, protein structure prediction, and drug candidate screening in the drug discovery process. Researchers and developers at pharmaceutical and biotech companies that use AWS will be able to leverage BioNeMo and AWS's scalable GPU cloud computing capabilities to rapidly build and train generative AI models on biomolecular data. Several biotech companies and startups are already using BioNeMo for AI-accelerated drug discovery and this announcement will enable them to easily scale up resources as needed.

This repository contains examples of how to use the BioNeMo framework container on Amazon SageMaker.

BioNeMo uses Hydra to manage the training job parameters. These are stored in .yaml files and passed to the training job at runtime. For this reason, you do not need to pass many hyperparameters to your SageMaker Estimator. Sometimes the name of your configuration file is enough. You can find a basic tutorial in the Hydra documentation.
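As a rough illustration of how little needs to be passed, the sketch below assembles keyword arguments for a generic `sagemaker.estimator.Estimator`. The hyperparameter key `config-name`, the instance type, and the helper names are assumptions made for this example and are not taken from the notebooks; check the notebooks for the values the training script actually expects.

```python
from typing import Any, Dict


def build_estimator_kwargs(
    config_name: str,
    image_uri: str,
    role_arn: str,
    instance_type: str = "ml.g5.2xlarge",  # assumed GPU instance type
) -> Dict[str, Any]:
    """Collect keyword arguments for a SageMaker Estimator.

    Only the Hydra configuration name is passed as a hyperparameter;
    everything else is expected to live in the .yaml file.
    """
    return {
        "image_uri": image_uri,
        "role": role_arn,
        "instance_count": 1,
        "instance_type": instance_type,
        # Hypothetical key: the real training script may use another name.
        "hyperparameters": {"config-name": config_name},
    }


def create_estimator(**kwargs: Any):
    """Create the Estimator (requires the sagemaker SDK and AWS credentials)."""
    from sagemaker.estimator import Estimator  # imported lazily on purpose

    return Estimator(**kwargs)
```

Keeping the argument assembly separate from the SDK call makes it possible to inspect or log the job settings before anything is submitted.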

Before you create a BioNeMo training job, follow these steps to generate some NGC API credentials and store them in AWS Secrets Manager.

- Sign in to, or create a new account at, NVIDIA NGC.

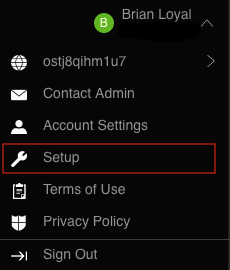

- Select your name in the top-right corner of the screen and then "Setup".

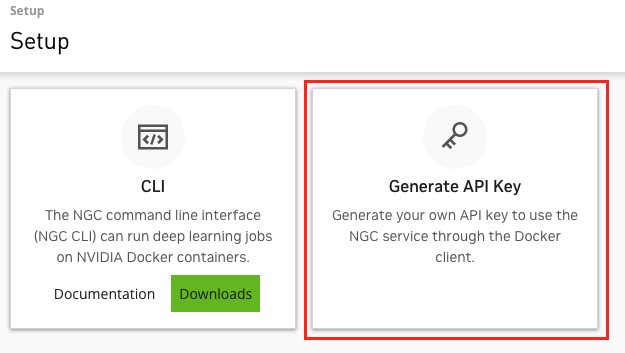

- Select "Generate API Key".

- Select the green "+ Generate API Key" button and confirm.

- Copy the API key. This is the last time you can retrieve it!

- Before you leave the NVIDIA NGC site, also take note of your organization ID listed under your name in the top-right corner of the screen. You'll need this, plus your API key, to download BioNeMo artifacts.

- Navigate to the AWS Console and then to AWS Secrets Manager.

- Select "Store a new secret".

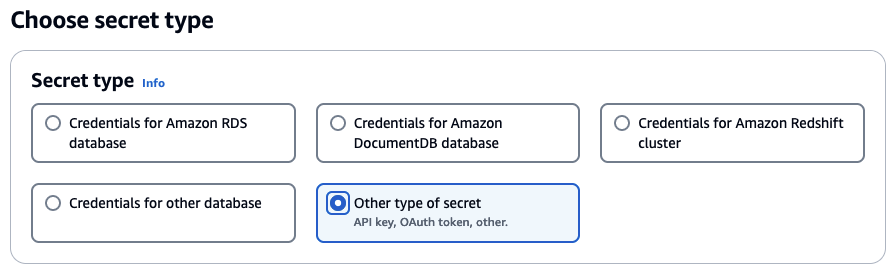

- Under "Secret type", select "Other type of secret".

- Under "Key/value pairs", add a key named "NGC_CLI_API_KEY" with a value of your NGC API key. Add another key named "NGC_CLI_ORG" with a value of your NGC organization ID. Select Next.

- Under "Configure secret - Secret name and description", name your secret "NVIDIA_NGC_CREDS" and select Next. You'll use this secret name when submitting BioNeMo jobs to SageMaker.

- Select the remaining default options to create your secret.
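To confirm the secret was stored as expected, you could read it back with boto3. The following is a minimal sketch, assuming the secret name and key names from the steps above; the function names `parse_ngc_secret` and `load_ngc_credentials` are hypothetical helpers written for this example, not part of BioNeMo or the notebooks.

```python
import json
from typing import Dict, Optional

# Key names chosen in the Secrets Manager steps above.
REQUIRED_KEYS = ("NGC_CLI_API_KEY", "NGC_CLI_ORG")


def parse_ngc_secret(secret_string: str) -> Dict[str, str]:
    """Parse the JSON secret string and check that both NGC keys exist."""
    creds = json.loads(secret_string)
    missing = [key for key in REQUIRED_KEYS if key not in creds]
    if missing:
        raise KeyError(f"Secret is missing keys: {missing}")
    return {key: creds[key] for key in REQUIRED_KEYS}


def load_ngc_credentials(
    secret_name: str = "NVIDIA_NGC_CREDS",
    region_name: Optional[str] = None,
) -> Dict[str, str]:
    """Fetch the secret from AWS Secrets Manager (requires AWS credentials)."""
    import boto3  # imported lazily so the parser can be used without boto3

    client = boto3.client("secretsmanager", region_name=region_name)
    response = client.get_secret_value(SecretId=secret_name)
    # Key/value secrets created in the console are stored as a JSON string.
    return parse_ngc_secret(response["SecretString"])
```

Calling `load_ngc_credentials()` from an environment with permission to read the secret should return a dictionary with both keys. Avoid printing or logging the API key itself.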

The deploy-ESM-embeddings-server.ipynb notebook describes how to deploy the pretrained esm-1nv model as an endpoint for generating sequence embeddings. In this case, all of the required configuration files are already included in the BioNeMo framework. You only need to specify the name of your model and the name of your NGC API secret in AWS Secrets Manager.

The train-ESM.ipynb notebook describes how to pretrain or fine-tune the esm-1nv model using sequence data from the UniProt sequence database. In this case, you will need to create a configuration file and upload it with the training script when creating the job. You should not need to modify the training script. Once training has finished, the NeMo checkpoints will be available in Amazon S3.

Amazon S3 now applies server-side encryption with Amazon S3 managed keys (SSE-S3) as the base level of encryption for every bucket in Amazon S3. However, for particularly sensitive data or models, you may want to apply a different server-side or client-side encryption method, as described in the Amazon S3 documentation.

Additional security best practices, such as disabling access control lists (ACLs) and enabling S3 Block Public Access, can be found in the Amazon S3 documentation.
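The bucket settings mentioned above could be applied with boto3 along the following lines. This is a sketch under stated assumptions rather than a recommended configuration: the helper names are invented for this example, the bucket name and optional KMS key ID are placeholders you would supply, and your organization's policies may require different settings.

```python
from typing import Any, Dict, Optional


def public_access_block_config() -> Dict[str, bool]:
    """Settings that turn on all four S3 Block Public Access options."""
    return {
        "BlockPublicAcls": True,
        "IgnorePublicAcls": True,
        "BlockPublicPolicy": True,
        "RestrictPublicBuckets": True,
    }


def ownership_controls() -> Dict[str, Any]:
    """Bucket-owner-enforced ownership, which disables ACLs."""
    return {"Rules": [{"ObjectOwnership": "BucketOwnerEnforced"}]}


def default_encryption(kms_key_id: Optional[str] = None) -> Dict[str, Any]:
    """Default encryption: SSE-S3, or SSE-KMS when a key ID is given."""
    if kms_key_id is None:
        rule = {"SSEAlgorithm": "AES256"}
    else:
        rule = {"SSEAlgorithm": "aws:kms", "KMSMasterKeyID": kms_key_id}
    return {"Rules": [{"ApplyServerSideEncryptionByDefault": rule}]}


def harden_bucket(bucket: str, kms_key_id: Optional[str] = None) -> None:
    """Apply the settings to a bucket (requires AWS credentials)."""
    import boto3  # imported lazily so the helpers work without boto3

    s3 = boto3.client("s3")
    s3.put_public_access_block(
        Bucket=bucket,
        PublicAccessBlockConfiguration=public_access_block_config(),
    )
    s3.put_bucket_ownership_controls(
        Bucket=bucket, OwnershipControls=ownership_controls()
    )
    s3.put_bucket_encryption(
        Bucket=bucket,
        ServerSideEncryptionConfiguration=default_encryption(kms_key_id),
    )
```

Passing a KMS key ID switches the bucket default from SSE-S3 to SSE-KMS, which is one of the alternative server-side methods the Amazon S3 documentation describes.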

See CONTRIBUTING for more information.

This library is licensed under the MIT-0 License. See the LICENSE file.