The Agentic Documents Assistant is an LLM assistant that lets users get answers to complex questions about their business documents through natural conversation. It answers factual questions by retrieving information directly from the documents with semantic search, following the popular retrieval-augmented generation (RAG) design pattern. It also answers analytical questions, such as "Which contracts will expire in the next 3 months?", by translating them into SQL queries and running those queries against a database of entities that a batch process extracts from the documents. Finally, it answers complex multi-step questions by combining retrieval, analytical, and other tools and data sources through an LLM agent design pattern.
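To make the RAG flow concrete, here is a minimal, framework-free sketch of the retrieve-then-augment step. It illustrates the pattern under stated assumptions and is not the solution's code: the toy corpus `DOCS` and the helpers `embed`, `cosine`, `retrieve`, and `build_prompt` are hypothetical, and the bag-of-words `embed` is a lexical stand-in for a real embedding model, so it matches shared words rather than meaning.

```python
import math
import re
from collections import Counter

# Hypothetical document chunks standing in for the indexed business documents.
DOCS = [
    "Amazon reported net sales growth in the fourth quarter.",
    "The supplier contract with Acme expires on 2024-03-31.",
    "Operating income increased due to AWS segment performance.",
]

def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a placeholder for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0

def retrieve(question: str, docs: list[str], k: int = 2) -> list[str]:
    """Rank chunks by similarity to the question and keep the top k."""
    q = embed(question)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]

def build_prompt(question: str, context: list[str]) -> str:
    """Augment the question with the retrieved context before calling the LLM."""
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{joined}\n\n"
        f"Question: {question}"
    )
```

For example, `retrieve("When does the Acme contract expire?", DOCS, k=1)` returns the Acme contract chunk, which `build_prompt` then passes to the LLM as context. Replacing `embed` with a real embedding model and the sorted list with a vector index keeps the same overall shape.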

To learn more about the design and architecture of this solution, check the accompanying AWS ML blog post: Boosting RAG-based intelligent document assistants using entity extraction, SQL querying, and agents with Amazon Bedrock.

The solution combines:

- Semantic search to augment response generation with relevant documents.
- Structured metadata and entity extraction, plus SQL queries, for analytical reasoning.
- An agent built with the Reason and Act (ReAct) instruction format that determines whether to use search or SQL to answer a given question.
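The ReAct format asks the LLM to interleave `Thought:`, `Action:`, and `Action Input:` lines; the agent runs the named tool, appends the result as an `Observation:`, and repeats until the model emits a `Final Answer:`. The sketch below illustrates that loop without any framework. It is not the solution's agent: the tool names `search` and `sql`, the stub tools, the regular expressions, and the `llm` callable are all hypothetical.

```python
import re
from typing import Callable

# Hypothetical stub tools; in the solution these roles belong to semantic search and text-to-SQL.
def search_tool(query: str) -> str:
    return f"[search results for: {query}]"

def sql_tool(query: str) -> str:
    return f"[SQL rows for: {query}]"

TOOLS: dict[str, Callable[[str], str]] = {"search": search_tool, "sql": sql_tool}

# Assumed output format: "Action: <tool>" on one line, "Action Input: <text>" on the next.
ACTION_RE = re.compile(r"Action:\s*(?P<tool>\w+)\s*\nAction Input:\s*(?P<input>.+)")
FINAL_RE = re.compile(r"Final Answer:\s*(?P<answer>.+)", re.DOTALL)

def step(llm_output: str) -> tuple[str, str]:
    """Parse one LLM turn: ("final", answer) or ("observation", tool result)."""
    final = FINAL_RE.search(llm_output)
    if final:
        return "final", final.group("answer").strip()
    action = ACTION_RE.search(llm_output)
    if action is None:
        raise ValueError("No Action or Final Answer in LLM output")
    tool = action.group("tool").lower()
    if tool not in TOOLS:
        raise ValueError(f"Unknown tool: {tool}")
    return "observation", TOOLS[tool](action.group("input").strip())

def run_agent(llm: Callable[[str], str], question: str, max_steps: int = 5) -> str:
    """Reason-act-observe loop: feed observations back until a final answer."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        output = llm(transcript)
        transcript += output + "\n"
        kind, value = step(output)
        if kind == "final":
            return value
        transcript += f"Observation: {value}\n"
    raise RuntimeError("Agent did not reach a final answer within max_steps")
```

Because `llm` is just a callable from transcript text to completion text, a scripted fake that returns an `Action: sql` turn followed by a `Final Answer:` turn is enough to exercise the loop; a real deployment would call an LLM such as Claude on Amazon Bedrock instead. The `max_steps` bound keeps a confused model from looping forever.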

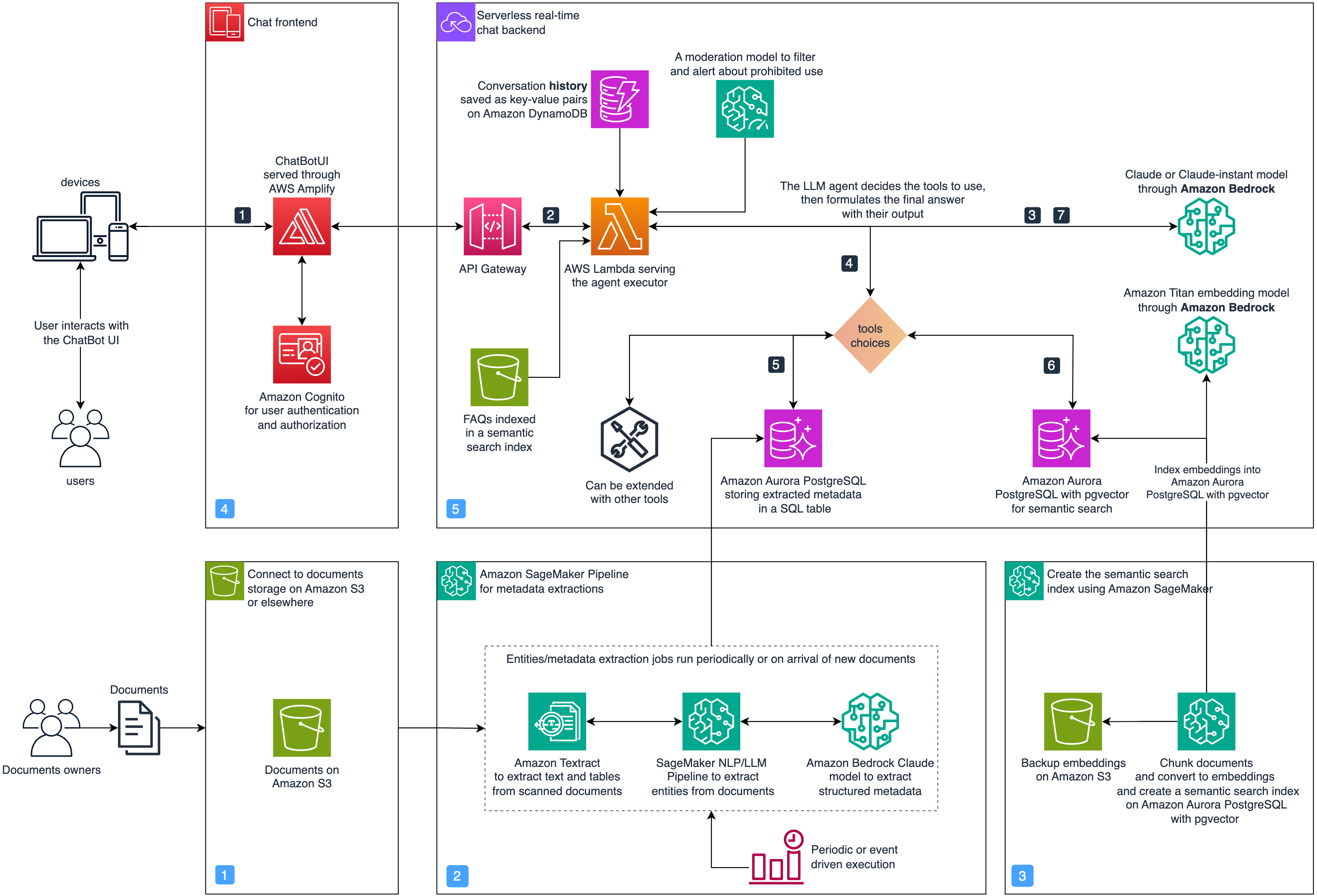

The following architecture diagram depicts the design of the solution.

Below is an outline of the main folders included in this asset:

| Folder | Description |
|---|---|
| `backend` | A TypeScript CDK project implementing infrastructure as code (IaC) to set up the backend infrastructure. |
| `frontend` | A TypeScript CDK project to set up infrastructure for deploying and hosting the frontend app with AWS Amplify. |
| `frontend/chat-app` | A Next.js app with Amazon Cognito authentication and secured backend connectivity. |
| `data-pipelines` | Notebooks implementing SageMaker jobs and pipelines to process the data in batch. |
| `experiments` | Notebooks and code showcasing different modules of the solution as standalone experiments for research and development. |

Follow the instructions below to set up the solution in your AWS account. You need the following prerequisites:

- An AWS account.
- Model access to the Anthropic Claude and Amazon Titan models, configured in one of the AWS Regions where Amazon Bedrock is available.
- An environment from which to install the AWS Cloud Development Kit (CDK) stacks:
  - We recommend an AWS Cloud9 environment if you intend to make changes, or AWS CloudShell if you only want to install the solution.
  - Alternatively, you can run the installation from your local environment after setting up the CDK command-line interface (CLI) as described in the AWS CDK documentation.

To install the solution in your AWS account:

- Clone this repository.
- Install the backend CDK app:
  - Go inside the `backend` folder.
  - Run `npm install` to install the dependencies.
  - If you have never used CDK in the current account and Region, bootstrap it with `npx cdk bootstrap`.
  - Run `npx cdk deploy` to deploy the stack.
  - Take note of the SageMaker IAM policy ARN in the CDK stack output.
- Deploy the Next.js frontend on AWS Amplify:
  - Go inside the `frontend` folder.
  - Run `npm install` to install the dependencies.
  - Run `npx cdk deploy` to deploy a stack that builds an Amplify CI/CD pipeline.
  - Once the CI/CD pipeline is ready, go to the Amplify console and trigger a build.
  - Once the app is built, click the hosting link to view the app. You can now create a new account and log in to view the assistant.
- To load the underlying data, run the SageMaker pipeline in `data-pipelines/04-sagemaker-pipeline-for-documents-processing.ipynb`. It loads two files: (1) a pre-created CSV file that populates the SQL tables, and (2) a JSON file of preprocessed Amazon financial documents that is used to build the semantic search index queried by the LLM assistant.
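As a rough illustration of the analytical path, the sketch below loads a small CSV of extracted entities into a SQL table and runs the kind of query a text-to-SQL step could generate for "Which contracts will expire in the next 3 months?". SQLite stands in for the solution's actual database, and the CSV columns, the `contracts` table, and the helpers `load_contracts` and `expiring_contracts` are hypothetical; the real pre-created CSV may use a different schema.

```python
import csv
import io
import sqlite3
from datetime import date

# Hypothetical sample of extracted entities; the real CSV's columns may differ.
CSV_TEXT = """contract_id,counterparty,expiry_date
C-001,Acme Corp,2024-02-15
C-002,Globex,2024-09-30
C-003,Initech,2024-04-01
"""

def load_contracts(conn: sqlite3.Connection, csv_text: str) -> int:
    """Create a hypothetical `contracts` table and bulk-insert the CSV rows."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS contracts ("
        "contract_id TEXT PRIMARY KEY, counterparty TEXT, expiry_date TEXT)"
    )
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    # Named placeholders map each CSV row (a dict) onto the table columns.
    conn.executemany(
        "INSERT INTO contracts VALUES (:contract_id, :counterparty, :expiry_date)",
        rows,
    )
    conn.commit()
    return len(rows)

# SQL a text-to-SQL step might generate for
# "Which contracts will expire in the next 3 months?" (ISO dates compare as text).
EXPIRING_SQL = """
SELECT contract_id, counterparty, expiry_date
FROM contracts
WHERE expiry_date BETWEEN :today AND date(:today, '+3 months')
ORDER BY expiry_date
"""

def expiring_contracts(conn: sqlite3.Connection, today: date) -> list[tuple]:
    """Return contracts expiring between `today` and three months later."""
    return conn.execute(EXPIRING_SQL, {"today": today.isoformat()}).fetchall()
```

With `today=date(2024, 1, 10)` and the sample rows, the query returns `C-001` and `C-003`, whose expiry dates fall between 2024-01-10 and 2024-04-10. Passing `today` as a parameter, rather than calling SQLite's `date('now')`, keeps the result reproducible.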

After these steps complete successfully, you can start interacting with the assistant and asking questions.

If you want to update the underlying documents and extract new entities, explore notebooks 1 to 5 in the `experiments` folder.

To remove the resources of the solution:

- Remove the stack inside the `backend` folder by running `npx cdk destroy`.
- Remove the stack inside the `frontend` folder by running `npx cdk destroy`.

The authors of this asset are:

- Mohamed Ali Jamaoui: Solution designer/Core maintainer.

- Giuseppe Hannen: Extensive contribution to the data extraction modules.

- Laurens ten Cate: Contributed to extending the agent with the SQL tool and to early Streamlit UI deployments.

See CONTRIBUTING for more information.

- Best practices for working with AWS Lambda functions.

- LangChain custom LLM agents

- LLM Powered Autonomous Agents

- Improve the overall inference speed.

This library is licensed under the MIT-0 License. See the LICENSE file.