- Install a cluster with kops
- Modify the IAM policy on the EC2 instances (`cni.policy.yaml`, included in this repo)
- Ensure all traffic is allowed between the master and node security groups and within each security group
- Pass `--node-ip <ip-address>` to kubelet on all master and node instances, where `<ip-address>` is the primary IP address on eth0 of the EC2 instance
- Apply the `aws-cni.yaml` file to your cluster
- Apply the `calico.yaml` file to your cluster
- Apply and test network policy enforcement

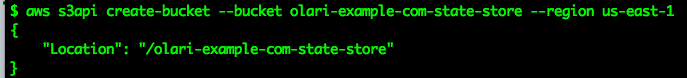

Add a custom prefix to the bucket name below:

aws s3api create-bucket --bucket prefix-example-com-state-store --region us-east-1

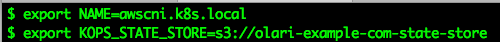

Make sure the state store below uses the same prefix and bucket name you defined above:

export NAME=awscni.k8s.local

export KOPS_STATE_STORE=s3://prefix-example-com-state-store
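Because the bucket name and the state store URL have to match, one option is to derive both from a single variable. The sketch below assumes a hypothetical `PREFIX` variable (not part of the original walkthrough); it only sets and prints the values and does not call AWS.

```shell
# Hypothetical helper variable: replace with your own prefix.
PREFIX="prefix"

# Bucket name in the form used by the create-bucket command above.
BUCKET="${PREFIX}-example-com-state-store"

export NAME=awscni.k8s.local
export KOPS_STATE_STORE="s3://${BUCKET}"

echo "cluster name: ${NAME}"
echo "state store:  ${KOPS_STATE_STORE}"
```

With these set, the bucket could be created with `aws s3api create-bucket --bucket "$BUCKET" --region us-east-1`.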

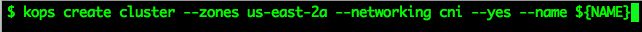

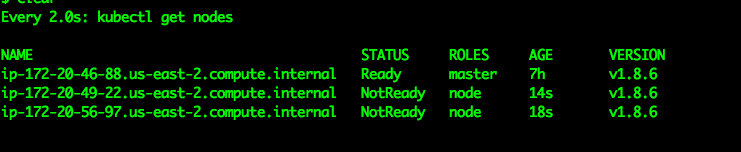

In a few minutes a single-master/dual-node cluster will be deployed into your account. kops will automatically configure the Kubernetes contexts in your config file. From here let’s make sure we can access the node from kubectl:

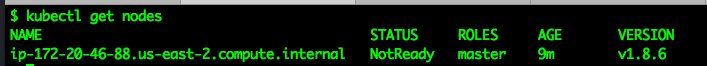

kubectl get nodes

For now this displays only the master node, in a NotReady status. We need to perform a few tasks to get the CNI up and running properly.

First we need to modify the IAM policy attached to the masters and nodes to allow them to allocate IP addresses and ENIs from the VPC network.

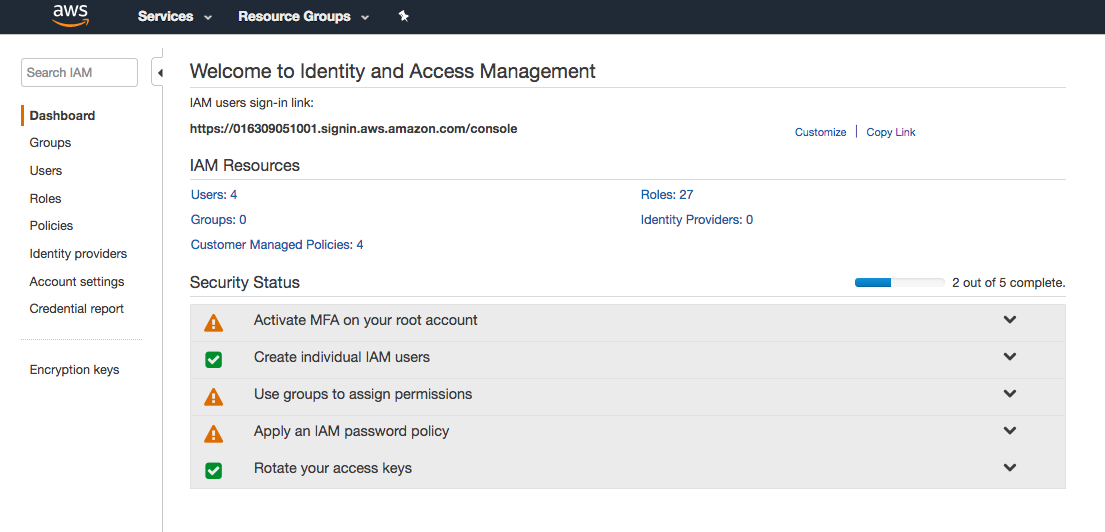

From the AWS console navigate to the IAM page:

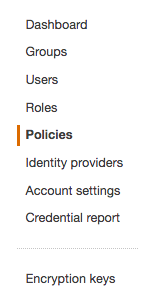

Click on "Policies" on the left.

Click on the "Create Policy" button and then click on the "JSON" tab:

From here copy and paste the contents from the file "cni-policy.json" (included in the root of this repository) and then click "Review Policy".

The next screen lets you review the permissions and give the policy a name. For the purpose of this walkthrough, let’s name the policy "aws-cni".

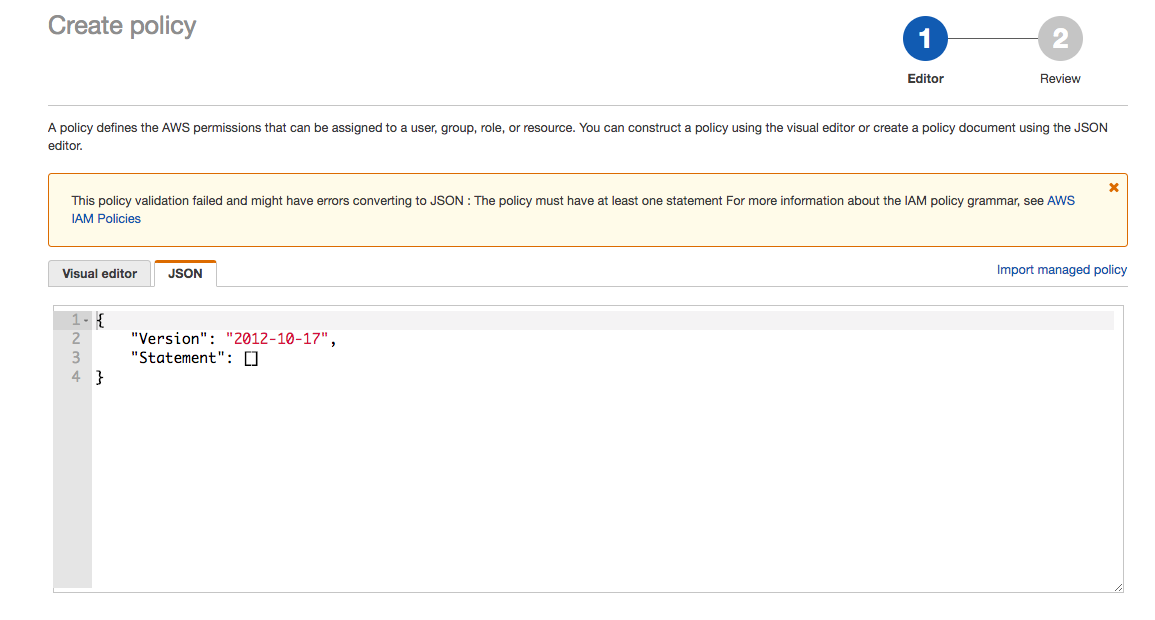

From the IAM console select the roles menu from the left and then search for "awscni" in the search box.

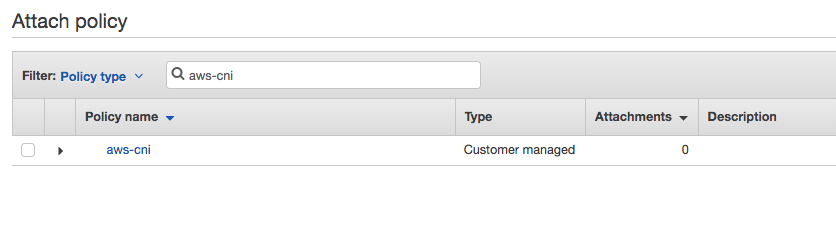

Next, select the "masters.awscni.k8s.local" role and attach the customer managed policy created in the previous step: click the "attach policy" button, search for the "aws-cni" policy, select it, and click the "attach policy" button in the lower right-hand corner.

Repeat for the "nodes.awscni.k8s.local" role.
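The same console steps can probably be done from the AWS CLI. The following is a minimal sketch, not part of the original walkthrough: it assumes `cni-policy.json` is in the current directory and that `ACCOUNT_ID` (a placeholder here) holds your AWS account ID. The `run` helper is hypothetical; it prints each command by default, and only executes it when `DRY_RUN=0` is set.

```shell
# Placeholder account ID (an assumption for this sketch); replace with your own.
ACCOUNT_ID="${ACCOUNT_ID:-123456789012}"
POLICY_ARN="arn:aws:iam::${ACCOUNT_ID}:policy/aws-cni"

# Print each command unless DRY_RUN=0 is set, in which case run it.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  else
    echo "+ $*"
  fi
}

# Create the customer managed policy from the JSON document.
run aws iam create-policy \
  --policy-name aws-cni \
  --policy-document file://cni-policy.json

# Attach it to both roles created by kops.
for role in masters.awscni.k8s.local nodes.awscni.k8s.local; do
  run aws iam attach-role-policy --role-name "$role" --policy-arn "$POLICY_ARN"
done
```

Reviewing the printed commands before setting `DRY_RUN=0` is a cheap way to confirm the role names and the policy ARN match your account.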

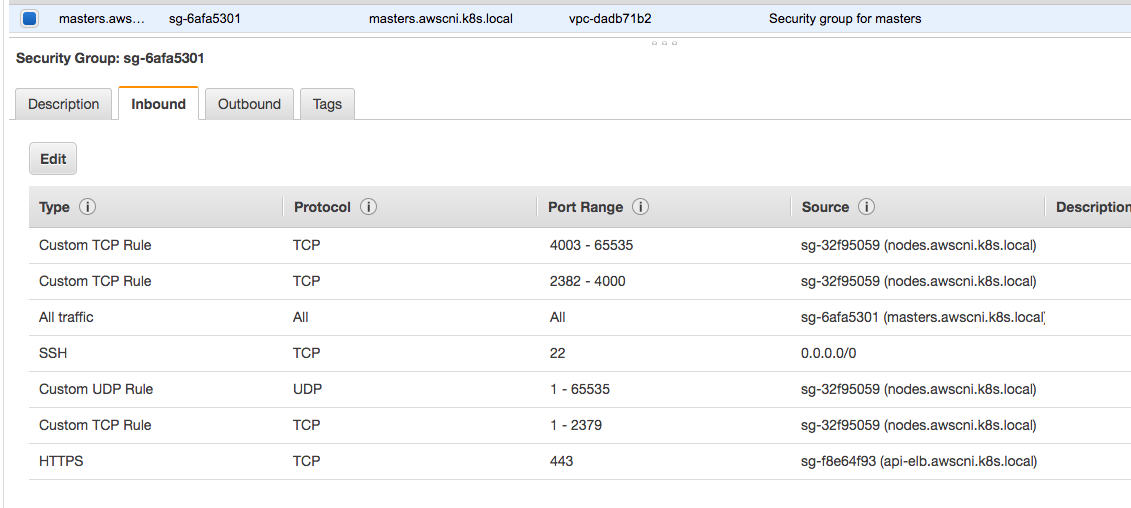

Next we need to modify the security groups to allow all traffic from/to and within the master and node security groups.

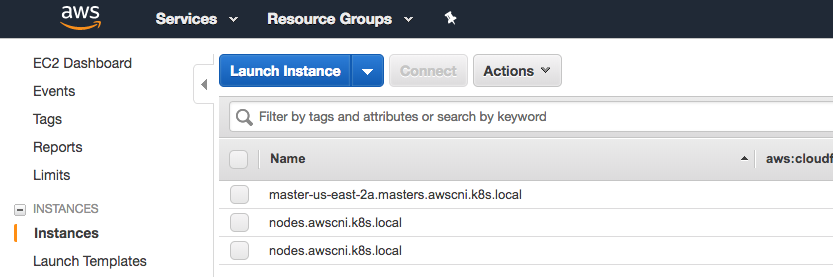

First, go to the AWS EC2 console and click "Instances" on the left side:

Select the master instance and click on the security group (from the description tab).

Create a new inbound rule to allow all traffic from the nodes group.

All of the other rules should already exist. In the end, verify that all traffic flows freely between the master and node security groups, and that all traffic is allowed within each group (for example, from the master security group to the master security group).
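For reference, the rules described above could also be expressed with the AWS CLI. This is a sketch under assumptions: the two security group IDs are placeholders, and `run` and `allow_all` are hypothetical helpers that print the commands unless `DRY_RUN=0` is set.

```shell
# Placeholder security group IDs (hypothetical); look up the real ones in the EC2 console.
MASTER_SG="${MASTER_SG:-sg-0123456789abcdef0}"
NODE_SG="${NODE_SG:-sg-0fedcba9876543210}"

# Print each command unless DRY_RUN=0 is set, in which case run it.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  else
    echo "+ $*"
  fi
}

# Allow all protocols (IpProtocol=-1) into group $1 from group $2.
allow_all() {
  run aws ec2 authorize-security-group-ingress \
    --group-id "$1" \
    --ip-permissions "IpProtocol=-1,UserIdGroupPairs=[{GroupId=$2}]"
}

allow_all "$MASTER_SG" "$NODE_SG"    # nodes   -> masters
allow_all "$NODE_SG"   "$MASTER_SG"  # masters -> nodes
allow_all "$MASTER_SG" "$MASTER_SG"  # within the master group
allow_all "$NODE_SG"   "$NODE_SG"    # within the node group
```

When run for real, rules that already exist are expected to be rejected as duplicates, so errors of that kind can be ignored.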

SSH into each instance and retrieve its primary IP address:

curl http://169.254.169.254/latest/meta-data/local-ipv4

Add the --node-ip flag, set to the value retrieved above, to the kubelet config in /etc/sysconfig/kubelet, then restart kubelet:

sudo systemctl restart kubelet
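Editing the file by hand on every instance is error-prone, so the edit can be scripted. The sketch below assumes the kubelet flags live in a `DAEMON_ARGS="..."` line of the config file, which may not match your image; `add_node_ip` is a hypothetical helper, and the demonstration runs against a throwaway file with made-up contents rather than the real config.

```shell
# Assumption: kubelet flags are stored in a DAEMON_ARGS="..." line of the file.
add_node_ip() {
  file="$1"
  ip="$2"
  # Leave the file alone if the flag is already set.
  if grep -q -e '--node-ip' "$file"; then
    return 0
  fi
  # Insert the flag right after the opening quote; keep a .bak copy.
  sed -i.bak "s|^DAEMON_ARGS=\"|DAEMON_ARGS=\"--node-ip=${ip} |" "$file"
}

# Demonstration on a scratch file with made-up contents.
demo="$(mktemp)"
echo 'DAEMON_ARGS="--v=2 --cloud-provider=aws"' > "$demo"
add_node_ip "$demo" 10.0.1.23
cat "$demo"
# prints: DAEMON_ARGS="--node-ip=10.0.1.23 --v=2 --cloud-provider=aws"
```

On an instance, the function would be run as root against /etc/sysconfig/kubelet with the address returned by the metadata endpoint, followed by the kubelet restart.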

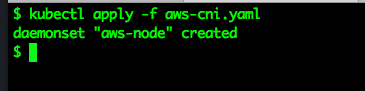

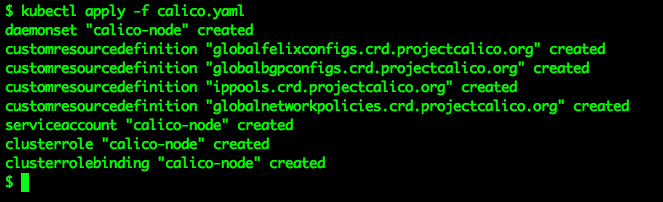

Apply the manifest file, which is included in the root of this repository:

kubectl apply -f aws-cni.yaml

Verify the nodes are in a Ready state:

watch kubectl get nodes

Eventually the display will change and all of the nodes will show a status of "Ready". If they do not, stop here and go back over the previous steps, because the next steps will not work.
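If you prefer a scriptable check over watching the output, the STATUS column can be filtered. This is a small sketch: `not_ready_nodes` is a hypothetical helper, and the sample input is made up for illustration.

```shell
# Print the names of nodes whose STATUS column is not exactly "Ready".
# Expects the output of `kubectl get nodes --no-headers` on stdin.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Made-up sample input; on a cluster use:
#   kubectl get nodes --no-headers | not_ready_nodes
printf '%s\n' \
  'ip-172-20-40-1.ec2.internal   Ready      master   5m   v1.10.3' \
  'ip-172-20-50-2.ec2.internal   NotReady   node     4m   v1.10.3' \
  | not_ready_nodes
# prints: ip-172-20-50-2.ec2.internal
```

An empty result means every node reports Ready.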

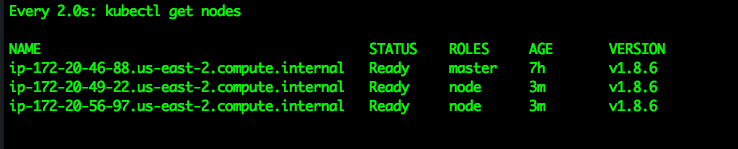

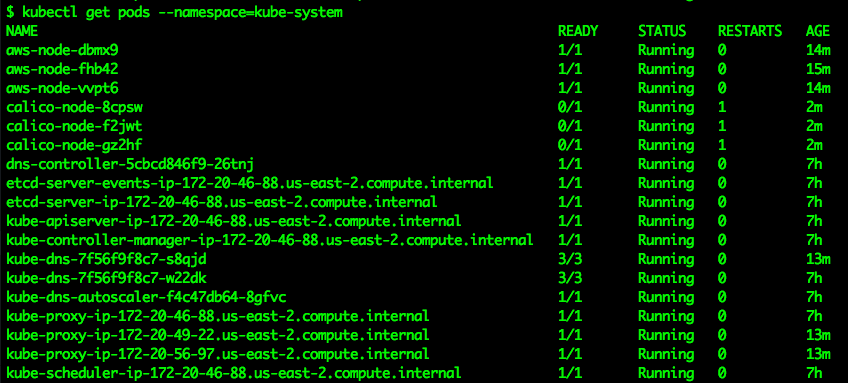

Next, verify that all of the pods in the kube-system namespace are running successfully. This validates that the CNI is functioning properly:

kubectl get pods --namespace=kube-system

Again, if there are any problems here, go back and verify all of the previous steps.

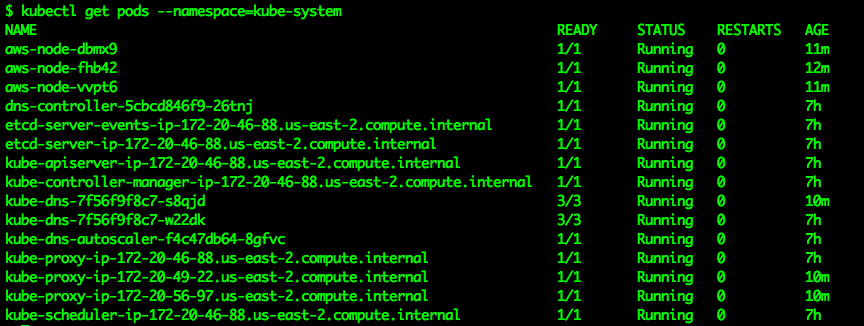

kubectl apply -f calico.yaml

Again, verify that all of the pods in the kube-system namespace are running. You will have three more pods than you did the first time we performed this step:

kubectl get pods --namespace=kube-system

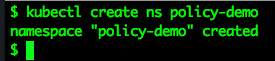

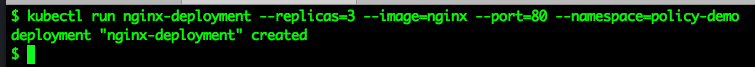

kubectl run nginx-deployment --replicas=3 --image=nginx --port=80 --namespace=policy-demo

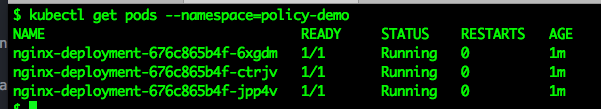

Verify the pods are in running state:

kubectl get pods --namespace=policy-demo

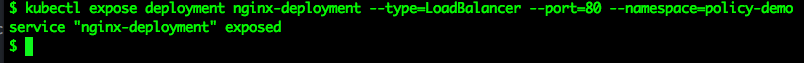

Expose the deployment:

kubectl expose deployment nginx-deployment --type=LoadBalancer --port=80 --namespace=policy-demo

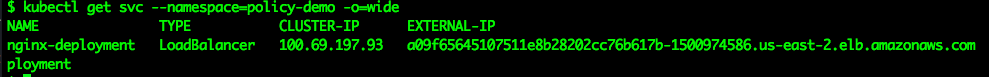

Retrieve the service endpoint:

kubectl get svc --namespace=policy-demo -o=wide

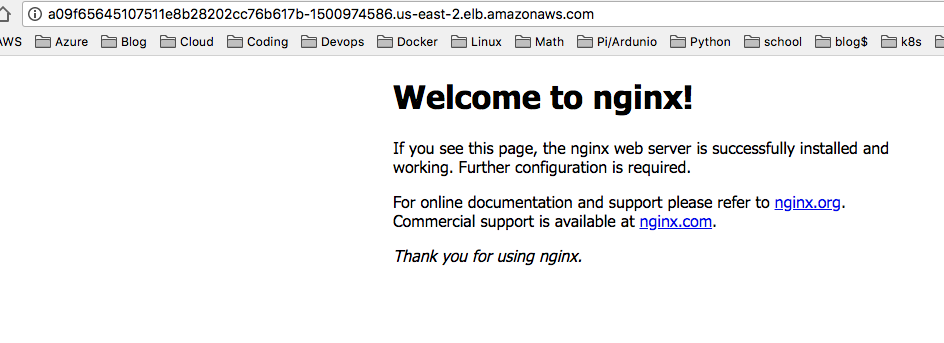

Browse to the external IP and you should see the Nginx home page.

Apply the default deny-all network policy. This file is available in the root of this repository:

kubectl apply -f deny-all-policy.yaml --namespace=policy-demo
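The contents of deny-all-policy.yaml are not shown here. For orientation, a default-deny policy for ingress commonly looks like the manifest written by the sketch below. The file name `deny-all-sketch.yaml` and the policy name `default-deny` are illustrative assumptions, and the file in the repository may differ.

```shell
# Write an illustrative default-deny ingress policy to a scratch file.
cat > deny-all-sketch.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny
spec:
  podSelector: {}     # empty selector: applies to every pod in the namespace
  policyTypes:
  - Ingress           # no ingress rules listed, so all ingress is denied
EOF

cat deny-all-sketch.yaml
```

Because the policy is namespaced, it only affects pods in the namespace it is applied to, which is why the command above passes --namespace=policy-demo.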

Browse to the external IP again; the request should now be blocked. Note that your browser may reuse an existing connection, so try another browser or curl the endpoint.
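A curl check with a timeout avoids the connection reuse problem. The helper below is a hypothetical sketch: it treats any failure, including a timeout or an HTTP error status, as "blocked or unreachable", so it cannot tell a policy block apart from other network problems.

```shell
# Report whether an HTTP endpoint answers successfully within a few seconds.
# $1 is the host name or IP from the EXTERNAL-IP column of the service.
check_endpoint() {
  if curl --silent --fail --output /dev/null --max-time 5 "http://$1/"; then
    echo "reachable"
  else
    echo "blocked or unreachable"
  fi
}

# Example call (the endpoint below is a placeholder):
#   check_endpoint abc123.us-east-1.elb.amazonaws.com
```

Running it once before and once after applying the policy should show the result change from "reachable" to "blocked or unreachable".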

This demonstration has shown how you can use the AWS Kubernetes CNI in your own cluster and how to use Calico to enforce your Kubernetes network policy objects.

To remove all resources created by this example do the following:

- Delete the policy-demo namespace (this also deletes all of the resources in the namespace):

  kubectl delete ns policy-demo

- Remove the customer managed policy from the node and master IAM roles

- Delete the cluster with kops:

  kops delete cluster awscni.k8s.local --yes