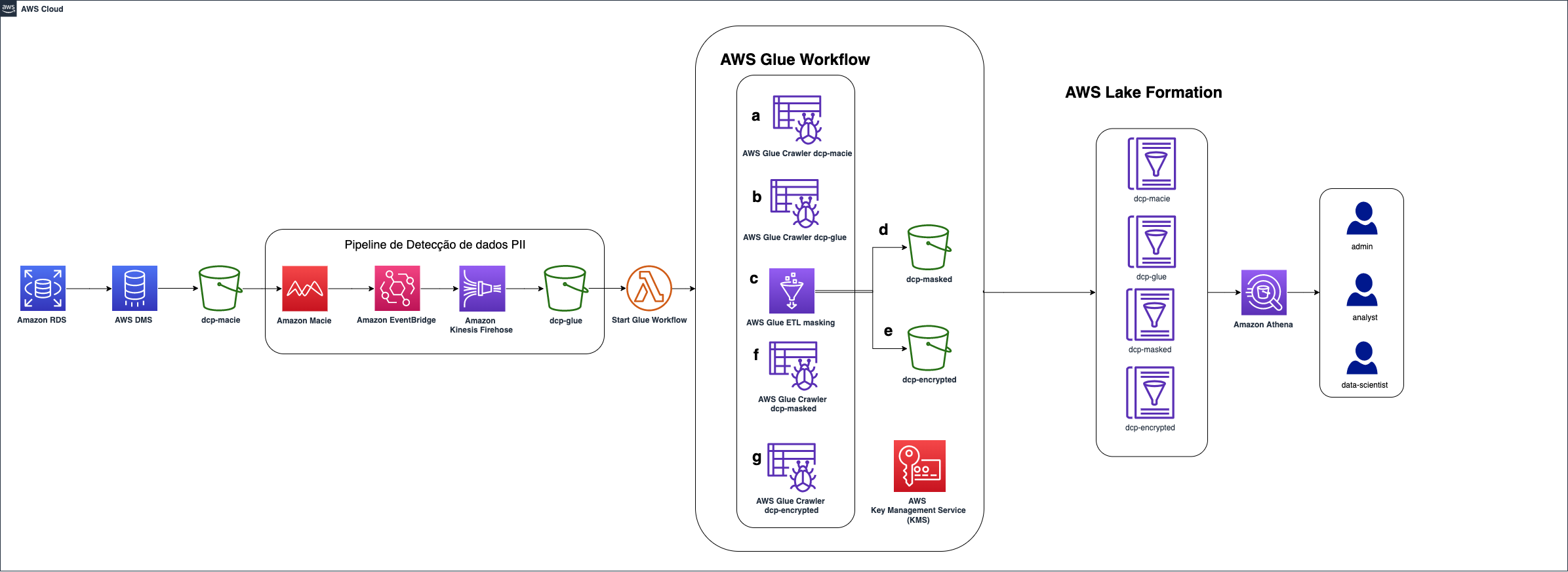

This project illustrates how to build an architecture that anonymizes data and grants granular access to it according to well-defined rules.

This repository provides an AWS CloudFormation template that deploys a sample solution. The solution uses Amazon Macie to automatically detect Personally Identifiable Information (PII), masks the detected PII with an AWS Glue job, and combines a Glue workflow with Amazon EventBridge and Amazon Kinesis Data Firehose to form an event-driven PII processing pipeline. Data access permissions are configured through AWS Lake Formation.

For the scenario where a user cannot view the sensitive data itself but can use it to train machine learning models, we use AWS Key Management Service (AWS KMS). It stores the encryption keys used to protect the data, and only the training algorithms are given access to those keys. Users see the masked data, while the algorithms can read the data in its original form and use it to build machine learning models.
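As a rough illustration of the reversible path described above, the sketch below encrypts a single field value with AWS KMS and decrypts it again. The helper names `encrypt_field` and `decrypt_field` are hypothetical and not taken from this repository; `kms_client` is assumed to be a `boto3.client("kms")`, and only its `encrypt` and `decrypt` calls are used. Base64 encoding is an assumption made here so the ciphertext fits in a text column.

```python
import base64


def encrypt_field(kms_client, key_id: str, value: str) -> str:
    """Encrypt one field value with KMS and return it as base64 text."""
    response = kms_client.encrypt(KeyId=key_id, Plaintext=value.encode("utf-8"))
    return base64.b64encode(response["CiphertextBlob"]).decode("ascii")


def decrypt_field(kms_client, token: str) -> str:
    """Reverse encrypt_field; works only for callers allowed to decrypt with the key."""
    response = kms_client.decrypt(CiphertextBlob=base64.b64decode(token))
    return response["Plaintext"].decode("utf-8")
```

With a real client, a call might look like `encrypt_field(boto3.client("kms"), "alias/my-example-key", "12345678")`, where the alias is a placeholder. A principal without decrypt permission on the key would see only the opaque token, which matches the split between users and training algorithms described above.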

- Amazon Athena

- AWS CloudFormation

- AWS DMS

- Amazon Elastic Compute Cloud (Amazon EC2)

- AWS Glue

- AWS IAM

- AWS Key Management Service

- Amazon Kinesis Firehose

- AWS Lake Formation

- AWS Lambda

- Amazon Macie

- Amazon RDS

- Amazon S3

- AWS Secrets Manager

- Amazon Virtual Private Cloud (Amazon VPC)

- Access to the AWS services listed above within your AWS account.

- Check whether your account already has an AWS Lake Formation configuration. If it does, you may run into permission issues. We suggest testing this solution in a development account where Lake Formation is not yet active. If that is not possible, see the AWS Lake Formation documentation for details on the permissions your role requires.

- AWS DMS needs permission to create the resources it requires, such as the EC2 instance where the DMS tasks run. If you have worked with DMS before, this permission should already exist. Otherwise, AWS CloudFormation can create it. To check whether it exists, open the AWS IAM console, click Roles, and look for a role named `dms-vpc-role`. If it is not there, choose to create the role during deployment.

- We use the Faker library to create synthetic data consisting of the following tables:

  - Customer

  - Bank

  - Card
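The `dms-vpc-role` check from the prerequisites can also be scripted instead of done in the console. The following is a minimal sketch, assuming `iam_client` is a `boto3.client("iam")`; the function name `dms_vpc_role_exists` is hypothetical and not part of this repository.

```python
def dms_vpc_role_exists(iam_client, role_name: str = "dms-vpc-role") -> bool:
    """Return True if the IAM role exists, False if IAM reports it as missing."""
    try:
        iam_client.get_role(RoleName=role_name)
        return True
    except iam_client.exceptions.NoSuchEntityException:
        # IAM raises this error when no role with that name exists.
        return False
```

If the function returns `False`, choose to create the role during deployment.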

Please refer to this blog post for the detailed instructions on how to use the solution.

- The data source is a database, Amazon RDS in our case. It could also be a database on an Amazon EC2 instance, in an on-premises datacenter, or even on another public cloud;

- AWS Database Migration Service (DMS) performs a full load of this database and writes the data, still untreated, to the "dcp-macie" bucket;

- Next, a Personally Identifiable Information (PII) detection pipeline starts. Amazon Macie analyzes the files and identifies the fields it considers sensitive. You can customize which fields count as sensitive, and in this case we do so to identify the bank account number;

- The fields identified by Macie are sent to EventBridge, which calls Kinesis Data Firehose to store them in the "dcp-glue" bucket. Glue uses this data to determine which fields to mask or encrypt with a key stored in AWS KMS;

- At the end of this step, an S3 event triggers a Lambda function that starts the Glue workflow, which masks and encrypts the identified data:

  - Glue starts a crawler on bucket "dcp-macie" (1) and on bucket "dcp-glue" (2) to populate two tables, respectively;

  - Next, a Python script runs (3) and queries the data from these tables. It uses this information to mask and encrypt the data, then stores the results in the "dcp-masked" (4) and "dcp-encrypted" (5) prefixes within the "dcp-athena" bucket;

  - The last step of this flow runs a crawler for each of these prefixes (6 and 7), creating their respective tables in the Glue catalog.

- To allow fine-grained access to the data, AWS Lake Formation maps permissions to defined tags. We'll see how to implement this part in stages later on;

- To query the data, we use Amazon Athena. Other tools such as Amazon Redshift or Amazon QuickSight could be used, as well as third-party tools.
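To make the masking step in the flow above more concrete, here is a minimal plain-Python sketch. It is not the Glue script from this repository: the function names are hypothetical, the use of a SHA-256 digest as the mask is an assumption, and `sensitive_fields` stands in for the field names that the pipeline derives from the Macie findings.

```python
import hashlib


def mask_value(value: str, salt: str = "") -> str:
    """One-way mask: replace the value with a SHA-256 hex digest (assumed method)."""
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()


def mask_records(records, sensitive_fields, salt=""):
    """Return copies of the records with every sensitive, non-null field masked."""
    masked = []
    for record in records:
        masked.append({
            name: (
                mask_value(str(value), salt)
                if name in sensitive_fields and value is not None
                else value
            )
            for name, value in record.items()
        })
    return masked


# Example: only the field flagged as sensitive is replaced.
rows = [{"name": "Ana", "bank_account": "0001-2345"}]
masked_rows = mask_records(rows, {"bank_account"})
```

Because the digest is deterministic, equal inputs produce equal masks, so masked columns can still be joined or grouped on. Unlike the encrypted copy, a hashed value cannot be turned back into the original.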

- Sign in to your AWS account

- Download the CloudFormation template file here.

- Then upload the file on the CloudFormation create stack page to deploy the solution.

- Give the CloudFormation stack a name or keep the default value (“dcp”).

- Enter a password in the TestUserPassword parameter. The Lake Formation personas use it to log in to the AWS Management Console.

- Check the acknowledgement box at the last step of creating the CloudFormation stack.

- Click “Create stack”
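The console steps above could also be scripted. The sketch below only assembles the arguments for boto3's CloudFormation `create_stack` call; the helper name `build_create_stack_args` is hypothetical, and the capabilities listed are an assumption about what the console acknowledgement corresponds to for this template.

```python
def build_create_stack_args(template_body: str, test_user_password: str,
                            stack_name: str = "dcp") -> dict:
    """Assemble keyword arguments for a CloudFormation create_stack call."""
    return {
        "StackName": stack_name,
        "TemplateBody": template_body,
        "Parameters": [
            {"ParameterKey": "TestUserPassword", "ParameterValue": test_user_password},
        ],
        # Assumption: the acknowledgement checkbox maps to IAM capabilities;
        # the exact set required depends on the template.
        "Capabilities": ["CAPABILITY_IAM", "CAPABILITY_NAMED_IAM"],
    }
```

A call might then look like `boto3.client("cloudformation").create_stack(**build_create_stack_args(body, password))`, where `body` is the text of the downloaded template. Large templates may need to be uploaded to S3 and passed as `TemplateURL` instead of `TemplateBody`.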

To avoid incurring future charges, delete the resources.

Navigate to the CloudFormation console and delete the stack named “dcp” (or the stack named with your customized value during the CloudFormation stack creation step).

See CONTRIBUTING for more information.

Building a serverless tokenization solution to mask sensitive data

Como anonimizar seus dados usando o AWS Glue (How to anonymize your data using AWS Glue)

Enabling data classification for Amazon RDS database with Macie

This library is licensed under the MIT-0 License. See the LICENSE file.