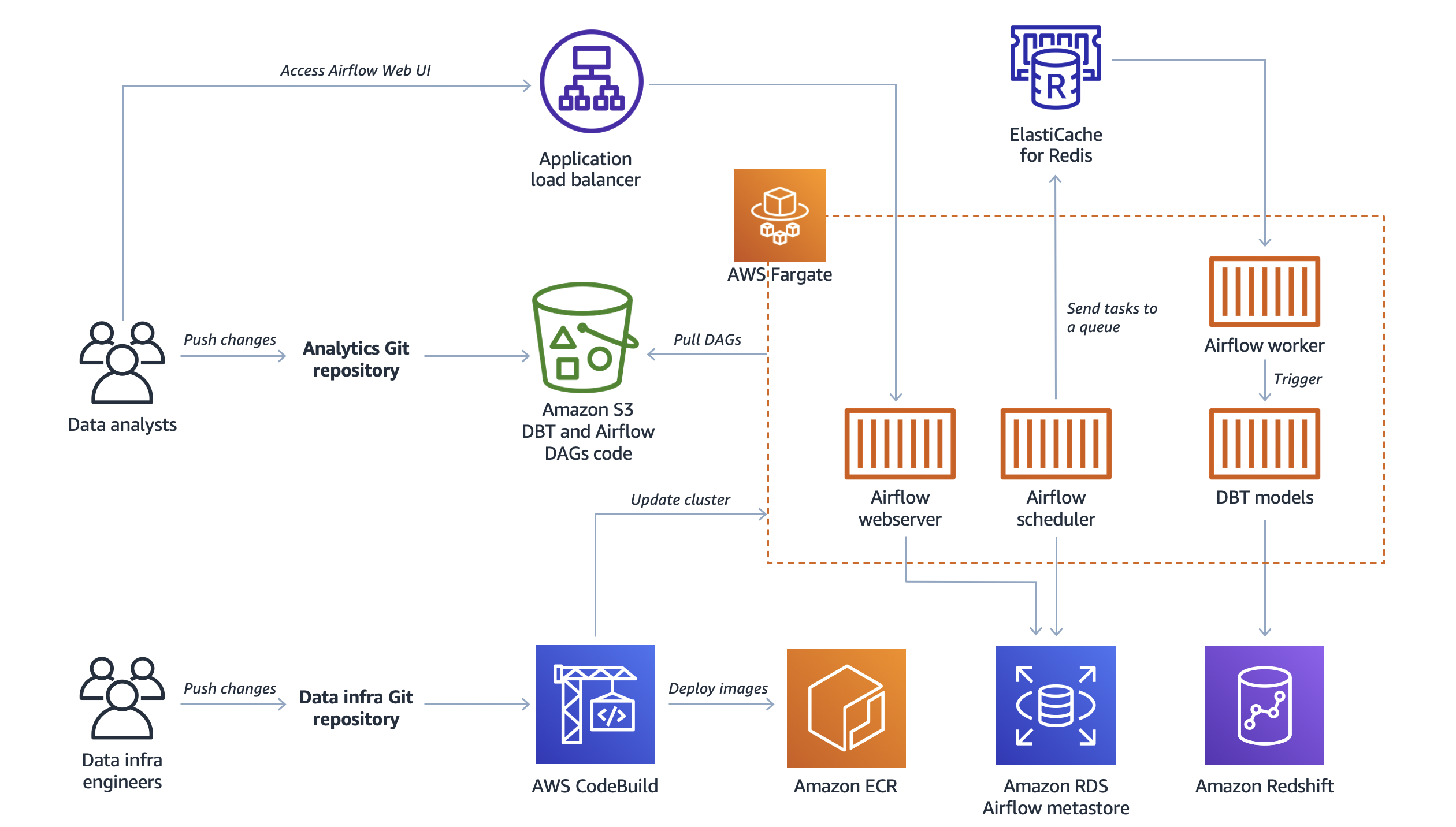

This repository contains code to deploy the architecture described in the blog post: "Build DataOps platform to break silos between engineers and analysts".

The architecture includes the following AWS services:

- Amazon Elastic Container Service, to run Apache Airflow and dbt

- Amazon Elastic Container Registry, to store Docker images for Airflow and dbt

- Amazon Redshift, as the data warehouse

- Amazon Relational Database Service, as the metadata store for Airflow

- Amazon ElastiCache for Redis, as a Celery backend for Airflow

- Amazon Simple Storage Service, to store Airflow and dbt DAGs

- AWS CodeBuild (optional), to automate deployments

This repository has two main project folders: dataops-infra and analytics. The setup is meant to demonstrate how DataOps can foster effective collaboration between data engineers and data analysts by separating the platform infrastructure code from the business logic.

Treat these two folders as two separate repositories, each following its own release cycle.

The dataops-infra folder contains code and instructions to deploy the platform infrastructure described in the Architecture overview section. This project is written from the perspective of a data engineering team that is responsible for creating and maintaining data infrastructure such as the data lake, data warehouse, orchestration, and CI/CD pipelines for analytics.

The analytics folder contains code and instructions to manage and deploy Airflow and dbt DAGs on the DataOps platform. This project is written from the perspective of a data analytics team composed of data analysts and data scientists. They have domain knowledge and are responsible for serving analytics requests from stakeholders such as marketing and business development teams, so that the company can make data-driven decisions.

This library is licensed under the MIT-0 License. See the LICENSE file.