This repository provides a pattern to use HashiCorp Terraform to deploy resources for a workload in a secure fashion, minimising the overhead of starting a new project.

The solution is designed around users storing Terraform assets within an AWS CodeCommit repository. Commits to this repository will trigger an AWS CodePipeline pipeline which will scan the code for security vulnerabilities, before deploying the project into the AWS account.

The solution supports multiple deployment environments and best practices such as Infrastructure as Code and static analysis.

The default AWS region is set to eu-west-1.

- There is a convention applied for working with Terraform in multiple deployment environments, so you don't need to reinvent the wheel

- Operations are automated

- The same operations are to be performed locally and in a CICD pipeline

- A CICD pipeline is provided, and it can be extended by adding more stages or actions

- A shift-left approach to security is applied

There are several practices implemented by this solution:

- least privilege principle

- pipelines as code

- environment as code

- Infrastructure as Code

- enforced consistency between local environment and environment provided by CICD

- multiple (2) deployment environments (testing and production)

- code scanning for security vulnerabilities

- 1 git repository is used to store all the code and it is treated as a single source of truth

The scope of this solution is limited to 1 AWS account. This means that all the AWS resources, even though they belong to different environments (testing and production), are deployed into the same AWS account. This is not recommended; the recommendation is to transition to multiple AWS accounts. Please follow this link for more details.

The solution was tested on macOS, using the Bash shell. It should also work on Linux.

The main infrastructure code - terraform/

The directory terraform/ contains the main infrastructure code, which defines the AWS resources. The workflow for these resources is:

- first deploy them to a testing environment,

- then verify that they were deployed correctly in the testing environment,

- then deploy them to a production environment,

- then verify that they were deployed correctly in the production environment.

Terraform backend - terraform_backend/

In order to use Terraform, Terraform backend must be set up. For this solution the S3 Terraform backend was chosen. This means that an S3 bucket and a DynamoDB table have to be created. There are many ways of creating these 2 resources. In this solution it was decided to use Terraform with local backend to set up the S3 Terraform backend. The code needed to set up the S3 backend is kept in the terraform_backend/ directory.

CICD pipeline - cicd/

In order to use a CICD pipeline to deploy the main infrastructure code, a pipeline has to be defined and deployed. In this solution, AWS CodePipeline and AWS CodeBuild provide the CICD pipeline capabilities. The AWS CodePipeline pipeline and the AWS CodeBuild projects are also deployed using Terraform. The Terraform code is kept in the cicd/ directory.

There is also the docs/ directory. It contains files which help to document this solution.

In summary, there are 3 directories with Terraform files. Thanks to that, deploying this solution:

- is automated

- is done in an idempotent way (meaning - you can run this deployment multiple times and expect the same effect)

- can be reapplied in case of configuration drift

The process of deploying this solution involves these steps:

- Set up access to AWS Management Console and to AWS CLI.

- Create an AWS CodeCommit git repository and upload code to it.

- Set up Terraform backend (using terraform_backend/ directory).

- Deploy a CICD pipeline (using cicd/ directory).

- Deploy the main infrastructure code (using terraform/ directory).

- Clean up.

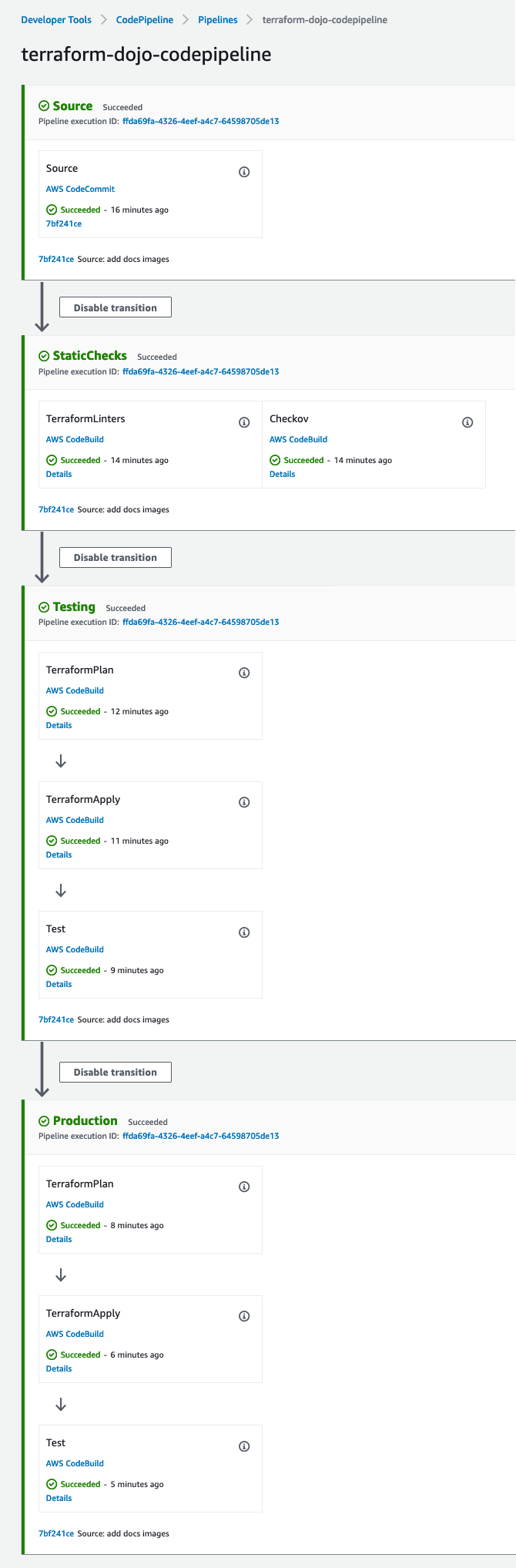

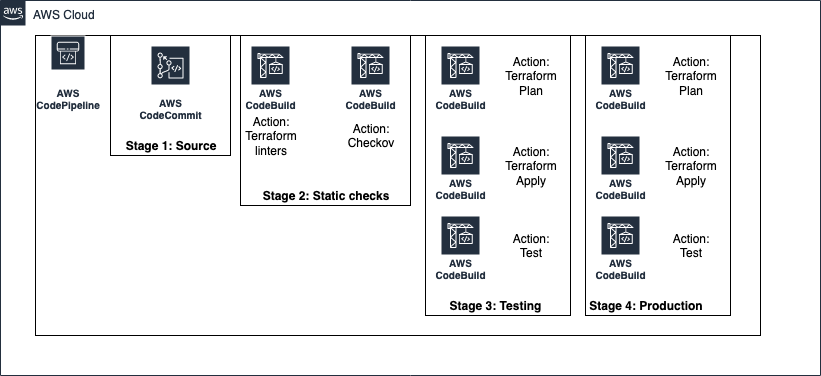

AWS CodePipeline and AWS CodeBuild are used here. There is 1 pipeline. It covers all the environments. The following stages are provided:

- Source - uses an AWS CodeCommit git repository

- StaticChecks - runs 2 actions (Terraform static checks and Checkov)

- Testing - deploys example AWS resources into the testing environment using terraform plan and terraform apply commands, and then runs a simple test

- Production - deploys example AWS resources into the production environment using terraform plan and terraform apply commands, and then runs a simple test

The pipeline is deployed by Terraform, and all the code needed to deploy it is kept in the cicd/terraform-pipeline directory. There are also several buildspec files, available in the cicd/ directory.

By default, the 2 actions in the StaticChecks stage are run sequentially. In order to run them in parallel, please add this line near the top of the tasks file:

export TF_VAR_run_tf_static_checks_parallely=true

Separate CodeBuild projects are provided for the different CodePipeline actions, but the same buildspec file is used to run terraform plan in all the environments (see the AWS docs).

Both the tasks file and the pipeline serve also as documentation about how to operate this solution.

The architecture of the pipeline is presented in the picture below.

- To extend the pipeline, add a CodePipeline Stage or Action in cicd/terraform-pipeline/pipeline.tf

- You can either reuse a CodeBuild Project, or add a new one in cicd/terraform-pipeline/codebuild.tf. Adding a new CodeBuild Project also requires a new buildspec file. Create one in the cicd/ directory.

There are 2 IAM roles provided:

- automation_testing

- automation_production

The roles are supposed to be assumed by an actor (either a human or a CICD server) performing a deployment. The automation_testing role is to be assumed when the deployment targets the testing environment, and the automation_production role when the deployment targets the production environment.

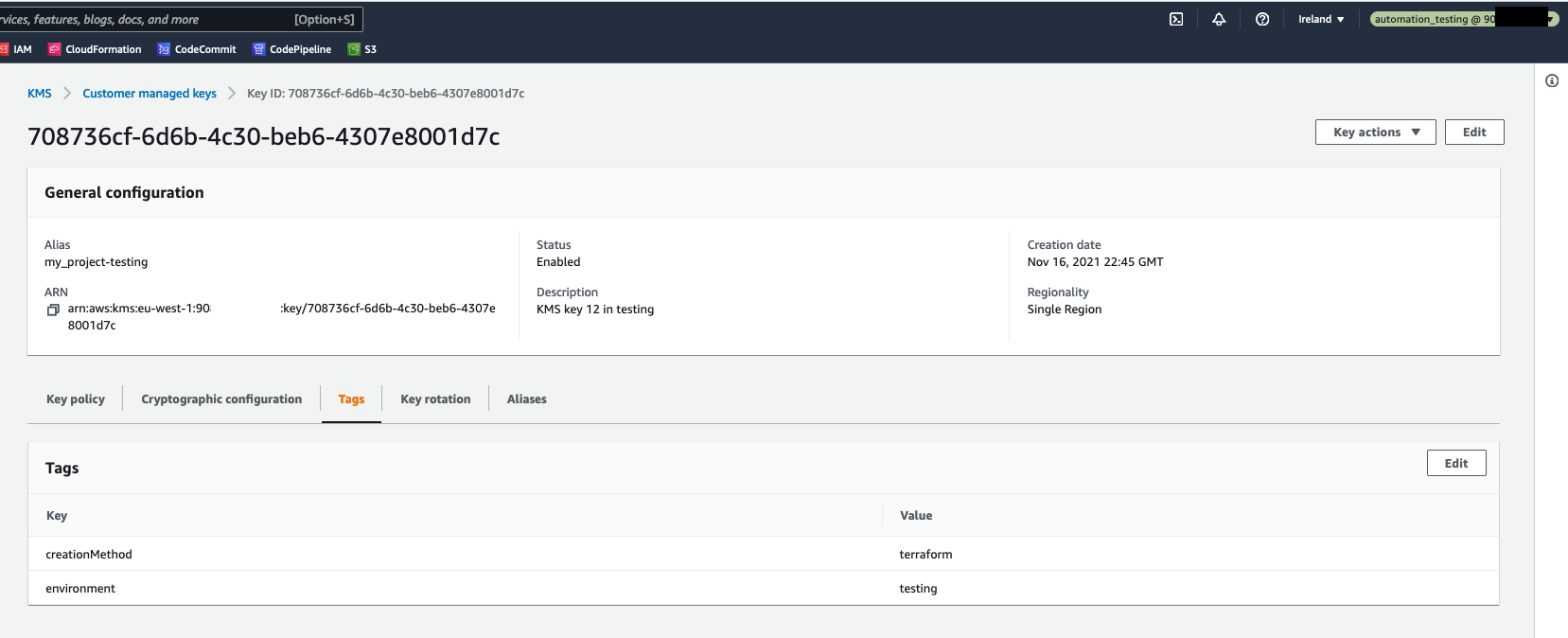

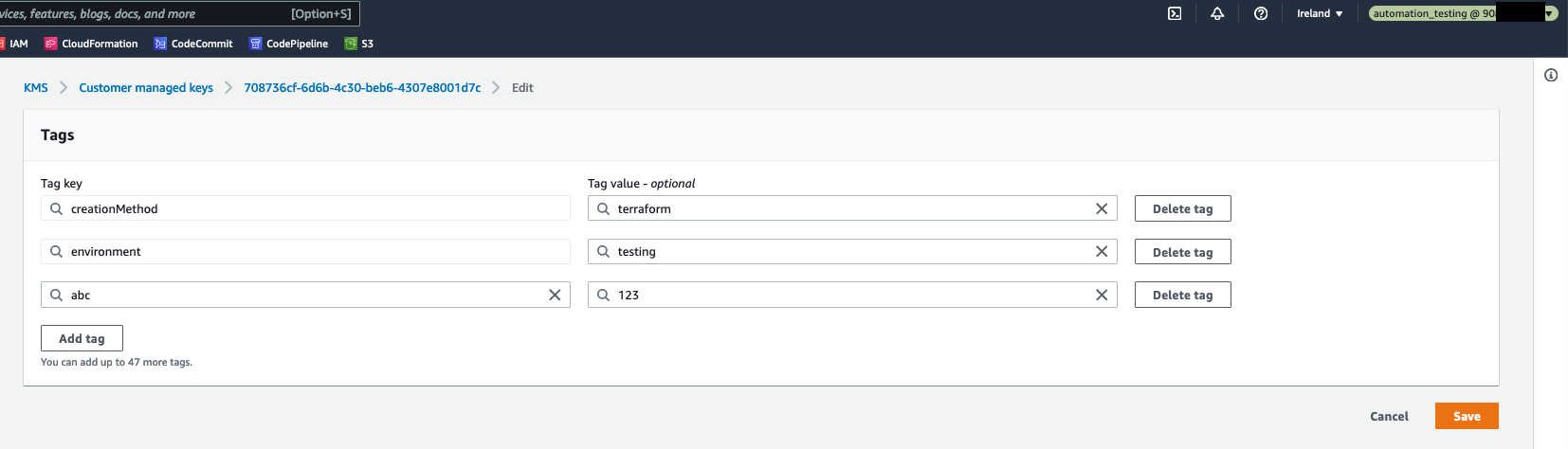

Both roles provide read-only access to the AWS services by utilizing the SecurityAudit AWS managed policy. Furthermore, the automation_testing role allows write access to AWS resources which are tagged as environment=testing. Similarly, the automation_production role allows write access to AWS resources tagged as environment=production. This is implemented in cicd/terraform-pipeline/tf_iam_roles.tf in the following way (shown here for the automation_testing role):

    "Statement": [
        {
            "Sid": "AllowOnlyForChosenEnvironment",
            "Effect": "Allow",
            "Action": [ "*" ],
            "Resource": "*",
            "Condition": {
                "StringLike": {
                    "aws:ResourceTag/environment": [ "testing" ]
                }
            }
        }
    ]

For more information, please read the IAM User Guide.

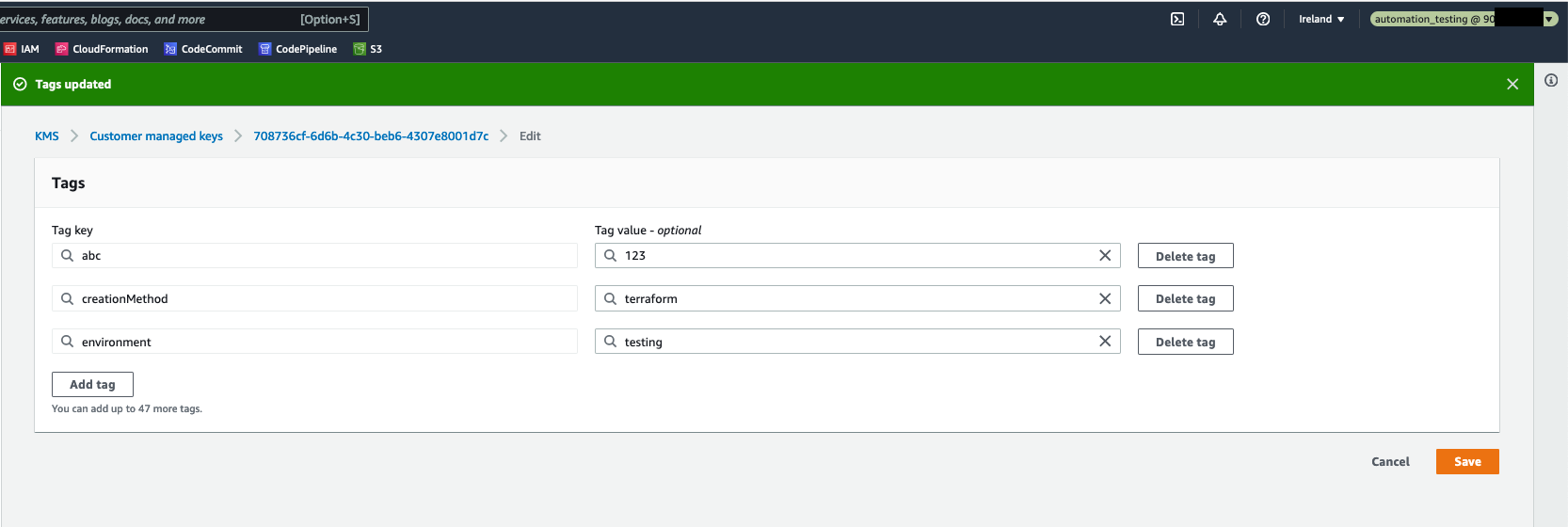

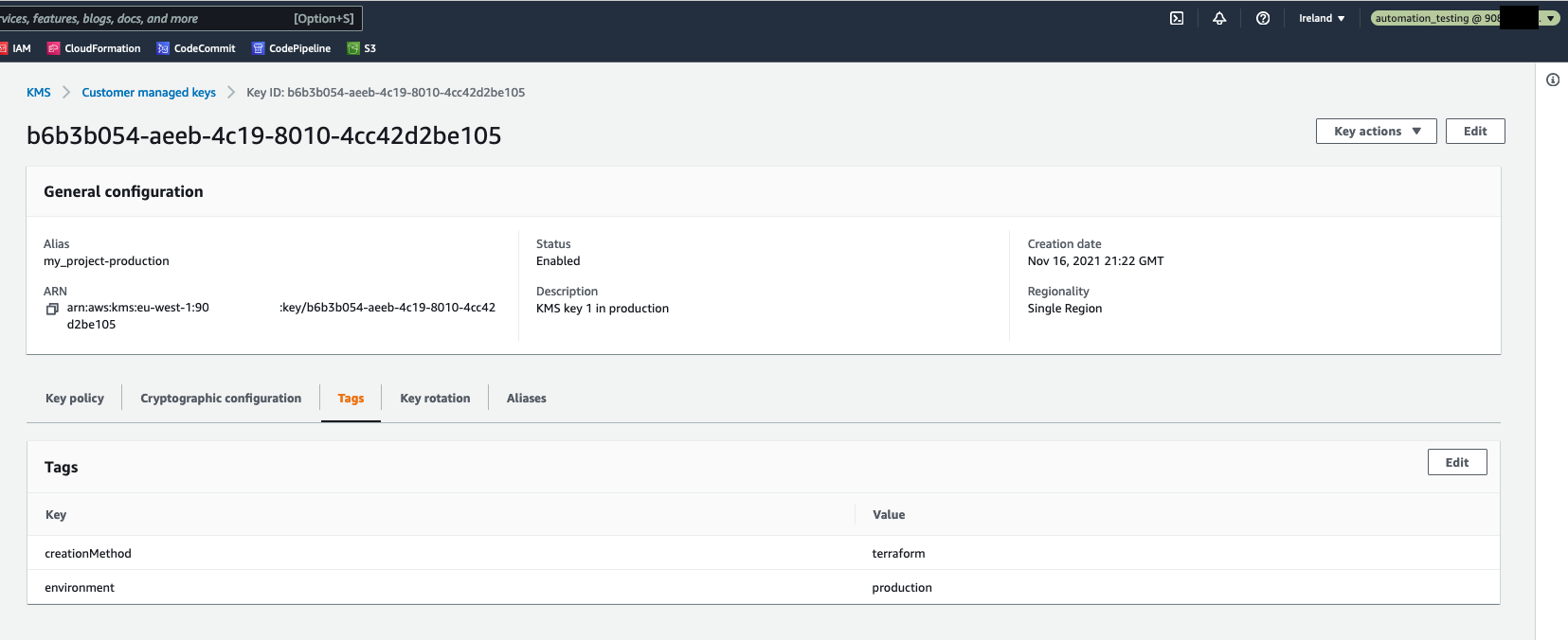

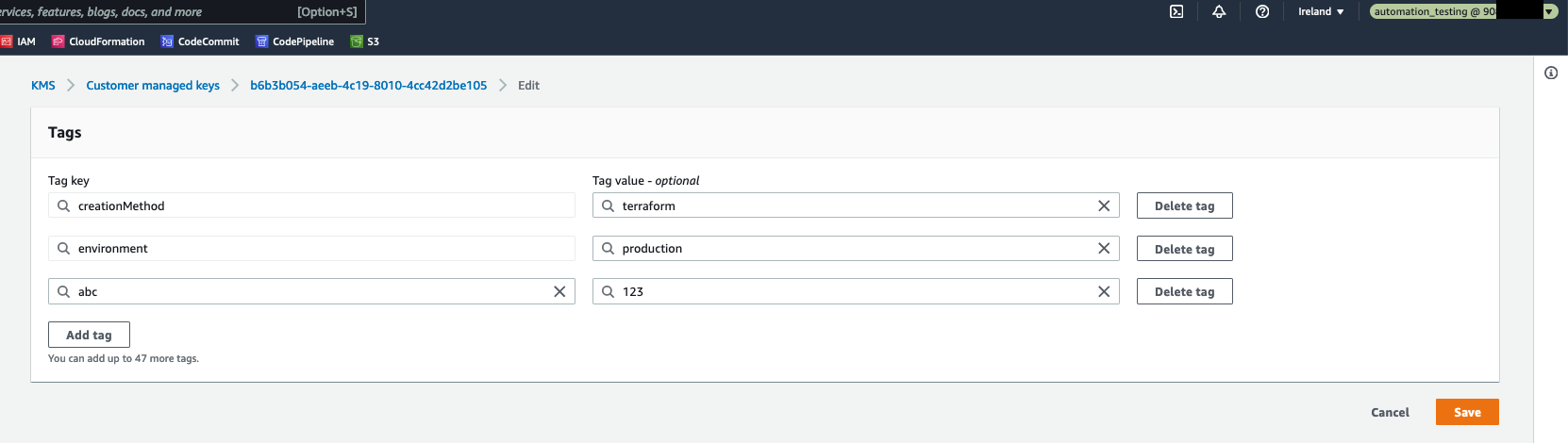

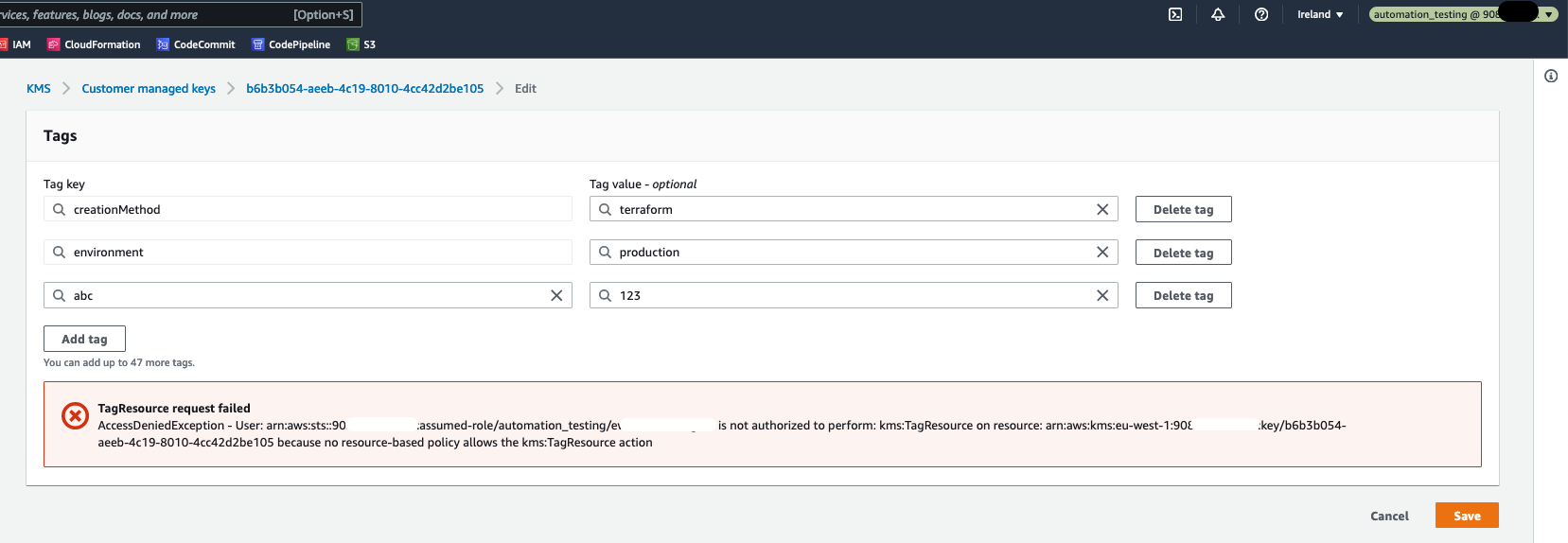

As a result, when the automation_testing IAM role is assumed, it is possible to edit an AWS resource which is tagged as environment=testing, and it is not possible to edit any AWS resource without this tag. Please see the accompanying pictures.

However, when trying to modify a resource tagged as environment=production, there is an error.

Environment as code and enforced consistency between local environment and environment provided by CICD

Environment as code is provided thanks to Docker and Dojo. Dojo is a CLI program used to facilitate development in Docker containers. Thanks to this, the environment is specified as code. In this solution, there is one Dojo configuration file, the Dojofile, which specifies the environment needed to run Terraform commands.

The benefits are:

- the same software versions are used by all the engineers (e.g. Terraform version or AWS CLI version). This fixes the "works for me" problem, where a program works on 1 machine, and does not work on another

- you can use multiple software versions at once without reinstalling them. This is solved by using different Docker images and saving them in Dojofiles.

- the same software versions are used by human engineers and by CICD server

There is also a tasks file used here. It is a Bash script built around a switch (a case statement), which lets you run different tasks. It is similar to a Makefile or Rakefile, but you don't have to install anything but Bash. Thanks to this, it's possible to run e.g.:

./tasks tf_lint

and it will create a Docker container and run Terraform static checks inside of that container. The Docker container is orchestrated by Dojo. Dojo takes care of mounting directories, ensuring that the container is not run as root, and providing a standard way of doing development.

Note that the same commands are run locally on a workstation and invoked in the buildspec files, e.g. buildspec_checkov.yml. An additional benefit is that, since the commands can also be run locally, development is faster because you get feedback sooner.

There is a convention that states that all the tasks which run in a Docker container, start with a letter, e.g.

./tasks tf_lint

and all the tasks that run locally, start with an underscore, e.g.

./tasks _tf_lint

Thanks to that you can choose which way you prefer - running locally or running in a Docker container.
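The naming convention above could be implemented with a Bash case statement. The following is only a minimal sketch, not the actual tasks file: the function name run_task and the printed messages are hypothetical, and the sketch prints what it would do instead of calling Dojo or Terraform.

```shell
#!/usr/bin/env bash
# Simplified, hypothetical sketch of a tasks-style dispatcher.
# It only prints what it would do; it does not call Dojo or Terraform.

run_task() {
  local task="${1:-}"
  case "${task}" in
    _tf_lint)
      # Leading underscore: run directly on the current machine.
      echo "local: terraform static checks"
      ;;
    tf_lint)
      # No underscore: re-run the underscore variant inside a Docker container.
      echo "docker: dojo \"./tasks _tf_lint\""
      ;;
    *)
      echo "Unknown task: '${task}'" >&2
      return 1
      ;;
  esac
}

run_task tf_lint
run_task _tf_lint
```

Keeping both variants in one switch means that the containerised task is only a thin wrapper around the local one, so both paths run the same commands.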


Furthermore, Terraform provider versions are pinned. The versions are set in the backend.tf files in each of the 3 directories with Terraform code (terraform_backend, cicd, terraform). This follows the HashiCorp recommendation:

When multiple users or automation tools run the same Terraform configuration, they should all use the same versions of their required providers

The main infrastructure code is kept in terraform/ directory. There are 2 environments provided: testing and production. Terraform variables values are set for each environment in an *.tfvars file. There is 1 such file provided for each environment (so there are 2 such files here).

Terraform state is managed in S3. There is 1 S3 bucket, called terraform-dojo-codepipeline-terraform-states-${account_id}, and it contains the following state files:

    terraform-dojo-codepipeline-cicd.tfstate # CICD pipeline infrastructure state
    testing/
        terraform-dojo-codepipeline-testing.tfstate # State for testing environment
    production/
        terraform-dojo-codepipeline-production.tfstate # State for production environment
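The layout above can be expressed as a small helper that maps a name to the key of its state file. This is an illustrative sketch only; the function state_key is hypothetical and is not taken from the tasks file.

```shell
#!/usr/bin/env bash
# Hypothetical helper mirroring the state file layout listed above.

project_name="terraform-dojo-codepipeline"

# Prints the S3 object key of a state file.
# Accepted names: cicd, testing, production.
state_key() {
  local name="$1"
  case "${name}" in
    cicd)
      # The pipeline state lives at the top level of the bucket.
      echo "${project_name}-cicd.tfstate"
      ;;
    testing|production)
      # Each environment has its own prefix.
      echo "${name}/${project_name}-${name}.tfstate"
      ;;
    *)
      echo "Unknown state: '${name}'" >&2
      return 1
      ;;
  esac
}

state_key cicd
state_key testing
state_key production
```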

For each of the environments, there is an applicable pipeline Stage. The Stages consist of the same Actions: TerraformPlan, TerraformApply, Test.

The end commands that are supposed to be used in each environment to deploy the infrastructure are:

MY_ENVIRONMENT=testing ./tasks tf_plan

MY_ENVIRONMENT=testing ./tasks tf_apply

MY_ENVIRONMENT=production ./tasks tf_plan

MY_ENVIRONMENT=production ./tasks tf_apply

Under the hood, the ./tasks tf_plan task does the following:

- assumes the applicable IAM role, either automation_testing or automation_production

- runs dojo to invoke another task, ./tasks _tf_plan

- and this task in turn runs:

cd terraform/

func_tf_init

terraform plan -out ${project_name}-${MY_ENVIRONMENT}.tfplan -var-file ${MY_ENVIRONMENT}.tfvars

Thanks to this, the two environments are orchestrated in the same way, using the same CLI commands. The only difference is the MY_ENVIRONMENT environment variable value.
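As a rough illustration of how one variable drives both environments, the sketch below derives the IAM role name and the terraform plan command line from the environment name. The function names role_for_environment and plan_command are hypothetical, and no role is assumed and no plan is run; the values are only printed.

```shell
#!/usr/bin/env bash
# Dry-run sketch: prints the role name and the plan command for one environment.

project_name="terraform-dojo-codepipeline"

# IAM role to assume for an environment; anything else is rejected.
role_for_environment() {
  case "$1" in
    testing|production)
      echo "automation_$1"
      ;;
    *)
      echo "Unsupported environment: '$1'" >&2
      return 1
      ;;
  esac
}

# The terraform plan command line for an environment.
plan_command() {
  local environment="$1"
  echo "terraform plan -out ${project_name}-${environment}.tfplan -var-file ${environment}.tfvars"
}

MY_ENVIRONMENT="${MY_ENVIRONMENT:-testing}"
role_for_environment "${MY_ENVIRONMENT}"
plan_command "${MY_ENVIRONMENT}"
```

Rejecting unknown environment names early avoids running Terraform against a .tfvars file that does not exist.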

Code scanning for security vulnerabilities is provided by Checkov. Please run the following command to invoke the scan:

./tasks checkov

Please install these first:

The process of deploying this solution involves these steps:

- Set up access to AWS Management Console and to AWS CLI.

- Create an AWS CodeCommit git repository and upload code to it.

- Set up Terraform backend (using terraform_backend/ directory).

- Deploy a CICD pipeline (using cicd/ directory).

- Deploy the main infrastructure code (using terraform/ directory).

- Clean up.

- Set up access to AWS Management Console and to AWS CLI.

  - It is assumed that you already have an AWS account and that you can connect to the AWS Management Console using, for example, an IAM role or an IAM user.

  - Please ensure that you also have programmatic access set up. You may verify this by running the following command:

        aws s3 ls

    It should succeed without errors. Please see this reference about programmatic access.

  - Please ensure that your IAM user or IAM role has sufficient permissions. You may use, for example, the AWS managed IAM policy AdministratorAccess.

- Create an AWS CodeCommit git repository and upload code to it.

  - Clone this git repository using your preferred protocol, such as HTTPS or SSH.

  - Create an AWS CodeCommit git repository by running:

        project_name=terraform-dojo-codepipeline
        aws codecommit create-repository --repository-name ${project_name}

  - Upload the code from the locally cloned git repository to the AWS CodeCommit git repository. There are multiple ways to do it.

    - It is recommended to use GRC (git-remote-codecommit).

      - Please follow these instructions first.

      - Then add a second Git remote to the just cloned repository:

            project_name=terraform-dojo-codepipeline
            git remote add cc-grc codecommit::eu-west-1://${project_name}

      - And upload (push) the code to the just added Git remote by running:

            git push cc-grc main

    - If you prefer using SSH:

      - Please follow these instructions first. Ensure that you have an SSH key pair available and that you have configured SSH to connect to AWS CodeCommit.

      - Then add a second Git remote to the just cloned repository:

            project_name=terraform-dojo-codepipeline
            git remote add cc-ssh ssh://git-codecommit.eu-west-1.amazonaws.com/v1/repos/${project_name}

      - And upload (push) the code to the just added Git remote by running:

            git push cc-ssh main

- Set up Terraform backend (using terraform_backend/ directory).

  - Run the following command:

        ./tasks setup_backend

  - This step will run terraform plan and terraform apply commands. If you need to review the terraform plan output first, you may want to run the commands manually; please see the tasks file for the specific commands. When running the commands manually, please remember to export the same environment variables as set in the tasks file.

  - This step uses local Terraform backend. The state is saved to the file terraform_backend/terraform.tfstate. You may choose to git commit that file and upload it (push it) to your AWS CodeCommit git repository.

  - This step will create an S3 bucket and a DynamoDB table. This is needed to manage Terraform state remotely. This state will be used for the resources defined in terraform/ and in cicd/ directories.

- Deploy a CICD pipeline (using cicd/ directory).

  - Please ensure that the above steps were run first.

  - Run the following command:

        ./tasks setup_cicd

  - This step will run terraform plan and terraform apply commands. If you need to review the terraform plan output first, you may want to run the commands manually; please see the tasks file for the specific commands. When running the commands manually, please remember to export the same environment variables as set in the tasks file.

  - This step uses the remote S3 Terraform backend. The state is saved in the S3 bucket created in step 3.

  - This step will create an AWS CodePipeline pipeline, together with all the other necessary AWS resources (such as: an S3 bucket for pipeline artifacts, and AWS CodeBuild projects). It will also create the 2 IAM Roles, described under Least privilege principle.

- Deploy the main infrastructure code (using terraform/ directory).

  - All the steps here are run automatically by a CICD pipeline. You don't need to do anything. The CICD pipeline will be triggered automatically as soon as it is created and also on git push events. You may also trigger the CICD pipeline manually.

  - You may choose to run these commands locally (in addition to them being run already in a CICD pipeline):

        # Run static checks
        ./tasks tf_lint
        ./tasks checkov
        # Deploy to a testing environment
        MY_ENVIRONMENT=testing ./tasks tf_plan
        MY_ENVIRONMENT=testing ./tasks tf_apply
        # Deploy to a production environment
        MY_ENVIRONMENT=production ./tasks tf_plan
        MY_ENVIRONMENT=production ./tasks tf_apply

  - The Terraform commands here use the remote S3 Terraform backend. The state is saved in the S3 bucket created in step 3.

  - The Terraform commands here will create some example AWS resources, such as a KMS key, an S3 bucket, and an SSM parameter. One set of the resources is created in the testing environment, another set is created in the production environment.

- Clean up.

  - When you are done with this solution you may want to destroy all resources, so that you do not incur additional costs.

  - Please run the following commands in the given order:

        MY_ENVIRONMENT=testing ./tasks tf_plan destroy
        MY_ENVIRONMENT=testing ./tasks tf_apply
        MY_ENVIRONMENT=production ./tasks tf_plan destroy
        MY_ENVIRONMENT=production ./tasks tf_apply
        ./tasks setup_cicd destroy
        ./tasks setup_backend destroy

  - You may want to destroy the CodeCommit git repository too.
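The order of the clean-up commands matters, because the Terraform backend has to outlive everything whose state it stores. A possible wrapper, sketched under the assumption that each task returns a non-zero exit code on failure, stops at the first failed step. The names run, destroy_all and DRY_RUN are hypothetical; DRY_RUN defaults to true here so that the sketch only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch: tear everything down in the documented order, stopping on the first failure.
# DRY_RUN defaults to "true" so that nothing is destroyed by accident.

DRY_RUN="${DRY_RUN:-true}"

# Either prints a command (dry run) or executes it.
run() {
  if [ "${DRY_RUN}" = "true" ]; then
    echo "would run: $*"
  else
    "$@"
  fi
}

destroy_all() {
  local environment
  # Environments first, in the same order as they were deployed.
  for environment in testing production; do
    run env MY_ENVIRONMENT="${environment}" ./tasks tf_plan destroy || return 1
    run env MY_ENVIRONMENT="${environment}" ./tasks tf_apply || return 1
  done
  # Then the pipeline, and the state backend last.
  run ./tasks setup_cicd destroy || return 1
  run ./tasks setup_backend destroy || return 1
}

destroy_all
```

Setting DRY_RUN=false would execute the commands for real, so the printed list is worth reviewing first.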

Going through the code, you may notice that the variable project_name is set. This variable is used as a part of the name of many AWS resources created by this solution, including the CodeCommit git repository. It is used in all 3 directories with Terraform code (terraform_backend, cicd, terraform).

This variable is set in the tasks file. It defaults to "terraform-dojo-codepipeline". The variable value is also propagated to Terraform in the tasks file, by running: export TF_VAR_project_name="terraform-dojo-codepipeline".

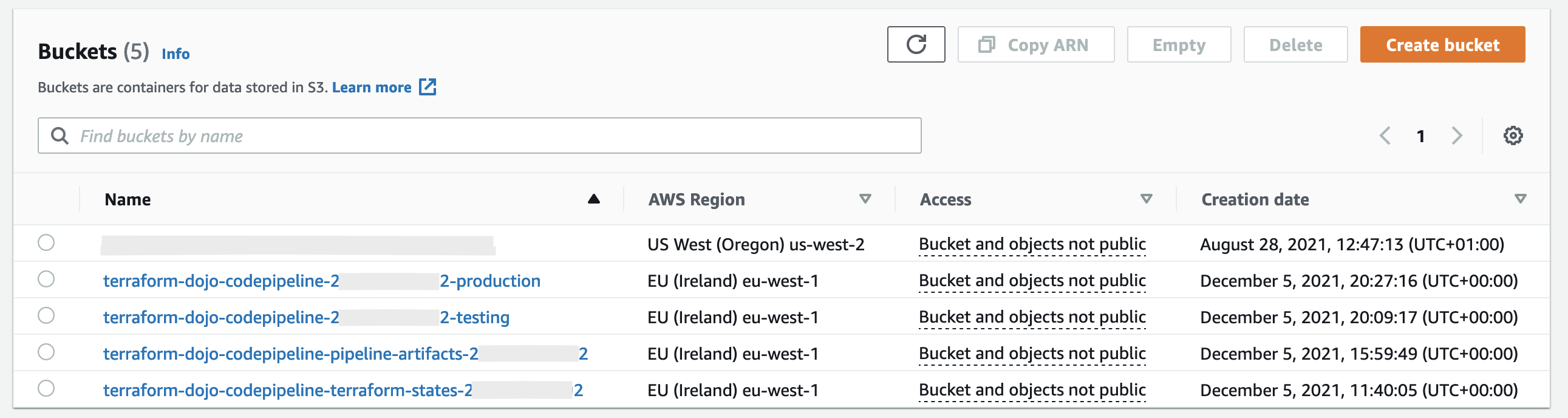

Using the default value of the project_name variable, the following four S3 buckets would be created:

- terraform-dojo-codepipeline-terraform-states-${account_id} to keep Terraform state files

- terraform-dojo-codepipeline-pipeline-artifacts-${account_id} to keep CodePipeline artifacts

- terraform-dojo-codepipeline-${account_id}-testing and terraform-dojo-codepipeline-${account_id}-production to serve as example Terraform resources

S3 buckets are global resources, and each S3 bucket name must be unique, not only within 1 AWS account, but globally. This means that creating an S3 bucket may fail if the name is already taken. One approach to overcome this is to include the AWS account ID in the S3 bucket name. This approach was applied in this solution.
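To illustrate the naming scheme, the following sketch derives the four bucket names from a project name and an AWS account ID. The function bucket_names and the account ID 123456789012 are placeholders chosen for this example.

```shell
#!/usr/bin/env bash
# Sketch: derive the four S3 bucket names from project_name and the AWS account ID.

bucket_names() {
  local project_name="$1" account_id="$2"
  echo "${project_name}-terraform-states-${account_id}"   # Terraform state files
  echo "${project_name}-pipeline-artifacts-${account_id}" # CodePipeline artifacts
  echo "${project_name}-${account_id}-testing"            # example resource, testing
  echo "${project_name}-${account_id}-production"         # example resource, production
}

# 123456789012 is a placeholder account ID.
bucket_names "terraform-dojo-codepipeline" "123456789012"
```

In practice, the account ID could be looked up with aws sts get-caller-identity --query Account --output text instead of being hard-coded.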

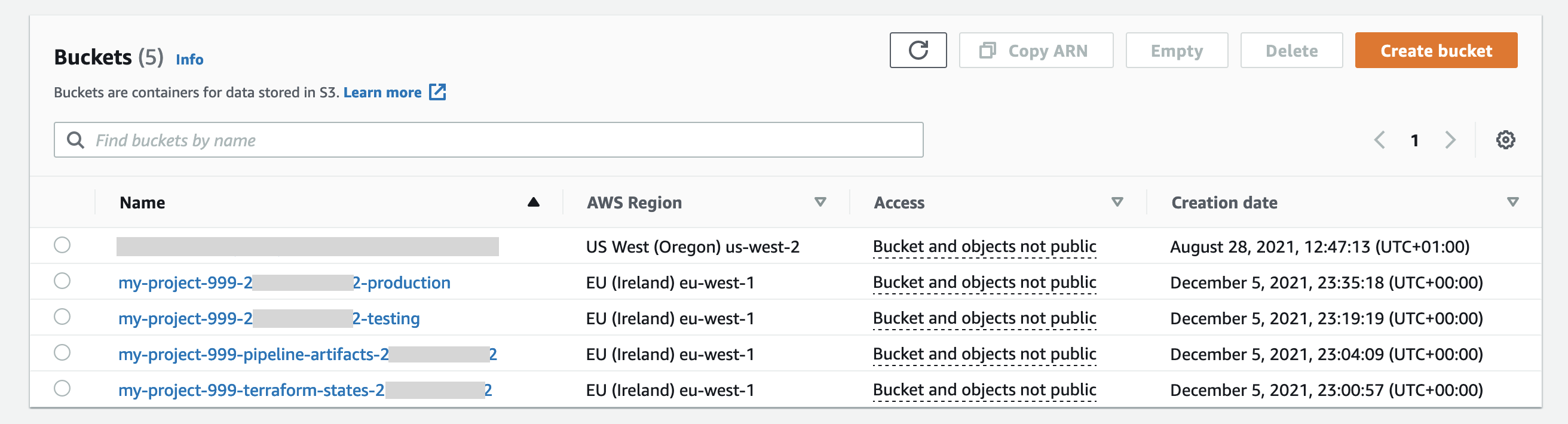

You may want to set this variable to some custom value, e.g. "my-project-999". To change the variable value, please:

- Be aware that only lowercase alphanumeric characters and hyphens are allowed. This limitation comes from the S3 bucket naming rules.

- Set the variable value in the tasks file. Replace:

export project_name="terraform-dojo-codepipeline"

with your custom value, e.g.:

export project_name="my-project-999"

and then git commit and git push that change to a git repository named my-project-999.

This change should be done before any resources are deployed.
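The character restriction on project_name could be checked before anything is deployed. The sketch below is a hypothetical helper (is_valid_project_name is not part of the tasks file) that only tests the rule stated here; the full S3 bucket naming rules contain further constraints, such as length limits.

```shell
#!/usr/bin/env bash
# Sketch: accept only lowercase alphanumeric characters and hyphens.

is_valid_project_name() {
  # Use the C locale so that the range a-z does not match uppercase letters.
  local LC_ALL=C
  [[ "$1" =~ ^[a-z0-9-]+$ ]]
}

for candidate in "my-project-999" "My_Project"; do
  if is_valid_project_name "${candidate}"; then
    echo "${candidate}: ok"
  else
    echo "${candidate}: not allowed"
  fi
done
```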

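Setting the project_name variable to the custom value "my-project-999" would lead to creating these S3 buckets, following the same naming pattern:

- my-project-999-terraform-states-${account_id}

- my-project-999-pipeline-artifacts-${account_id}

- my-project-999-${account_id}-testing and my-project-999-${account_id}-production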

This solution provides a Bash script, the tasks file. It is expected that this file will be used to run commands like terraform plan and terraform apply. But sometimes this is not enough, and there is a need to run more Terraform commands, such as terraform taint or terraform state list, etc.

There are multiple alternative solutions here:

- You may want to add a new task in the tasks file. Then, you can invoke the task in the same way as other tasks.

- You can invoke the needed commands manually.

  - Start with running dojo in the root directory of this git repository. This will create a Docker container and it will also mount the current directory into the Docker container.

  - You may now run the needed commands interactively, in the Docker container.

  - When you are done, just run exit and you will exit the Docker container. The container will be removed automatically.

This solution uses a public Docker image, terraform-dojo, hosted on DockerHub. This Docker image is used to run commands locally and also in AWS CodeBuild projects.

When pulling the Docker image, you may experience the following error:

    docker: Error response from daemon: toomanyrequests:
    You have reached your pull rate limit. You may increase the limit by
    authenticating and upgrading: https://www.docker.com/increase-rate-limit

This problem occurs because Docker has introduced a download rate limit. The documentation page states that Docker Hub limits the number of Docker image downloads (“pulls”) based on the account type of the user pulling the image.

There are multiple alternative solutions, documented e.g. in this AWS Blog Post:

- You may want to use Amazon Elastic Container Registry as your Docker images registry. There are 2 options here:

  - you can pull the terraform-dojo Docker image from DockerHub and push it into Amazon ECR.

  - you can use Amazon ECR as a pull through cache. Please read more here.

- You may configure CodeBuild to authenticate the layer pulls using your DockerHub account credentials. Please read more here.

- You may want to have a paid Docker subscription. Please read more here.
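The first Amazon ECR option could look roughly like the sketch below. It assumes that the target ECR repository already exists and that Docker and the AWS CLI are installed. The function names are hypothetical, and the image name kudulab/terraform-dojo in the commented example call is an assumption to verify against your setup.

```shell
#!/usr/bin/env bash
# Sketch: copy an image from DockerHub into a private Amazon ECR repository.
# Assumption: the ECR repository already exists in the given account and region.

# Host name of the private ECR registry for an account and region.
ecr_registry() {
  local account_id="$1" region="$2"
  echo "${account_id}.dkr.ecr.${region}.amazonaws.com"
}

# Pulls source_image:tag from DockerHub and pushes it to ECR under repository:tag.
mirror_image() {
  local source_image="$1" account_id="$2" region="$3" repository="$4" tag="$5"
  local registry target
  registry="$(ecr_registry "${account_id}" "${region}")"
  target="${registry}/${repository}:${tag}"

  aws ecr get-login-password --region "${region}" \
    | docker login --username AWS --password-stdin "${registry}" || return 1
  docker pull "${source_image}:${tag}" || return 1
  docker tag "${source_image}:${tag}" "${target}" || return 1
  docker push "${target}"
}

# Example call (not executed here; the image name and tag are placeholders):
# mirror_image "kudulab/terraform-dojo" "<AWS-Account-ID>" "eu-west-1" "terraform-dojo" "<tag>"
ecr_registry "123456789012" "eu-west-1"
```

After mirroring, the image reference used by Dojo would have to point at the ECR registry instead of DockerHub.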

Dockerfile for terraform-dojo Docker image is available here. You can build the Docker image by:

- Cloning the docker-terraform-dojo git repository.

- Running this command:

./tasks build_local

If you are using Amazon ECR, you will need to add IAM permissions for CodeBuild to pull Docker images from Amazon ECR. Please add the following into cicd/terraform-pipeline/codebuild.tf

    # Allow CodeBuild to run `aws ecr get-login-password` which is needed
    # when CodeBuild needs to pull Docker images from Amazon ECR
    resource "aws_iam_role_policy" "codebuild_policy_allow_ecr" {
      name = "${var.project_name}-codebuild-policy-allow-ecr"
      role = aws_iam_role.codebuild_assume_role.id

      policy = <<EOF
    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Resource": [
            "*"
          ],
          "Action": [
            "ecr:GetAuthorizationToken",
            "ecr:BatchGetImage",
            "ecr:GetDownloadUrlForLayer"
          ]
        }
      ]
    }
    EOF
    }

You would also have to edit the buildspec files. In each of them, please add 2 lines after the commands directive. An example for buildspec_checkov.yml is as follows. Please replace <AWS-Account-ID> with the ID of the AWS account in which you store the ECR Docker image.

    commands:
      # without the following line, HOME is set to /root and
      # therefore, `docker login` command fails
      - export HOME=/home/codebuild-user
      - aws ecr get-login-password --region eu-west-1 | docker login --username AWS --password-stdin <AWS-Account-ID>.dkr.ecr.eu-west-1.amazonaws.com
      - ./tasks checkov

- add support for multiple AWS accounts

- add support for running operations (tasks) on Windows

- add support for using another CICD server, e.g. CircleCI instead of AWS CodePipeline

- add manual approval stage in Production Stage of the pipeline

- add more tests, using some test framework

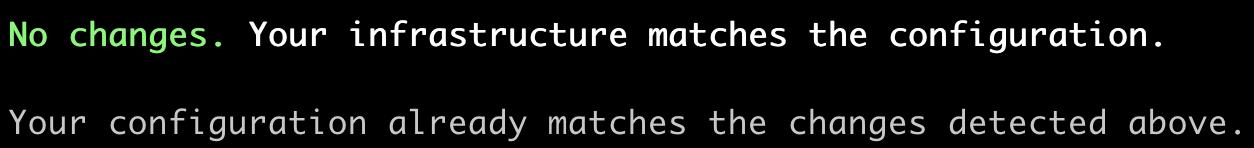

- add additional action in the pipeline stages: testing and production to check that running

terraform planafterterraform applywas already finished would not try show any changes. The expected output fromterraform planwould contain the "No changes" string, like in the picture below:
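A minimal sketch of such a "No changes" check, assuming the terraform plan output is piped into it, could simply search for that string. The function name plan_reports_no_changes is hypothetical, and a sample string stands in for real terraform plan output so that the sketch runs without Terraform.

```shell
#!/usr/bin/env bash
# Sketch: succeed only if the plan output read from stdin contains "No changes".

plan_reports_no_changes() {
  grep -q "No changes"
}

# Stand-in for real plan output, so that the sketch runs without Terraform.
sample_output="No changes. Your infrastructure matches the configuration."

if echo "${sample_output}" | plan_reports_no_changes; then
  echo "plan is clean"
else
  echo "plan would change resources" >&2
fi
```

Terraform also provides the -detailed-exitcode option for terraform plan, which returns exit code 2 when the plan contains changes; that may be more robust than matching text.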

- https://github.com/kudulab/dojo - Dojo

- https://github.com/slalompdx/terraform-aws-codecommit-cicd/blob/v0.1.3/main.tf

- https://github.com/aws/aws-codebuild-docker-images/blob/master/ubuntu/standard/5.0/Dockerfile - CodeBuild supported Docker images

- https://docs.aws.amazon.com/codepipeline/latest/userguide/concepts-devops-example.html - example CodePipeline pipeline

- https://aws.amazon.com/premiumsupport/knowledge-center/codebuild-temporary-credentials-docker/ - how to run Docker containers in CodeBuild

- https://docs.aws.amazon.com/IAM/latest/UserGuide/access_policies_managed-vs-inline.html - IAM

- https://docs.aws.amazon.com/IAM/latest/UserGuide/access_tags.html#access_tags_control-resources - IAM

- https://aws.amazon.com/blogs/developer/build-infrastructure-ci-for-terraform-code-leveraging-aws-developer-tools-and-terratest/ - AWS Blog post with example usage of Terratest framework to test Terraform resources

- https://aws.amazon.com/blogs/devops/adding-custom-logic-to-aws-codepipeline-with-aws-lambda-and-amazon-cloudwatch-events/ - AWS Blog post explaining, among others, how to trigger a CodePipeline pipeline on git commit changes while also avoiding triggering the pipeline on commits that change only the readme.md file

- https://docs.aws.amazon.com/general/latest/gr/aws-sec-cred-types.html#access-keys-and-secret-access-keys - Programmatic access to an AWS Account

See CONTRIBUTING for more information.

This library is licensed under the MIT-0 License. See the LICENSE file.