In this workshop, you will go through the steps required to build a machine learning application on AWS using PyTorch and Amazon SageMaker AI.

- 00 - Introduction: Complete the prerequisites and validations for the labs using a SageMaker Studio JupyterLab notebook.

- 01 - Build a Two Tower recommender model: Prepare the data in a SageMaker Studio JupyterLab notebook and trigger a SageMaker Training job. MLflow is used to track and observe the experiments.

- 02 - Deploy a Two Tower recommender model: Use a SageMaker Studio JupyterLab notebook to deploy the model to an endpoint.

- 03 - Shadow Testing: Set up shadow testing using the SageMaker SDK, deploy two models behind a single endpoint, run tests, and analyze the results.

Amazon SageMaker AI is a fully managed service that enables developers and data scientists to quickly and easily build, train, and deploy machine learning models at any scale.

Amazon SageMaker AI removes the complexity that holds back developer success at each of these steps. It includes modules that can be used together or independently to build, train, and deploy your machine learning models.

You will use the MovieLens dataset from GroupLens to train a two tower recommender model. The dataset contains real and synthetic data that represents users, movies/shows, and the corresponding ratings for a large number of movies. The dataset ranges from 10k to 32 million records, making it suitable for training a recommender model.

In this workshop, your goal is to build a two tower recommender model that recommends movies based on a given user's preferences.

The following excerpt describes the ratings dataset:

All ratings are contained in the file "ratings.dat" and are in the following format:

UserID::MovieID::Rating::Timestamp

- UserIDs range between 1 and 6040

- MovieIDs range between 1 and 3952

- Ratings are made on a 5-star scale (whole-star ratings only)

- Timestamp is represented in seconds since the epoch as returned by time(2)

- Each user has at least 20 ratings
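The record format above can be parsed with a few lines of Python. This is a minimal sketch, not the workshop's own data preparation code: the `Rating` type, the `parse_rating_line` helper, and the sample lines are hypothetical and only illustrate the `::`-separated layout.

```python
from typing import NamedTuple


class Rating(NamedTuple):
    user_id: int
    movie_id: int
    rating: int      # whole-star rating, 1 to 5
    timestamp: int   # seconds since the Unix epoch


def parse_rating_line(line: str) -> Rating:
    """Parse one 'UserID::MovieID::Rating::Timestamp' record."""
    user_id, movie_id, rating, timestamp = line.strip().split("::")
    return Rating(int(user_id), int(movie_id), int(rating), int(timestamp))


# Hypothetical sample lines in the documented format (not real dataset rows).
sample_lines = [
    "1::10::5::978300000",
    "1::20::3::978300100",
    "2::10::4::978300200",
]

ratings = [parse_rating_line(line) for line in sample_lines]
print(ratings[0])
```

In practice you would stream the lines of "ratings.dat" through the same helper, or load the file with a dataframe library of your choice.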

The rating column is the target variable. We transform each rating into a binary label (0 or 1): if the rating is 4 or above, we recommend the movie to the user; if the rating is below 4, we do not. Based on this assumption, we frame the problem as binary classification.

In this workshop, you will build a neural network called a two tower model. A two tower model is a neural network architecture that processes two different types of inputs through separate "towers" to generate embeddings in a shared vector space. Each tower processes its inputs independently, which makes the architecture particularly effective for recommendation systems and retrieval tasks. For more information about two tower models, please refer to these papers here, here and here.

During inference, the system only needs to pass the query/user features through the query tower to generate the query embedding, and then perform a similarity search between the query embedding and the pre-computed item embeddings to find the top recommended items.
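The labeling rule described above (a rating of 4 or higher becomes a positive label) can be sketched as follows. The function name `rating_to_label` and the `threshold` parameter are hypothetical names chosen for illustration.

```python
def rating_to_label(rating: int, threshold: int = 4) -> int:
    """Map a 1-5 star rating to a binary label: 1 = recommend, 0 = do not recommend."""
    if not 1 <= rating <= 5:
        raise ValueError(f"rating must be between 1 and 5, got {rating}")
    return 1 if rating >= threshold else 0


labels = [rating_to_label(r) for r in [5, 3, 4, 1]]
print(labels)  # [1, 0, 1, 0]
```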

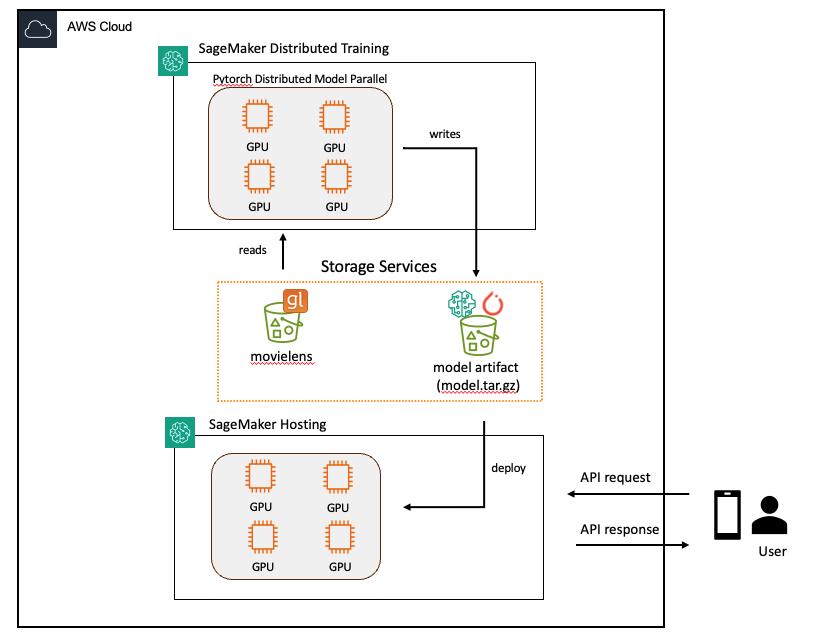
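The inference path described above, where a query embedding is compared against pre-computed item embeddings, can be illustrated with a brute-force search. This is a sketch under stated assumptions: the embeddings are made-up 2-dimensional vectors, the dot product is assumed as the similarity measure, and `top_k_items` is a hypothetical helper. A production system would typically replace the exhaustive scan with an approximate nearest neighbor index.

```python
import heapq
from typing import Dict, List, Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Dot product, used here as the (assumed) similarity measure."""
    return sum(x * y for x, y in zip(a, b))


def top_k_items(
    query_embedding: Sequence[float],
    item_embeddings: Dict[int, Sequence[float]],
    k: int,
) -> List[int]:
    """Return the ids of the k items most similar to the query embedding."""
    scored = (
        (dot(query_embedding, emb), item_id)
        for item_id, emb in item_embeddings.items()
    )
    return [item_id for _, item_id in heapq.nlargest(k, scored)]


# Made-up embeddings standing in for the item tower's pre-computed output.
item_embeddings = {
    101: [1.0, 0.0],
    102: [0.0, 1.0],
    103: [0.7, 0.7],
}
# Made-up vector standing in for the query tower's output for one user.
query_embedding = [0.9, 0.1]

print(top_k_items(query_embedding, item_embeddings, k=2))  # [101, 103]
```

Because the item embeddings do not depend on the query, they can be computed once offline, and only the query tower runs at request time.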

Because the number of user and item interactions can be very large (millions or billions), training a two tower model commonly involves a distributed approach that combines model and data parallelism. This allows large amounts of training data to be sharded across GPU nodes, and the large embedding tables to be distributed across those nodes for training efficiency. We are going to use TorchRec, an open source framework, to build a two tower recommender system.
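To make the two kinds of sharding concrete, the sketch below shows one simple way to split training samples (data parallelism) and embedding table rows (model parallelism) across workers. It is a conceptual illustration only and does not use the TorchRec API: the helper names are hypothetical, and the round-robin and modulo assignment schemes are assumptions. TorchRec plans its own sharding and supports other strategies.

```python
from typing import List


def shard_samples(num_samples: int, world_size: int, rank: int) -> List[int]:
    """Data parallelism: each rank trains on a disjoint subset of sample indices."""
    return list(range(rank, num_samples, world_size))


def embedding_row_owner(row_id: int, world_size: int) -> int:
    """Model parallelism (row-wise): each rank stores a subset of the table rows."""
    return row_id % world_size


world_size = 4  # assumed number of GPU workers

print(shard_samples(10, world_size, rank=1))    # [1, 5, 9]
print(embedding_row_owner(3952, world_size))    # 0
```

With this scheme every sample is processed by exactly one rank per epoch, and a lookup for a given embedding row is routed to the single rank that owns it.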

The end to end architecture for distributed training and inference can be visualized in the following architecture diagram:

If you are attending the Distributed Training and Inference with Pytorch, MLFlow and Amazon SageMaker AI workshop run by AWS, the AWS event facilitator has provided you access to a temporary AWS account preconfigured for this workshop. Proceed to Module 0: Introduction.

If you want to use your own AWS account, you'll have to execute some preliminary configuration steps as described in the Setup Guide.

⚠️ Running this workshop in your AWS account will incur costs. You will need to delete the resources you create to avoid incurring further costs after you have completed the workshop. See the clean up steps.

Wei Teh - Senior AI/ML Specialist Solutions Architect - Amazon Web Services

Asim Jalis - Senior AI/ML/Analytics Solutions Architect - Amazon Web Services

Eddie Reminez - Senior Solutions Architect - Amazon Web Services

Jared Sutherland - Senior Solutions Architect - Amazon Web Services

Vivek Gangassani - Senior Generative AI Specialist Solutions Architect - Amazon Web Services