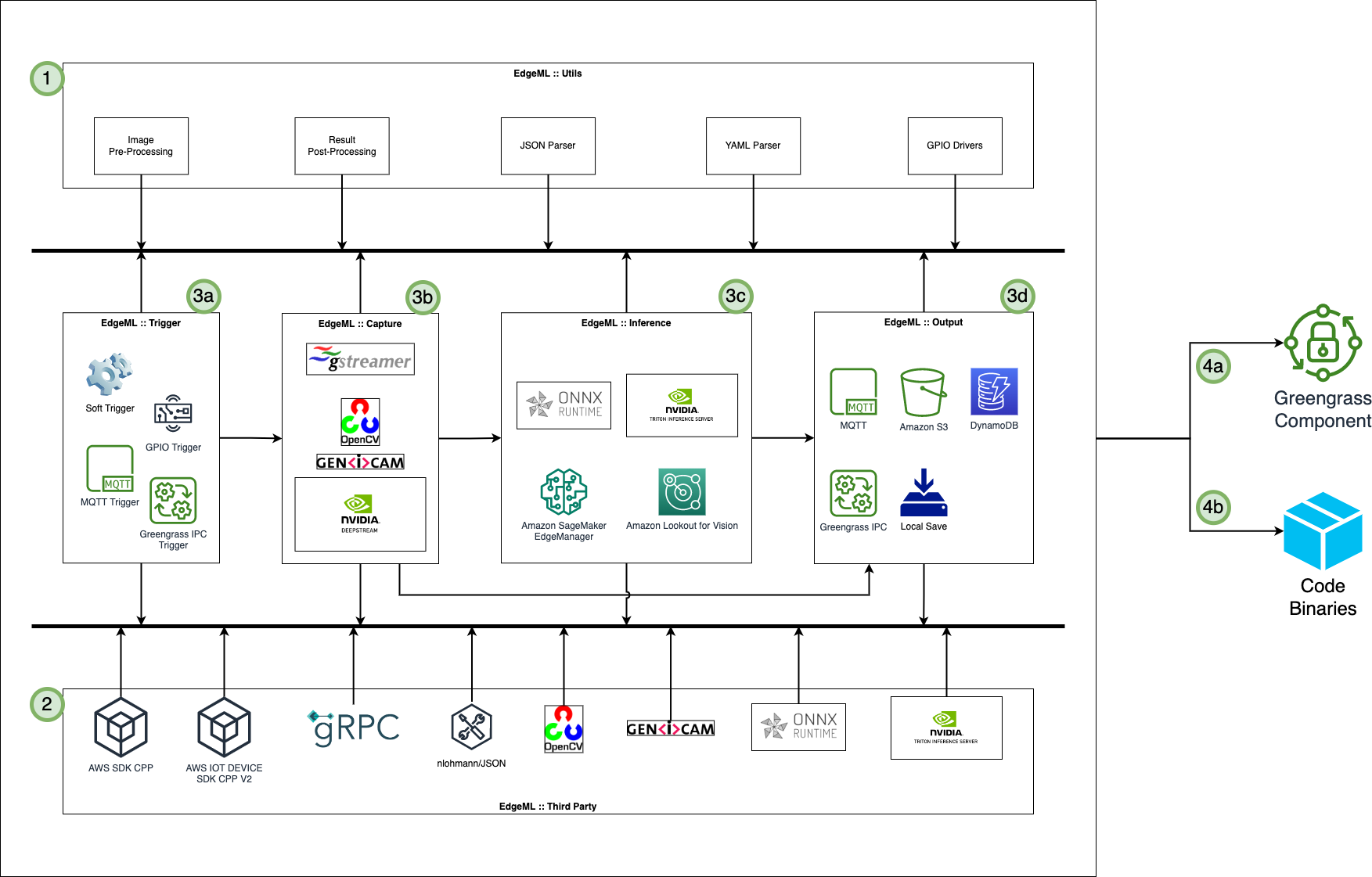

Edge ML Accelerator is a C++-based, highly configurable and repeatable artifact for deploying and running ML models on IoT edge devices. Interfaces such as cameras, GPIO, and outputs are managed by making the required changes to a config file. The accelerator is configurable for the type of camera, number of cameras, ML model framework, single or parallel ML models, and output requirements.

Edge ML Accelerator comprises multiple plugins that interact with each other in a dedicated flow. The flow is defined in a JSON or YAML config file. Based on the flow, the Trigger API, Camera API, Inference API, and Output API are executed. The overall flow is asynchronous and structured so that none of the APIs run into race conditions.

- The flow is as follows:

  1. `edgeml :: utils` is used for general utility tasks like image pre-processing, result post-processing, JSON/YAML parsing, and GPIO interfacing.
  2. `edgeml :: third party` contains the dependencies used to carry out these tasks and run the different plugins.
  3. `edgeml ::` plugins:
     - (a) `edgeml :: trigger` defines how the pipeline is triggered, using different techniques.
     - (b) `edgeml :: capture` determines which API is used to capture an image from a video, an image file, a USB camera, or an IP camera.
     - (c) `edgeml :: inference` runs inference on the captured image using different ML model frameworks.
     - (d) `edgeml :: output` directs the results into various sinks.
  4. Usage:
     - (a) Use AWS IoT Greengrass V2 to deploy and run the component on the edge device.
     - (b) Simply run the binaries on the edge device.
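The asynchronous, race-free flow described above can be pictured with a small standard-library sketch. It assumes hypothetical `Frame` and `Pipeline` types, with plain callbacks standing in for the Trigger, Camera, Inference, and Output APIs, which this README does not show. It is an illustration, not the accelerator's implementation. Stages run in order within one pass, and when several models are configured (the "parallel ML models" case), each model runs in its own `std::async` task over a shared, read-only frame.

```cpp
#include <cassert>
#include <functional>
#include <future>
#include <string>
#include <vector>

// Hypothetical frame type; the real edgeml data types are not shown in this README.
struct Frame {
    int width = 0;
    int height = 0;
    std::vector<unsigned char> pixels;  // interleaved, 3 channels per pixel
};

// Hypothetical callbacks standing in for the four plugin APIs.
struct Pipeline {
    std::function<bool()> trigger;                                  // edgeml :: trigger
    std::function<Frame()> capture;                                 // edgeml :: capture
    std::vector<std::function<std::string(const Frame&)>> models;  // edgeml :: inference
    std::function<void(const std::vector<std::string>&)> output;   // edgeml :: output
};

// Runs one pass of the flow. Returns false if the trigger did not fire.
bool run_once(const Pipeline& p) {
    assert(p.trigger && p.capture && p.output);  // all stages must be configured
    if (!p.trigger()) return false;

    // The captured frame is read-only from here on, so the models
    // below can share it without locking.
    const Frame frame = p.capture();

    // Launch every model in parallel; each task writes only to its own future.
    std::vector<std::future<std::string>> pending;
    pending.reserve(p.models.size());
    for (const auto& model : p.models) {
        pending.push_back(std::async(std::launch::async,
                                     [&model, &frame] { return model(frame); }));
    }

    // Join in model order, then hand all results to the output stage.
    std::vector<std::string> results;
    results.reserve(pending.size());
    for (auto& f : pending) results.push_back(f.get());
    p.output(results);
    return true;
}
```

Keeping the frame immutable and giving each model its own future avoids shared mutable state between tasks, so no mutex is needed. On GCC or Clang you may need to link with `-pthread`.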


- In order to build the binaries:

$ cd {Edge ML Accelerator Project Directory}

$ bash buildscripts/localbuild.sh -{flags}

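Flags for `localbuild.sh` (the letters can be combined in any order):

| | sudo | Skip Updates | sudo + Skip Updates |
|---|---|---|---|
| EdgeML + 3rd Party | s | o | os/so |
| EdgeML Only | es/se | oe/eo | eos/eso/ose/oes/seo/soe |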

CMake flags:

- `-DCMAKE_BUILD_TESTS=ON/OFF`: build and test the different modes
- `-DUSE_PYLON=ON/OFF`: build Pylon Capture and use it in the other plugins
- `-DLOGS_LEVEL=0/1/2/3/4/5`: display detailed logs
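One common way a numeric log-level option is consumed in C++ is as a preprocessor definition that filters messages. The sketch below assumes the CMake option reaches the sources as a `LOGS_LEVEL` macro and that higher values mean more detail. Both points are assumptions, as are the helper names `log_enabled`, `format_log`, and `log_at`; the accelerator's actual logging code is not shown here.

```cpp
#include <cassert>
#include <iostream>
#include <string>

// Assumption: the CMake option reaches the sources as a preprocessor
// definition (for example via add_compile_definitions). Default to 0.
#ifndef LOGS_LEVEL
#define LOGS_LEVEL 0
#endif

// Hypothetical convention: a message tagged with level N is kept only when
// N <= LOGS_LEVEL, so larger LOGS_LEVEL values produce more detailed output.
constexpr bool log_enabled(int level) { return level <= LOGS_LEVEL; }

// Returns the formatted line, or an empty string if the level is filtered out.
std::string format_log(int level, const std::string& msg) {
    assert(level >= 0 && level <= 5);  // the README documents levels 0..5
    if (!log_enabled(level)) return {};
    return "[L" + std::to_string(level) + "] " + msg;
}

// Writes enabled messages to std::clog.
void log_at(int level, const std::string& msg) {
    const std::string line = format_log(level, msg);
    if (!line.empty()) std::clog << line << '\n';
}
```

Compiling with, for example, `g++ -DLOGS_LEVEL=3 ...` would keep messages at levels 0 to 3 under this convention. Because `log_enabled` is `constexpr`, the comparison can be evaluated at compile time.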

$ export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:`pwd`/package/lib

$ export PATH=$PATH:`pwd`/package/bin

$ export GENICAM_GENTL64_PATH=`pwd`/package/lib/rc_genicam_api

Assuming the Pylon SDK has been downloaded, untarred, and saved in /opt/pylon/:

$ export PATH=$PATH:/opt/pylon/bin

$ export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/opt/pylon/lib

$ export EDGE_ML_CONFIG=`pwd`/examples/example_config_GENERIC.json

-OR-

$ export EDGE_ML_CONFIG=`pwd`/examples/example_config_GENERIC.yml
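Since the same variable can point to either a JSON or a YAML file, an application has to choose a parser from the path. Below is a minimal sketch of reading `EDGE_ML_CONFIG` and detecting the format by file extension. The function names and the `ConfigFormat` enum are hypothetical, and accepting `.yaml` in addition to the `.yml` used in the examples is an assumption. The accelerator's actual config loader is not documented here.

```cpp
#include <cassert>
#include <cstdlib>
#include <stdexcept>
#include <string>

enum class ConfigFormat { Json, Yaml };

// Picks a parser from the file extension. Accepting ".yaml" as well as
// ".yml" is an assumption; the README examples only use ".json" and ".yml".
ConfigFormat detect_format(const std::string& path) {
    assert(!path.empty() && "config path must not be empty");
    auto ends_with = [&path](const std::string& suffix) {
        return path.size() >= suffix.size() &&
               path.compare(path.size() - suffix.size(), suffix.size(), suffix) == 0;
    };
    if (ends_with(".json")) return ConfigFormat::Json;
    if (ends_with(".yml") || ends_with(".yaml")) return ConfigFormat::Yaml;
    throw std::invalid_argument("unsupported config extension: " + path);
}

// Reads EDGE_ML_CONFIG; throws if it is unset or empty.
std::string config_path_from_env() {
    const char* value = std::getenv("EDGE_ML_CONFIG");
    if (value == nullptr || *value == '\0')
        throw std::runtime_error("EDGE_ML_CONFIG is not set");
    return value;
}
```

A caller would typically combine the two, e.g. `detect_format(config_path_from_env())`, and fail early with a clear message if the variable was not exported.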

To run the tests, from the main directory:

$ export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:`pwd`/package/lib

$ export PATH=$PATH:`pwd`/package/bin

$ export EDGE_ML_CONFIG=/path/to/config.json

-OR-

$ export EDGE_ML_CONFIG=/path/to/config.yml

$ ctest

To run the pipeline app (`NUMITER` is passed as its first argument):

$ export NUMITER=5

$ cd build

$ ./package/bin/pipeline_app $NUMITER

By default, the EdgeML accelerator connects to `localhost:8001` using gRPC. The system produces two inputs by default: an image of any size with 3 channels (either RGB or BGR), and a metadata input as a string. For example, this metadata can be an output folder or a stringified JSON, among others. The response must be a stringified JSON encoding the predictions. For example, if you only wish to send the classification label or a base64 image, put it in a JSON in the post-processing part of an ensemble backend. See this Gitlab link for examples of object detection and unsupervised anomaly detection.
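The two-input contract above can be sketched without any client library: validate that the image has 3 channels, record its `{height, width, 3}` shape, and encode the metadata as a stringified JSON. The `InferRequest` struct, the helper names, and the `outfolder` metadata key are hypothetical. The README does not name the server-side input tensors, and real requests would be sent through the gRPC client rather than this struct.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdio>
#include <stdexcept>
#include <string>
#include <utility>
#include <vector>

// Hypothetical container for the two inputs described above.
struct InferRequest {
    std::vector<int64_t> image_shape;  // {height, width, 3}
    std::vector<uint8_t> image_bytes;  // interleaved HWC pixels (RGB or BGR)
    std::string metadata;              // free-form string, here a stringified JSON
};

// Escapes a string for use inside a JSON string literal.
std::string json_escape(const std::string& s) {
    std::string out;
    for (char c : s) {
        switch (c) {
            case '"':  out += "\\\""; break;
            case '\\': out += "\\\\"; break;
            case '\n': out += "\\n"; break;
            case '\t': out += "\\t"; break;
            default:
                if (static_cast<unsigned char>(c) < 0x20) {
                    char buf[7];  // "\u" + 4 hex digits + NUL
                    std::snprintf(buf, sizeof buf, "\\u%04x", static_cast<unsigned char>(c));
                    out += buf;
                } else {
                    out += c;
                }
        }
    }
    return out;
}

// Builds the image input plus a metadata string such as {"outfolder":"..."}.
InferRequest make_request(int height, int width, std::vector<uint8_t> pixels,
                          const std::string& outfolder) {
    if (height <= 0 || width <= 0)
        throw std::invalid_argument("image dimensions must be positive");
    const std::size_t expected = static_cast<std::size_t>(height) * width * 3;
    if (pixels.size() != expected)
        throw std::invalid_argument("expected a 3-channel (RGB or BGR) image");
    InferRequest req;
    req.image_shape = {height, width, 3};
    req.image_bytes = std::move(pixels);
    req.metadata = "{\"outfolder\":\"" + json_escape(outfolder) + "\"}";
    assert(req.image_bytes.size() == expected);
    return req;
}
```

Because the image size is free, the shape travels with each request instead of being fixed in advance. Any other metadata (for example a different stringified JSON) can be placed in the same string input.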

- Adding a better way of versioning

- Adding DynamoDB and DeepStream support

- To build the C++ plugins, the project uses CMake >= 3.18.0

- The project utilizes multiple submodules and dependencies with specific versions as follows:
  - AWS IoT Device SDK for C++ v2 == tags/v1.15.2
  - AWS SDK CPP == tags/1.9.253
  - GRPC == tags/v1.46.2
  - OPENCV == tags/4.6.0
  - RC_GENICAM == tags/v.2.5.14
  - TRITON CLIENT == commit/3d05400
  - ONNXRUNTIME == tags/v1.13.1
  - NLOHMANN/JSON == tags/v.3.11.2

- For Pylon, download the respective SDK from here and unzip it into /opt/pylon.

For any issues, queries, concerns, updates, or requests, kindly contact: