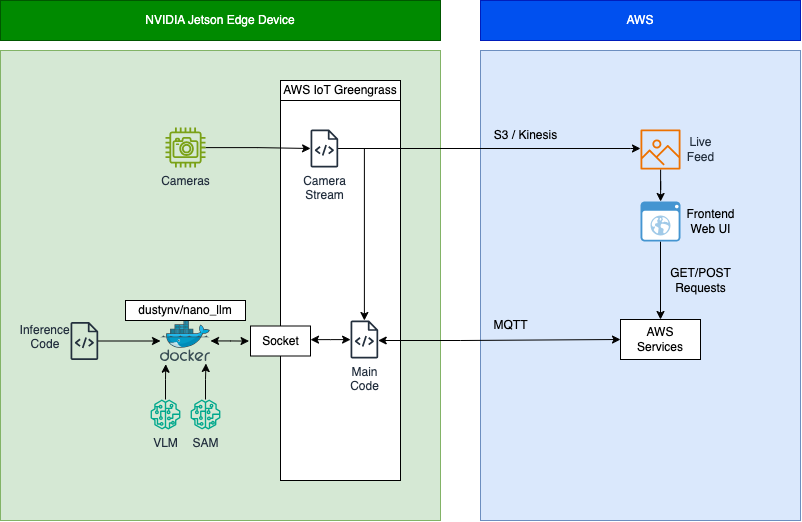

This repository demonstrates how to use AWS IoT GreengrassV2 to run Generative AI models on edge devices, specifically NVIDIA Jetson devices. The work focuses on running large models at the edge for a cheaper, faster, and more efficient solution. AWS IoT GreengrassV2 runs on the edge device and handles communication between AWS services and the device. Once hosted on an edge device that is connected to a camera, the models accept text prompts to capture images and answer queries using the chosen model. Queries to the device and responses back to the cloud are exchanged over the MQTT protocol.

Currently the sample supports 2 types of GenAI models:

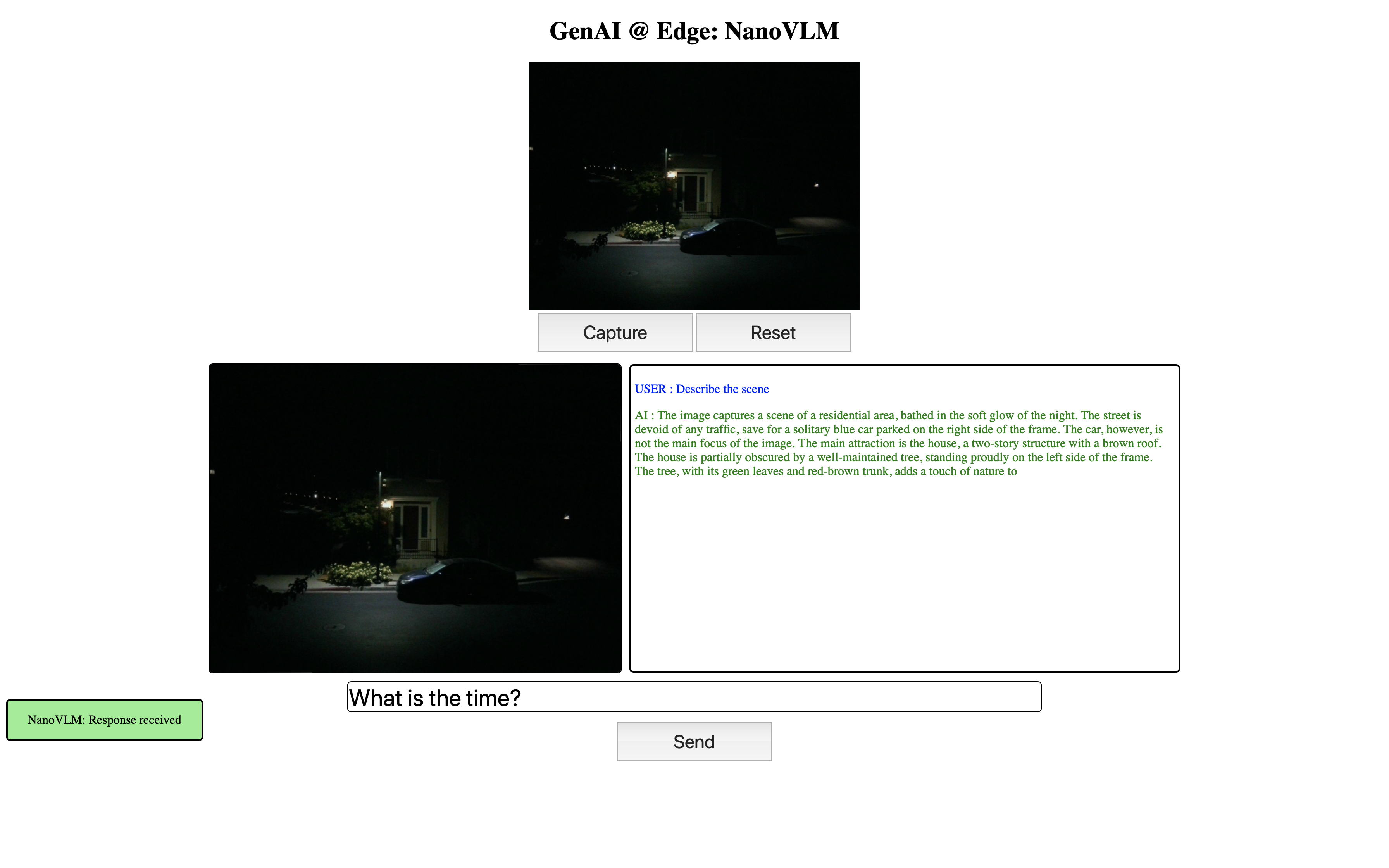

- NanoVLM - A Visual Language Model used for Text+Image to Text, based on the Jetson AI Lab NanoVLM example.

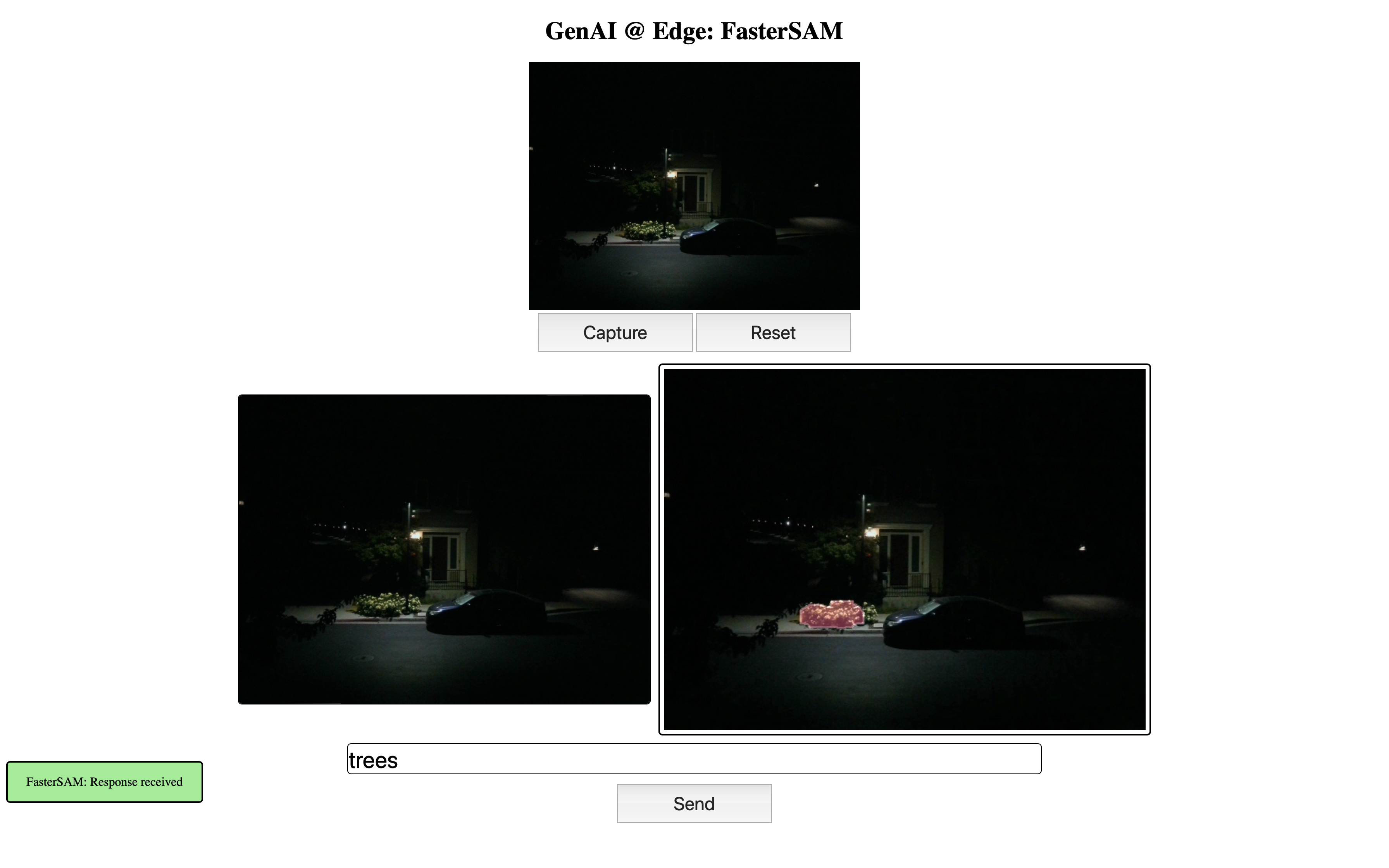

- FasterSAM - A variant of FastSAM, a Segment Anything Model, used for segmenting pixels based on text queries.

Note: This sample is intended for NVIDIA Jetson-like edge devices and has been tested on NVIDIA Jetson Orin 8/16/32 GB and Jetson Orin Nano.


Requirements:

- NVIDIA Jetson device with >32GB free memory

- Local PC or EC2 instance running Ubuntu on ARM64


Models used:

- NanoVLM:

- openai/clip-vit-large-patch14-336 - MIT-0 License

- Efficient-Large-Model/VILA-2.7b - Apache 2.0 License

- FasterSAM:

- ultralytics/yolov8s-seg - Apache 2.0 License

- jinaai/clip-models/ViT-B-32-textual - Apache 2.0 License

- jinaai/clip-models/ViT-B-32-visual - Apache 2.0 License


Prerequisites:

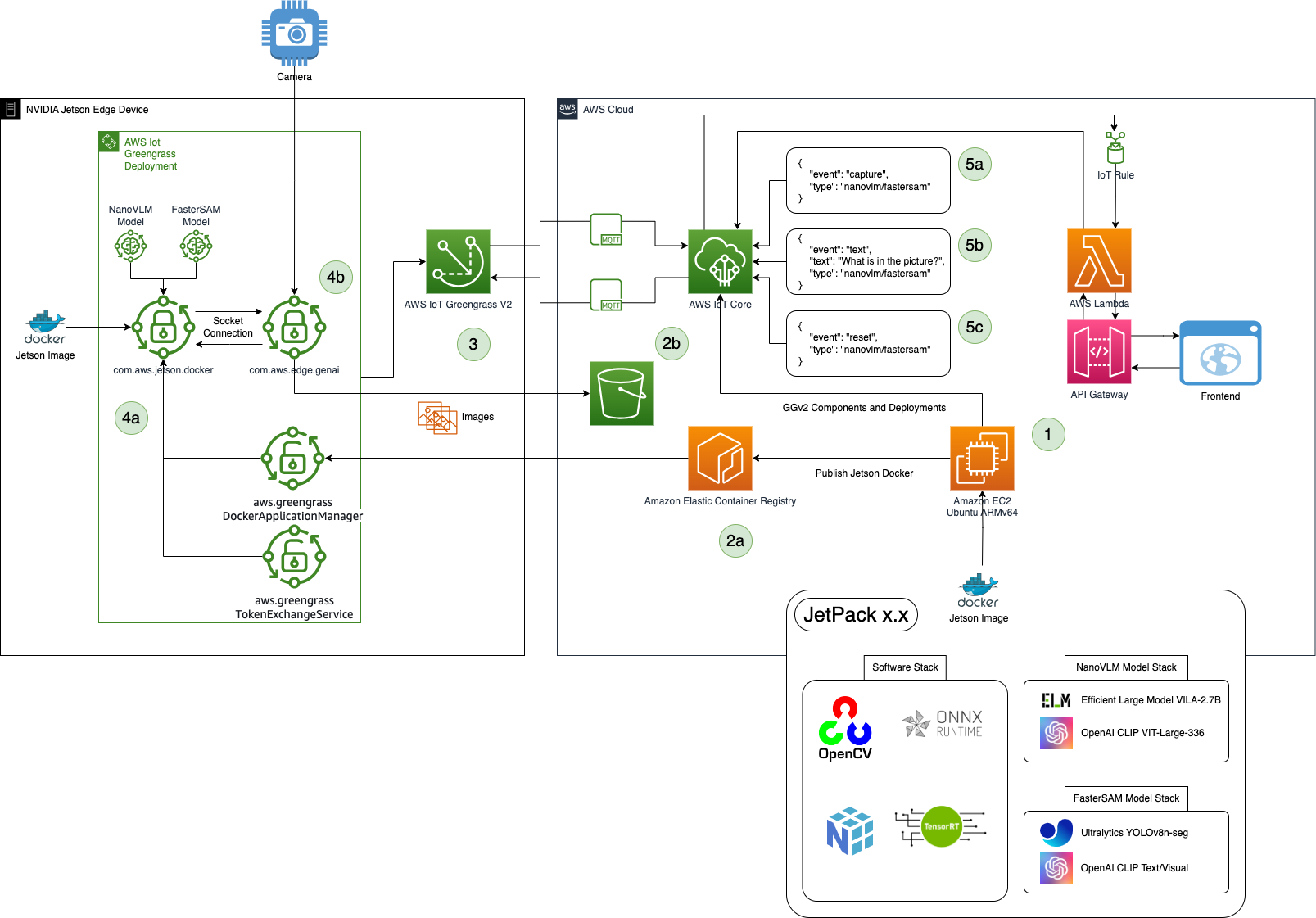

The image above shows how the Jetson device connects to AWS services to run different GenAI models at the edge. The services used and their integration with the edge device are detailed below.


Follow these steps after the edge device is provisioned for AWS IoT GreengrassV2:


- Clone the GitHub repository on a local PC or an Amazon EC2 instance running Ubuntu on ARM64, then export the required environment variables:

  ```
  $ export AWS_ACCOUNT_NUM="ADD_ACCOUNT_NUMBER"
  $ export AWS_REGION="ADD_REGION"
  $ export DEV_IOT_THING="NAME_OF_IOT_THING"
  $ export DEV_IOT_THING_GROUP="NAME_OF_IOT_THING_GROUP"
  ```

- Build the Jetson Docker image and publish it to Amazon ECR, and build the GreengrassV2 components using GDK:

- (a) The Jetson Docker image is stored on Amazon ECR.

- (b) The GreengrassV2 components are built and published, and deployments are sent to AWS IoT Core and then to the edge device.

- The edge device is provisioned for GreengrassV2 in the AWS account and has the required GreengrassV2 components, namely:

- com.aws.jetson.docker: This component downloads the Jetson Docker image from the image stored in ECR and runs it.

- [aws.greengrass.DockerApplicationManager](https://docs.aws.amazon.com/greengrass/v2/developerguide/docker-application-manager-component.html): The Docker application manager component enables AWS IoT Greengrass to download Docker images.

- [aws.greengrass.TokenExchangeService](https://docs.aws.amazon.com/greengrass/v2/developerguide/token-exchange-service-component.html): The token exchange service component provides AWS credentials that you can use to interact with AWS services in your custom components.

- com.aws.edge.genai: This component is responsible for communicating with AWS IoT Core using MQTT and with the `com.aws.jetson.docker` component using socket communication.


- How the components work together on the device:

- (a) The `com.aws.jetson.docker` component runs the Docker container and communicates over a socket with the `com.aws.edge.genai` component.

- (b) The `com.aws.edge.genai` component is responsible for connecting to the camera and for relaying between the cloud and the Docker container, using MQTT and a socket respectively, to run inference and send results to AWS IoT Core.

- MQTT commands to run the models on the edge device (in each message, `type` is either `"nanovlm"` or `"fastersam"`):

- (a) CAPTURE: Captures an image on the device, which is sent to the GenAI model identified by `type`:

  ```
  { "event": "capture", "type": "nanovlm" / "fastersam" }
  ```

- (b) TEXT: Sends a prompt to the model for inference; the output response is sent to the MQTT client:

  ```
  { "event": "text", "text": "Describe the scene" / "Segment trees and roads", "type": "nanovlm" / "fastersam" }
  ```

- (c) RESET: Resets the image and the history:

  ```
  { "event": "reset", "type": "nanovlm" / "fastersam" }
  ```

- Responses published to AWS IoT Core over MQTT can be received and processed as required. For example, an AWS IoT rule can route responses through AWS Lambda so that results are visualized through an Amazon API Gateway endpoint connected to a frontend UI. Example frontend UIs are shown here, using a locally hosted HTML web page or a Streamlit application.
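The three MQTT command messages described above can be assembled and checked programmatically before they are published. The following is a minimal sketch, not the repository's own client code: `build_command` and `publish_command` are hypothetical helper names, and the `client` argument is only assumed to expose a `publish(topic=..., qos=..., payload=...)` method, as the boto3 `iot-data` client does.

```python
import json

# Event names and model types taken from the command list in this README.
EVENTS = {"capture", "text", "reset"}
MODEL_TYPES = {"nanovlm", "fastersam"}


def build_command(event, model_type, text=None):
    """Return the JSON payload for one command message (hypothetical helper)."""
    if event not in EVENTS:
        raise ValueError(f"unknown event: {event!r}")
    if model_type not in MODEL_TYPES:
        raise ValueError(f"unknown model type: {model_type!r}")
    message = {"event": event}
    if event == "text":
        # Only the "text" event carries a prompt.
        if not text:
            raise ValueError("a 'text' event needs a non-empty prompt")
        message["text"] = text
    message["type"] = model_type
    return json.dumps(message)


def publish_command(client, topic, payload):
    """Publish a payload with QoS 1.

    `client` is assumed to behave like boto3.client("iot-data");
    the topic name is chosen by the caller and is not defined in this README.
    """
    client.publish(topic=topic, qos=1, payload=payload)


if __name__ == "__main__":
    print(build_command("capture", "nanovlm"))
    print(build_command("text", "fastersam", "Segment trees and roads"))
    print(build_command("reset", "nanovlm"))
```

Validating the `event` and `type` fields on the sending side keeps malformed requests from reaching the device. The topic passed to `publish_command` must match whatever topic the `com.aws.edge.genai` component subscribes to.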

- Use the Blog to provision an edge device such as an NVIDIA Jetson with IoT Greengrass V2. Also use the Docs to configure Docker-related settings on the device.

- Alternatively, run the following script on the edge device:

  ```
  [On Edge Device]
  $ git clone https://github.com/aws-samples/genai-at-edge
  $ cd genai-at-edge/greengrass
  $ chmod u+x provisioning.sh
  $ ./provisioning.sh
  [Check JetPack version of Jetson device]
  $ sudo apt-cache show nvidia-jetpack
  ```

- The `provisioning.sh` script only works on Ubuntu-based systems.

- It prompts for AWS credentials; if they are already configured, press Enter to skip the prompt.

- It prompts for the names of the `IoT Thing` and `IoT Thing Group`; if none are entered, default values are used.

- Once completed, the `IoT Thing` and its `IoT Thing Group` are available on the AWS Console.
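The JetPack version reported by `apt-cache show nvidia-jetpack` determines which base image and Dockerfile to use when building the container. The sketch below shows one way to make that choice from the command's output. It assumes the output contains a `Version:` field such as `Version: 5.1.2-b104` and that the first such field is the relevant one; `jetpack_major` and `build_targets` are hypothetical helper names, and the sample text is illustrative rather than captured from a device.

```python
import re

# Mapping taken from the build commands in this README:
# JetPack 5 uses the r35.4.1 image, JetPack 6 uses the r36.2.0 image.
JETPACK_TO_L4T = {5: "r35.4.1", 6: "r36.2.0"}


def jetpack_major(apt_cache_output):
    """Extract the JetPack major version from `apt-cache show` output."""
    match = re.search(r"^Version:\s*(\d+)\.", apt_cache_output, flags=re.MULTILINE)
    if match is None:
        raise ValueError("no 'Version:' field found in apt-cache output")
    return int(match.group(1))


def build_targets(apt_cache_output):
    """Return the base image and Dockerfile path for the detected JetPack."""
    major = jetpack_major(apt_cache_output)
    if major not in JETPACK_TO_L4T:
        raise ValueError(f"unsupported JetPack major version: {major}")
    tag = JETPACK_TO_L4T[major]
    return {
        "base_image": f"dustynv/nano_llm:{tag}",
        "dockerfile": f"docker/Dockerfile.{tag}",
    }


if __name__ == "__main__":
    # Illustrative sample, not real device output.
    sample = "Package: nvidia-jetpack\nVersion: 5.1.2-b104\nArchitecture: arm64\n"
    print(build_targets(sample))
```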

```
$ export ALGO_NAME="genai-at-edge"
[For JetPack 5]
$ docker pull dustynv/nano_llm:r35.4.1 && docker build -t ${ALGO_NAME} -f docker/Dockerfile.r35.4.1 .
[For JetPack 6]
$ docker pull dustynv/nano_llm:r36.2.0 && docker build -t ${ALGO_NAME} -f docker/Dockerfile.r36.2.0 .
[Log in to Amazon ECR]
$ aws ecr get-login-password --region ${AWS_REGION} | docker login --username AWS --password-stdin ${AWS_ACCOUNT_NUM}.dkr.ecr.${AWS_REGION}.amazonaws.com
[Run once to create the ECR repository]
$ aws ecr create-repository --repository-name ${ALGO_NAME}
[Tag and push the image]
$ docker tag ${ALGO_NAME} ${AWS_ACCOUNT_NUM}.dkr.ecr.${AWS_REGION}.amazonaws.com/${ALGO_NAME}:latest
$ docker push ${AWS_ACCOUNT_NUM}.dkr.ecr.${AWS_REGION}.amazonaws.com/${ALGO_NAME}:latest
```
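The image URI used in the tag and push commands is composed from the `AWS_ACCOUNT_NUM`, `AWS_REGION`, and `ALGO_NAME` environment variables. As a rough sketch, the same URI can be assembled and sanity-checked in Python before running the Docker commands. The helper names `ecr_image_uri` and `uri_from_env` are hypothetical, the account number in the example is a placeholder, and the `amazonaws.com` suffix is assumed to apply to the chosen region.

```python
import os

REQUIRED_VARS = ("AWS_ACCOUNT_NUM", "AWS_REGION", "ALGO_NAME")


def ecr_image_uri(account, region, repository, tag="latest"):
    """Compose the ECR image URI in the form used by the docker tag/push commands."""
    # AWS account numbers are 12-digit strings.
    if not (account.isdigit() and len(account) == 12):
        raise ValueError("AWS account number must be a 12-digit string")
    return f"{account}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def uri_from_env(env=os.environ):
    """Build the URI from the environment variables exported earlier."""
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise KeyError(f"missing environment variables: {', '.join(missing)}")
    return ecr_image_uri(env["AWS_ACCOUNT_NUM"], env["AWS_REGION"], env["ALGO_NAME"])


if __name__ == "__main__":
    # Placeholder values for illustration only.
    example_env = {
        "AWS_ACCOUNT_NUM": "123456789012",
        "AWS_REGION": "us-east-1",
        "ALGO_NAME": "genai-at-edge",
    }
    print(uri_from_env(example_env))
    # prints 123456789012.dkr.ecr.us-east-1.amazonaws.com/genai-at-edge:latest
```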

```
$ python3 -m pip install -U git+https://github.com/aws-greengrass/aws-greengrass-gdk-cli.git@v1.2.0
[Install jq for Linux]
$ sudo apt-get install jq
[Install jq for macOS]
$ brew install jq
$ cd greengrass/
$ chmod u+x deploy-gdk-build.sh
$ ./deploy-gdk-build.sh
[Upon request for options to build components, choose accordingly]
Select update option:
1. Update Component 1: EdgeGenAI
2. Update Component 2: Jetson Docker
3. Update All components (EdgeGenAI & Jetson Docker)
Enter your choice (1/2/3):
```

```
[Run the code as follows to clean up]
$ cd greengrass/
$ python3 cleanup_gg.py
```

This project is loosely based on Jetson tutorials for GenAI, such as:

- https://www.jetson-ai-lab.com/tutorial_nano-vlm.html

- https://hub.docker.com/r/dustynv/nano_llm

- https://github.com/dusty-nv/NanoLLM

- https://github.com/CASIA-IVA-Lab/FastSAM


See CONTRIBUTING for more information.

This library is licensed under the MIT-0 License. See the LICENSE file.