This repository outlines and provides a sample framework for monitoring your generative AI-based applications on AWS. It covers what to monitor, and how, for your application, users, and LLM(s).

The use case used throughout this repository is a simple conversational interface that generates AWS CloudFormation templates from natural language instructions.

NOTE) The architecture in this repository is for development purposes only and will incur costs.

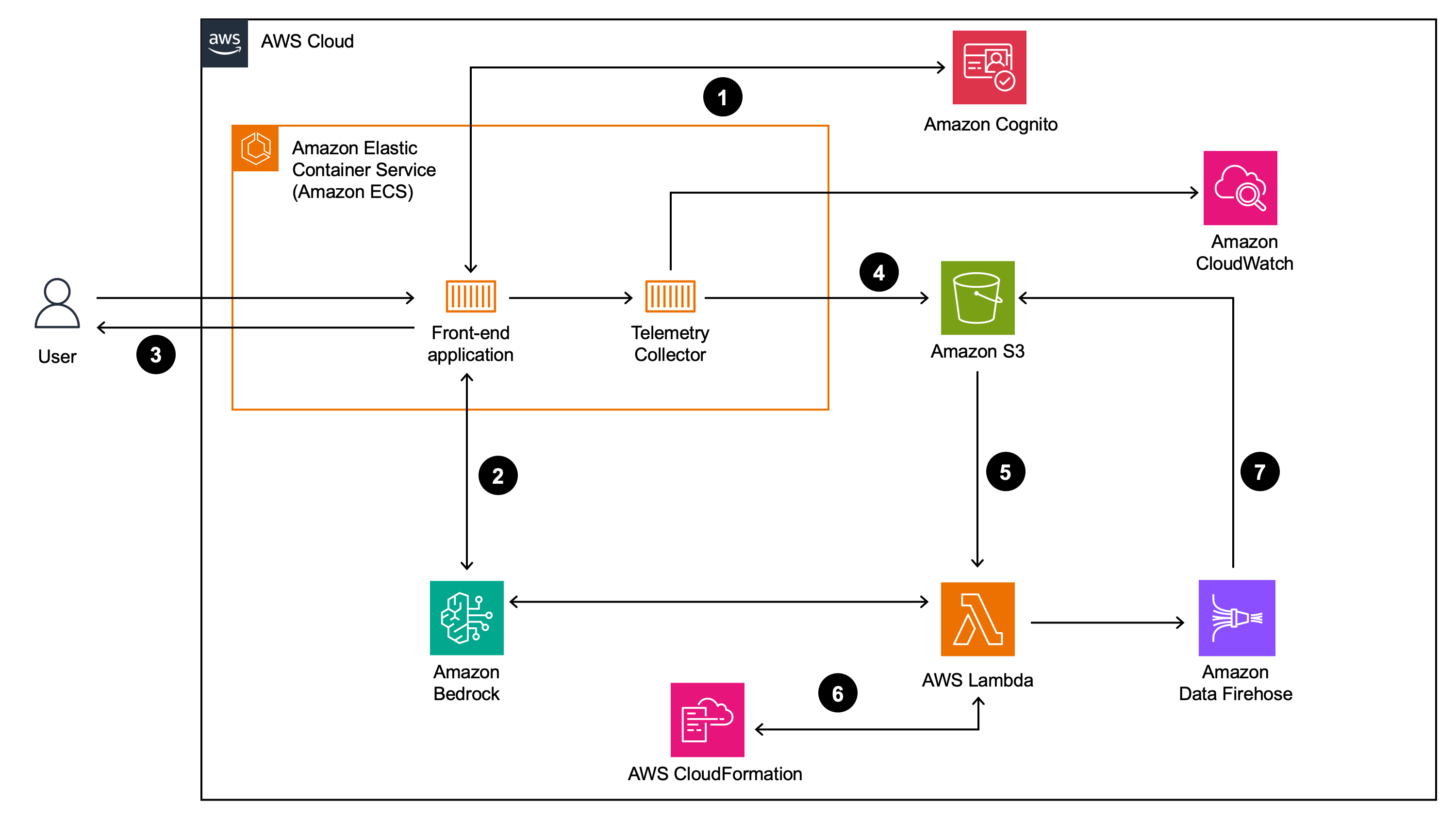

For the demonstration in this repository, you will send your prompts from a Streamlit front-end application, hosted on Amazon Elastic Container Service (ECS), to Amazon Bedrock. All prompts, responses, metrics and analysis will be stored in Amazon Simple Storage Service (S3). The following is a detailed outline of the architecture in this repository:

- A user logs in to the front-end application using an Amazon Cognito identity

- User prompts are submitted from the front-end application to a large language model (LLM) on Amazon Bedrock. These prompt inputs and outputs are tracked using OpenLLMetry, an open source telemetry tool designed specifically for monitoring LLM applications

- The LLM response is returned to the user and the conversational memory is preserved in the front-end application

- Using the open source OpenTelemetry collector container, ECS exports OpenLLMetry traces to S3 and Amazon CloudWatch

- These traces are then post-processed by an AWS Lambda function which extracts relevant information from the trace JSON packets

- The post-processed information is then sent to various APIs for evaluation of the model responses. In this example, we use LLMs on Amazon Bedrock to evaluate the outputs of the original LLM, a pattern known as LLM-as-a-judge. We also validate any generated CloudFormation templates using the CloudFormation ValidateTemplate API

- The results of this evaluation are then sent to Amazon Data Firehose to be written to Amazon S3 for consumption in downstream systems including, but not limited to, reporting stacks, analytics ecosystems, or LLM feedback mechanisms
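The template validation step above maps to a single CloudFormation API operation. As a minimal sketch of that check from a shell, the AWS CLI exposes ValidateTemplate as `aws cloudformation validate-template`. The function name `validate_template` and the file name `generated-template.yaml` are illustrative assumptions, not names from this repository, and the repository's own Lambda function may well call the API through an SDK instead.

```bash
# Hypothetical helper: validate a CloudFormation template stored in a local file.
# Assumes the AWS CLI is installed and configured with credentials that allow
# cloudformation:ValidateTemplate.
validate_template() {
  # "$1" is the path to the template file; file:// tells the CLI to read it from disk.
  aws cloudformation validate-template --template-body "file://$1"
}
```

For example, `validate_template generated-template.yaml` returns a summary of the template when CloudFormation accepts it and a validation error when it does not. A passing result only means the template is well formed; it does not guarantee that the stack would deploy successfully.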

The illustration below details what this solution will look like once fully implemented.

To follow along with this repository, you will need an AWS account, an Amazon Bedrock supported region, access to Amazon Bedrock, access to the AWS CLI, and permissions to create AWS Identity and Access Management (IAM) roles and policies, AWS Lambda functions, an Amazon ECS cluster, an Amazon Elastic Container Registry (ECR) repository, Amazon S3 buckets, and Amazon Data Firehose streams. In addition, you will need an existing hosted zone in Amazon Route 53 and an existing wildcard certificate in AWS Certificate Manager (ACM). Finally, you will need Docker, Node.js and the AWS Cloud Development Kit (CDK) installed locally. We also assume you are familiar with the basics of Linux bash commands.
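Before deploying, it can help to confirm that the local tools listed above are on your `PATH`. The following is a minimal sketch; the function name `missing_tools` is an illustrative assumption, and it only checks that each command exists, not its version or your AWS permissions.

```bash
# Hypothetical helper: print the name of every listed command that is not on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Check the local prerequisites named above; no output means all were found.
missing_tools aws docker node npm cdk
```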

- Create two subdomains under your Amazon Route 53 hosted zone. One will be for the Application Load Balancer and the other will be for the front-end application URL. For example, if your hosted zone is `foo.com`, you could pick `alb1.foo.com` and `app1.foo.com`.

- Run the AWS CDK commands below to deploy this application.

  - NOTE) If this is your first time deploying this application, make sure you install all Node.js packages by running `npm install` from the `cdk` folder


```bash
# Ensure you update all placeholder values in the commands below

# Change directories to the cdk directory
cd cdk

# Bootstrap your environment
cdk bootstrap \
  -c domainPrefix=<Enter Custom Domain Prefix to be used for Amazon Cognito> \
  -c appCustomDomainName=<Enter Custom Domain Name to be used for Front-end application> \
  -c loadBalancerOriginCustomDomainName=<Enter Custom Domain Name to be used for Load Balancer Origin> \
  -c customDomainRoute53HostedZoneID=<Enter Route53 Hosted Zone ID for the Custom Domain being used> \
  -c customDomainRoute53HostedZoneName=<Enter Route53 Hosted Zone Name for the Custom Domain being used> \
  -c customDomainCertificateArn=<Enter ACM Certificate ARN for Custom Domains provided>

# Synthesize your environment
cdk synth \
  -c domainPrefix=<Enter Custom Domain Prefix to be used for Amazon Cognito> \
  -c appCustomDomainName=<Enter Custom Domain Name to be used for Front-end application> \
  -c loadBalancerOriginCustomDomainName=<Enter Custom Domain Name to be used for Load Balancer Origin> \
  -c customDomainRoute53HostedZoneID=<Enter Route53 Hosted Zone ID for the Custom Domain being used> \
  -c customDomainRoute53HostedZoneName=<Enter Route53 Hosted Zone Name for the Custom Domain being used> \
  -c customDomainCertificateArn=<Enter ACM Certificate ARN for Custom Domains provided>

# Deploy your stack
cdk deploy --all \
  --stack-name ObserveLLMStack \
  -c domainPrefix=<Enter Custom Domain Prefix to be used for Amazon Cognito> \
  -c appCustomDomainName=<Enter Custom Domain Name to be used for Front-end application> \
  -c loadBalancerOriginCustomDomainName=<Enter Custom Domain Name to be used for Load Balancer Origin> \
  -c customDomainRoute53HostedZoneID=<Enter Route53 Hosted Zone ID for the Custom Domain being used> \
  -c customDomainRoute53HostedZoneName=<Enter Route53 Hosted Zone Name for the Custom Domain being used> \
  -c customDomainCertificateArn=<Enter ACM Certificate ARN for Custom Domains provided>
```

- Ensure you have granted model access to the Anthropic Claude Instant model in Amazon Bedrock.
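The three `cdk` commands above pass the same six `-c` context values. As an optional convenience, and not something this repository provides, a small wrapper can hold those values in one place. The variable names and the `run_cdk` function below are illustrative assumptions; the context keys are the ones used in the commands above.

```bash
# Placeholder values: replace each one before running, as in the commands above.
DOMAIN_PREFIX="<Enter Custom Domain Prefix to be used for Amazon Cognito>"
APP_DOMAIN="<Enter Custom Domain Name to be used for Front-end application>"
ALB_DOMAIN="<Enter Custom Domain Name to be used for Load Balancer Origin>"
HOSTED_ZONE_ID="<Enter Route53 Hosted Zone ID for the Custom Domain being used>"
HOSTED_ZONE_NAME="<Enter Route53 Hosted Zone Name for the Custom Domain being used>"
CERTIFICATE_ARN="<Enter ACM Certificate ARN for Custom Domains provided>"

# Hypothetical wrapper: runs any cdk subcommand (plus extra flags) with the
# shared context values appended.
run_cdk() {
  cdk "$@" \
    -c "domainPrefix=${DOMAIN_PREFIX}" \
    -c "appCustomDomainName=${APP_DOMAIN}" \
    -c "loadBalancerOriginCustomDomainName=${ALB_DOMAIN}" \
    -c "customDomainRoute53HostedZoneID=${HOSTED_ZONE_ID}" \
    -c "customDomainRoute53HostedZoneName=${HOSTED_ZONE_NAME}" \
    -c "customDomainCertificateArn=${CERTIFICATE_ARN}"
}
```

From the `cdk` directory, the three steps then become `run_cdk bootstrap`, `run_cdk synth`, and `run_cdk deploy --all --stack-name ObserveLLMStack`. Keeping the values in one place reduces the chance of a typo making the synthesized and deployed configurations differ.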

- Create an Amazon Cognito user in the user pool created in the last step.

```bash
aws cognito-idp admin-create-user --user-pool-id <REPLACE_WITH_POOL_ID> --username <REPLACE_WITH_USERNAME> --temporary-password <REPLACE_WITH_TEMP_PASSWORD>
```

- Open the front-end application using the URL given in the CDK output `AppURL`. You will need to log in with the Amazon Cognito identity created in step 2. You will be prompted to update the password.

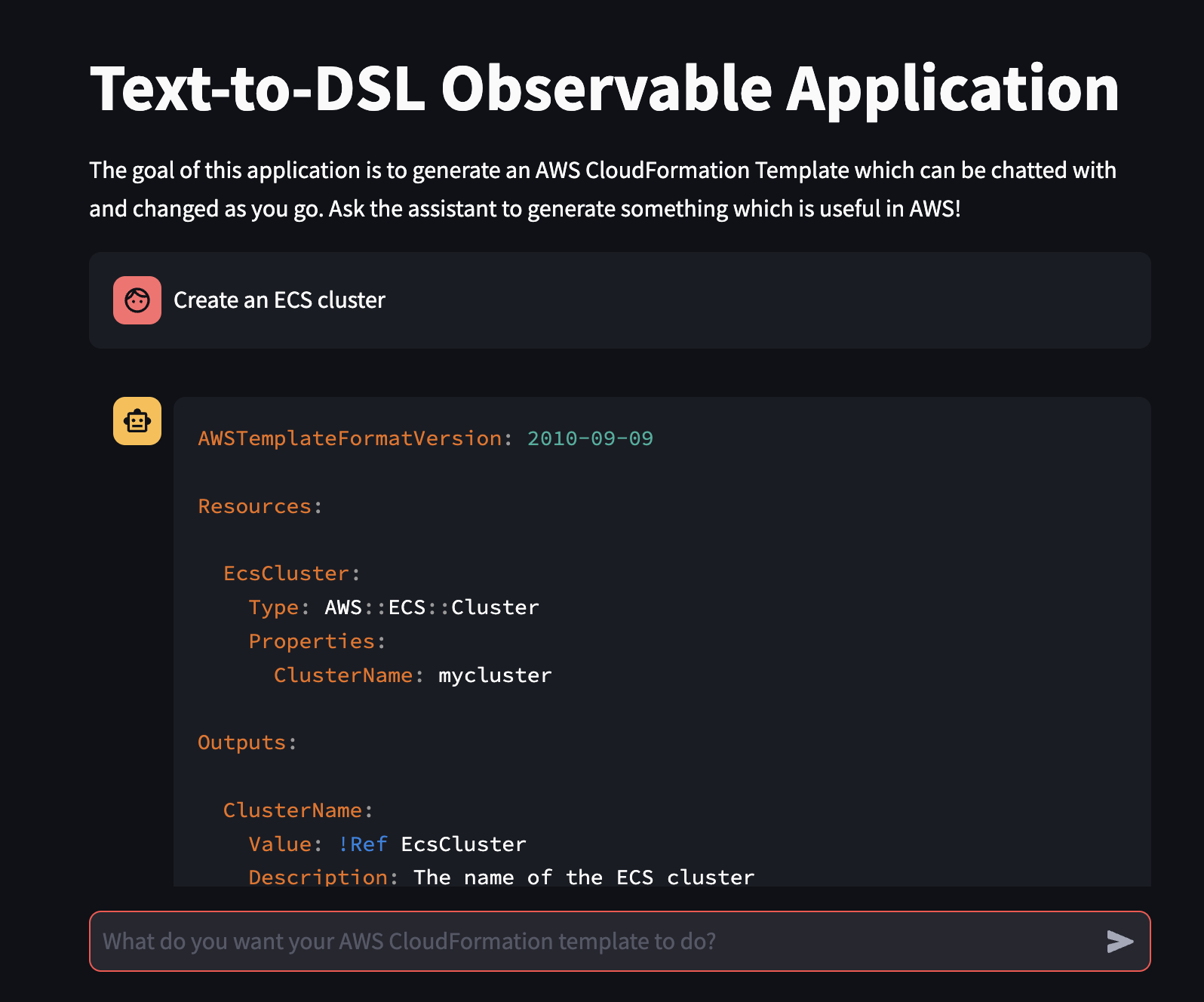

- Submit a prompt such as `Create an ECS cluster` into the front-end application
  - From here, you should get a response similar to the image below.

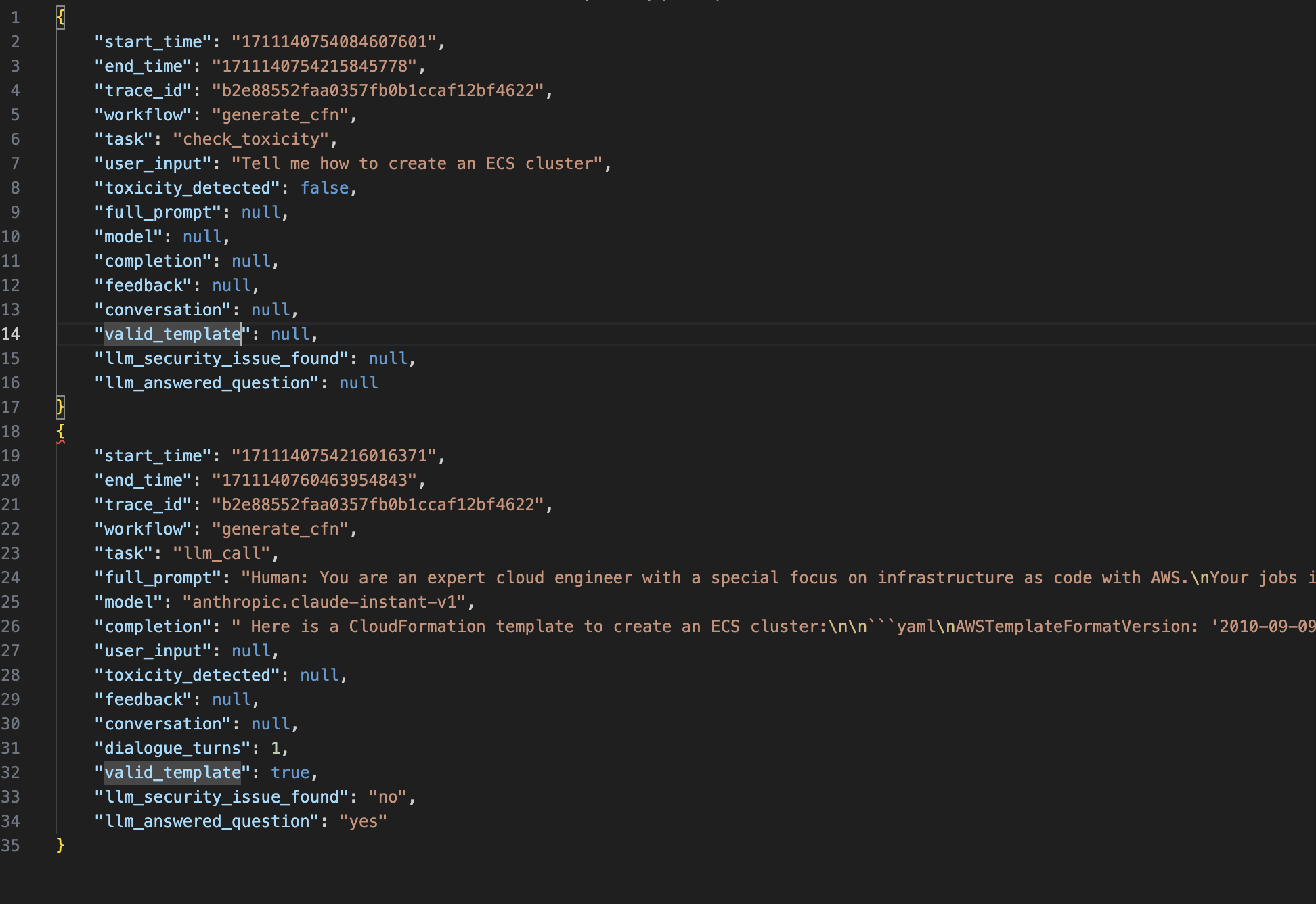

- Navigate to your Amazon S3 bucket and open the folder `otel-trace-analysis`
  - Navigate the folder structure using the latest year, month and day folders

- Review the latest analysis file to see the prompts, responses, metrics and analysis collected. Your analysis file should look similar to the image below.
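The same review can be done from a terminal. As a minimal sketch, the helper below lists everything under the `otel-trace-analysis` prefix and prints the key of the most recently modified object. The function name `latest_analysis_key` is an illustrative assumption, the bucket name you pass to it depends on your deployment, and the `awk` step assumes object keys contain no spaces.

```bash
# Hypothetical helper: print the key of the newest object under otel-trace-analysis/.
latest_analysis_key() {
  # "$1" is the bucket name. Each line of `aws s3 ls --recursive` output is
  # "<date> <time> <size> <key>", so a plain sort orders objects by modification time.
  aws s3 ls "s3://$1/otel-trace-analysis/" --recursive \
    | sort \
    | tail -n 1 \
    | awk '{print $4}'
}
```

With the key in hand, `aws s3 cp "s3://<REPLACE_WITH_BUCKET_NAME>/<key>" -` streams that object to standard output so you can inspect the prompts, responses, metrics and analysis without opening the console.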

Be sure to remove the resources created in this repository to avoid continued charges. Run the following commands to delete these resources:

```bash
cdk destroy --all --stack-name ObserveLLMStack
aws cognito-idp admin-delete-user --user-pool-id <REPLACE_WITH_POOL_ID> --username <REPLACE_WITH_USERNAME>
```

See CONTRIBUTING for more information.

This library is licensed under the MIT-0 License. See the LICENSE file.