In this sample, we show how you can use Large Language Models (LLMs) to translate natural language questions into analytical queries against structured data sources like SQL and graph databases.

This can accelerate self-serve exploratory data analysis by reducing the time and skills required to translate business questions into technical query languages.

In general, so long as the schema of available data is provided to the language model, we've observed:

- Good performance out-of-the-box for generating SQL queries

- Acceptable performance after coaching/prompt-engineering for generating OpenCypher graph queries.

Automated query generation can fail or produce unexpected results for a range of reasons, including:

- Asking questions that are not answerable from the underlying data source

- Misunderstanding the meaning or context of the schema of the underlying data source

- Failing to generate valid queries in the target query language

- Malicious user input attempting to modify the database (or deny service to other users by intentionally consuming a large amount of resources)

Because of this, use-cases should consider the following best practices:

- Show generated queries (and also the LLM's reasoning) to users to help them validate whether the answering method looks correct from their knowledge of the data schema.

- Secure your applications by:

- Restricting database access to read-only queries (and ideally propagating users' own identities through to the database connection!) - to prevent maliciously constructed queries from modifying or accessing unintended data.

- Limiting per-user or per-query run time and resources, to prevent a few demanding users from degrading database query performance for others.

Modern Generative AI models are capable (with some limitations) of reasoning over and generating both natural languages (like English) and computer programming languages (like SQL, GraphQL, Gremlin and Cypher).

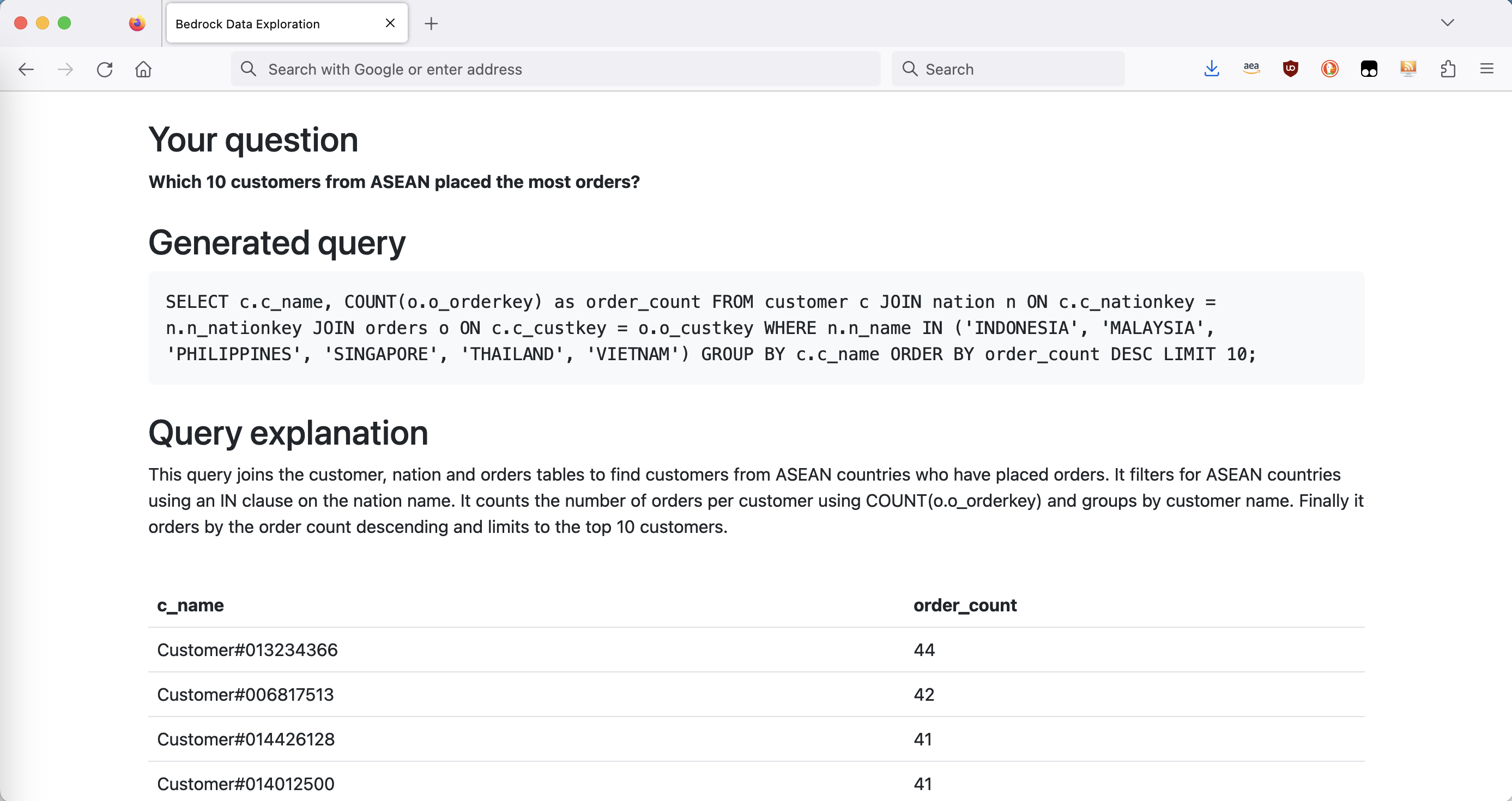

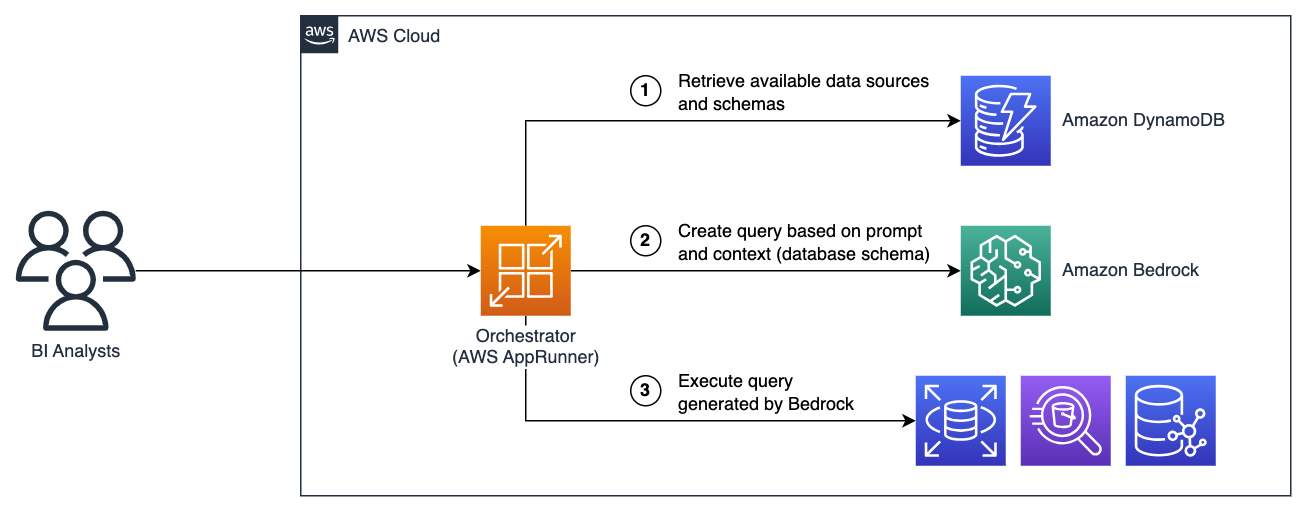

In this sample users enter natural-language questions that should be answerable from organizational data sources, and we apply an LLM on Amazon Bedrock to draft a database query equivalent: Automating work that would otherwise require support from a skilled data analyst. An orchestrator runs this query against the data source and presents the results to the user, as shown in the architecture diagram below:

To support transparency and verification, the LLM also attempts to explain the reasoning behind the generated query, and both the query and the explanation are surfaced to the user alongside the results.

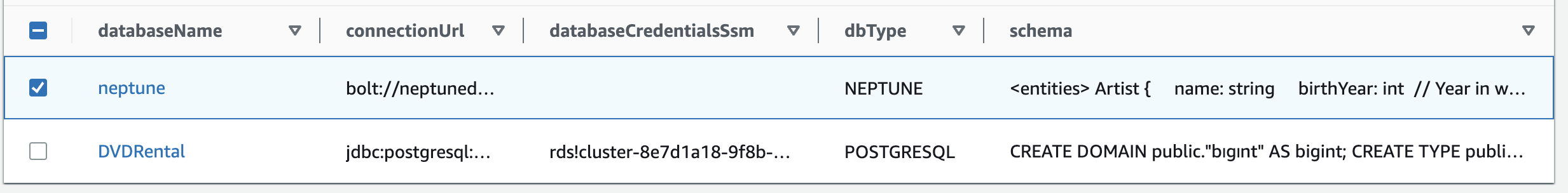

Available data sources and their schemas are configured in a DynamoDB table, which records both structured connection attributes (like connectionUrl, dbType, databaseCredentialsSsm) and free text schema information. The target database's schema is passed as input to the LLM, to surface which fields and relations are available.

Although automated schema crawling should be possible in principle, we note that annotating your schemas with additional commentary can significantly improve results (especially e.g. in cases where there are counter-intuitive or non-obvious field names and relations). This sample does not yet crawl data source schemas automatically.

The sample supports 3 example data sources and by default pre-populates an example dataset for each:

- Amazon Athena serverless structured data analytics, pre-populated with the TPC-H supply-chain oriented business decision benchmarking dataset.

- Amazon Neptune graph database, pre-populated with an Internet Movie Database (IMDb) sample dataset.

- Amazon RDS for Postgres SQL database, pre-populated with the Sakila DVD rentals sample dataset.

This sample is built with AWS CDK, which requires setting up a development environment as described below. However, if you'd like to just deploy the stack as-is - you can use the one-click CloudFormation template linked below:

Configuration notes:

- You'll need to target an AWS Region (e.g.

N. Virginia) where Bedrock is currently supported and Anthropic Claude text generation models have been enabled- ⏰ This CloudFormation stack starts the CDK solution deployment process via AWS CodeBuild, but does not wait for it to complete. Refer to the other stacks created in CloudFormation console, and logs in CodeBuild, for overall deployment status.

To set up and customize this sample with CDK, you'll need a development environment with:

- Installed NodeJS v18+

- Installed Java Development Kit (JDK), and Maven

- Running Docker

- (AWS CLI) logged in to your AWS Account

- Targeting a region (e.g.

export AWS_REGION=us-east-1) where Bedrock is currently supported and Anthropic Claude text generation models have been enabled. - To log docker CLI in to Amazon ECR Public:

aws ecr-public get-login-password --region us-east-1 | docker login --username AWS --password-stdin public.ecr.aws

To deploy the solution via AWS CDK (once you have the pre-requisites above):

- Open your terminal in the

cdksubfolder npm installto install dependencies of the solutionnpx cdk bootstrapif you haven't already bootstrapped your target AWS environment for CDK deploymentsnpx cdk deploy- This will attempt to install dependencies and compile the Java application locally (so you must have Java installed) before running the actual CDK synth/deployment.

- If you don't want all three sample data sources (Amazon Athena, Amazon Neptune and Amazon RDS) to be created, consider editing the

disable*parameters in cdk/bin/cdk.ts before deploying.

- ⏰ Check in CloudWatch (as described here) that your Neptune data is fully copied (which may take some additional time after the main stack deployment is finished)

That should be it! The deployed stack should list your application URL as an output, or you can go find the service in the AWS AppRunner Console.

If there's a problem with the CDK solution, or you want to customize the solution after deployment without building your changes in through CDK, see the "Advanced setup notes" section below.

In this section we share some sample questions you might like to try. For more ideas, check out the schemas of the sample datasets loaded into each source.

- Which 10 customers from ASEAN placed the most orders?

- what parts are low in stock?

- show me the top 5 parts that were returned eventually

- show me the top 10 suppliers from Jordan with highest sales

- show me the number of customers from the African continent who have not places any orders between Jan 2nd and Nov 2nd of 1994

- who are the top 50 customers that haven't bought wood parts yet are most likely to in future?

- which 10 products are bought most frequently together?

- Other languages:

- montrez-moi le top 5 des produits les plus achetés

Although many queries perform well in Neptune, we've observed lower success rate than with SQL data sources. The most common cause of failures is attempting to traverse relations in the wrong direction e.g.

(Artist)-[:actor]->(movie)instead of(movie)-[:actor]->(Artist), which normally produces empty result sets. We've also sometimes seen invalid queries generated due to mixing SQL patterns in with Cypher.

- Who's Quentin Tarantino's favourite actor?

- How many movies has Robin Williams starred in by genre?

- What's the average run time of movies directed by Quentin Tarantino

- What movies did Angelina Jolie star in before 1996?

- What movie launched Jonny Lee Miller's career?

- This one is unusual because from the query you can tell the LLM already looks in a specific year...

- Other languages:

- Welches ist das Genre in dem Robin Williams die meisten Filme gedreht hat?

- アンジェリーナジョリーの最初の映画はいつか?

- Angelina JolieとJonny Lee Millerとはどちらが一番人気ですか

- Show me the name of the 5 customers who rented the most dvds

- Find the 5 actors whose movies have been rented the most

- How many rentals have there been in average per customer

- Give me the name of the category which has been rented most often

- Other languages:

- Welche Filme wurden am meisten ausgeliehen?

The main components of the solution to configure include:

- The orchestrating web application, which runs as a Java Spring application in AWS AppRunner

- The DynamoDB table listing accessible data sources

- The data sources you'd like to connect to (which must also be supported by the Java app)

The Java application depends on the Amazon Athena JDBC Connector, which needs to be downloaded separately from other dependencies. We've configured the maven-download-plugin to help with this, so you can build the application by running the same build commands as used in cdk/lib/orchestrators/java-app.ts:

# (From the app-java/ folder)

# Fetch the Athena JDBC .jar:

mvn process-resources

# Install the Athena JDBC .jar:

mvn install:install-file -Dfile=lib/AthenaJDBC42-2.1.1.1000.jar -DgroupId=Athena -DartifactId=AthenaJDBC42 -Dversion=2.1.1.1000 -Dpackaging=jar -DgeneratePom=true

# Build the rest of the Java app:

mvn install

mvn clean packageOnce the Java application is built:

- Build the container image using (Docker or)

finch build --platform linux/amd64 -t <ACCOUNT_ID>.dkr.<REGION>.amazonaws.com/<ECR_REPO_NAME>:latest . - Push the image to ECR

finch push <ACCOUNT_ID>.dkr.ecr.<REGION>.amazonaws.com/<ECR_REPO_NAME>:latest - Create a AppRunner service pointing to your image inside ECR

- Make sure to select

Custom VPCas the networking mode and add a VPC Connector pointing to your subnets containing your data sources. - Pass the DynamoDB Table Name to the Application via the environment variable BEDROCK_DATA_EXPLORATION_DYNAMO_TABLE_NAME

Once your solution is set up and the AppRunner app deployed, you should be able to navigate to its URL in your browser and start asking questions to your data!

New data sources (of supported types) are connected by adding/updating entries to the DynamoDB data sources table.

Each item in the data sources table should contain:

databaseName: A human-readable name for the data source (which will appear in the user's view)dbType: Currently supportsATHENA,NEPTUNE, orPOSTGRESQL(must also be supported by the Java app)schema: A text representation of the database schema (which will be injected into the LLM query generation prompt)connectionUrl: A JDBC-like connection string containing the required configuration to connect to the database.- (

databaseCredentialsSsm): Optional name of the AWS Secrets Manager secret storing the credentials to connect to the database (for RDS).

You can create a Neptune graph database cluster and reference it as a data source.

Neptune clusters can only be deployed within an AWS VPC to limit access, and you should check your AppRunner service can reach (via VPC Connector and relevant subnets/routing) the target cluster. Check also that the security groups allow connectivity from AppRunner to the Neptune cluster endpoint(s).

To create a cluster and load the IMDb sample dataset as we do for the sample: in AWS CloudFormation, deploy the following stack as used by the "Build Your First Graph Application with Amazon Neptune" workshop: https://neptune-workshop-assets.s3.amazonaws.com/neptune-immersion-day.yaml

This stack will automatically deploy a Neptune cluster and start ingesting the IMDb sample data. Note that it will ⏰ take additional time after stack creation for the full ~15GB data load to complete - and you can check progress as described here.

As shown in the dataSourceDescriptor property of cdk/lib/data-sources/neptune.ts, the data source descriptor entry in DynamoDB should use the bolt:// connection scheme in the following form:

{

"databaseName": "{Human-readable name}",

"connectionUrl": "bolt://{Cluster Endpoint}:{Port number}",

"dbType": "NEPTUNE",

"schema": "{Annotated entity & relation schema for your database}"

}For graph databases / Cypher queries, we've observed LLMs can struggle to consistently navigate one-way relations in the correct direction - sometimes resulting in returning empty result sets. You can refer to cdk/lib/data-sources/neptune.ts for our attempt at prompt engineering this into the database schema.

You can create an RDS cluster and reference it as a data source. We've only tested with Postgres-based clusters so far.

RDS databases are typically deployed within an AWS VPC to limit access, in which case you should check your AppRunner service can reach (via VPC Connector and relevant subnets/routing) the target cluster. Check also that the security groups allow connectivity from AppRunner to the RDS cluster/instances.

For our sample, we load the Pagila sample dataset into RDS using the SQL statements available at https://raw.githubusercontent.com/devrimgunduz/pagila/master/pagila-schema.sql and https://raw.githubusercontent.com/devrimgunduz/pagila/master/pagila-insert-data.sql. These are executed through an AWS Lambda function (cdk/lib/data-sources/lambda-load-data/rds.ts) with connectivity to the target VPC and subnets. You could alternatively use an EC2 Bastion Host or similar, to access your RDS cluster.

As shown in the dataSourceDescriptor property of cdk/lib/data-sources/rds.ts, the access credentials for the database should be kept in AWS Secrets Manager and the data source descriptor entry in DynamoDB for RDS PostgreSQL sources should have the form:

{

"databaseName": "{Human-readable name}",

"connectionUrl": "jdbc:postgresql://{Cluster read-only query endpoint including :PortNumber}/{Database name}",

"databaseCredentialsSsm": "{AWS Secrets Manager Secret Name}",

"dbType": "POSTGRESQL",

"schema": "{Schema/creation commands of your DB}"

}You can query S3 data or any federation supported data sources using Amazon Athena. For the sample TPC-H dataset, you can set up by running the SQL script as given in the getCreationQueryString() method of cdk/lib/data-sources/athena.ts.

As shown in the dataSourceDescriptor() property of cdk/lib/data-sources/athena.ts, the data source descriptor entry in DynamoDB for Athena sources should have the form:

{

"databaseName": "{Human-readable name}",

"connectionUrl": "jdbc:awsathena://AwsRegion={?};AwsCredentialsProviderClass=com.simba.athena.amazonaws.auth.DefaultAWSCredentialsProviderChain;Catalog={?};S3OutputLocation={?};Schema={Athena DB Name};Workgroup={?}",

"dbType": "ATHENA",

"schema": "{Schema or creation commands of your DB}"

}Check that your AppRunner service role has appropriate permissions to query Amazon Athena.

This sample is a basic illustration of the pattern of natural language data analytics across various data sources, and has not been hardened for production usage. Some particular areas that could be improved include:

- Improved error handling: Failed database queries often produce opaque error messages in the UI which administrators must delve into AppRunner application logs to investigate further.

- Additional security controls to scope down database access, including:

- Preventing data editing queries to Amazon Athena

- Passing users' own identities through to database interactions instead of using service-level credentials

- Enforcing limits to prevent demanding user queries from degrading service for other concurrent users

- Extended functionality including:

- An additional LLM call to automatically select the correct data source for a given question, instead of requiring a user selection.

- APIs or UIs to add and manage data sources

- Going beyond presenting query result sets to answering user queries in natural language (without sacrificing transparency to the underlying query & results).

- Supporting additional database types

To release your cloud resources and avoid ongoing costs once you're finished exploring this sample, you can delete the created stacks in AWS CloudFormation or (if you deployed with AWS CDK) run npx cdk destroy. In addition:

- You'll probably note that the stack's

AthenaBucketfails to delete because it contains objects. You click through from CloudFormation to find this bucket in the Amazon S3 Console and delete all objects inside, after which you should be able to delete the stack successfully. - The stack does not automatically delete the

bedrockathenacatalog in Amazon Athena. You can (and if you want to re-deploy the stack in the same AWS Account without errors, must) delete all the tables and thebedrockathenacatalog. This can be done from the Query Editor in the Amazon Athena Console: so long as you've set up a default S3 location to store your console query results.

See CONTRIBUTING for more information.

This library is licensed under the MIT-0 License. See the LICENSE file.