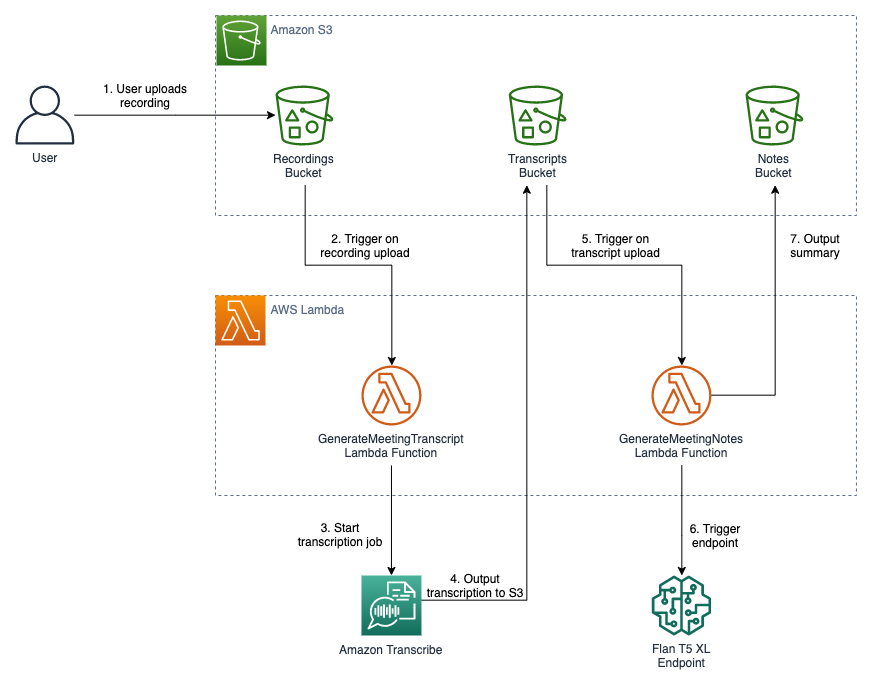

This repository contains a solution for an automated meeting notes generator application that uses large language models (LLMs) on SageMaker JumpStart, a machine learning (ML) hub that can help you accelerate your ML journey.

Explore more foundation models on SageMaker JumpStart.

The IAM roles and policies provided in the CloudFormation template are intended only to demonstrate the functionality of the demo solution. Apply the principle of least privilege to secure resources and their permissions before deploying into a production environment. Learn more about Security best practices in IAM.

- Uses CloudFormation to automate deployment of all resources

- Uses Lambda functions with S3 upload triggers to create an automated workflow

- Uses Amazon Transcribe to transcribe audio/video meeting recordings

- Uses the Flan T5 XL model from SageMaker JumpStart
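As an illustration of the S3-triggered step, the following is a minimal sketch of a Lambda handler that starts an Amazon Transcribe job when a file lands under `recordings/`. It is not the repository's actual function code: the helper names, the job-name scheme, the use of automatic language identification, and writing output under `transcripts/` in the same bucket are all assumptions made for this example.

```python
import urllib.parse
import uuid


def parse_s3_event(event):
    """Return (bucket, key) pairs from an S3 event notification payload."""
    pairs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications (spaces become '+').
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        pairs.append((bucket, key))
    return pairs


def transcription_job_args(bucket, key):
    """Build keyword arguments for Transcribe's StartTranscriptionJob call."""
    return {
        # Hypothetical naming scheme; job names must be unique per account.
        "TranscriptionJobName": f"meeting-{uuid.uuid4().hex}",
        "Media": {"MediaFileUri": f"s3://{bucket}/{key}"},
        # Assumption: let Transcribe detect the spoken language.
        "IdentifyLanguage": True,
        "OutputBucketName": bucket,
        # An output key ending in '/' is treated as a folder prefix.
        "OutputKey": "transcripts/",
    }


def lambda_handler(event, context):
    """Start one transcription job per uploaded recording."""
    import boto3  # imported lazily so the helpers above can be tested offline

    transcribe = boto3.client("transcribe")
    started = []
    for bucket, key in parse_s3_event(event):
        # Ignore folder placeholder objects and anything outside recordings/.
        if key.startswith("recordings/") and not key.endswith("/"):
            args = transcription_job_args(bucket, key)
            transcribe.start_transcription_job(**args)
            started.append(args["TranscriptionJobName"])
    return {"started": started}
```

Keeping the event parsing and argument building in plain functions means they can be checked without AWS credentials; only `lambda_handler` touches the service.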

- (Optional) Submit service quota requests to increase limits on instance capacity as desired.

- Select deployment region

  - Choose an AWS Region that supports Amazon SageMaker JumpStart. The default, `us-east-1`, is recommended.

  - If using a Region other than `us-east-1`, update the `ImageURI` and `ModelData` parameters using the documentation.

- Create the Lambda layer

  - Create a Cloud9 environment in the deployment region and open the Cloud9 IDE.

  - In the Cloud9 IDE, open a new terminal and run the commands in `lambda_layer_script.sh`.

  - Verify in the AWS Lambda page of the AWS Console that a layer called `demo-layer` has been created.

  - Delete the Cloud9 environment.
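The console check for `demo-layer` can also be scripted. The sketch below assumes boto3 is installed and credentials are configured; `layer_exists` and `check_demo_layer` are hypothetical helper names, and the Region shown is only the default mentioned above.

```python
def layer_exists(lambda_client, layer_name="demo-layer"):
    """Return True if at least one version of the named layer is listed."""
    response = lambda_client.list_layer_versions(LayerName=layer_name)
    return len(response.get("LayerVersions", [])) > 0


def check_demo_layer(region_name="us-east-1"):
    """Example entry point; needs AWS credentials, so it is not called here."""
    import boto3

    client = boto3.client("lambda", region_name=region_name)
    return layer_exists(client)
```

Passing the client in as an argument keeps the check itself independent of how credentials and the Region are configured.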

- Deploy CloudFormation template

  - (Optional) Adjust parameters:

    - `InstanceType`: instance type for SageMaker endpoint (default = `ml.p3.2xlarge`)

    - `InstanceCount`: number of instances for SageMaker endpoint (default = 1)

    - `ImageURI`: container URI for inference endpoint (default = `huggingface-pytorch-inference:1.10.2-transformers4.17.0-gpu-py38-cu113-ubuntu20.04`)

    - `ModelData`: S3 location of LLM model artifact (default = `huggingface-text2text-flan-t5-xl`)
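If you prefer to deploy from a script rather than the console, the parameter overrides can be passed through boto3. This is a sketch under assumptions: the helper names are hypothetical, the template is read into `template_body` by the caller, and `CAPABILITY_NAMED_IAM` is requested because the template creates IAM roles. Parameters that are omitted fall back to the template defaults.

```python
# Parameter names exposed by the template, as listed above.
ALLOWED_PARAMETERS = ("InstanceType", "InstanceCount", "ImageURI", "ModelData")


def to_cfn_parameters(overrides):
    """Convert {name: value} overrides into CloudFormation's parameter list."""
    unknown = sorted(set(overrides) - set(ALLOWED_PARAMETERS))
    if unknown:
        raise ValueError(f"Unknown template parameter(s): {', '.join(unknown)}")
    # CloudFormation expects every parameter value as a string.
    return [
        {"ParameterKey": name, "ParameterValue": str(overrides[name])}
        for name in ALLOWED_PARAMETERS
        if name in overrides
    ]


def create_demo_stack(cfn_client, stack_name, template_body, overrides=None):
    """Create the stack, acknowledging that the template creates IAM resources."""
    return cfn_client.create_stack(
        StackName=stack_name,
        TemplateBody=template_body,
        Parameters=to_cfn_parameters(overrides or {}),
        Capabilities=["CAPABILITY_NAMED_IAM"],
    )
```

For example, `create_demo_stack(boto3.client("cloudformation"), "meeting-notes-demo", template_body, {"InstanceCount": 2})` would override only the instance count; the stack name here is a placeholder.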

- Upload a meeting recording to the `recordings/` folder of the S3 bucket. Note: the recording must be in one of the media formats supported by Amazon Transcribe.

- Find the generated transcript in the `transcripts/` folder of the S3 bucket. It may take a few minutes for the transcripts to show up.

- Find the generated notes in the `notes/` folder of the S3 bucket. It may take a few minutes for the notes to show up.
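Because the transcript and notes appear asynchronously, a small polling helper can save repeated trips to the console. The sketch below is illustrative only: the function names, timeout, and polling interval are assumptions, and it reads a single page of results (up to 1,000 keys per prefix), which should be enough for a demo bucket.

```python
import time


def list_keys(s3_client, bucket, prefix):
    """Return object keys under a prefix (first page only)."""
    response = s3_client.list_objects_v2(Bucket=bucket, Prefix=prefix)
    return [obj["Key"] for obj in response.get("Contents", [])]


def wait_for_new_keys(s3_client, bucket, prefix, known=(),
                      timeout_s=900, poll_s=15, sleep=time.sleep):
    """Poll until a key not in `known` appears under `prefix`, or time out.

    Returns the list of new keys, or an empty list on timeout.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        fresh = [
            key for key in list_keys(s3_client, bucket, prefix)
            # Skip folder placeholder objects such as "notes/".
            if key not in known and not key.endswith("/")
        ]
        if fresh or time.monotonic() >= deadline:
            return fresh
        sleep(poll_s)


def upload_and_wait(bucket, local_path, name):
    """Example flow; needs AWS credentials, so it is not called here."""
    import boto3

    s3 = boto3.client("s3")
    before = set(list_keys(s3, bucket, "notes/"))
    s3.upload_file(local_path, bucket, f"recordings/{name}")
    return wait_for_new_keys(s3, bucket, "notes/", known=before)
```

Snapshotting the existing `notes/` keys before uploading lets the helper distinguish new output from notes generated for earlier recordings.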

- Delete all objects in the demo S3 bucket.

- Delete the CloudFormation stack.

- Delete the Lambda layer.
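The three cleanup steps can be scripted in the same order. This is a minimal sketch with hypothetical helper names; it assumes the demo bucket is not versioned (a versioned bucket would also need its object versions removed) and that the layer is named `demo-layer` as above.

```python
def empty_bucket(s3_client, bucket):
    """Delete every object in a non-versioned bucket; return the count."""
    deleted = 0
    while True:
        # Each call returns at most 1,000 keys, which is also the
        # maximum batch size accepted by delete_objects.
        page = s3_client.list_objects_v2(Bucket=bucket)
        objects = [{"Key": obj["Key"]} for obj in page.get("Contents", [])]
        if not objects:
            return deleted
        result = s3_client.delete_objects(Bucket=bucket, Delete={"Objects": objects})
        if result.get("Errors"):
            raise RuntimeError(f"Failed to delete some objects: {result['Errors']}")
        deleted += len(objects)


def delete_layer(lambda_client, layer_name="demo-layer"):
    """Delete all listed versions of a layer; return the version numbers."""
    response = lambda_client.list_layer_versions(LayerName=layer_name)
    versions = [item["Version"] for item in response.get("LayerVersions", [])]
    for version in versions:
        lambda_client.delete_layer_version(LayerName=layer_name, VersionNumber=version)
    return versions


def clean_up(s3_client, cfn_client, lambda_client, bucket, stack_name):
    """Run the cleanup steps in order: bucket contents, stack, layer."""
    empty_bucket(s3_client, bucket)
    cfn_client.delete_stack(StackName=stack_name)
    delete_layer(lambda_client)
```

The bucket is emptied first because CloudFormation cannot delete an S3 bucket that still contains objects.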

See CONTRIBUTING for more information.

This library is licensed under the MIT-0 License. See the LICENSE file.