TwinGraph provides a Python-based high-throughput container orchestration framework for simulation, predictive modeling and optimization workflows. It supports asynchronous multi-host computing, dynamic directed-acyclic-graph (DAG) pipelines, and recording of custom workflow artifacts and attributes within a graph database, for repeatability and auditability.

To use TwinGraph, add decorators to Python functions. The decorators record attributes of each function, such as its inputs and outputs, source code and compute platform, in a TinkerGraph or Amazon Neptune database. TwinGraph can optionally also act as a graph orchestrator: with Celery as the backend, it runs the decorated functions asynchronously on a chosen compute platform (AWS Batch, AWS Lambda, Amazon EKS) and container orchestrator (Kubernetes, Docker Compose).
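The idea of recording function attributes through a decorator can be sketched with the standard library alone. This is not TwinGraph's implementation or API: the `traced` decorator and the in-memory `TRACE_LOG` list are hypothetical stand-ins for the real decorators and the graph database.

```python
import functools
import inspect
import platform

# In-memory stand-in for the graph database (hypothetical, for illustration only).
TRACE_LOG = []


def traced(func):
    """Hypothetical decorator: record attributes of every call to `func`."""
    try:
        source = inspect.getsource(func)
    except (OSError, TypeError):
        source = None  # source is unavailable, e.g. in an interactive session

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        result = func(*args, **kwargs)
        TRACE_LOG.append({
            "name": func.__name__,
            "inputs": {"args": args, "kwargs": kwargs},
            "outputs": result,
            "source": source,
            "platform": platform.system(),
        })
        return result

    return wrapper


@traced
def add(a, b):
    return a + b


total = add(2, 3)
print(total, TRACE_LOG[-1]["name"], TRACE_LOG[-1]["inputs"])
```

Each call appends one record, so the log doubles as an audit trail of what ran, with which inputs, and on which platform.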

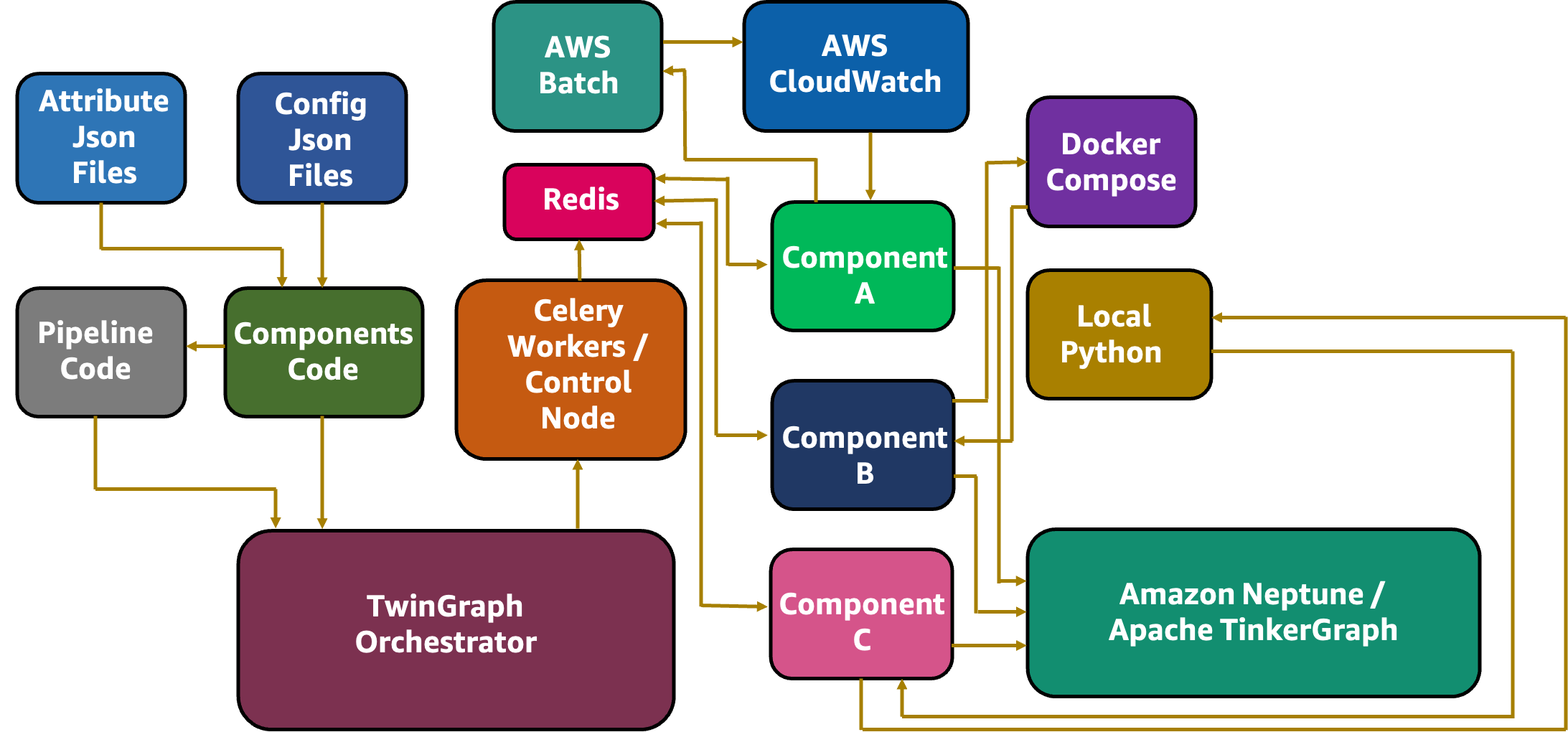

TwinGraph can run a few concurrent or linked compute tasks, or scale up to hundreds of thousands of containerized compute tasks across many compute nodes/hosts. Information is communicated between tasks through message queues in an event-driven workflow chain. An example architectural flow of information is shown in Figure 1:

Figure 1: Overall Information Flow.

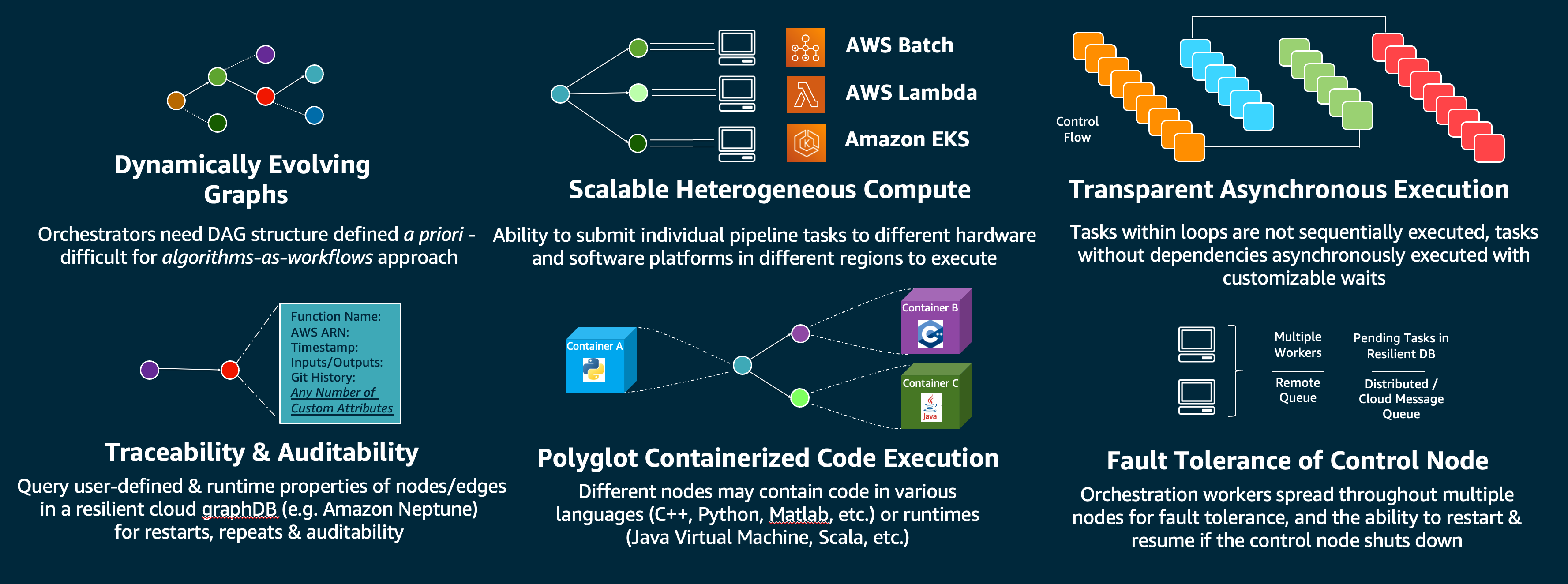
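As a rough illustration of an event-driven chain linked by message queues, the sketch below connects tasks with in-process queues and threads. It is a standard-library stand-in only: TwinGraph uses Celery for dispatch, and the names `worker`, `run_chain` and `STOP` are hypothetical.

```python
import queue
import threading

STOP = object()  # sentinel telling a worker that no more messages will arrive


def worker(task, inbox, outbox):
    """Apply `task` to every message from `inbox` and forward the result to `outbox`."""
    while True:
        message = inbox.get()
        if message is STOP:
            outbox.put(STOP)  # propagate shutdown down the chain
            break
        outbox.put(task(message))


def run_chain(tasks, inputs):
    """Link tasks with queues so each task is triggered by its predecessor's output."""
    queues = [queue.Queue() for _ in range(len(tasks) + 1)]
    threads = [
        threading.Thread(target=worker, args=(task, queues[i], queues[i + 1]))
        for i, task in enumerate(tasks)
    ]
    for thread in threads:
        thread.start()
    for item in inputs:
        queues[0].put(item)
    queues[0].put(STOP)

    results = []
    while True:
        item = queues[-1].get()
        if item is STOP:
            break
        results.append(item)
    for thread in threads:
        thread.join()
    return results


results = run_chain([lambda x: x + 1, lambda x: x * 10], [1, 2, 3])
print(results)  # [20, 30, 40]
```

No task polls for work: each one blocks until a message arrives, which is what makes the chain event-driven.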

Figure 2 outlines the key capabilities of TwinGraph. Instead of a domain-specific language (DSL) for specifying pipelines, TwinGraph uses algorithms written in native Python (loops, conditionals and recursion) to define the control flow, so the graph structure can depend on intermediate outputs and runtime stochasticity.

Figure 2: Challenges in Designing Orchestrators
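To make the point about native Python control flow concrete, here is a minimal sketch in which the shape of the recorded graph depends on values computed at run time. It does not use the TwinGraph API; `run_pipeline` and the edge list are hypothetical stand-ins for a decorated pipeline and the graph database.

```python
import random


def run_pipeline(start_value):
    """Build a chain of steps whose length and final branch depend on runtime values."""
    edges = []  # (parent, child) pairs; a stand-in for edges in the graph database
    value, parent, step = start_value, "start", 0

    # Loop: the number of "halve" nodes is only known once the values are computed.
    while value > 10:
        step += 1
        child = f"halve_{step}"
        edges.append((parent, child))
        value //= 2
        parent = child

    # Conditional: the final node is chosen from the intermediate output.
    final = "report_even" if value % 2 == 0 else "report_odd"
    edges.append((parent, final))
    return value, edges


value, edges = run_pipeline(40)
print(value, edges)

# A random start value gives a potentially different graph shape on each run.
random_value, random_edges = run_pipeline(random.randint(1, 100))
print(len(random_edges))
```

A static DSL would need the number of steps declared up front. Here the `while` loop and the `if` expression decide it as the pipeline executes.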

The examples highlighted in the next section provide an overview of how to use TwinGraph.

- Linux
  - Amazon Linux 2, CentOS 7, RHEL, Fedora, Ubuntu, etc.
- Other Operating Systems
  - Please use the Docker Compose installation option

TwinGraph exposes two API calls, component and pipeline. Information about these can be found here.

The examples/orchestration_demos folder contains a series of examples. They show how the two exposed Python decorator APIs, 'component' and 'pipeline', can be used to orchestrate complex workflows on heterogeneous compute environments. A summary of each example, with links to the individual READMEs, is given below. Before running AWS service specific examples (Demo 1-), please ensure aws-cli is configured and run update_credentials.py. To stop running Celery tasks, use stop_and_delete.py.

| Example | Description |
|---|---|
| Demo 1 | Demonstrate graph tracing capability with local compute |
| Demo 2 | Building a Docker container & graph tracing with containerized (Docker) compute |
| Demo 3 | Automatically including git history in traced attributes |
| Demo 4 | Using Celery to dispatch tasks and perform asynchronous computing |
| Demo 5 | Using Celery to dispatch and run containerized tasks on Kubernetes (MiniK8s, EKS) |
| Demo 6 | Using Celery to dispatch and run containerized tasks on AWS Batch |
| Demo 7 | Using Celery to dispatch tasks to AWS Lambda |
| Demo 8 | Using Celery to dispatch multiple tasks to different platforms/environments |
| Demo 9 | Using Amazon Neptune instead of TinkerGraph for graph tracing |
| Demo 10 | Running multiple pipelines together |

- RHEL/Centos/Fedora/AL2:

      sudo yum install make git docker python

- Ubuntu/Debian:

      sudo apt-get install -y make git python3 python-is-python3 apt-transport-https curl gnupg-agent ca-certificates software-properties-common unzip python3-pip
      curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -
      sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu focal stable"
      sudo apt install -y docker-ce docker-ce-cli containerd.io

- Clone the repository:

      git clone https://github.com/aws-samples/twingraph.git

Navigate to the TwinGraph folder, where the Makefile is located, and key in:

    make install
    make docker_containers_poetry

Note that this step might report missing packages on some distributions. Please remedy this by installing any missing base packages indicated during the installation.

The make install and make docker_containers_poetry commands only need to be run once, to set up the environment for message passing, graph recording and visualization.

After TwinGraph has been installed and run for the first time, if the Docker socket is restarted or the containers are no longer running, you can use the provided convenience script to restart the containers (this will also prune stopped containers):

    sh start_containers.sh

Note: If the containers are already running, this will throw an error when trying to start them. For most use cases, you only need one instantiation/container of each image running at a time.

Additionally, when using TwinGraph, ensure that the TwinGraph environment, which has the package installed, is enabled using poetry:

    poetry shell

Alternatively, install the package with the appropriate version in your own existing environment. To do so, build it first:

    poetry build

Once the build completes, activate your existing Python virtual environment (conda/venv) and install TwinGraph:

    (existing-python-env) pip install dist/twingraph-*.whl

For users of Kubernetes and Amazon EKS, please also install kubectl and eksctl separately, and optionally the Kubernetes dashboard for monitoring and debugging.

Once again, inside the TwinGraph folder with the Makefile, key in:

    make uninstall

The AWS CLI package and the poetry virtual environment have to be removed manually:

    poetry env remove <PATH-TO-PYTHON-EXE>

- Install Docker with the Compose tool and ensure it is running:

      docker info

- Clone/Download TwinGraph and, within the folder containing docker-compose.yaml, run Docker Compose:

      docker compose up -d

  - Note: When running for the first time, compose might show an error in the first line as the image does not exist yet, but this will be remedied automatically by the script building/pulling the container images.

- Open an interactive shell to run the code, or develop within the container using the VS Code remote development extension (next point):

      docker exec -it twingraph-twingraph-1 bash

- Please install the VS Code remote containers extension for development. It is advised for ease of use, together with the Docker extension, where you can attach a new session to running containers.

The interactive shell enables you to run code, but your work will not persist once the containers are cleaned up. To keep it, please add a local folder (outside the container) to the docker-compose.yaml file after line 16 as follows:

    14 volumes:
    ...
    17 - /LOCAL-FOLDER:/home/twingraph-user/DOCKER-FOLDER

It is then possible to work on local files outside of the container and use the container only to deploy them with TwinGraph.

- Once completed, you can bring down all the containers. For this, open a shell outside the container, in the folder where TwinGraph was downloaded or cloned and where docker-compose.yaml resides:

      docker compose down

- Note: When using Compose, the TwinGraph API decorator variables need to point to the Redis, Gremlin server and TwinGraph hosts. The examples included in the provided Dockerfile should run without any changes with the provided Compose script.

- Key in 'tinkergraph-server' instead of 'localhost' in the host field when using the Gremlin visualizer.

- If using the provided Dockerfile without Compose, please replace the ENV vars in lines 3-5 with localhost or the host where the other containers are running.
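One way to avoid hard-coding hosts is to read them from the environment and fall back to localhost. The sketch below is an assumption-laden illustration, not TwinGraph's configuration mechanism: the variable names `GREMLIN_HOST` and `REDIS_HOST` and the Redis service name `redis-host` are hypothetical, and the ports are the Gremlin Server and Redis defaults (8182 and 6379), which the provided Compose file may or may not use.

```python
import os


def resolve_hosts(env=None):
    """Build connection URLs from environment variables, defaulting to localhost."""
    env = os.environ if env is None else env
    gremlin_host = env.get("GREMLIN_HOST", "localhost")  # hypothetical variable name
    redis_host = env.get("REDIS_HOST", "localhost")      # hypothetical variable name
    return {
        # 8182 is the default Gremlin Server port (assumed unchanged here).
        "gremlin_url": f"ws://{gremlin_host}:8182/gremlin",
        # 6379 is the default Redis port (assumed unchanged here).
        "redis_url": f"redis://{redis_host}:6379/0",
    }


# Outside Compose: everything on localhost.
print(resolve_hosts({}))

# Inside Compose: 'tinkergraph-server' is the Gremlin host named in the note above;
# 'redis-host' is a placeholder for whatever the Redis service is called.
print(resolve_hosts({"GREMLIN_HOST": "tinkergraph-server", "REDIS_HOST": "redis-host"}))
```

The resulting values would then be passed to whichever decorator variables expect the host or URL.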

If you have any issues with the quick installation and are not able to use Docker Compose, follow the instructions here to manually install the required packages and core modules of TwinGraph.

The following ports need to be forwarded to view the visualization in a web browser on the local/client machine (e.g. in a local browser such as Chrome or Edge, go to localhost:PORT). For more details on how to port forward using various tools, and a list of additional ports to forward, please see here.

| PORT | Description |
|---|---|
| 3000 | Gremlin Visualizer Viewing Port - Open localhost:3000 |
| 3001 | Gremlin Visualizer Data Backend |
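Before opening the browser, it can help to confirm that the forwarded ports are actually reachable from the client machine. The following is a minimal sketch using only the standard library; the helper name `port_is_open` is hypothetical.

```python
import socket


def port_is_open(port, host="localhost", timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts and unreachable hosts
        return False


if __name__ == "__main__":
    ports = [
        (3000, "Gremlin Visualizer viewing port"),
        (3001, "Gremlin Visualizer data backend"),
    ]
    for port, label in ports:
        state = "reachable" if port_is_open(port) else "not reachable"
        print(f"{port} ({label}): {state}")
```

A port reported as not reachable usually means the forward is not set up or the corresponding container is not running.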

Security Best Practice: When opening connections from a remote host (i.e. a Linux machine running TwinGraph) to a local client, ensure that security best practices are followed and that the remote machine is not open to unrestricted public access (0.0.0.0/0). If using AWS resources, please consult with your security team for any concerns and follow AWS guidelines here.

The known issues and limitations can be found here.

Tests can be run using pytest, with the following commands:

    cd tests
    pytest

Note that AWS credentials need to be configured before running the tests for AWS Batch and AWS Lambda, and the Kubernetes config needs to be set up before running the Kubernetes tests.
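A quick pre-flight check can catch missing configuration before the test run starts. The sketch below is a heuristic only and not part of the TwinGraph test suite: it looks for the standard AWS environment variables or shared credentials file, and for a kubeconfig file, and it will not detect other credential sources such as SSO sessions or instance roles. Both function names are hypothetical.

```python
import os
from pathlib import Path


def aws_credentials_configured(env=None, home=None):
    """Heuristic: access keys in the environment, or a shared credentials file on disk."""
    env = os.environ if env is None else env
    home = Path.home() if home is None else Path(home)
    if env.get("AWS_ACCESS_KEY_ID") and env.get("AWS_SECRET_ACCESS_KEY"):
        return True
    default_file = home / ".aws" / "credentials"
    return Path(env.get("AWS_SHARED_CREDENTIALS_FILE", default_file)).is_file()


def kube_config_present(env=None, home=None):
    """Heuristic: a kubeconfig file listed in KUBECONFIG, or at ~/.kube/config."""
    env = os.environ if env is None else env
    home = Path.home() if home is None else Path(home)
    listed = env.get("KUBECONFIG")
    candidates = listed.split(os.pathsep) if listed else [home / ".kube" / "config"]
    return any(Path(path).is_file() for path in candidates if path)


if __name__ == "__main__":
    print("AWS credentials found:", aws_credentials_configured())
    print("Kubernetes config found:", kube_config_present())
```

Run it from the shell that will launch pytest, so it sees the same environment variables as the tests.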

We welcome all contributions to improve the code, identify bugs and adopt best development and deployment practices. Please be sure to run the tests prior to commits in the repo. Rules and instructions for contributing can be found here.

Tools used in this framework:

AWS Resources:

- Amazon Web Services (AWS)

- Amazon EC2

- AWS Lambda

- Amazon Elastic Container Service (ECS)

- Amazon Elastic Kubernetes Service (EKS)

- Amazon EKS Blueprints

- AWS Batch

- Amazon Neptune

- Amazon SQS

This open source framework was developed by the Autonomous Computing Team within Amazon Web Services (AWS) Worldwide Specialist Organization (WWSO), led by Vidyasagar Ananthan and Satheesh Maheswaran, with contributions from Cheryl Abundo on Amazon EKS Blueprints, David Sauerwein on applications, and Ross Pivovar for initial testing and identifying issues. The authors thank Adam Rasheed for his leadership and support of our team, and Alex Iankoulski for his detailed guidance and contributions on Docker tools and his expertise in reviewing the code.