A production-focused End to End churn prediction pipeline on AWS

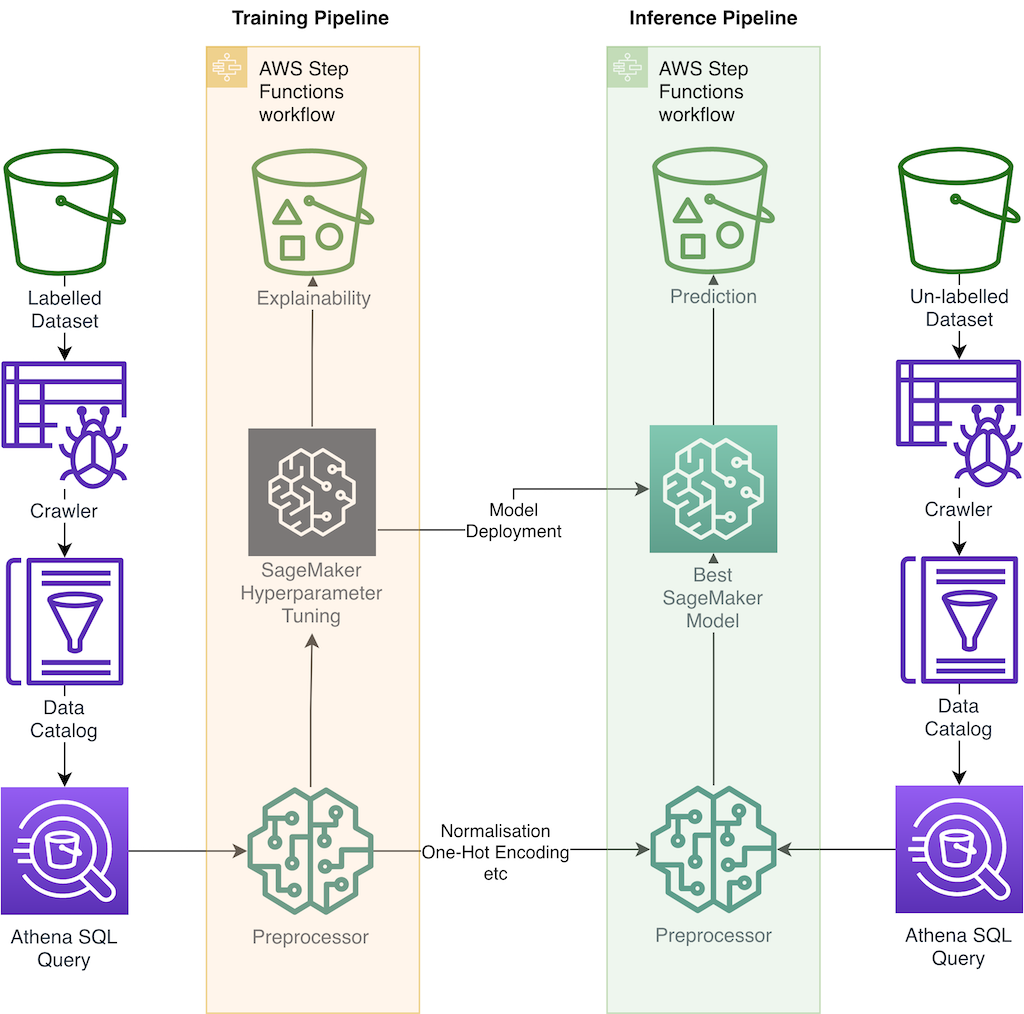

It provides:

- One-click training and inference pipelines for churn prediction

- Preprocessing, validation, hyperparameter tuning, and model explainability, all baked into the pipelines

- An Amazon Athena and AWS Glue backend that lets the pipeline scale on demand and as new data arrives

- An End to End implementation you can adapt for your own custom churn pipeline

An AWS Professional Services open source initiative | aws-proserve-opensource@amazon.com

- Step 1 - Modify the default parameters

Update the .env file in the main directory.

To run with Cox proportional hazards modeling instead of binary logloss, set COXPH to "positive".

```bash
S3_BUCKET_NAME="{YOUR_BUCKET_NAME}"
REGION="{YOUR_REGION}"
STACK_NAME="{YOUR_STACK_NAME}"
COXPH="{negative|positive}"
```

- Step 2 - Deploy the infrastructure

```bash
./standup.sh
```

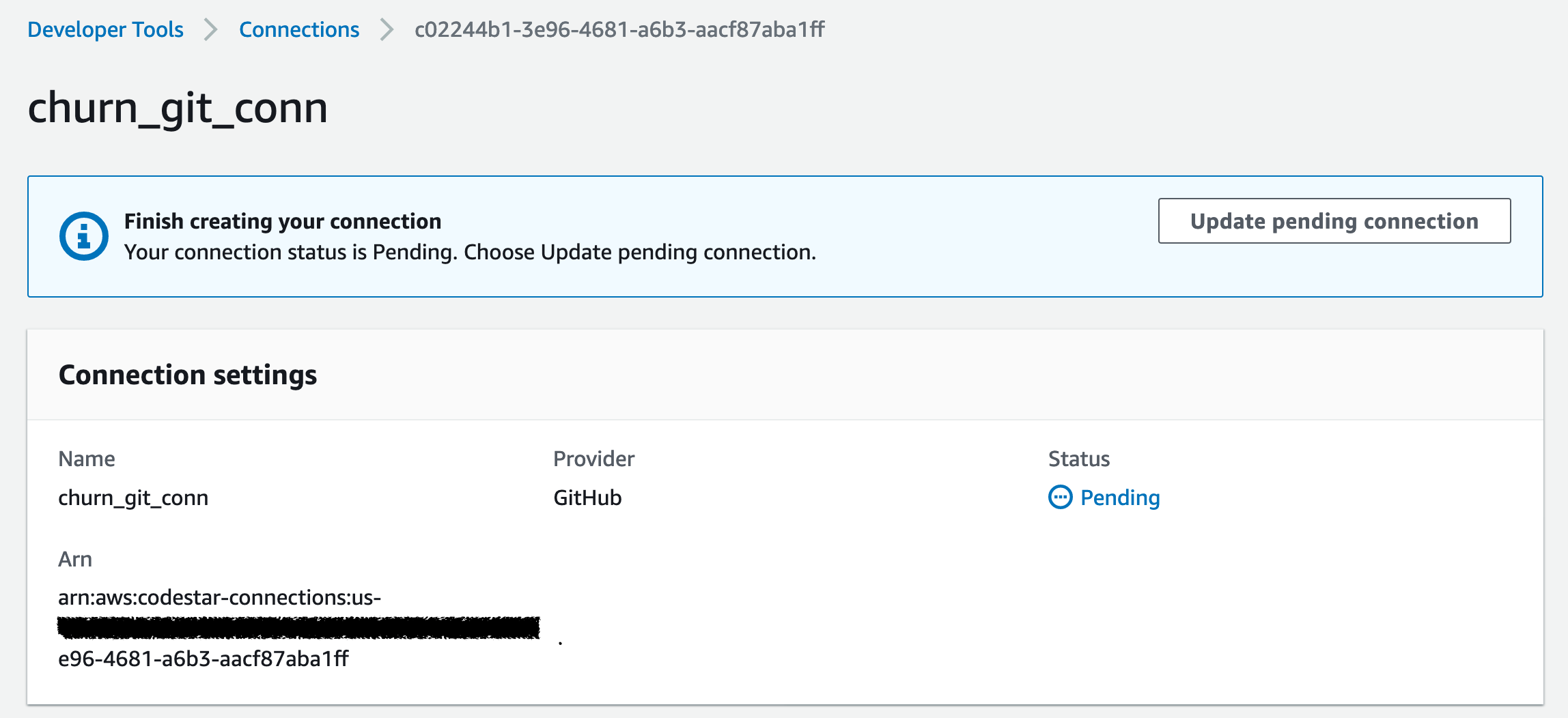
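Because the .env file from Step 1 is plain shell assignments (the cleanup step below sources it), a quick pre-flight check can catch values that are still `{PLACEHOLDER}`s before `./standup.sh` runs. This is a minimal sketch, assuming only the four variables shown in Step 1; `check_env` is a hypothetical helper, not part of this repo. It runs in a subshell so sourcing the file does not leak variables into your shell.

```bash
# Hypothetical pre-flight check (not shipped with this repo): confirms the
# Step 1 values in .env are filled in before ./standup.sh is run.
# The function body is a subshell, so sourcing .env does not leak variables.
check_env() (
  env_file="${1:-.env}"
  # .env holds plain shell assignments, so it can be sourced directly.
  # shellcheck disable=SC1090
  source "$env_file" || exit 1
  for var in S3_BUCKET_NAME REGION STACK_NAME COXPH; do
    value="${!var}"   # indirect expansion: value of the variable named in $var
    if [[ -z "$value" || "$value" == *"{"* ]]; then
      echo "error: $var is empty or still a {PLACEHOLDER}" >&2
      exit 1
    fi
  done
  # COXPH selects binary logloss ("negative") or Cox PH modeling ("positive").
  case "$COXPH" in
    negative|positive) ;;
    *) echo "error: COXPH must be \"negative\" or \"positive\", got \"$COXPH\"" >&2
       exit 1 ;;
  esac
  echo "ok: $env_file looks complete"
)
```

Usage: `check_env .env && ./standup.sh` only starts the deployment when the check passes.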

- Step 3 - Update the pending GitHub connections

To configure the GitHub connection manually, open the CodeDeploy console and go to Developer Tools -> Settings -> Connections. This is a one-time approval: update the pending connection, then either install a new GitHub App or choose an existing one.

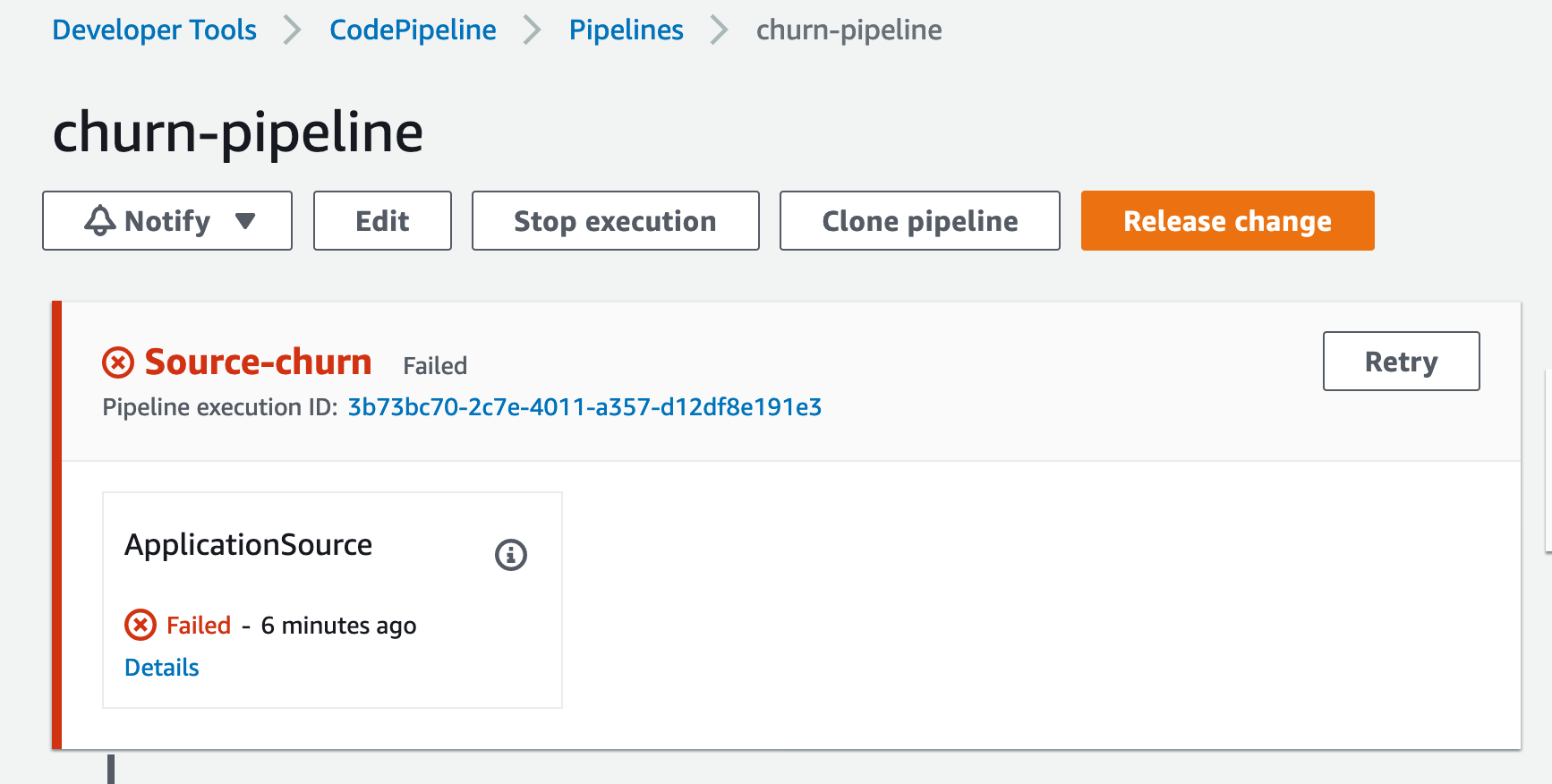
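To see from the command line whether any GitHub connection still needs this approval, the AWS CLI can list connections by status. A minimal sketch, assuming configured AWS CLI credentials; the function name `pending_github_connections` is hypothetical. A connection stays in the `PENDING` status until it is approved in the console; the CLI cannot complete the GitHub handshake for you.

```bash
# Sketch: list GitHub connections still in PENDING status, i.e. those that
# need the one-time approval under Developer Tools -> Settings -> Connections.
# Assumes configured AWS CLI credentials; the region argument is optional.
pending_github_connections() {
  local region="${1:-$(aws configure get region)}"
  # Prints one line per pending connection: name, then ARN.
  aws codestar-connections list-connections \
    --region "$region" \
    --provider-type-filter GitHub \
    --query "Connections[?ConnectionStatus=='PENDING'].[ConnectionName,ConnectionArn]" \
    --output text
}
```

An empty result after the approval suggests the connection is now `AVAILABLE` and the pipeline can pull from GitHub.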

- Step 4 - Choose "Release change" on the churn pipeline to run it for the first time
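"Release change" in the CodePipeline console corresponds to the `start-pipeline-execution` API, so the first release can also be started from the CLI. A minimal sketch; this README does not state the pipeline's name, so it is a required argument here (one way to look it up is `aws codepipeline list-pipelines`), and `release_change` is a hypothetical helper.

```bash
# Sketch: CLI equivalent of "Release change" in the CodePipeline console.
# The pipeline name is not given in this README, so it must be passed in.
release_change() {
  local pipeline_name="$1"
  if [[ -z "$pipeline_name" ]]; then
    echo "usage: release_change <pipeline-name>" >&2
    return 2
  fi
  # Prints the new pipelineExecutionId on success.
  aws codepipeline start-pipeline-execution \
    --name "$pipeline_name" \
    --query pipelineExecutionId \
    --output text
}
```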

- Step 5 - Once the build succeeds, navigate to Step Functions to verify completion

Note that the build also runs the Churn Training Pipeline.
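Instead of refreshing the Step Functions console, the latest execution of the training state machine can be polled until it leaves `RUNNING`. A minimal sketch: the state machine ARN is an input because this README does not name the training state machine (`aws stepfunctions list-state-machines --query "stateMachines[].[name,stateMachineArn]" --output text` is one way to find it), and both function names are hypothetical. It relies on `ListExecutions` returning the most recent execution first.

```bash
# Sketch: report the status of the most recent execution of a state machine.
latest_execution_status() {
  # ListExecutions returns the most recent execution first.
  aws stepfunctions list-executions \
    --state-machine-arn "$1" \
    --max-items 1 \
    --query 'executions[0].status' \
    --output text
}

# Poll every $2 seconds (default 30) until the latest execution is no longer
# RUNNING; print the final status and succeed only on SUCCEEDED.
wait_for_state_machine() {
  local arn="$1" interval="${2:-30}" status
  while true; do
    status=$(latest_execution_status "$arn") || return 1
    if [[ "$status" != "RUNNING" ]]; then
      break
    fi
    sleep "$interval"
  done
  echo "$status"
  [[ "$status" == "SUCCEEDED" ]]
}
```

A non-zero return (for example `FAILED` or `TIMED_OUT`) is the cue to open that execution in the console and inspect the failed step.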

- Step 6 - Trigger the inference pipeline. Batch inference can be automated with cron jobs or S3 triggers, depending on business needs.
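For the cron-style option, one approach is an Amazon EventBridge schedule rule that targets the `invokeInferStepFunction` Lambda used in this step. A minimal sketch under stated assumptions: the rule name `churn-batch-inference` and the daily 02:00 UTC schedule are illustrative, `schedule_inference` is a hypothetical helper, and the function is assumed to ignore its input, since a scheduled rule sends EventBridge's own event JSON rather than the `{ "": ""}` payload used in the manual command.

```bash
# Sketch: invoke invokeInferStepFunction on a schedule via an EventBridge rule.
# Assumptions: rule name and schedule are illustrative; credentials/region set.
schedule_inference() {
  local rule_name="${1:-churn-batch-inference}"   # hypothetical rule name
  local schedule="${2:-}"
  # EventBridge cron fields: minutes hours day-of-month month day-of-week year (UTC)
  [[ -n "$schedule" ]] || schedule='cron(0 2 * * ? *)'
  local function_name="invokeInferStepFunction"
  local function_arn rule_arn

  function_arn=$(aws lambda get-function --function-name "$function_name" \
    --query 'Configuration.FunctionArn' --output text) || return 1

  rule_arn=$(aws events put-rule --name "$rule_name" \
    --schedule-expression "$schedule" \
    --query RuleArn --output text) || return 1

  # Let EventBridge invoke the function, but only from this rule.
  aws lambda add-permission --function-name "$function_name" \
    --statement-id "${rule_name}-invoke" \
    --action lambda:InvokeFunction \
    --principal events.amazonaws.com \
    --source-arn "$rule_arn" >/dev/null || return 1

  aws events put-targets --rule "$rule_name" \
    --targets "Id=inference-lambda,Arn=${function_arn}"
}
```

Re-running the sketch will likely fail at `add-permission` because the statement ID already exists. Separately, if the manual `aws lambda invoke` command in this step fails with an "Invalid base64" error on AWS CLI v2, adding `--cli-binary-format raw-in-base64-out` lets `--payload` accept raw JSON.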

```bash
AWS_REGION=$(aws configure get region)

aws lambda --region ${AWS_REGION} invoke --function-name invokeInferStepFunction --payload '{ "": ""}' out
```

To remove the deployed resources, run:

```bash
./delete_resources.sh
```

This does not delete the S3 bucket. To delete the bucket and its contents, run:

```bash
source .env

accountnum=$(aws sts get-caller-identity --query Account --output text)

aws s3 rb s3://${S3_BUCKET_NAME}-${accountnum}-${REGION} --force
```

In addition, check out the blog posts:

- Deploying a Scalable End to End Customer Churn Prediction Solution with AWS!

- Retain Customers with Time to Event Modeling-Driven Intervention

For information on how to contribute, see here.