Based is an efficient architecture designed to recover attention-like capabilities (i.e., recall). We do so by combining two simple ideas:

- Short sliding window attention (e.g., window size 64), to model fine-grained local dependencies

- "Dense" and global linear attention, to model long-range dependencies

In this way, we aim to capture the same dependencies as Transformers in a 100% subquadratic model, with exact softmax attention locally and a softmax-approximating linear attention for all other tokens.
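The two components can be sketched in plain Python. This is a minimal illustration, not the repository's implementation: the function names are hypothetical, and the second-order Taylor feature map is an assumption about how the linear attention approximates softmax (this section does not specify the feature map). The linear attention keeps a fixed-size running state, so its cost grows linearly with sequence length, while the sliding window applies exact softmax attention to only the last `window` tokens.

```python
import math


def taylor_feature_map(x):
    """Assumed feature map: phi(q) . phi(k) = 1 + q.k + (q.k)^2 / 2."""
    second_order = [a * b / math.sqrt(2) for a in x for b in x]
    return [1.0] + list(x) + second_order


def linear_attention(queries, keys, values):
    """Causal linear attention using a running state instead of an n x n matrix."""
    d_feat = len(taylor_feature_map(keys[0]))
    d_val = len(values[0])
    kv_state = [[0.0] * d_val for _ in range(d_feat)]  # running sum of phi(k) v^T
    k_state = [0.0] * d_feat                           # running sum of phi(k)
    outputs = []
    for q, k, v in zip(queries, keys, values):
        # Fold the current token into the state before reading (causal, includes self).
        for i, fk in enumerate(taylor_feature_map(k)):
            k_state[i] += fk
            for j, vj in enumerate(v):
                kv_state[i][j] += fk * vj
        phi_q = taylor_feature_map(q)
        norm = sum(fq * s for fq, s in zip(phi_q, k_state))
        outputs.append([
            sum(phi_q[i] * kv_state[i][j] for i in range(d_feat)) / norm
            for j in range(d_val)
        ])
    return outputs


def sliding_window_attention(queries, keys, values, window):
    """Exact causal softmax attention restricted to the last `window` tokens."""
    outputs = []
    for t, q in enumerate(queries):
        start = max(0, t - window + 1)
        scores = [sum(a * b for a, b in zip(q, keys[s])) for s in range(start, t + 1)]
        top = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        outputs.append([
            sum(w * values[start + i][j] for i, w in enumerate(weights)) / total
            for j in range(len(values[0]))
        ])
    return outputs
```

With `window=1`, the sliding-window output at each position is just that position's value vector, and at the first position both functions return `values[0]`.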

We find this helps close many of the performance gaps between Transformers and recent subquadratic alternatives (matching perplexity is not all you need? [1, 2, 3]).

In this repo, please find code to (1) train new models and (2) evaluate existing checkpoints on downstream tasks.

Note. The code in this repository is tested on python=3.8.18 and torch=2.1.2. We recommend using these versions in a clean environment.

# clone the repository

git clone git@github.com:HazyResearch/based.git

cd based

# install torch

pip install torch==2.1.2 torchvision==0.16.2 torchaudio==2.1.2 --index-url https://download.pytorch.org/whl/cu118 # due to observed causal-conv1d dependency

# install based package

pip install -e .

We are releasing the following checkpoints for research, trained at the 360M and 1.3B parameter scales. Each checkpoint is trained on the same 10B tokens of the Pile corpus, using the same data order. The checkpoints are trained using the same code and infrastructure.

Use the code below to load the Based checkpoints:

import torch

from transformers import AutoTokenizer

from based.models.gpt import GPTLMHeadModel

tokenizer = AutoTokenizer.from_pretrained("gpt2")

model = GPTLMHeadModel.from_pretrained_hf("hazyresearch/based-360m").to("cuda", dtype=torch.float16)

| Architecture | Size | Tokens | WandB | HuggingFace | Config |
|---|---|---|---|---|---|
| Based | 360m | 10b | 02-20-based-360m | hazyresearch/based-360m | reference/based-360m.yaml |
| Based | 1.4b | 10b | 02-21-based-1b | hazyresearch/based-1b | reference/based-1b.yaml |
| Attention | 360m | 10b | 02-21-attn-360m | hazyresearch/attn-360m | reference/attn-360m.yaml |
| Attention | 1b | 10b | 02-25-attn-1b | hazyresearch/attn-1b | reference/attn-360m.yaml |
| Mamba | 360m | 10b | 02-21-mamba-360m | hazyresearch/mamba-360m | reference/mamba-360m.yaml |
| Mamba | 1.4b | 10b | 02-22-mamba-1b | hazyresearch/mamba-1b | reference/mamba-1b.yaml |

Warning. We are releasing these models for the purpose of efficient architecture research. Because they have not been instruction fine-tuned or audited, they are not intended for use in any downstream applications.

The following code will run text generation for a prompt and print out the response.

input = tokenizer.encode("If I take one more step, it will be", return_tensors="pt").to("cuda")

output = model.generate(input, max_length=20)

print(tokenizer.decode(output[0]))

Note. For the checkpoints from other models, you will need to install other dependencies and use slightly different code.
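Since the loading snippets in this README differ mainly in import path and class name (the Attention and Mamba variants are shown next), a small dispatch helper can select the right class from the checkpoint name. This is a hypothetical convenience sketch: `LOADERS`, `loader_for`, and `load_checkpoint` are not part of the repository, and the helper assumes checkpoint names follow the `hazyresearch/<architecture>-<size>` pattern used in the table above.

```python
import importlib

# Import path and class name per architecture, copied from the loading
# snippets in this README.
LOADERS = {
    "based": ("based.models.gpt", "GPTLMHeadModel"),
    "attn": ("based.models.transformer.gpt", "GPTLMHeadModel"),
    "mamba": ("based.models.mamba", "MambaLMHeadModel"),
}


def loader_for(checkpoint):
    """Return (module path, class name) for a name like 'hazyresearch/based-360m'."""
    model_name = checkpoint.split("/")[-1]   # e.g. 'based-360m'
    architecture = model_name.split("-")[0]  # e.g. 'based'
    if architecture not in LOADERS:
        raise ValueError(f"Unknown architecture in checkpoint name: {checkpoint}")
    return LOADERS[architecture]


def load_checkpoint(checkpoint, device="cuda"):
    """Import the matching class lazily and load the checkpoint onto `device`."""
    module_path, class_name = loader_for(checkpoint)
    model_class = getattr(importlib.import_module(module_path), class_name)
    return model_class.from_pretrained_hf(checkpoint).to(device)
```

The lazy import means `mamba-ssm` is only needed when a Mamba checkpoint is actually requested. The helper does not set a dtype, so pass the result through `.to(dtype=torch.float16)` if you want to match the Based snippet above.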

To load the Attention models, use the following code:

import torch

from transformers import AutoTokenizer

from based.models.transformer.gpt import GPTLMHeadModel

tokenizer = AutoTokenizer.from_pretrained("gpt2")

model = GPTLMHeadModel.from_pretrained_hf("hazyresearch/attn-360m").to("cuda")

To use the Mamba checkpoints, first run pip install mamba-ssm and then use the following code:

import torch

from transformers import AutoTokenizer

from based.models.mamba import MambaLMHeadModel

tokenizer = AutoTokenizer.from_pretrained("gpt2")

model = MambaLMHeadModel.from_pretrained_hf("hazyresearch/mamba-360m").to("cuda")

In order to train a new model with our code, you'll need to do a bit of additional setup:

# install train extra dependencies

pip install -e .[train]

# install apex

git clone https://github.com/NVIDIA/apex

cd apex

pip install -v --disable-pip-version-check --no-cache-dir --no-build-isolation --config-settings "--build-option=--cpp_ext" --config-settings "--build-option=--cuda_ext" ./

cd ..

# install kernel (to be replaced with new custom kernels)

cd train/csrc/causal_dot_prod/

python setup.py install

To train a new model, construct a config.yaml file at train/configs/experiment/. We are including the configs used to produce the pretrained checkpoints for the paper (released on HF) at train/configs/experiment/reference/.

You can launch a training job using the following command from the train/ directory, where you can modify the config name and number of GPUs (trainer.devices):

cd train/

python run.py experiment=reference/based-1b trainer.devices=8

In our paper, we evaluated on the Pile corpus, which is no longer available online, so the train/configs/experiment/reference/ configs are unfortunately not directly runnable. For your use, we are including an example config that would train on the WikiText103 language modeling data. You can launch using the following script:

cd train/

python run.py experiment=example/based-360m trainer.devices=8
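If you launch several runs, it can help to build the command programmatically. The sketch below only assembles the same arguments shown in the commands above; `build_train_command` and its `extra_overrides` parameter are hypothetical names and not part of the repository.

```python
import shlex


def build_train_command(experiment, devices, extra_overrides=()):
    """Build the `python run.py ...` argument list for one training run."""
    command = [
        "python",
        "run.py",
        f"experiment={experiment}",
        f"trainer.devices={devices}",
    ]
    command.extend(extra_overrides)  # any further key=value overrides, passed through as-is
    return command


# Print the command for each experiment config; run it from the train/ directory.
for experiment in ["example/based-360m"]:
    print(shlex.join(build_train_command(experiment, devices=8)))
```

The returned list can also be passed directly to `subprocess.run(..., cwd="train")` instead of being printed.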

You can adapt the training dataset by adding a new dataset config file under train/configs/datamodule/. Follow the examples in wikitext103.yaml. Once you've constructed the yaml file for your new dataset, go to the experiment config (e.g. train/configs/experiment/example/based-360m.yaml) and update the name of the datamodule under override datamodule to the filename of your new dataset yaml file.

Be sure to update the checkpointing directory in the config prior to launching training.

Note that this training code is from: https://github.com/Dao-AILab/flash-attention/tree/main/training

In our paper, we evaluate pretrained language models on a standard suite of benchmarks from the LM Evaluation Harness, as well as a suite of three recall-intensive tasks:

- SWDE (Info. extraction). A popular information extraction benchmark for semi-structured data. SWDE includes raw HTML documents from 8 Movie and 5 University websites (e.g., IMDB, US News) and annotations for 8-274 attributes per website (e.g., Movie runtime). HuggingFace: hazyresearch/based-swde

- FDA (Info. extraction). A popular information extraction benchmark for unstructured data. The FDA setting contains 16 gold attributes and 100 PDF documents, which are up to 20 pages long, randomly sampled from FDA 510(k). HuggingFace: hazyresearch/based-fda [WIP - coming soon]. Here is a temporary link to FDA data in the meantime: HF FDA.

- SQUAD-Completion (Document-QA). We find that the original SQUAD dataset is challenging for our models without instruction fine-tuning. So we introduce a modified version of SQUAD where questions are reworded as next-token prediction tasks. For example, "What is the capital of France?" becomes "The capital of France is". HuggingFace: hazyresearch/based-squad [WIP - coming soon]
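To make the completion-style format concrete, here is a rough sketch of how one such example could be assembled and scored. Both the prompt layout (passage followed by the reworded question) and the containment-based scoring rule are assumptions for illustration, and `build_prompt` and `contains_answer` are hypothetical helpers, not the repository's evaluation code.

```python
def build_prompt(context, completion_stub):
    """Join the passage and the reworded question into one next-token prompt."""
    return f"{context.strip()} {completion_stub.strip()}"


def contains_answer(generation, answers):
    """Return True if any reference answer appears in the generation (case-insensitive)."""
    text = generation.lower()
    return any(answer.lower() in text for answer in answers)


prompt = build_prompt(
    "France is a country in Western Europe. Its capital and largest city is Paris.",
    "The capital of France is",
)
# A model would continue `prompt`; here a hand-written continuation stands in for it.
print(contains_answer(" Paris, which is also its largest city.", ["Paris"]))
```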

Under evaluate, we have a clone of EleutherAI's lm-evaluation-harness that includes these new tasks and provides scripts for running all the evaluations from the paper. The following instructions can be used to reproduce our results on the LM-Eval harness using the pretrained checkpoints.

cd evaluate

# init the submodule and install

git submodule init

git submodule update

pip install -e .

We provide a script evaluate/launch.py that launches evaluations on the checkpoints we've released.

For example, running the following from the evaluate folder will evaluate the 360M Based, Mamba, and Attention models on the SWDE dataset.

python launch.py \
    --task swde \
    --model "hazyresearch/based-360m" \
    --model "hazyresearch/mamba-360m" \
    --model "hazyresearch/attn-360m" \
    --limit=100

Optionally, if you have access to multiple GPUs, you can pass the -p flag to run each evaluation in parallel.

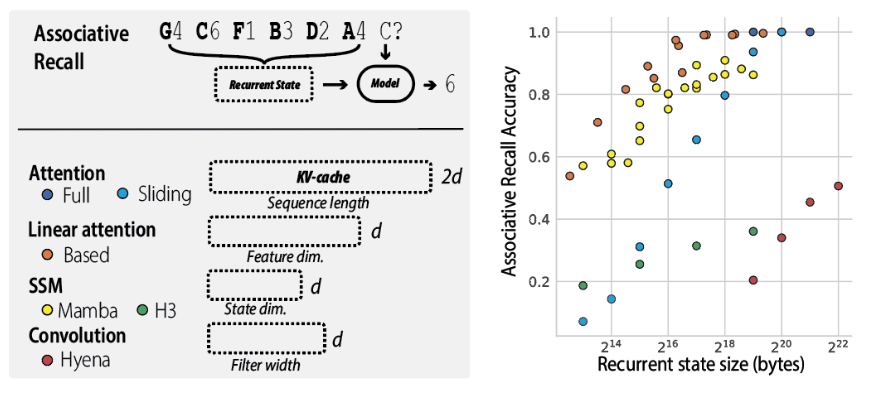

In our paper, we demonstrate the recall-throughput tradeoff using a synthetic associative recall task (see Figure 2, below, and Figure 3 in the paper).

The code for reproducing these figures is provided in a separate repository: HazyResearch/zoology. Follow the setup instructions in the Zoology README. The instructions for reproducing the figures are provided in zoology/experiments. For example, you can create the figure above using:

python -m zoology.launch zoology/experiments/arxiv24_based_figure2/configs.py -p
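For readers unfamiliar with associative recall, the sketch below generates one toy example: a sequence of key-value token pairs followed by a query key, where the target is the value that was paired with that key earlier in the sequence. This is only an illustration of the task format under simplified assumptions. It is not Zoology's data generator, and `make_recall_example` and its parameters are hypothetical.

```python
import random


def make_recall_example(num_pairs, vocab_size, seed=0):
    """Build one example: key-value pairs, then a query key whose value must be recalled."""
    rng = random.Random(seed)
    # Keys are distinct tokens in [0, vocab_size).
    keys = rng.sample(range(vocab_size), num_pairs)
    # Values come from a disjoint range so they cannot be confused with keys.
    values = [rng.randrange(vocab_size, 2 * vocab_size) for _ in keys]
    query_index = rng.randrange(num_pairs)
    # Interleave as k1 v1 k2 v2 ... and append the query key at the end.
    sequence = [token for pair in zip(keys, values) for token in pair]
    sequence.append(keys[query_index])
    return sequence, values[query_index]


sequence, target = make_recall_example(num_pairs=4, vocab_size=16, seed=1)
print(sequence, "->", target)
```

A model solves the example if, given `sequence`, it predicts `target` as the next token. Longer sequences with more pairs require more recall capacity.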

We include the kernels evaluated in the Based paper under based/benchmarking/. We provide additional details on the CUDA releases in the README in this folder. Stay tuned!

This repo contains work based on the following papers. Please consider citing if you found the work or code useful:

# Based

@article{arora2024simple,

title={Simple linear attention language models balance the recall-throughput tradeoff},

author={Arora, Simran and Eyuboglu, Sabri and Zhang, Michael and Timalsina, Aman and Alberti, Silas and Zinsley, Dylan and Zou, James and Rudra, Atri and Ré, Christopher},

journal={arXiv:2402.18668},

year={2024}

}

# Hedgehog (Linear attention)

@article{zhang2024hedgehog,

title={The Hedgehog \& the Porcupine: Expressive Linear Attentions with Softmax Mimicry},

author={Zhang, Michael and Bhatia, Kush and Kumbong, Hermann and R{\'e}, Christopher},

journal={arXiv preprint arXiv:2402.04347},

year={2024}

}

# Zoology (BaseConv, Synthetics, Recall Problem)

@article{arora2023zoology,

title={Zoology: Measuring and Improving Recall in Efficient Language Models},

author={Arora, Simran and Eyuboglu, Sabri and Timalsina, Aman and Johnson, Isys and Poli, Michael and Zou, James and Rudra, Atri and Ré, Christopher},

journal={arXiv:2312.04927},

year={2023}

}

This project was made possible by a number of other open source projects; please cite if you use their work. Notably:

- Our training code and sliding window implementation are based on Tri Dao's FlashAttention.

- We use EleutherAI's lm-evaluation-harness for evaluation.

- We use the causal dot product kernel from Fast Transformers in preliminary training.

- We use the conv1d kernel from Mamba.

Models in this project were trained using compute provided by:

- Together.ai

- Google Cloud Platform through Stanford HAI

Please reach out with feedback and questions!