- 💎 Neural Networks

- 📜 Tasks

- ⚙️ Methods

- 🎁 Datasets

- ⚔ Benchmarks

- 🌍 Applications

- 💻 Software

- 📈 Overview

- 💬 Opinions

Notations

✅ - Checked

⭕ - To Check

📜 - Survey

1998 - [LeNet]: Gradient-based learning applied to document recognition

2012 - [AlexNet] ImageNet Classification with Deep Convolutional Neural Networks ✅

2013 - Learning Hierarchical Features for Scene Labeling

2013 - [R-CNN] Rich feature hierarchies for accurate object detection and semantic segmentation

Object detection performance, as measured on the canonical PASCAL VOC dataset, has plateaued in the last few years. The best-performing methods are complex ensemble systems that typically combine multiple low-level image features with high-level context. In this paper, we propose a simple and scalable detection algorithm that improves mean average precision (mAP) by more than 30% relative to the previous best result on VOC 2012---achieving a mAP of 53.3%. Our approach combines two key insights: (1) one can apply high-capacity convolutional neural networks (CNNs) to bottom-up region proposals in order to localize and segment objects and (2) when labeled training data is scarce, supervised pre-training for an auxiliary task, followed by domain-specific fine-tuning, yields a significant performance boost. Since we combine region proposals with CNNs, we call our method R-CNN: Regions with CNN features. We also compare R-CNN to OverFeat, a recently proposed sliding-window detector based on a similar CNN architecture. We find that R-CNN outperforms OverFeat by a large margin on the 200-class ILSVRC2013 detection dataset.

2014 - [OverFeat]: Integrated Recognition, Localization and Detection using Convolutional Networks

2014 - [Seq2Seq]: Sequence to Sequence Learning with Neural Networks

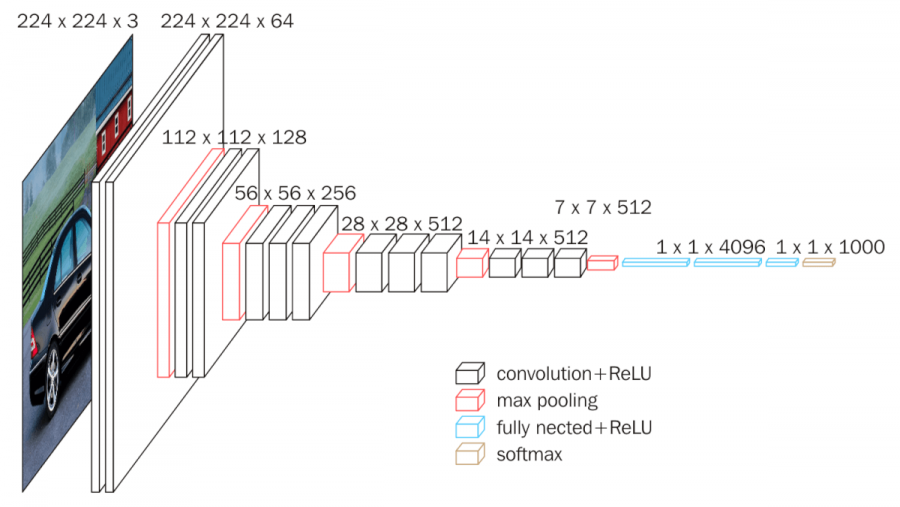

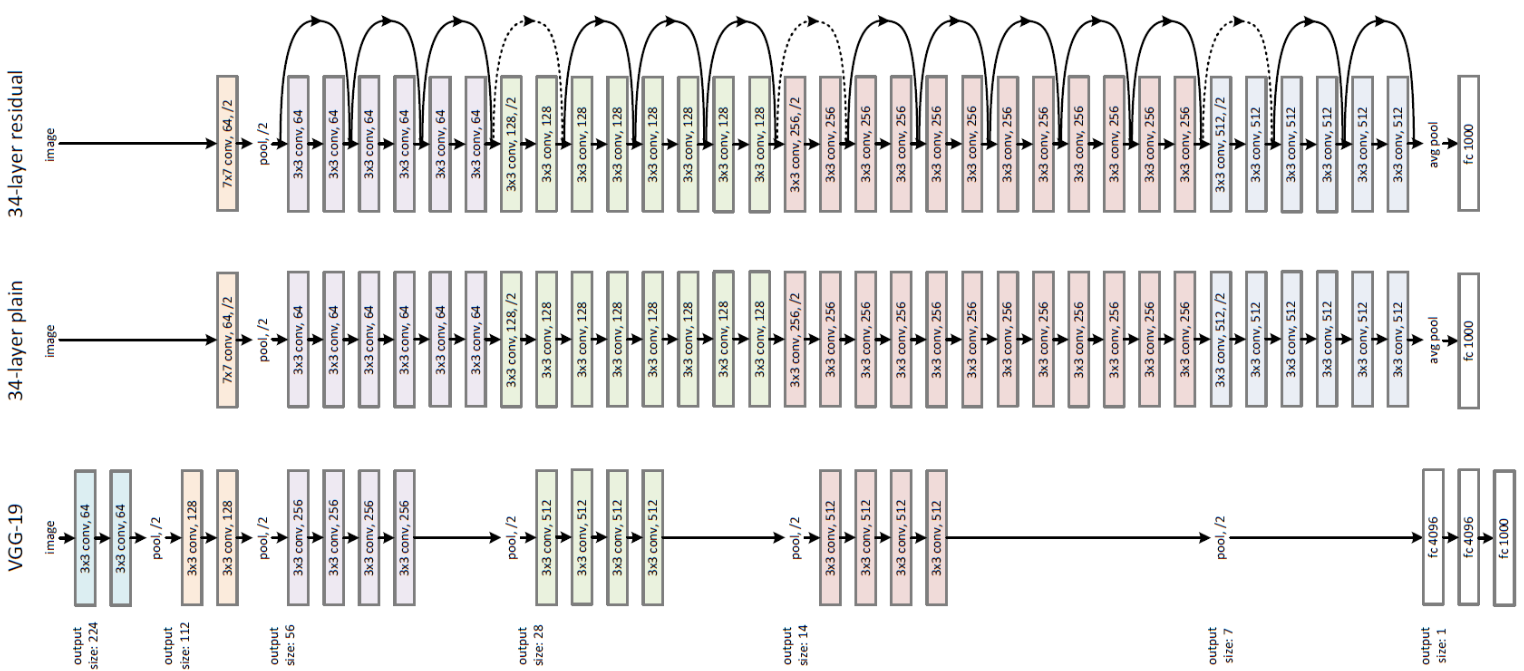

2014 - [VGG] Very Deep Convolutional Networks for Large-Scale Image Recognition ✅

In this work we investigate the effect of the convolutional network depth on its accuracy in the large-scale image recognition setting. Our main contribution is a thorough evaluation of networks of increasing depth using an architecture with very small (3x3) convolution filters, which shows that a significant improvement on the prior-art configurations can be achieved by pushing the depth to 16-19 weight layers. These findings were the basis of our ImageNet Challenge 2014 submission, where our team secured the first and the second places in the localisation and classification tracks respectively. We also show that our representations generalise well to other datasets, where they achieve state-of-the-art results. We have made our two best-performing ConvNet models publicly available to facilitate further research on the use of deep visual representations in computer vision.

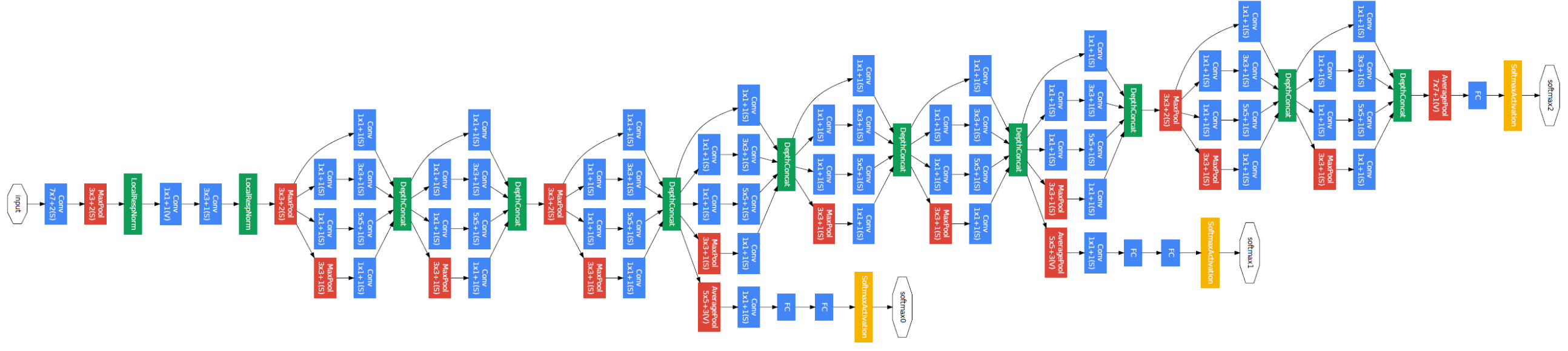

2014 - [GoogleNet] Going Deeper with Convolutions ✅

We propose a deep convolutional neural network architecture codenamed "Inception", which was responsible for setting the new state of the art for classification and detection in the ImageNet Large-Scale Visual Recognition Challenge 2014 (ILSVRC 2014). The main hallmark of this architecture is the improved utilization of the computing resources inside the network. This was achieved by a carefully crafted design that allows for increasing the depth and width of the network while keeping the computational budget constant. To optimize quality, the architectural decisions were based on the Hebbian principle and the intuition of multi-scale processing. One particular incarnation used in our submission for ILSVRC 2014 is called GoogLeNet, a 22 layers deep network, the quality of which is assessed in the context of classification and detection.

2015 - [ResNet] Deep Residual Learning for Image Recognition ✅

Deeper neural networks are more difficult to train. We present a residual learning framework to ease the training of networks that are substantially deeper than those used previously. We explicitly reformulate the layers as learning residual functions with reference to the layer inputs, instead of learning unreferenced functions. We provide comprehensive empirical evidence showing that these residual networks are easier to optimize, and can gain accuracy from considerably increased depth. On the ImageNet dataset we evaluate residual nets with a depth of up to 152 layers---8x deeper than VGG nets but still having lower complexity. An ensemble of these residual nets achieves 3.57% error on the ImageNet test set. This result won the 1st place on the ILSVRC 2015 classification task. We also present analysis on CIFAR-10 with 100 and 1000 layers. The depth of representations is of central importance for many visual recognition tasks. Solely due to our extremely deep representations, we obtain a 28% relative improvement on the COCO object detection dataset. Deep residual nets are foundations of our submissions to ILSVRC & COCO 2015 competitions, where we also won the 1st places on the tasks of ImageNet detection, ImageNet localization, COCO detection, and COCO segmentation.

2015 - Spatial Transformer Networks

Convolutional Neural Networks define an exceptionally powerful class of models, but are still limited by the lack of ability to be spatially invariant to the input data in a computationally and parameter efficient manner. In this work we introduce a new learnable module, the Spatial Transformer, which explicitly allows the spatial manipulation of data within the network. This differentiable module can be inserted into existing convolutional architectures, giving neural networks the ability to actively spatially transform feature maps, conditional on the feature map itself, without any extra training supervision or modification to the optimisation process. We show that the use of spatial transformers results in models which learn invariance to translation, scale, rotation and more generic warping, resulting in state-of-the-art performance on several benchmarks, and for a number of classes of transformations.

2016 - [WRN]: Wide Residual Networks [github]

2015 - [FCN] Fully Convolutional Networks for Semantic Segmentation

2015 - [U-net]: Convolutional networks for biomedical image segmentation ✅

2016 - [Xception]: Deep Learning with Depthwise Separable Convolutions Implementation

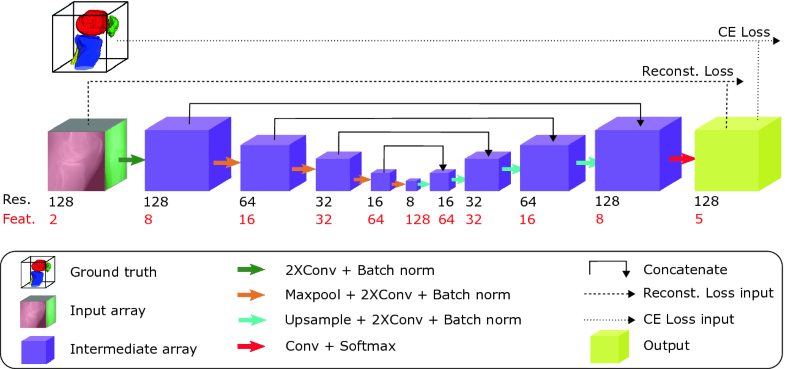

2016 - [V-Net]: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation

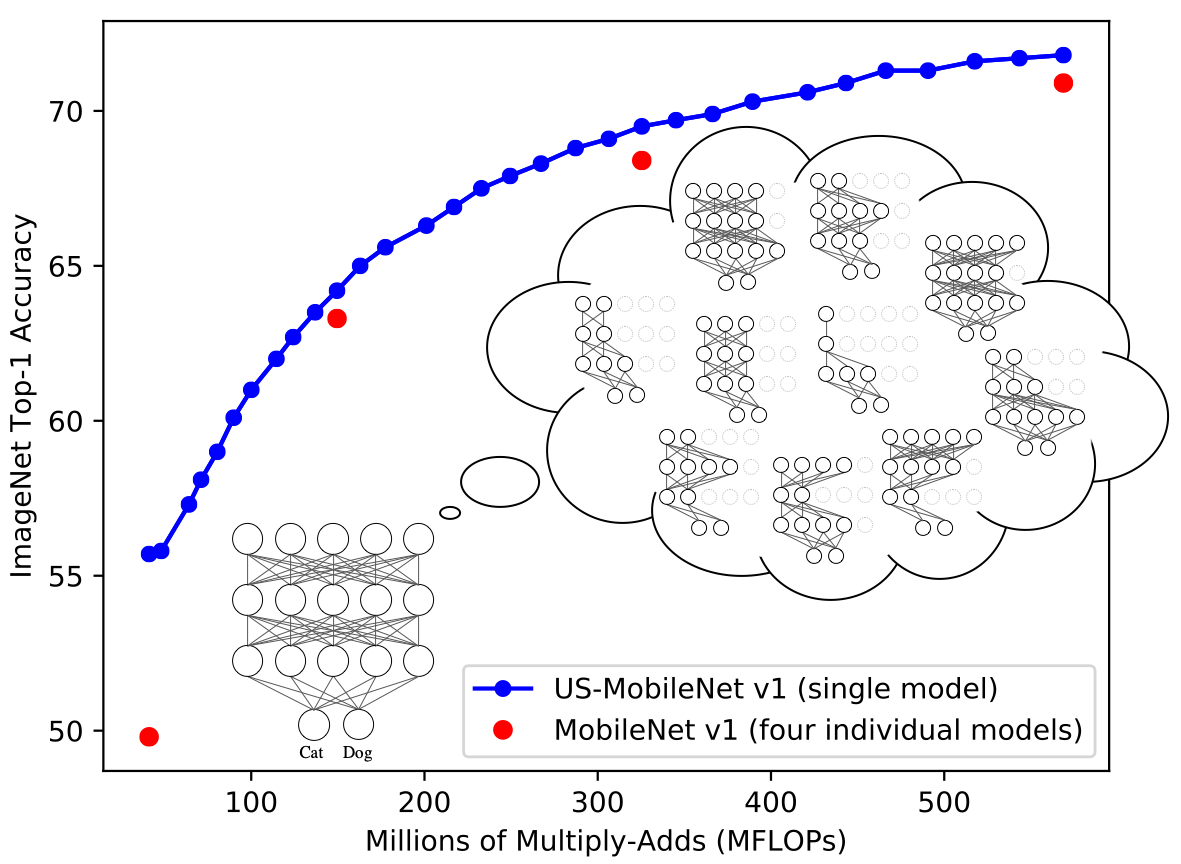

2017 - [MobileNets]: Efficient Convolutional Neural Networks for Mobile Vision Applications

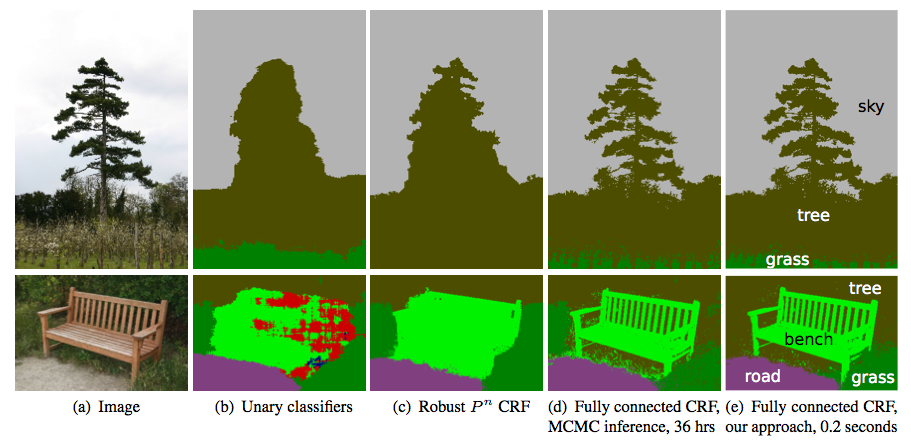

Efficient Inference in Fully Connected CRFs with Gaussian Edge Potentials

2018 - [TernausNet]: U-Net with VGG11 Encoder Pre-Trained on ImageNet for Image Segmentation ✅

2018 - CubeNet: Equivariance to 3D Rotation and Translation[github], [video] ⭕

2018 - Deep Rotation Equivariant Network[github] ⭕

2018 - ArcFace: Additive Angular Margin Loss for Deep Face Recognition

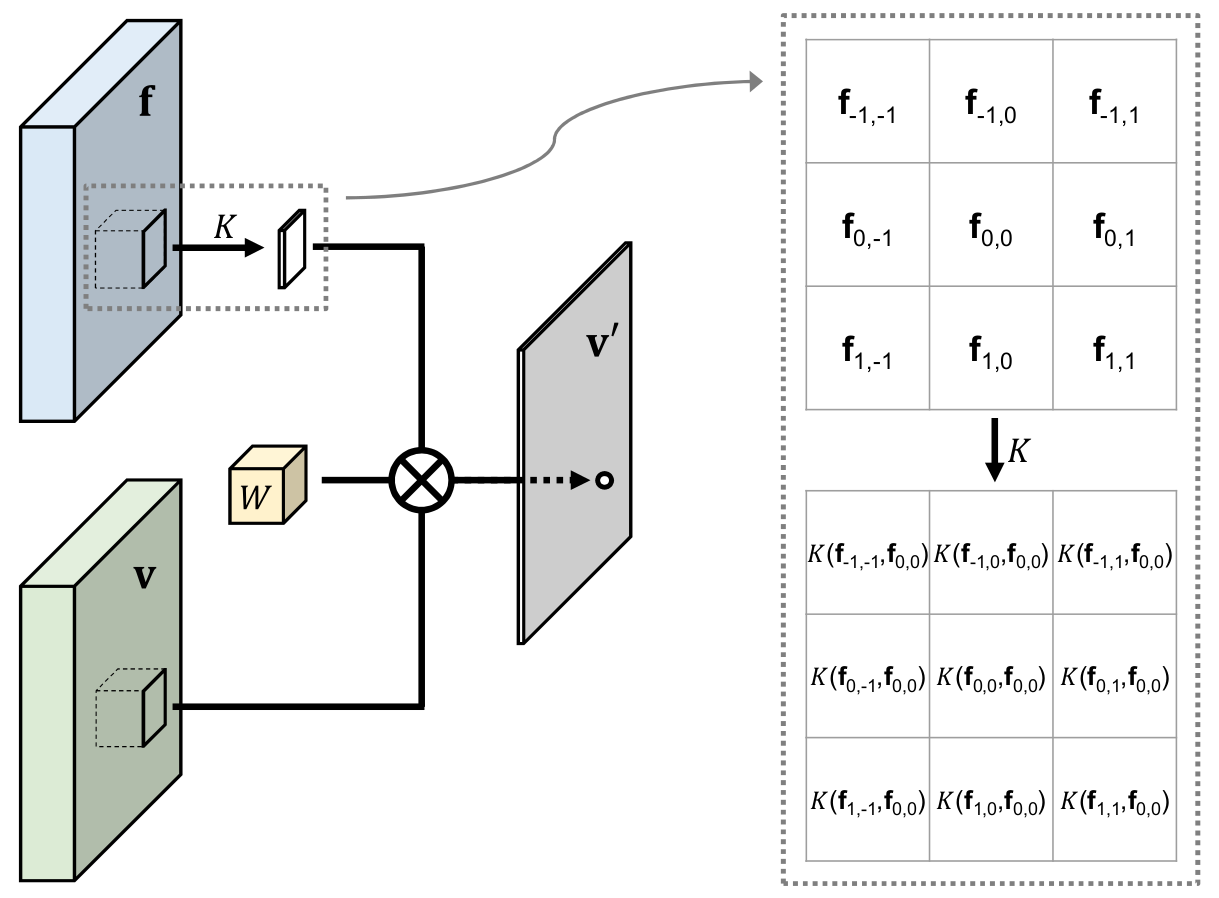

2019 - [PacNet]: Pixel-Adaptive Convolutional Neural Networks

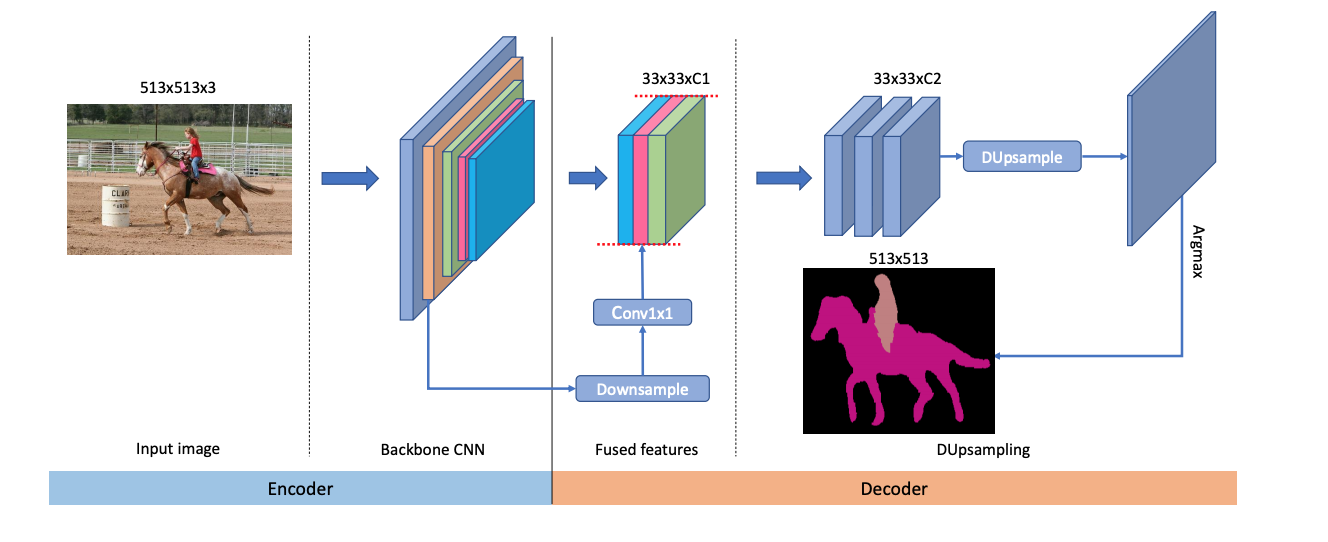

2019 - Decoders Matter for Semantic Segmentation: Data-Dependent Decoding Enables Flexible Feature Aggregation [github] ⭕

2019 - Panoptic Feature Pyramid Networks ⭕

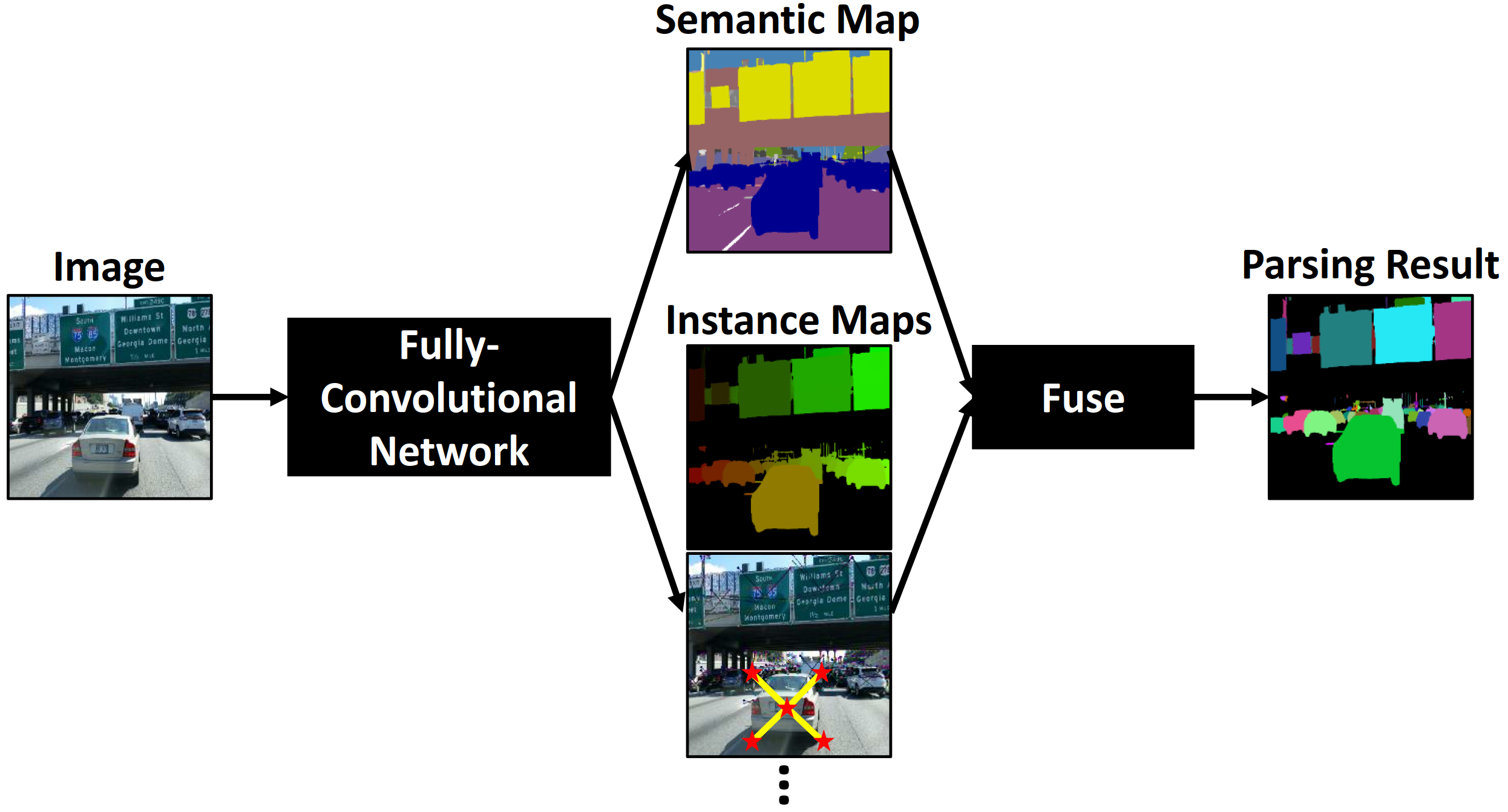

2019 - [DeeperLab]: Single-Shot Image Parser ⭕

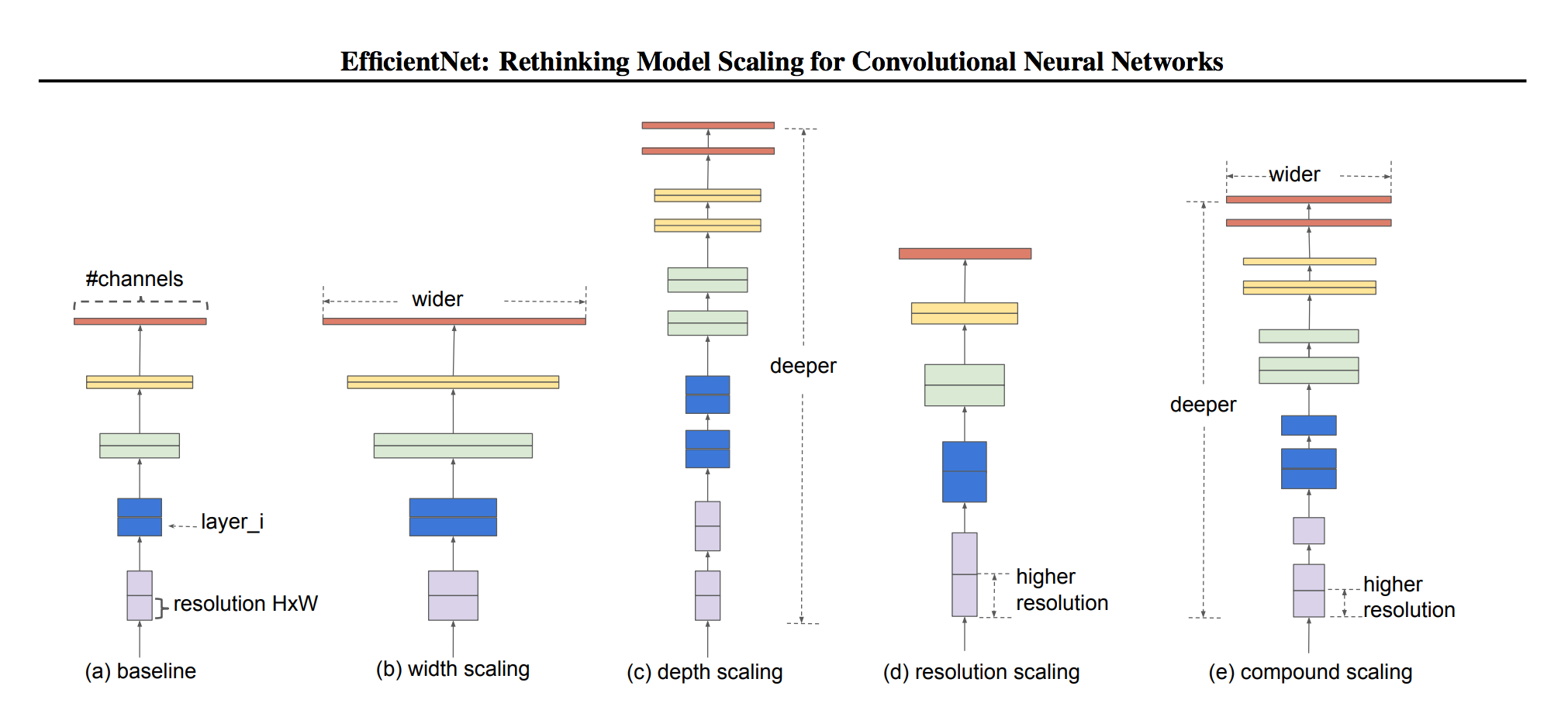

2019 - [EfficientNet]: Rethinking Model Scaling for Convolutional Neural Networks ⭕

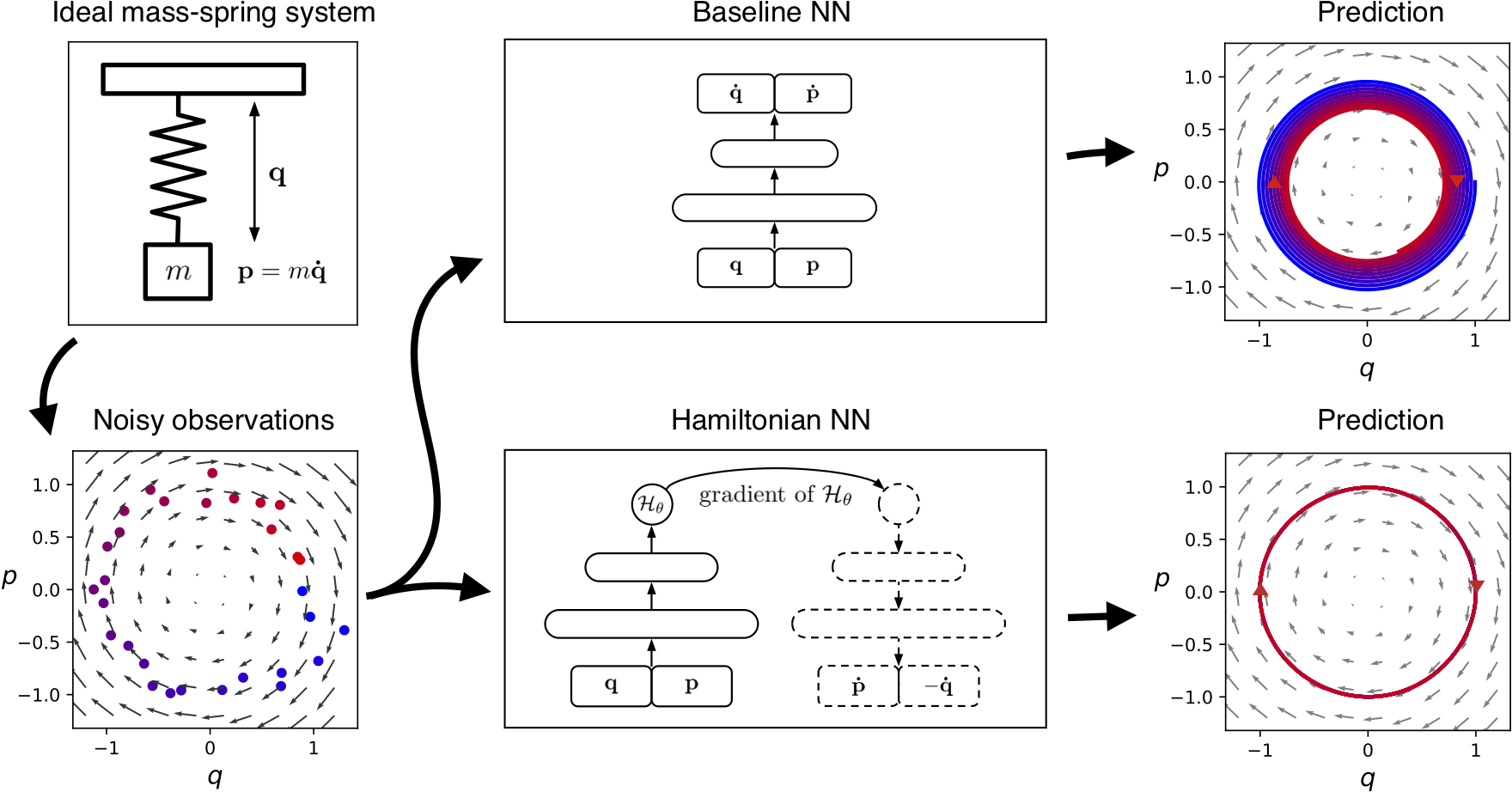

2019 - Hamiltonian Neural Networks

Even though neural networks enjoy widespread use, they still struggle to learn the basic laws of physics. How might we endow them with better inductive biases? In this paper, we draw inspiration from Hamiltonian mechanics to train models that learn and respect exact conservation laws in an unsupervised manner. We evaluate our models on problems where conservation of energy is important, including the two-body problem and pixel observations of a pendulum. Our model trains faster and generalizes better than a regular neural network. An interesting side effect is that our model is perfectly reversible in time.

2020 - Neural Operator: Graph Kernel Network for Partial Differential Equations

2021 - Learning Neural Network Subspaces

Recent observations have advanced our understanding of the neural network optimization landscape, revealing the existence of (1) paths of high accuracy containing diverse solutions and (2) wider minima offering improved performance. Previous methods observing diverse paths require multiple training runs. In contrast we aim to leverage both property (1) and (2) with a single method and in a single training run. With a similar computational cost as training one model, we learn lines, curves, and simplexes of high-accuracy neural networks. These neural network subspaces contain diverse solutions that can be ensembled, approaching the ensemble performance of independently trained networks without the training cost. Moreover, using the subspace midpoint boosts accuracy, calibration, and robustness to label noise, outperforming Stochastic Weight Averaging.

2014 - [SPP-Net] Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition

2016 - [ParseNet]: Looking Wider to See Better

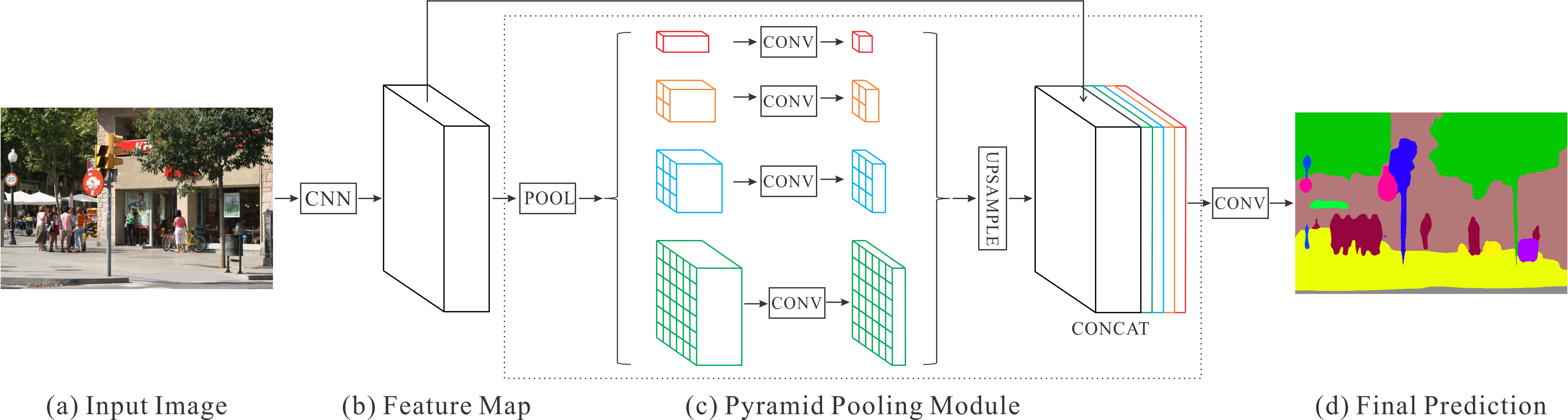

2016 - [PSPNet]: Pyramid Scene Parsing Network [github] ✅

2015 - Zoom Better to See Clearer: Human and Object Parsing with Hierarchical Auto-Zoom Net

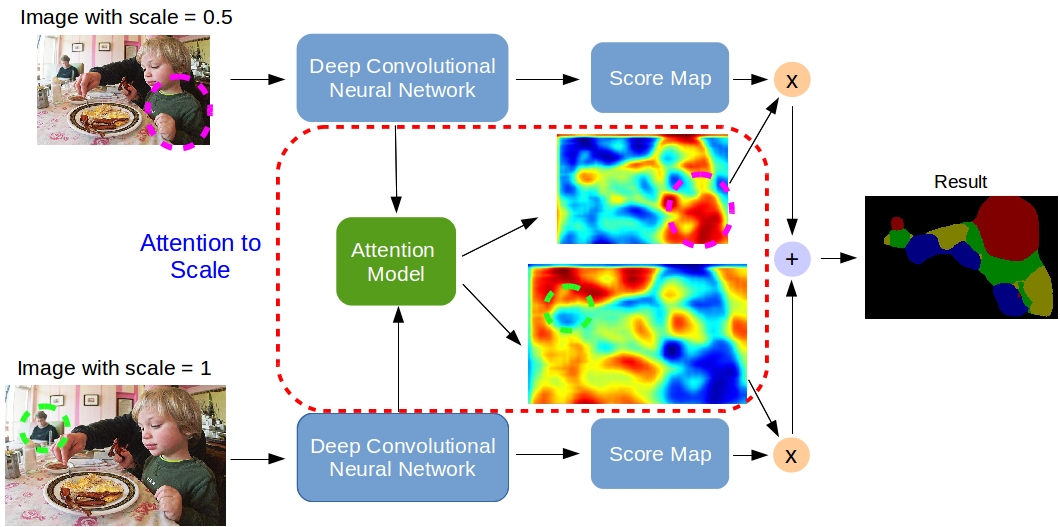

2016 - Attention to Scale: Scale-aware Semantic Image Segmentation

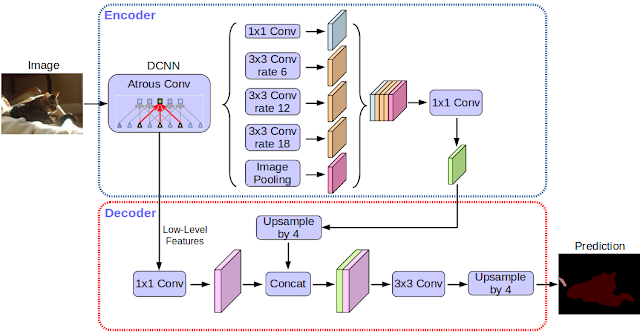

2017 - Rethinking Atrous Convolution for Semantic Image Segmentation

2017 - Feature Pyramid Networks for Object Detection

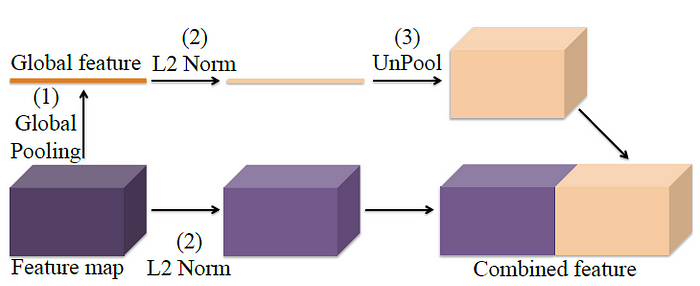

2019 - [FastFCN]: Rethinking Dilated Convolution in the Backbone for Semantic Segmentation [github] ✅

2019 - Making Convolutional Networks Shift-Invariant Again

2019 - [LEDNet]: A Lightweight Encoder-Decoder Network for Real-Time Semantic Segmentation

2019 - Feature Pyramid Encoding Network for Real-time Semantic Segmentation

2019 - Efficient Segmentation: Learning Downsampling Near Semantic Boundaries

2019 - PointRend: Image Segmentation as Rendering

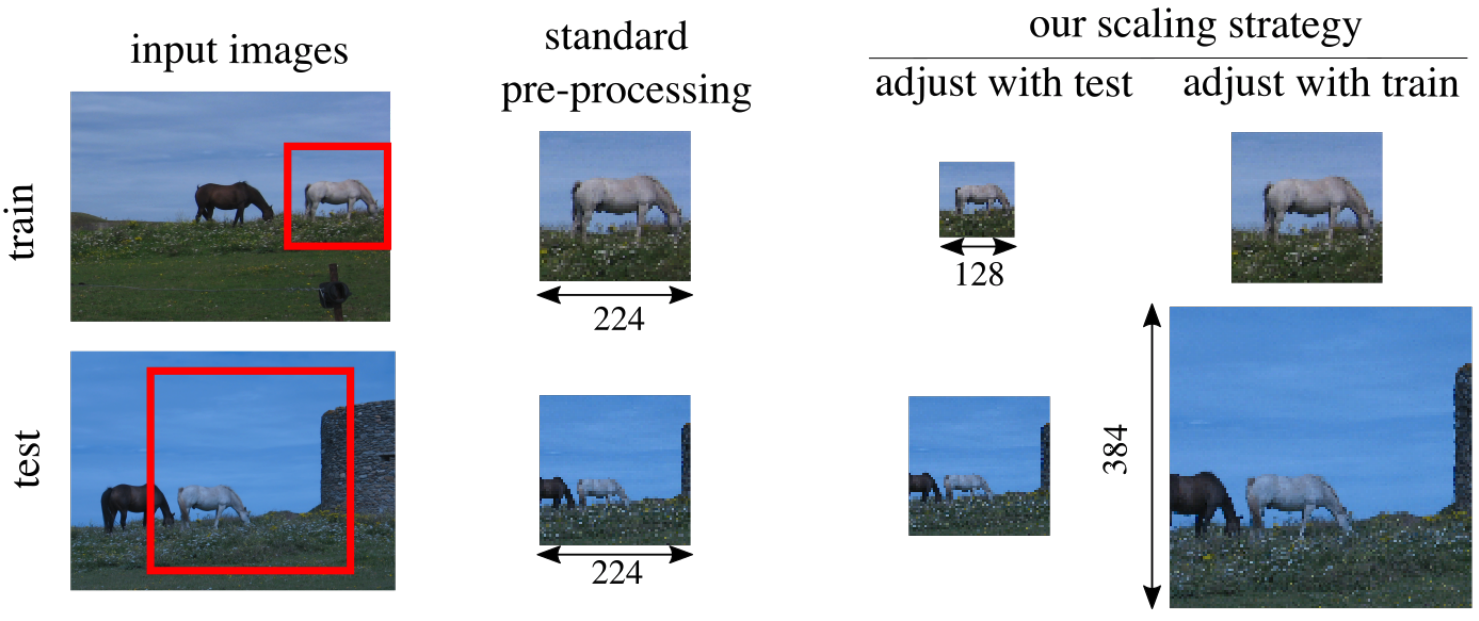

2019 - Fixing the train-test resolution discrepancy ✅

This paper first shows that existing augmentations induce a significant discrepancy between the typical size of the objects seen by the classifier at train and test time.

We experimentally validate that, for a target test resolu- tion, using a lower train resolution offers better classification at test time.

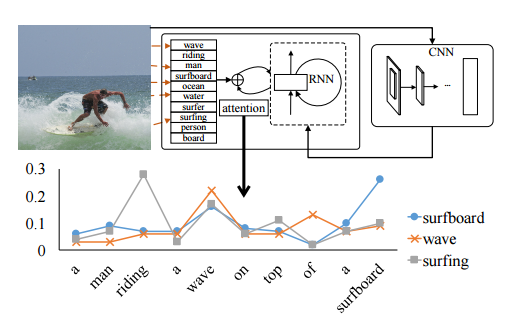

2016 - Image Captioning with Semantic Attention

2018 - [EncNet] Context Encoding for Semantic Segmentation [github] ⭕

2018 - Tell Me Where to Look: Guided Attention Inference Network

2005 - Image Parsing: Unifying Segmentation, Detection, and Recognition ⭕

2013 - Complexity of Representation and Inference in Compositional Models with Part Sharing

2017 - Interpretable Convolutional Neural Networks ⭕

2019 - Local Relation Networks for Image Recognition

2017 - Teaching Compositionality to CNNs ⭕

2020 - Concept Bottleneck Models ⭕

2017 - Dynamic Routing Between Capsules ⭕

2020 - SURVEY: A Survey on Visual Transformer 📜

2021 - SURVEY: Transformers in Vision: A Survey 📜

2023 - SURVEY: A Comprehensive Survey on Applications of Transformers for Deep Learning Tasks 📜

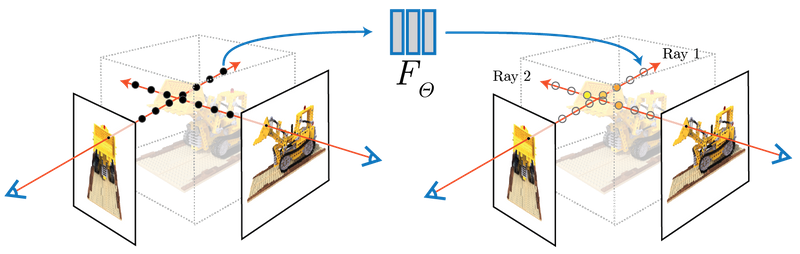

2020 - [NeRF]: Representing Scenes as Neural Radiance Fields for View Synthesis ⭕

We present a method that achieves state-of-the-art results for synthesizing novel views of complex scenes by optimizing an underlying continuous volumetric scene function using a sparse set of input views. Our algorithm represents a scene using a fully-connected (non-convolutional) deep network, whose input is a single continuous 5D coordinate (spatial location (x,y,z) and viewing direction (θ,ϕ)) and whose output is the volume density and view-dependent emitted radiance at that spatial location. We synthesize views by querying 5D coordinates along camera rays and use classic volume rendering techniques to project the output colors and densities into an image. Because volume rendering is naturally differentiable, the only input required to optimize our representation is a set of images with known camera poses. We describe how to effectively optimize neural radiance fields to render photorealistic novel views of scenes with complicated geometry and appearance, and demonstrate results that outperform prior work on neural rendering and view synthesis. View synthesis results are best viewed as videos, so we urge readers to view our supplementary video for convincing comparisons.

2020 - [BLOG] NeRF Explosion 2020

2020 - [SURVEY] State of the Art on Neural Rendering 📜

2020 - AutoInt: Automatic Integration for Fast Neural Volume Rendering

Numerical integration is a foundational technique in scientific computing and is at the core of many computer vision applications. Among these applications, implicit neural volume rendering has recently been proposed as a new paradigm for view synthesis, achieving photorealistic image quality. However, a fundamental obstacle to making these methods practical is the extreme computational and memory requirements caused by the required volume integrations along the rendered rays during training and inference. Millions of rays, each requiring hundreds of forward passes through a neural network are needed to approximate those integrations with Monte Carlo sampling. Here, we propose automatic integration, a new framework for learning efficient, closed-form solutions to integrals using implicit neural representation networks. For training, we instantiate the computational graph corresponding to the derivative of the implicit neural representation. The graph is fitted to the signal to integrate. After optimization, we reassemble the graph to obtain a network that represents the antiderivative. By the fundamental theorem of calculus, this enables the calculation of any definite integral in two evaluations of the network. Using this approach, we demonstrate a greater than 10x improvement in computation requirements, enabling fast neural volume rendering.

2020 - A Curvature and Density‐based Generative Representation of Shapes

This paper introduces a generative model for 3D surfaces based on a representation of shapes with mean curvature and metric, which are invariant under rigid transformation. Hence, compared with existing 3D machine learning frameworks, our model substantially reduces the influence of translation and rotation. In addition, the local structure of shapes will be more precisely captured, since the curvature is explicitly encoded in our model. Specifically, every surface is first conformally mapped to a canonical domain, such as a unit disk or a unit sphere. Then, it is represented by two functions: the mean curvature half‐density and the vertex density, over this canonical domain. Assuming that input shapes follow a certain distribution in a latent space, we use the variational autoencoder to learn the latent space representation. After the learning, we can generate variations of shapes by randomly sampling the distribution in the latent space. Surfaces with triangular meshes can be reconstructed from the generated data by applying isotropic remeshing and spin transformation, which is given by Dirac equation. We demonstrate the effectiveness of our model on datasets of man‐made and biological shapes and compare the results with other methods.

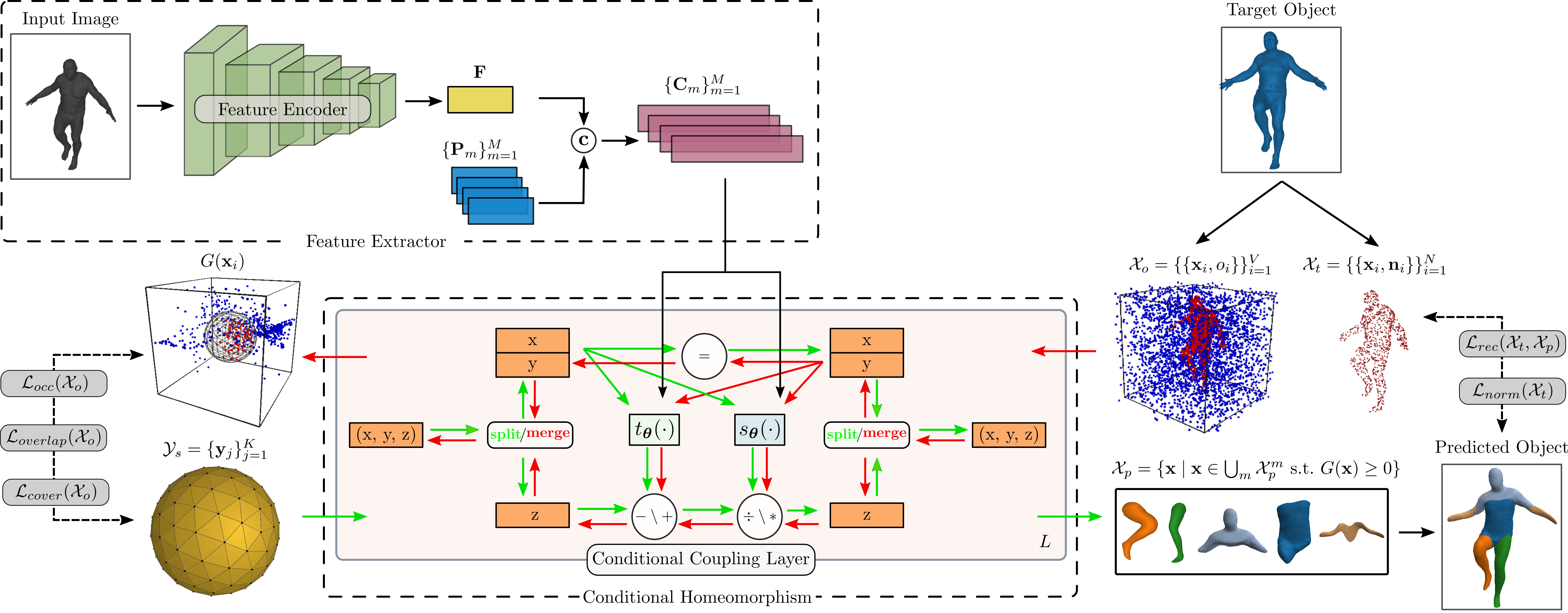

2021 - Neural Parts: Learning Expressive 3D Shape Abstractions with Invertible Neural Networks

We propose the Neural Logic Machine (NLM), a neural-symbolic architecture for both inductive learning and logic reasoning. NLMs exploit the power of both neural networks---as function approximators, and logic programming---as a symbolic processor for objects with properties, relations, logic connectives, and quantifiers. After being trained on small-scale tasks (such as sorting short arrays), NLMs can recover lifted rules, and generalize to large-scale tasks (such as sorting longer arrays). In our experiments, NLMs achieve perfect generalization in a number of tasks, from relational reasoning tasks on the family tree and general graphs, to decision making tasks including sorting arrays, finding shortest paths, and playing the blocks world. Most of these tasks are hard to accomplish for neural networks or inductive logic programming alone.

Random search for hyper-parameter optimisation

Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift

[Adam]: A Method for Stochastic Optimization

[Dropout]: A Simple Way to Prevent Neural Networks from Overfitting

Discriminative Unsupervised Feature Learning with Exemplar Convolutional Neural Networks ✅

Multi-Scale Context Aggregation by Dilated Convolutions https://arxiv.org/abs/1511.07122

2017 - The Marginal Value of Adaptive Gradient Methods in Machine Learning

(i) Adaptive methods find solutions that generalize worse than those found by non-adaptive methods.

(ii) Even when the adaptive methods achieve the same training loss or lower than non-adaptive methods, the development or test performance is worse.

(iii) Adaptive methods often display faster initial progress on the training set, but their performance quickly plateaus on the development set.

(iv) Though conventional wisdom suggests that Adam does not require tuning, we find that tuning the initial learning rate and decay scheme for Adam yields significant improvements over its default settings in all cases.

DARTS: Differentiable Architecture Search https://arxiv.org/abs/1806.09055

Bag of Tricks for Image Classification with Convolutional Neural Networks ✅

2018 - Tune: A Research Platform for Distributed Model Selection and Training [github]

2017 - Equilibrium Propagation: Bridging the Gap Between Energy-Based Models and Backpropagation

2017 - Understanding deep learning requires rethinking generalization ⭕

2018 - An Empirical Model of Large-Batch Training

2019 - Training Neural Networks with Local Error Signals [github] ⭕

2019 - Switchable Normalization for Learning-to-Normalize Deep Representation

2019 - Revisiting Small Batch Training for Deep Neural Networks

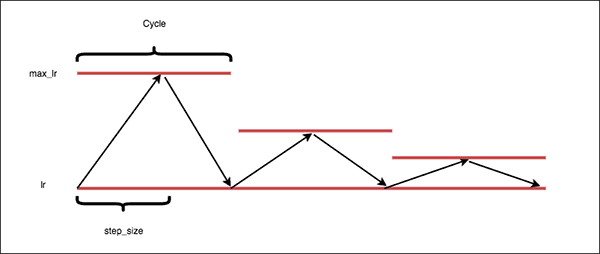

2019 - Cyclical Learning Rates for Training Neural Networks

2019 - DeepOBS: A Deep Learning Optimizer Benchmark Suite

2019 - A Recipe for Training Neural Networks. Andrey Karpathi Blog

2020 - Fantastic Generalization Measures and Where to Find Them ✅

The most direct and principled approach for studying generalization in deep learning is to prove a generalization bound which is typically an upper bound on the test error based on some quantity that can be calculated on the training set.

Kendall’s Rank-Correlation Coefficient: Given a set of models resulted by training with hyperparameters in the set Θ, their associated generalization gap {g(θ)| θ ∈ Θ}, and their respective values of the measure {µ(θ)| θ ∈ Θ}, our goal is to analyze how consistent a measure (e.g. L2 norm of network weights) is with the empirically observed generalization. If complexity and generalization are independent, the coefficient becomes zero

VC-dimension as well as the number of parameters are negatively correlated with generalization gap which confirms the widely known empirical observation that overparametrization improves generalization in deep learning.

These results confirm the general understanding that larger margin, lower cross-entropy and higher entropy would lead to better generalization

we observed that the initial phase (to reach cross-entropy value of 0.1) of the optimization is negatively correlated with the ??speed of optimization?? (error?) for both τ and Ψ. This would suggest that the difficulty of optimization during the initial phase of the optimization benefits the final generalization.

Towards the end of the training, the variance of the gradients also captures a particular type of “flatness” of the local minima. This measure is surprisingly predictive of the generalization both in terms of τ and Ψ, and more importantly, is positively correlated across every type of hyperparameter.

There are mixed results about how the optimization speed is relevant to generalization. On one hand we know that adding Batch Normalization or using shortcuts in residual architectures help both optimization and generalization.On the other hand, there are empirical results showing that adaptive optimization methods that are faster, usually generalize worse (Wilson et al., 2017b).

Based on empirical observations made by the community as a whole, the canonical ordering we give to each of the hyper-parameter categories are as follows:

- Batchsize: smaller batchsize leads to smaller generalization gap

- Depth: deeper network leads to smaller generalization gap

- Width: wider network leads to smaller generalization gap

- Dropout: The higher the dropout (≤ 0.5) the smaller the generalization gap

- Weight decay: The higher the weight decay (smaller than the maximum for each optimizer) the smaller the generalization gap

- Learning rate: The higher the learning rate (smaller than the maximum for each optimizer) the smaller the generalization gap

- Optimizer: Generalization gap of Momentum SGD < Generalization gap of Adam < Generalization gap of RMSProp

2020 - Descending through a Crowded Valley -- Benchmarking Deep Learning Optimizers

2020 - Do Wide and Deep Networks Learn the Same Things? Uncovering How Neural Network Representations Vary with Width and Depth

2021 - Revisiting ResNets: Improved Training and Scaling Strategies

Novel computer vision architectures monopolize the spotlight, but the impact of the model architecture is often conflated with simultaneous changes to training methodology and scaling strategies. Our work revisits the canonical ResNet (He et al., 2015) and studies these three aspects in an effort to disentangle them. Perhaps surprisingly, we find that training and scaling strategies may matter more than architectural changes, and further, that the resulting ResNets match recent state-of-the-art models. We show that the best performing scaling strategy depends on the training regime and offer two new scaling strategies: (1) scale model depth in regimes where overfitting can occur (width scaling is preferable otherwise); (2) increase image resolution more slowly than previously recommended (Tan & Le, 2019). Using improved training and scaling strategies, we design a family of ResNet architectures, ResNet-RS, which are 1.7x - 2.7x faster than EfficientNets on TPUs, while achieving similar accuracies on ImageNet. In a large-scale semi-supervised learning setup, ResNet-RS achieves 86.2% top-1 ImageNet accuracy, while being 4.7x faster than EfficientNet NoisyStudent. The training techniques improve transfer performance on a suite of downstream tasks (rivaling state-of-the-art self-supervised algorithms) and extend to video classification on Kinetics-400. We recommend practitioners use these simple revised ResNets as baselines for future research.

2013 - Do Deep Nets Really Need to be Deep?

2015 - Learning both Weights and Connections for Efficient Neural Networks

2015 - Distilling the Knowledge in a Neural Network ⭕

2017 - Learning Efficient Convolutional Networks through Network Slimming - [github] ⭕

2018 - Rethinking the Value of Network Pruning ✅

For all state-of-the-art structured pruning algorithms we examined, fine-tuning a pruned model only gives comparable or worse performance than training that model with randomly initialized weights. For pruning algorithms which assume a predefined target network architecture, one can get rid of the full pipeline and directly train the target network from scratch.

Our observations are consistent for multiple network architectures, datasets, and tasks, which imply that:

- training a large, over-parameterized model is often not necessary to obtain an efficient final model

- learned “important” weights of the large model are typically not useful for the small pruned model

- the pruned architecture itself, rather than a set of inherited “important” weights, is more crucial to the efficiency in the final model, which suggests that in some cases pruning can be useful as an architecture search paradigm.

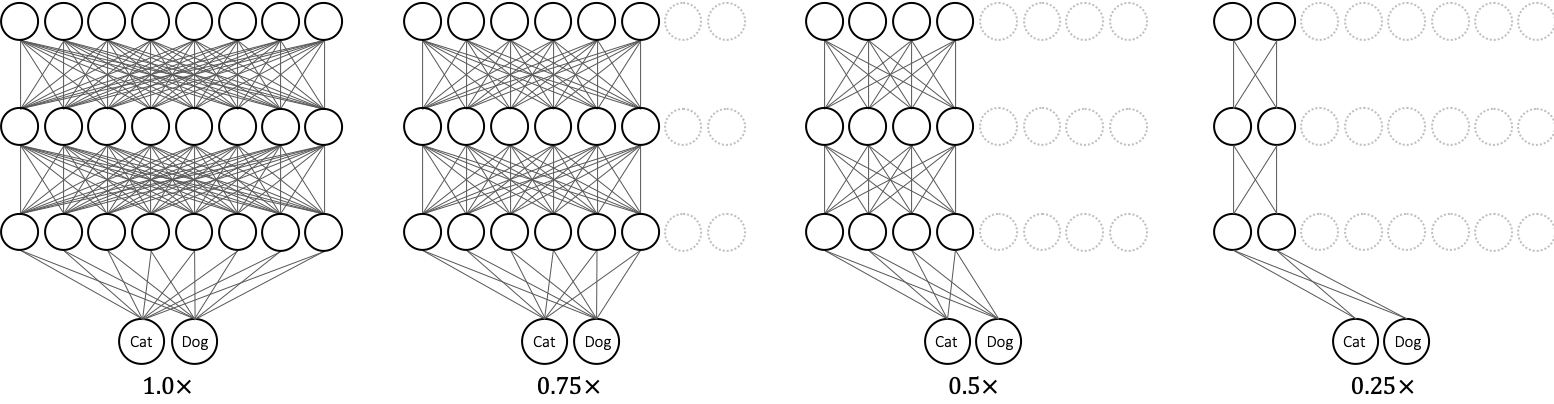

2018 - Slimmable Neural Networks

2019 - Universally Slimmable Networks and Improved Training Techniques

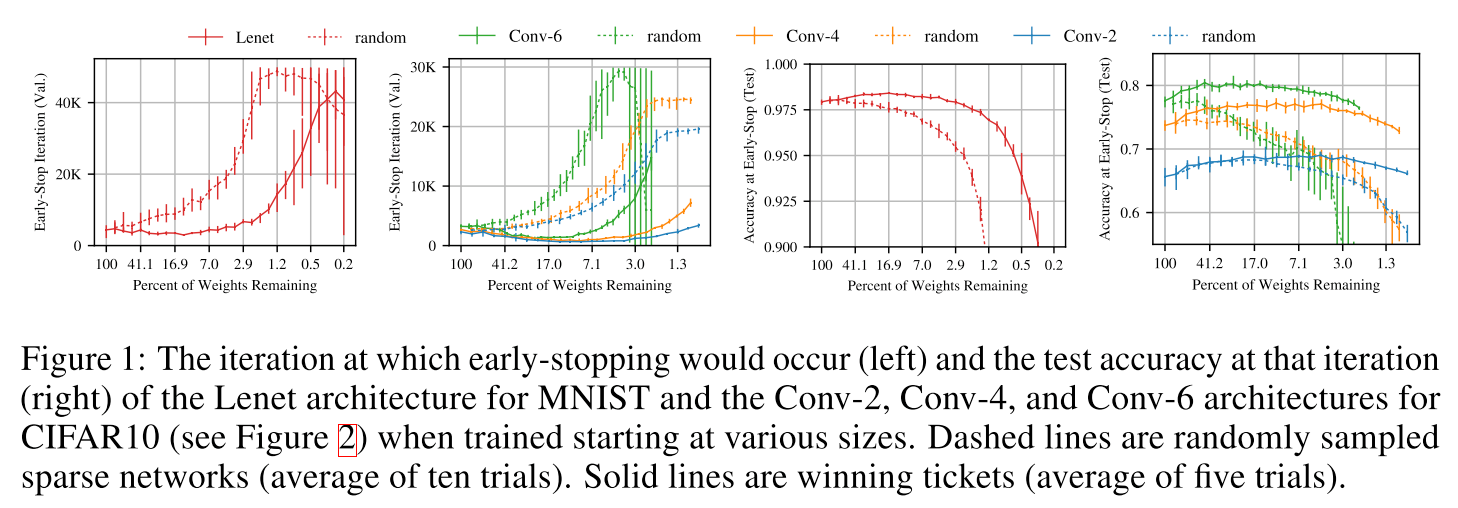

2019 - The Lottery Ticket Hypothesis: Finding Sparse, Trainable Neural Networks ✅

Based on these results, we articulate the lottery ticket hypothesis: dense, randomly-initialized, feed-forward networks contain subnetworks (winning tickets) that—when trained in isolation— reach test accuracy comparable to the original network in a similar number of iterations.

The winning tickets we find have won the initialization lottery: their connections have initial weights that make training particularly effective.

2019 - AutoSlim: Towards One-Shot Architecture Search for Channel Numbers

2015 - Visualizing and Understanding Recurrent Networks

2016 - Discovering Causal Signals in Images

2016 - [Grad-CAM]: Why did you say that? Visual Explanations from Deep Networks via Gradient-based Localization [github]

2017 - Visualizing the Loss Landscape of Neural Nets

2019 - [SURVEY] Visual Analytics in Deep Learning: An Interrogative Survey for the Next Frontiers 📜

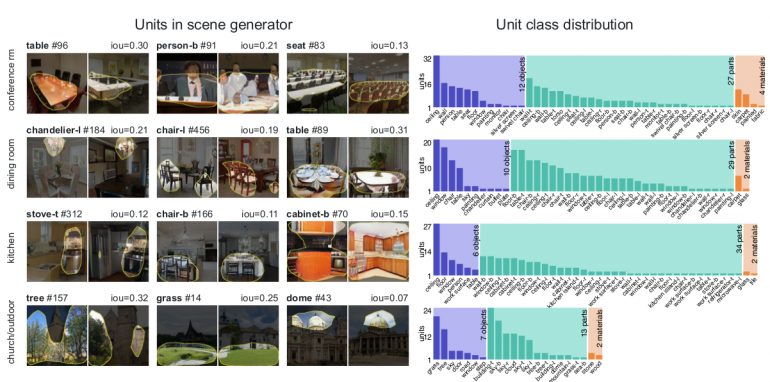

2018 - GAN Dissection: Visualizing and Understanding Generative Adversarial Networks

[Netron ] Visualizer for deep learning and machine learning models

2019 - [Distill]: Computing Receptive Fields of Convolutional Neural Networks

2019 - Unmasking Clever Hans Predictors and Assessing What Machines Really Learn

Current learning machines have successfully solved hard application problems, reaching high accuracy and displaying seemingly "intelligent" behavior. Here we apply recent techniques for explaining decisions of state-of-the-art learning machines and analyze various tasks from computer vision and arcade games. This showcases a spectrum of problem-solving behaviors ranging from naive and short-sighted, to well-informed and strategic. We observe that standard performance evaluation metrics can be oblivious to distinguishing these diverse problem solving behaviors. Furthermore, we propose our semi-automated Spectral Relevance Analysis that provides a practically effective way of characterizing and validating the behavior of nonlinear learning machines. This helps to assess whether a learned model indeed delivers reliably for the problem that it was conceived for. Furthermore, our work intends to add a voice of caution to the ongoing excitement about machine intelligence and pledges to evaluate and judge some of these recent successes in a more nuanced manner.

2020 - Actionable Attribution Maps for Scientific Machine Learning

2020 - Shortcut Learning in Deep Neural Networks

Deep learning has triggered the current rise of artificial intelligence and is the workhorse of today's machine intelligence. Numerous success stories have rapidly spread all over science, industry and society, but its limitations have only recently come into focus. In this perspective we seek to distil how many of deep learning's problem can be seen as different symptoms of the same underlying problem: shortcut learning. Shortcuts are decision rules that perform well on standard benchmarks but fail to transfer to more challenging testing conditions, such as real-world scenarios. Related issues are known in Comparative Psychology, Education and Linguistics, suggesting that shortcut learning may be a common characteristic of learning systems, biological and artificial alike. Based on these observations, we develop a set of recommendations for model interpretation and benchmarking, highlighting recent advances in machine learning to improve robustness and transferability from the lab to real-world applications.

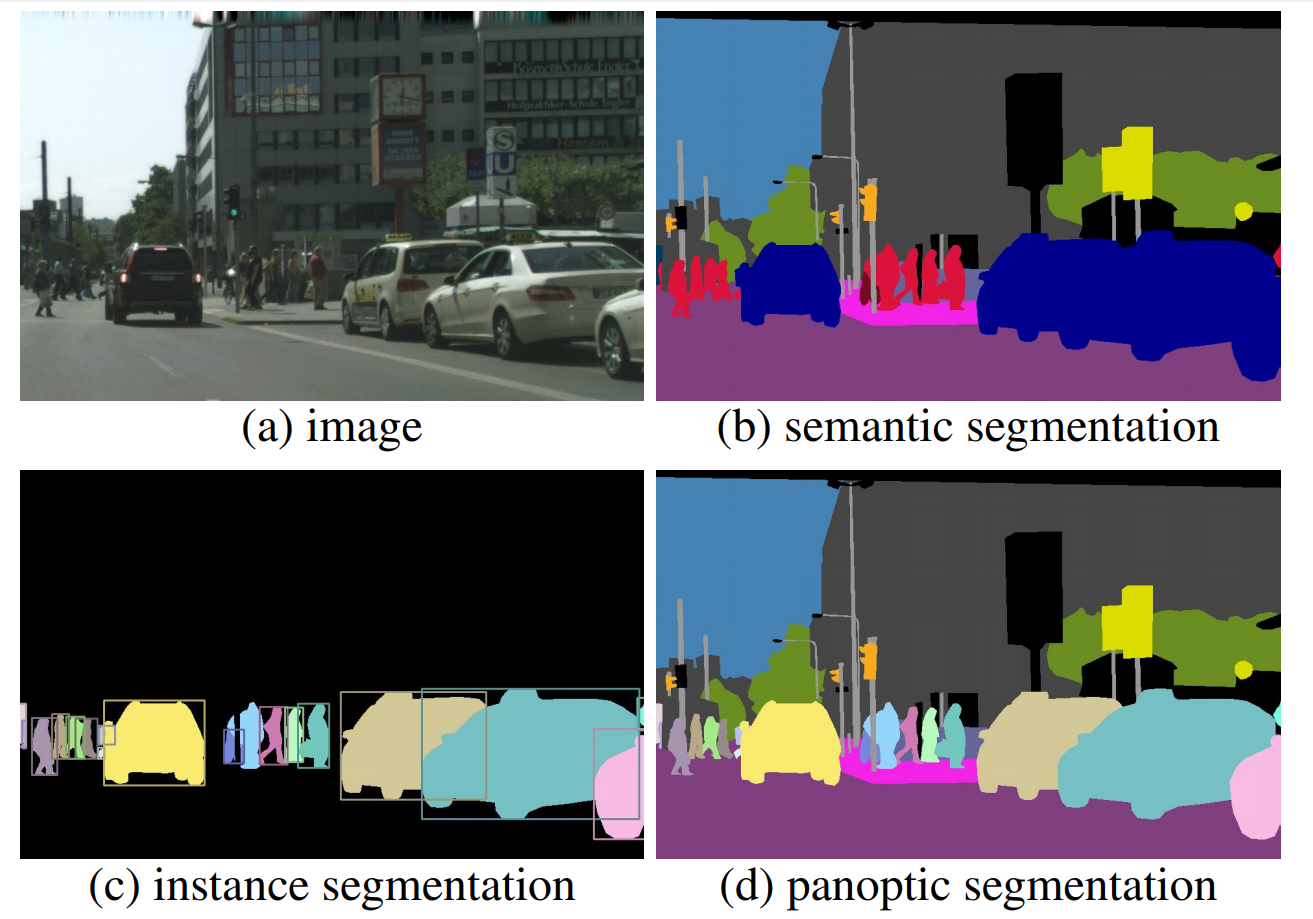

2019 - Panoptic Segmentation ✅

Recognizing unseen objects is a challenging perception task since the robot needs to learn the concept of “objects” and generalize it to unseen objects

An ideal method would combine the generalization capability of training on synthetic depth and the ability to produce sharp masks by training on RGB.

Training DSN with depth images allows for better generalization to the real world data

We posit that mask refinement is an easier problem than directly using RGB as input to produce instance masks.

For the semantic segmentation loss, we use a weighted cross entropy as this has been shown to work well in detecting object boundaries in imbalanced images [29].

In order to train the RRN, we need examples of perturbed masks along with ground truth masks. Since such perturbations do not exist, this problem can be seen as a data augmentation task where we augment the ground truth mask into something that resembles an initial mask

In order to seek a fair comparison, all models trained in this section are trained for 100k iterations of SGD using a fixed learning rate of 1e-2 and batch size of 8.

2019 - ShapeMask: Learning to Segment Novel Objects by Refining Shape Priors

2019 - Learning to Segment via Cut-and-Paste

2019 - YOLACT Real-time Instance Segmentation[github]

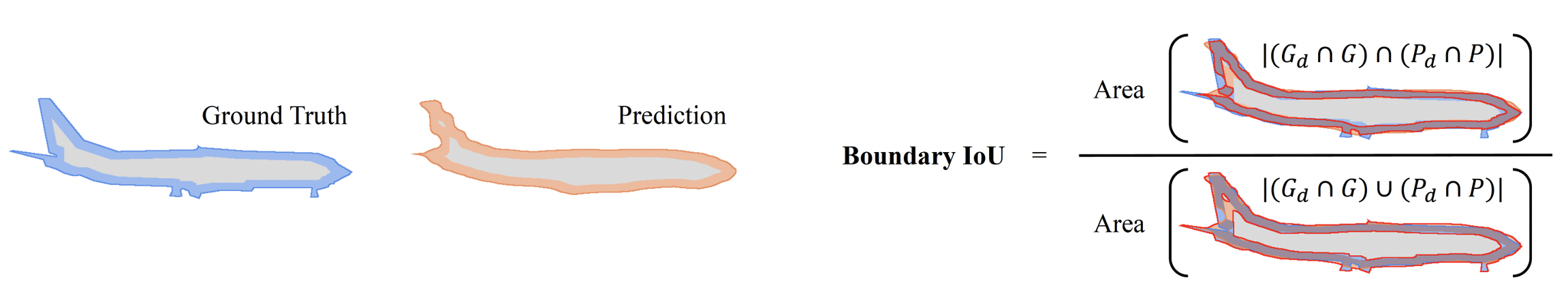

2021 - Boundary IoU: Improving Object-Centric Image Segmentation Evaluation [github]

We present Boundary IoU (Intersection-over-Union), a new segmentation evaluation measure focused on boundary quality. We perform an extensive analysis across different error types and object sizes and show that Boundary IoU is significantly more sensitive than the standard Mask IoU measure to boundary errors for large objects and does not over-penalize errors on smaller objects. The new quality measure displays several desirable characteristics like symmetry w.r.t. prediction/ground truth pairs and balanced responsiveness across scales, which makes it more suitable for segmentation evaluation than other boundary-focused measures like Trimap IoU and F-measure. Based on Boundary IoU, we update the standard evaluation protocols for instance and panoptic segmentation tasks by proposing the Boundary AP (Average Precision) and Boundary PQ (Panoptic Quality) metrics, respectively. Our experiments show that the new evaluation metrics track boundary quality improvements that are generally overlooked by current Mask IoU-based evaluation metrics. We hope that the adoption of the new boundary-sensitive evaluation metrics will lead to rapid progress in segmentation methods that improve boundary quality.

We present a conceptually simple, flexible, and general framework for object instance segmentation. Our approach efficiently detects objects in an image while simultaneously generating a high-quality segmentation mask for each instance. The method, called Mask R-CNN, extends Faster R-CNN by adding a branch for predicting an object mask in parallel with the existing branch for bounding box recognition. Mask R-CNN is simple to train and adds only a small overhead to Faster R-CNN, running at 5 fps. Moreover, Mask R-CNN is easy to generalize to other tasks, e.g., allowing us to estimate human poses in the same framework. We show top results in all three tracks of the COCO suite of challenges, including instance segmentation, bounding-box object detection, and person keypoint detection. Without bells and whistles, Mask R-CNN outperforms all existing, single-model entries on every task, including the COCO 2016 challenge winners. We hope our simple and effective approach will serve as a solid baseline and help ease future research in instance-level recognition

2019 - Instance Segmentation by Jointly Optimizing Spatial Embeddings and Clustering Bandwidth[github] ⭕

Current state-of-the-art instance segmentation methods are not suited for real-time applications like autonomous driving, which require fast execution times at high accuracy. Although the currently dominant proposal-based methods have high accuracy, they are slow and generate masks at a fixed and low resolution. Proposal-free methods, by contrast, can generate masks at high resolution and are often faster, but fail to reach the same accuracy as the proposal-based methods. In this work we propose a new clustering loss function for proposal-free instance segmentation. The loss function pulls the spatial embeddings of pixels belonging to the same instance together and jointly learns an instance-specific clustering bandwidth, maximizing the intersection-over-union of the resulting instance mask. When combined with a fast architecture, the network can perform instance segmentation in real-time while maintaining a high accuracy. We evaluate our method on the challenging Cityscapes benchmark and achieve top results (5% improvement over Mask R-CNN) at more than 10 fps on 2MP images.

2020 - Continuous Adaptation for Interactive Object Segmentation by Learning from Corrections ⭕

In interactive object segmentation a user collaborates with a computer vision model to segment an object. Recent works employ convolutional neural networks for this task: Given an image and a set of corrections made by the user as input, they output a segmentation mask. These approaches achieve strong performance by training on large datasets but they keep the model parameters unchanged at test time. Instead, we recognize that user corrections can serve as sparse training examples and we propose a method that capitalizes on that idea to update the model parameters on-the-fly to the data at hand. Our approach enables the adaptation to a particular object and its background, to distributions shifts in a test set, to specific object classes, and even to large domain changes, where the imaging modality changes between training and testing. We perform extensive experiments on 8 diverse datasets and show: Compared to a model with frozen parameters, our method reduces the required corrections (i) by 9%-30% when distribution shifts are small between training and testing; (ii) by 12%-44% when specializing to a specific class; (iii) and by 60% and 77% when we completely change domain between training and testing.

2009 - Anomaly Detection: A Survey 📜

2017 - Unsupervised Anomaly Detection with Generative Adversarial Networks to Guide Marker Discovery

2017 - End-to-end weakly-supervised semantic alignment

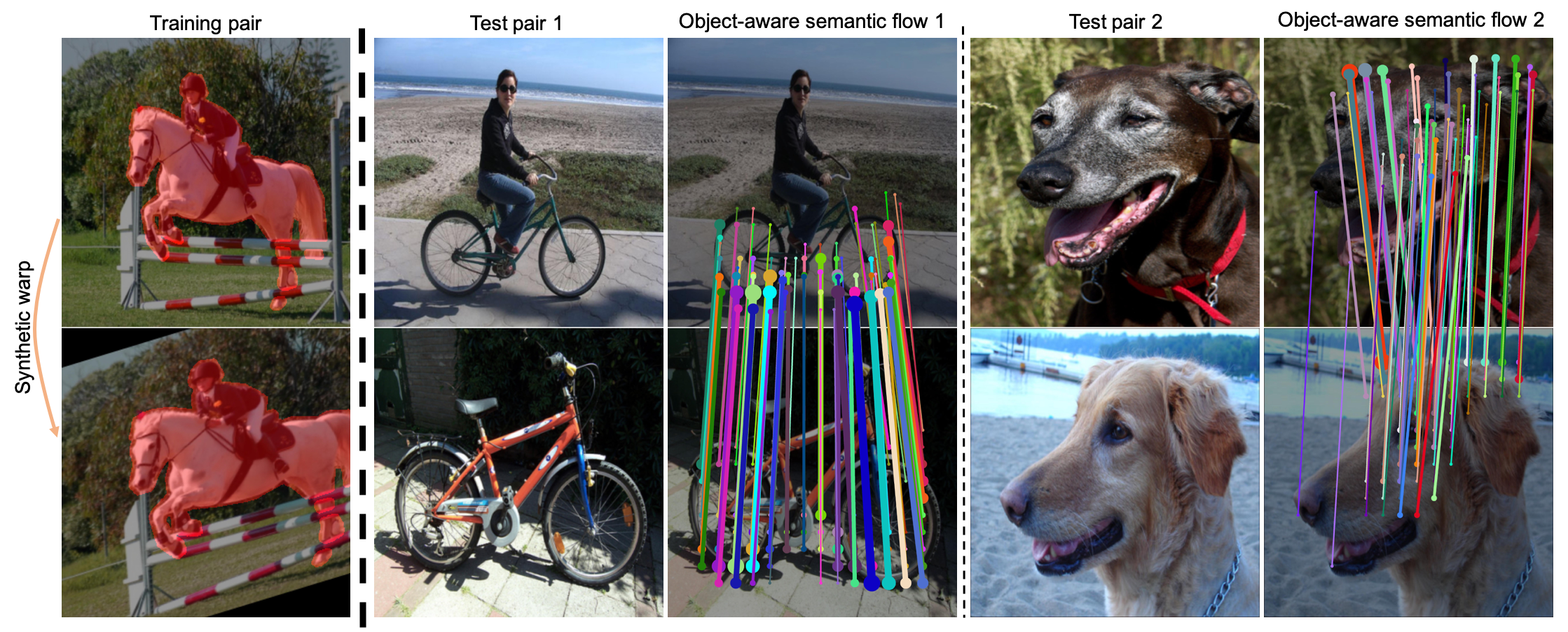

2019 - SFNet: Learning Object-aware Semantic Correspondence - [github]

2020 - Deep Semantic Matching with Foreground Detection and Cycle-Consistency

2019 - SelFlow: Self-Supervised Learning of Optical Flow - [github]

We present a self-supervised learning approach for optical flow. Our method distills reliable flow estimations from non-occluded pixels, and uses these predictions as ground truth to learn optical flow for hallucinated occlusions. We further design a simple CNN to utilize temporal information from multiple frames for better flow estimation. These two principles lead to an approach that yields the best performance for unsupervised optical flow learning on the challenging benchmarks including MPI Sintel, KITTI 2012 and 2015. More notably, our self-supervised pre-trained model provides an excellent initialization for supervised fine-tuning. Our fine-tuned models achieve state-of-the-art results on all three datasets. At the time of writing, we achieve EPE=4.26 on the Sintel benchmark, outperforming all submitted methods.

2021 - AutoFlow: Learning a Better Training Set for Optical Flow

Synthetic datasets play a critical role in pre-training CNN models for optical flow, but they are painstaking to generate and hard to adapt to new applications. To automate the process, we present AutoFlow, a simple and effective method to render training data for optical flow that optimizes the performance of a model on a target dataset. AutoFlow takes a layered approach to render synthetic data, where the motion, shape, and appearance of each layer are controlled by learnable hyperparameters. Experimental results show that AutoFlow achieves state-of-the-art accuracy in pre-training both PWC-Net and RAFT.

2021 - SMURF: Self-Teaching Multi-Frame Unsupervised RAFT with Full-Image Warping

2015 - Constrained Convolutional Neural Networks for Weakly Supervised Segmentation

2018 - Deep Learning with Mixed Supervision for Brain Tumor Segmentation

2019 - Localization with Limited Annotation for Chest X-rays

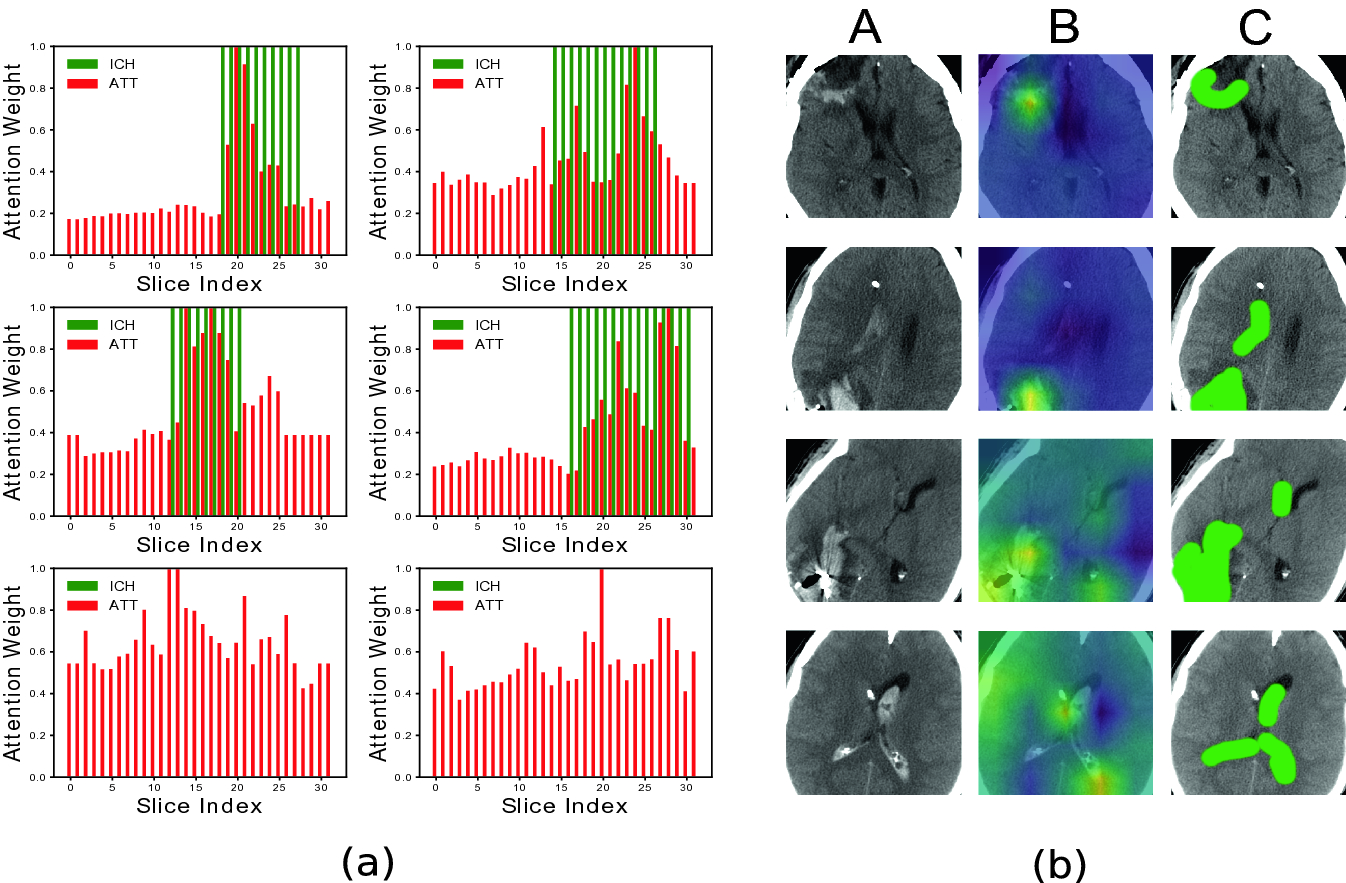

2019 - Doubly Weak Supervision of Deep Learning Models for Head CT

2019 - Training Complex Models with Multi-Task Weak Supervision

As machine learning models continue to increase in complexity, collecting large hand-labeled training sets has become one of the biggest roadblocks in practice. Instead, weaker forms of supervision that provide noisier but cheaper labels are often used. However, these weak supervision sources have diverse and unknown accuracies, may output correlated labels, and may label different tasks or apply at different levels of granularity. We propose a framework for integrating and modeling such weak supervision sources by viewing them as labeling different related sub-tasks of a problem, which we refer to as the multi-task weak supervision setting. We show that by solving a matrix completion-style problem, we can recover the accuracies of these multi-task sources given their dependency structure, but without any labeled data, leading to higher-quality supervision for training an end model. Theoretically, we show that the generalization error of models trained with this approach improves with the number of unlabeled data points, and characterize the scaling with respect to the task and dependency structures. On three fine-grained classification problems, we show that our approach leads to average gains of 20.2 points in accuracy over a traditional supervised approach, 6.8 points over a majority vote baseline, and 4.1 points over a previously proposed weak supervision method that models tasks separately.

2020 - Fast and Three-rious: Speeding Up Weak Supervision with Triplet Methods

2014 - Discriminative Unsupervised Feature Learning with Exemplar Convolutional Neural Networks ✅

2017 - Random Erasing Data Augmentation[github]

2017 - Smart Augmentation - Learning an Optimal Data Augmentation Strategy

2017 - Population Based Training of Neural Networks ⭕

2018 - Albumentations: fast and flexible image augmentations - [github] ✅

2018 - Data Augmentation by Pairing Samples for Images Classification

2018 - [AutoAugment]: Learning Augmentation Policies from Data

2018 - Synthetic Data Augmentation using GAN for Improved Liver Lesion Classification

2018 - GAN Augmentation: Augmenting Training Data using Generative Adversarial Networks

2019 - [UDA]: Unsupervised Data Augmentation for Consistency Training - [github] ⭕

Common among recent approaches is the use of consistency training on a large amount of unlabeled data to constrain model predictions to be invariant to input noise. In this work, we present a new perspective on how to effectively noise unlabeled examples and argue that the quality of noising, specifically those produced by advanced data augmentation methods, plays a crucial role in semi-supervised learning. Our method also combines well with transfer learning, e.g., when finetuning from BERT, and yields improvements in high-data regime, such as ImageNet, whether when there is only 10% labeled data or when a full labeled set with 1.3M extra unlabeled examples is used.

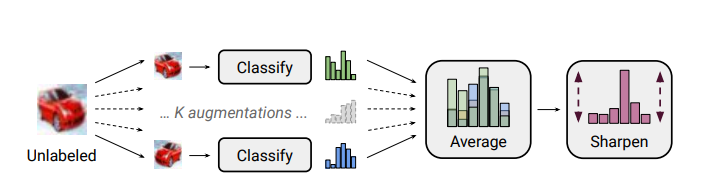

2019 - [MixMatch]: A Holistic Approach to Semi-Supervised Learning ⭕

Semi-supervised learning has proven to be a powerful paradigm for leveraging unlabeled data to mitigate the reliance on large labeled datasets. In this work, we unify the current dominant approaches for semi-supervised learning to produce a new algorithm, MixMatch, that works by guessing low-entropy labels for data-augmented unlabeled examples and mixing labeled and unlabeled data using MixUp. We show that MixMatch obtains state-of-the-art results by a large margin across many datasets and labeled data amounts.

2019 - [RealMix]: Towards Realistic Semi-Supervised Deep Learning Algorithms ✅

2019 - Population Based Augmentation: Efficient Learning of Augmentation Policy Schedules [github] ✅

2019 - [AugMix]: A Simple Data Processing Method to Improve Robustness and Uncertainty [github] ✅

2019 - Self-training with [Noisy Student] improves ImageNet classification ✅

We present a simple self-training method that achieves 88.4% top-1 accuracy on ImageNet, which is 2.0% better than the state-of-the-art model that requires 3.5B weakly labeled Instagram images. On robustness test sets, it improves ImageNet-A top-1 accuracy from 61.0% to 83.7%. To achieve this result, we first train an EfficientNet model on labeled ImageNet images and use it as a teacher to generate pseudo labels on 300M unlabeled images. We then train a larger EfficientNet as a student model on the combination of labeled and pseudo labeled images. We iterate this process by putting back the student as the teacher. During the generation of the pseudo labels, the teacher is not noised so that the pseudo labels are as accurate as possible. However, during the learning of the student, we inject noise such as dropout, stochastic depth and data augmentation via RandAugment to the student so that the student generalizes better than the teacher.

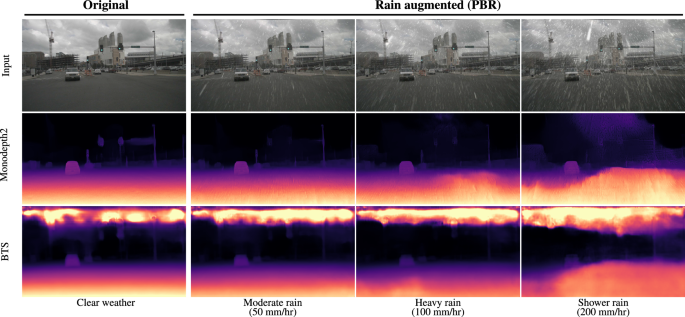

2020 - Rain rendering for evaluating and improving robustness to bad weather

2015 - Unsupervised Visual Representation Learning by Context Prediction

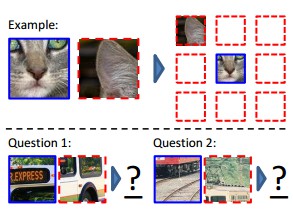

This work explores the use of spatial context as a source of free and plentiful supervisory signal for training a rich visual representation. Given only a large, unlabeled image collection, we extract random pairs of patches from each image and train a convolutional neural net to predict the position of the second patch relative to the first. We argue that doing well on this task requires the model to learn to recognize objects and their parts. We demonstrate that the feature representation learned using this within-image context indeed captures visual similarity across images. For example, this representation allows us to perform unsupervised visual discovery of objects like cats, people, and even birds from the Pascal VOC 2011 detection dataset. Furthermore, we show that the learned ConvNet can be used in the R-CNN framework and provides a significant boost over a randomly-initialized ConvNet, resulting in state-of-the-art performance among algorithms which use only Pascal-provided training set annotations.

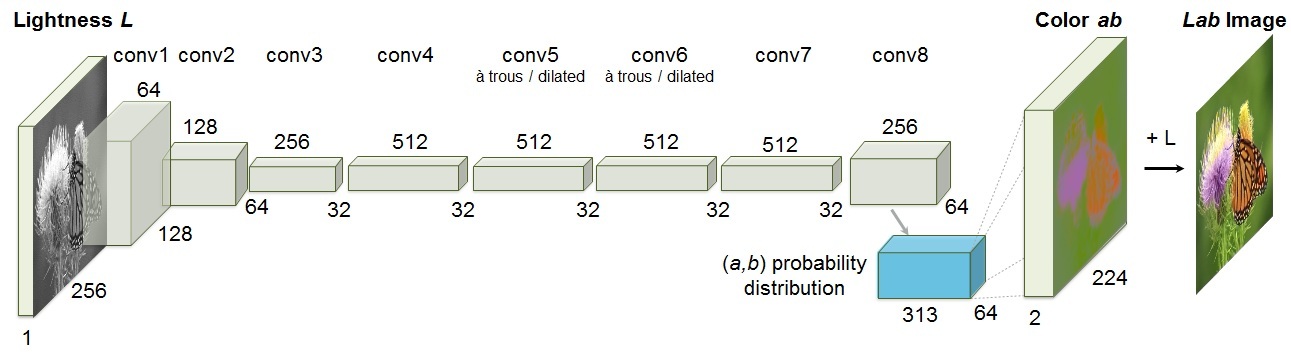

2016 - Colorful Image Colorization

2016 - Unsupervised Learning of Visual Representations by Solving Jigsaw Puzzles

In this paper we study the problem of image representation learning without human annotation. By following the principles of self-supervision, we build a convolutional neural network (CNN) that can be trained to solve Jigsaw puzzles as a pretext task, which requires no manual labeling, and then later repurposed to solve object classification and detection. To maintain the compatibility across tasks we introduce the context-free network (CFN), a siamese-ennead CNN. The CFN takes image tiles as input and explicitly limits the receptive field (or context) of its early processing units to one tile at a time. We show that the CFN includes fewer parameters than AlexNet while preserving the same semantic learning capabilities. By training the CFN to solve Jigsaw puzzles, we learn both a feature mapping of object parts as well as their correct spatial arrangement. Our experimental evaluations show that the learned features capture semantically relevant content. Our proposed method for learning visual representations outperforms state of the art methods in several transfer learning benchmarks.

2016 - Context Encoders: Feature Learning by Inpainting

2018 - Unsupervised Representation Learning by Predicting Image Rotations

2019 - Greedy InfoMax for Biologically Plausible Self-Supervised Representation Learning

2019 - Unsupervised Learning via Meta-Learning

2019 - [PIRL]: Self-Supervised Learning of Pretext-Invariant Representations Ishan Misra, L. V. D. Maaten

The goal of self-supervised learning from images is to construct image representations that are semantically meaningful via pretext tasks that do not require semantic annotations. Many pretext tasks lead to representations that are covariant with image transformations. We argue that, instead, semantic representations ought to be invariant under such transformations. Specifically, we develop Pretext-Invariant Representation Learning (PIRL, pronounced as `pearl') that learns invariant representations based on pretext tasks. We use PIRL with a commonly used pretext task that involves solving jigsaw puzzles. We find that PIRL substantially improves the semantic quality of the learned image representations. Our approach sets a new state-of-the-art in self-supervised learning from images on several popular benchmarks for self-supervised learning. Despite being unsupervised, PIRL outperforms supervised pre-training in learning image representations for object detection. Altogether, our results demonstrate the potential of self-supervised representations with good invariance properties

2019 - Representation Learning with Contrastive Predictive Coding

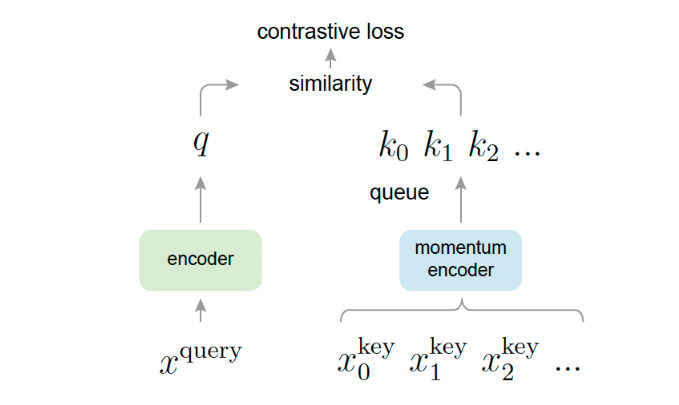

2019 - [MoCo]: Momentum Contrast for Unsupervised Visual Representation Learning

We present Momentum Contrast (MoCo) for unsupervised visual representation learning. From a perspective on contrastive learning as dictionary look-up, we build a dynamic dictionary with a queue and a moving-averaged encoder. This enables building a large and consistent dictionary on-the-fly that facilitates contrastive unsupervised learning. MoCo provides competitive results under the common linear protocol on ImageNet classification. More importantly, the representations learned by MoCo transfer well to downstream tasks. MoCo can outperform its supervised pre-training counterpart in 7 detection/segmentation tasks on PASCAL VOC, COCO, and other datasets, sometimes surpassing it by large margins. This suggests that the gap between unsupervised and supervised representation learning has been largely closed in many vision tasks.

2019 - Self-supervised Visual Feature Learning with Deep Neural Networks: A Survey

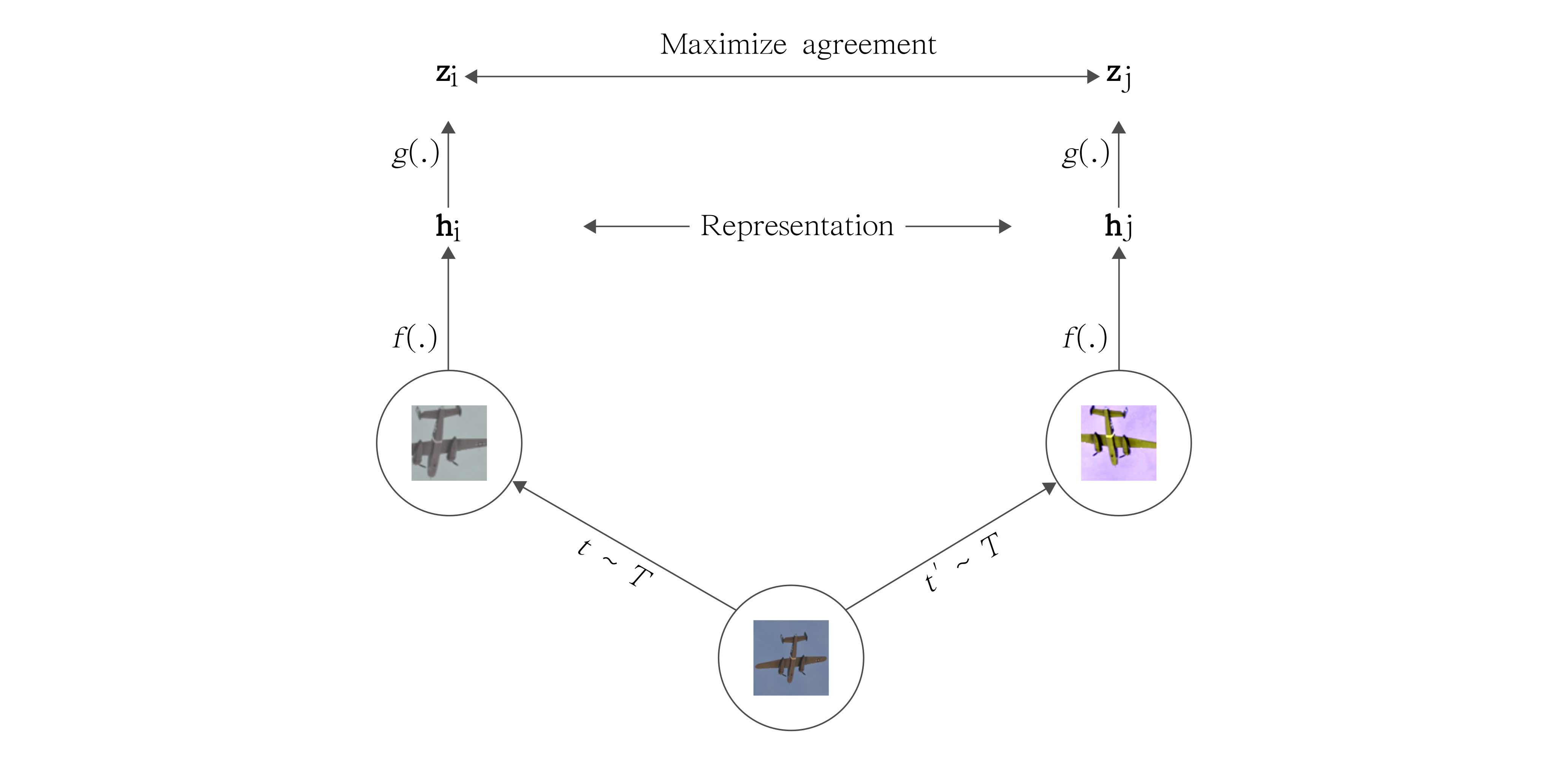

2020 - [SimCLR]: A Simple Framework for Contrastive Learning of Visual Representations ✅

This paper presents SimCLR: a simple framework for contrastive learning of visual representations. We simplify recently proposed contrastive self-supervised learning algorithms without requiring specialized architectures or a memory bank. In order to understand what enables the contrastive prediction tasks to learn useful representations, we systematically study the major components of our framework. We show that (1) composition of data augmentations plays a critical role in defining effective predictive tasks, (2) introducing a learnable nonlinear transformation between the representation and the contrastive loss substantially improves the quality of the learned representations, and (3) contrastive learning benefits from larger batch sizes and more training steps compared to supervised learning. By combining these findings, we are able to considerably outperform previous methods for self-supervised and semi-supervised learning on ImageNet. A linear classifier trained on self-supervised representations learned by SimCLR achieves 76.5% top-1 accuracy, which is a 7% relative improvement over previous state-of-the-art, matching the performance of a supervised ResNet-50. When fine-tuned on only 1% of the labels, we achieve 85.8% top-5 accuracy, outperforming AlexNet with 100X fewer labels.

2020 - ::SURVEY:: Self-supervised Visual Feature Learning with Deep Neural Networks: A Survey 📜⭕

2020 - [NeurIPS 2020 Workshop]: Self-Supervised Learning - Theory and Practice ⭕

2020 - [BYOL]: Bootstrap Your Own Latent: A New Approach to Self-Supervised Learning

We introduce Bootstrap Your Own Latent (BYOL), a new approach to self-supervised image representation learning. BYOL relies on two neural networks, referred to as online and target networks, that interact and learn from each other. From an augmented view of an image, we train the online network to predict the target network representation of the same image under a different augmented view. At the same time, we update the target network with a slow-moving average of the online network. While state-of-the art methods rely on negative pairs, BYOL achieves a new state of the art without them.

2021 - [POST] Facebook: Self-supervised learning: The dark matter of intelligence

2021 - Task Fingerprinting for Meta Learning in Biomedical Image Analysis

2019 - Feature Fusion for Online Mutual Knowledge Distillation

2016 - Cross-Stitch Networks for Multi-task Learning Ishan Misra, Abhinav Shrivastava, A. Gupta, M. Hebert

2017 - An Overview of Multi-Task Learning in Deep Neural Networks

Multi-task learning (MTL) has led to successes in many applications of machine learning, from natural language processing and speech recognition to computer vision and drug discovery. This article aims to give a general overview of MTL, particularly in deep neural networks. It introduces the two most common methods for MTL in Deep Learning, gives an overview of the literature, and discusses recent advances. In particular, it seeks to help ML practitioners apply MTL by shedding light on how MTL works and providing guidelines for choosing appropriate auxiliary tasks.

2017 - Multi-task Self-Supervised Visual Learning

We investigate methods for combining multiple self-supervised tasks--i.e., supervised tasks where data can be collected without manual labeling--in order to train a single visual representation. First, we provide an apples-to-apples comparison of four different self-supervised tasks using the very deep ResNet-101 architecture. We then combine tasks to jointly train a network. We also explore lasso regularization to encourage the network to factorize the information in its representation, and methods for "harmonizing" network inputs in order to learn a more unified representation. We evaluate all methods on ImageNet classification, PASCAL VOC detection, and NYU depth prediction. Our results show that deeper networks work better, and that combining tasks--even via a naive multi-head architecture--always improves performance. Our best joint network nearly matches the PASCAL performance of a model pre-trained on ImageNet classification, and matches the ImageNet network on NYU depth prediction.

An Introduction to Deep Reinforcement Learning https://arxiv.org/abs/1811.12560

Deep Reinforcement Learning https://arxiv.org/abs/1810.06339

Playing Atari with Deep Reinforcement Learning https://arxiv.org/pdf/1312.5602.pdf

Key Papers in Deep Reinforcment Learning https://spinningup.openai.com/en/latest/spinningup/keypapers.html

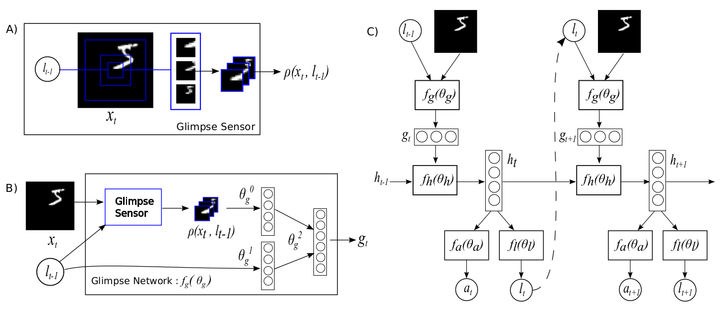

Recurrent Models of Visual Attention https://arxiv.org/abs/1406.6247

2019 - On the Feasibility of Learning, Rather than Assuming, Human Biases for Reward Inference

2015 - Natural Language Object Retrieval

2019 - CLEVR-Ref+: Diagnosing Visual Reasoning with Referring Expressions

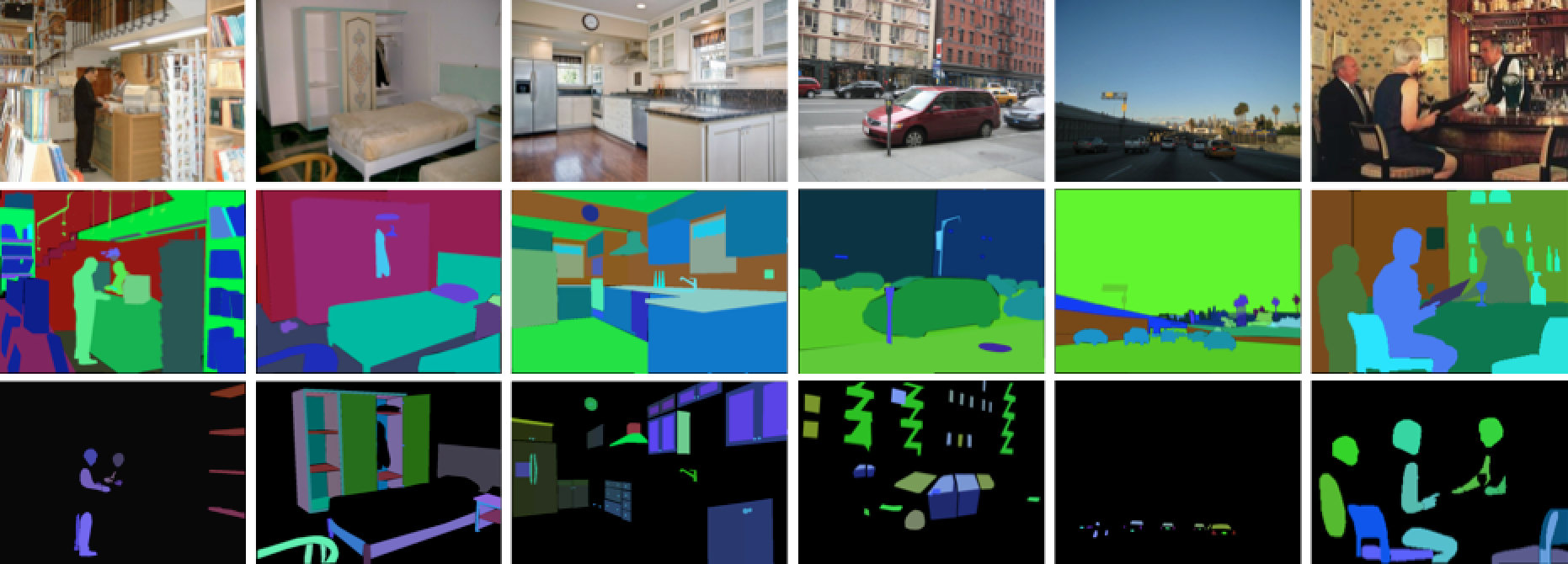

[ADE20K Dataset]: Semantic Segmentation [website]

Scene Parsing through ADE20K Dataset http://people.csail.mit.edu/bzhou/publication/scene-parse-camera-ready.pdf

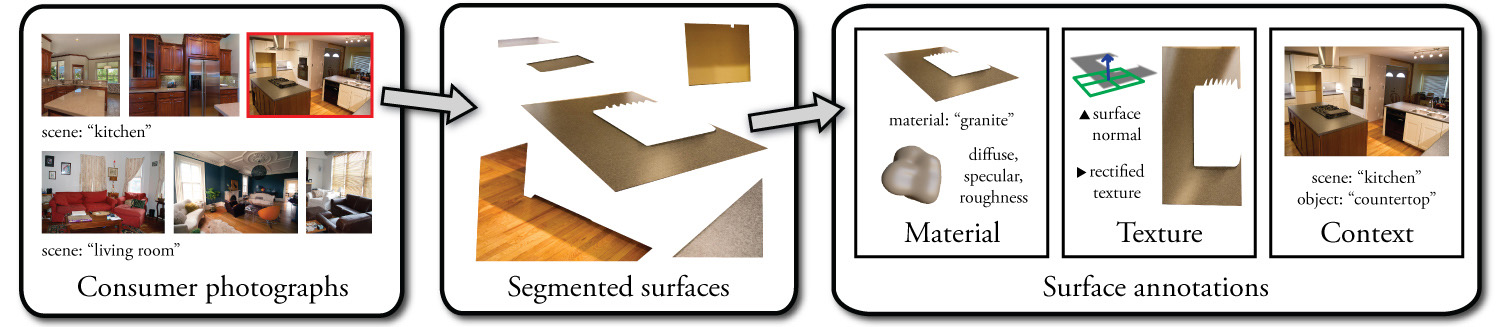

[OPENSURFACES]: A Richly Annotated Catalog of Surface Appearance

https://www.cs.cornell.edu/~paulu/opensurfaces.pdf

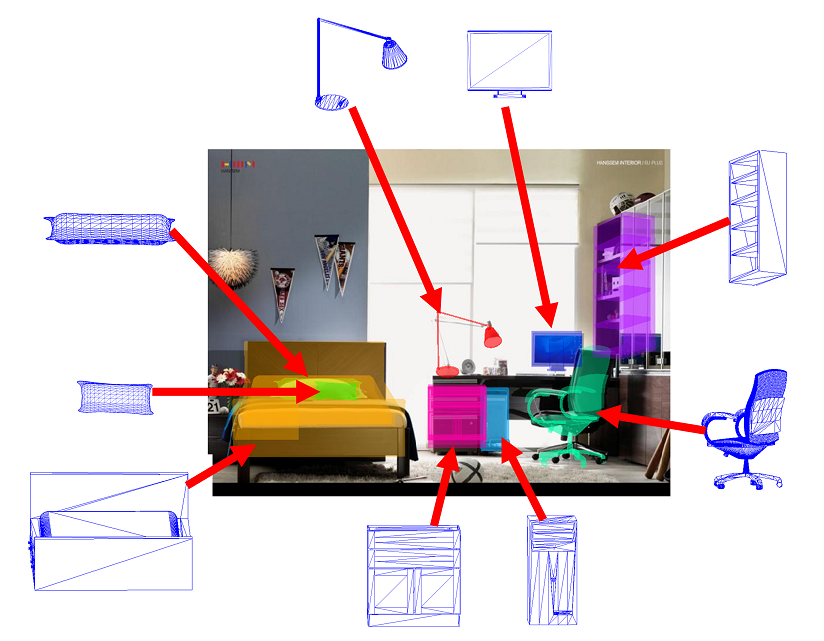

[ShapeNet] - a richly-annotated, large-scale dataset of 3D shapes [website]

ShapeNet: An Information-Rich 3D Model Repository

Beyond PASCAL: A Benchmark for 3D Object Detection in the Wild [website] [paper]

[ObjectNet3D]: A Large Scale Database for 3D Object Recognition

[ModelNet]: a comprehensive clean collection of 3D CAD models for objects [website]

[3D ShapeNets]: A Deep Representation for Volumetric Shapes (2015)

[DTD]: Describable Textures Dataset

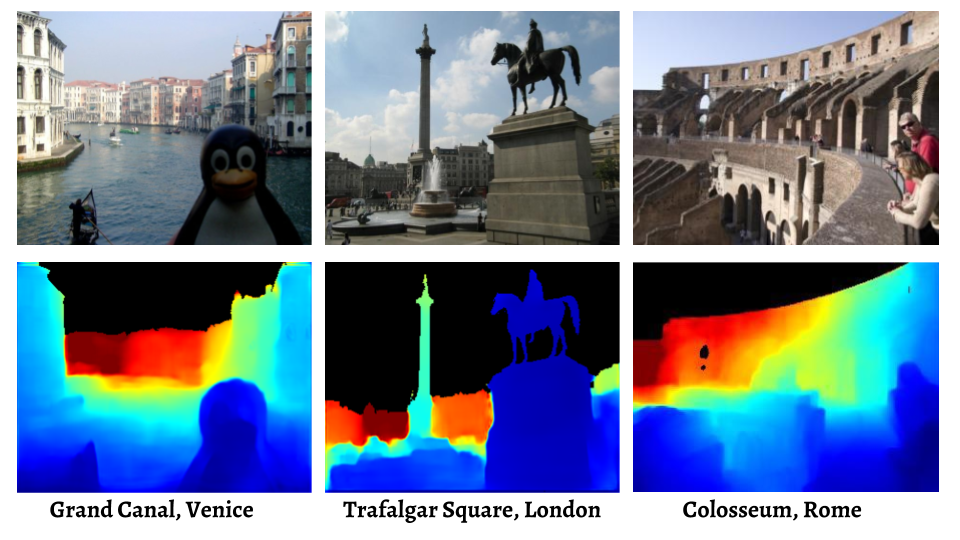

[MegaDepth]: Learning Single-View Depth Prediction from Internet Photos

Microsoft [COCO]: Common Objects in Context[website]

2020 - [CARLA] Open-source simulator for autonomous driving research.

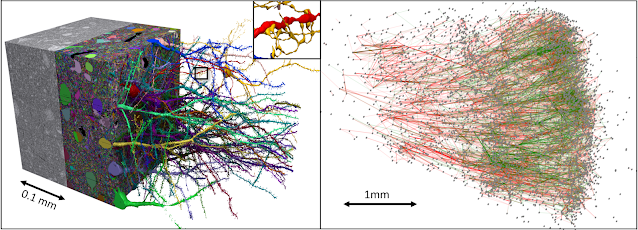

A Browsable Petascale Reconstruction of the Human Cortex

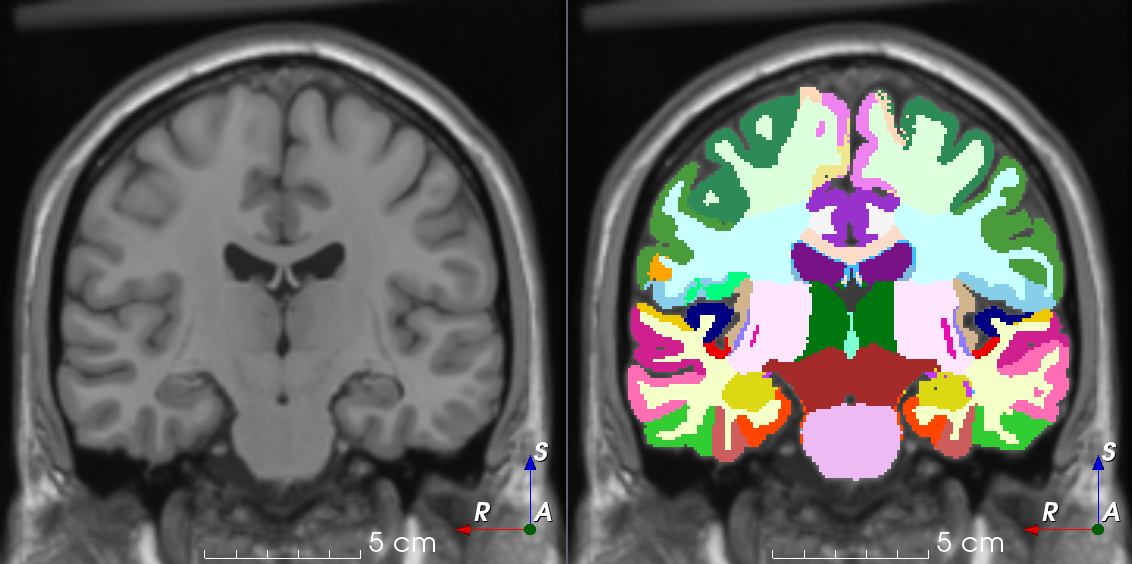

2021 - Medical Segmentation Decathlon. Generalisable 3D Semantic Segmentation

Aim: With recent advances in machine learning, semantic segmentation algorithms are becoming increasingly general purpose and translatable to unseen tasks. Many key algorithmic advances in the field of medical imaging are commonly validated on a small number of tasks, limiting our understanding of the generalisability of the proposed contributions. A model which works out-of-the-box on many tasks, in the spirit of AutoML, would have a tremendous impact on healthcare. The field of medical imaging is also missing a fully open source and comprehensive benchmark for general purpose algorithmic validation and testing covering a large span of challenges, such as: small data, unbalanced labels, large-ranging object scales, multi-class labels, and multimodal imaging, etc. This challenge and dataset aims to provide such resource thorugh the open sourcing of large medical imaging datasets on several highly different tasks, and by standardising the analysis and validation process.

DAWNBench: is a benchmark suite for end-to-end deep learning training and inference.

DAWNBench: An End-to-End Deep Learning Benchmark and Competition (paper) (2017)

Material Recognition in the Wild with the Materials in Context Database http://opensurfaces.cs.cornell.edu/publications/minc/

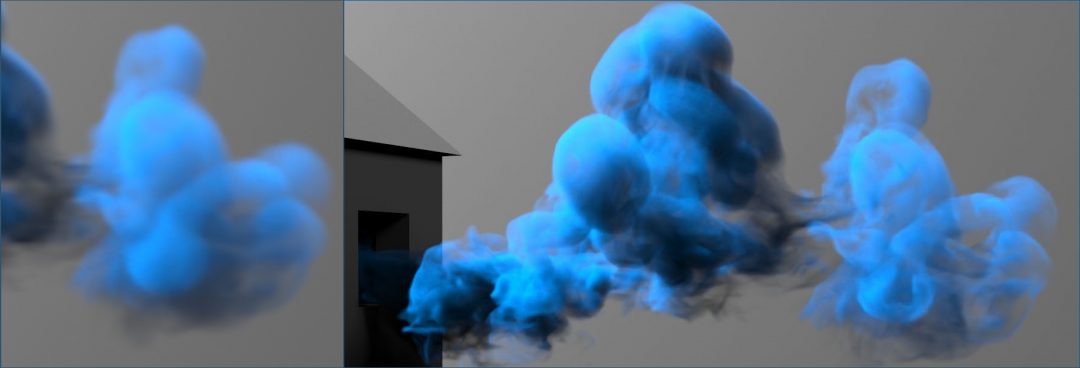

tempoGAN: A Temporally Coherent, Volumetric GAN for Super-resolution Fluid Flow https://ge.in.tum.de/publications/tempogan/

BubGAN: Bubble Generative Adversarial Networks for Synthesizing Realistic Bubbly Flow Images

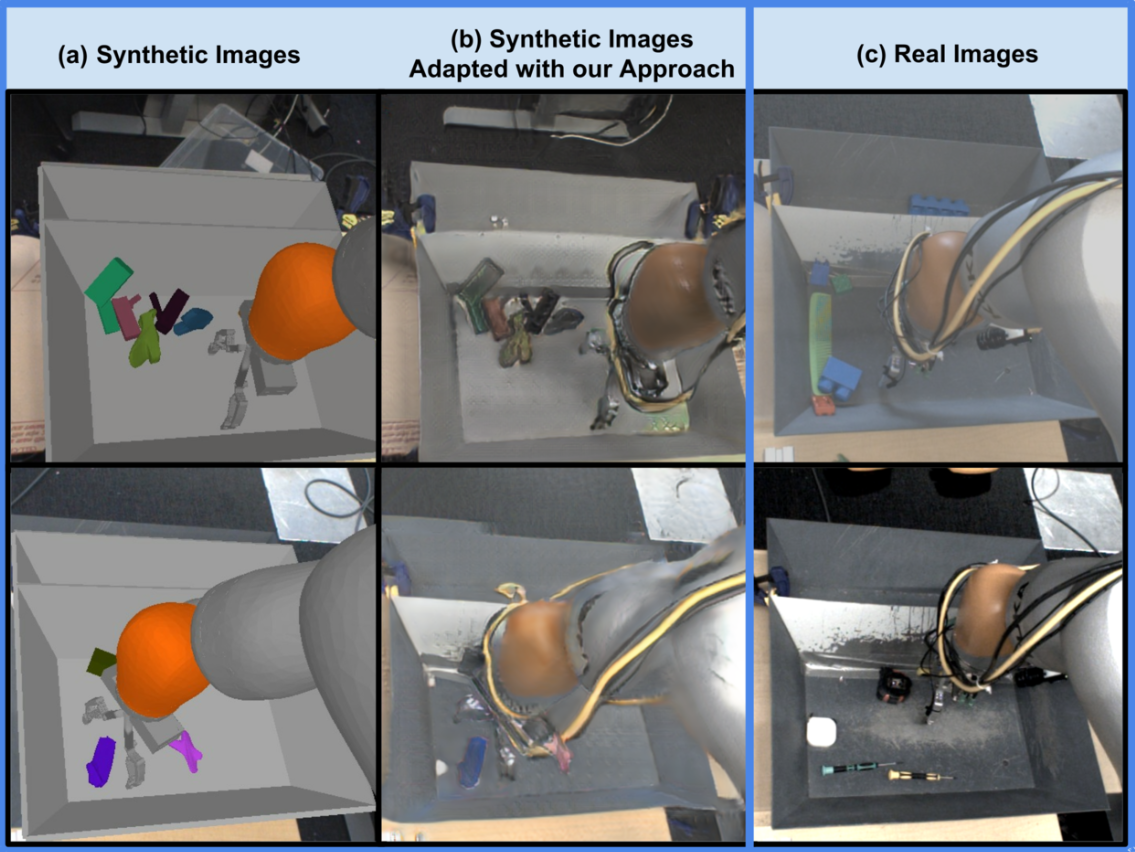

Using Simulation and Domain Adaptation to Improve Efficiency of Deep Robotic Grasping (2018)

Generative adversarial networks for specular highlight removal in endoscopic images

https://doi.org/10.1117/12.2293755

Deep learning with domain adaptation for accelerated projection‐reconstruction MR (2017)

Synthetic Data Augmentation using GAN for Improved Liver Lesion Classification (2018)

GAN Augmentation: Augmenting Training Data using Generative Adversarial Networks (2018)

Abdominal multi-organ segmentation with organ-attention networks and statistical fusion (2018)

Prior-aware Neural Network for Partially-Supervised Multi-Organ Segmentation (2019)

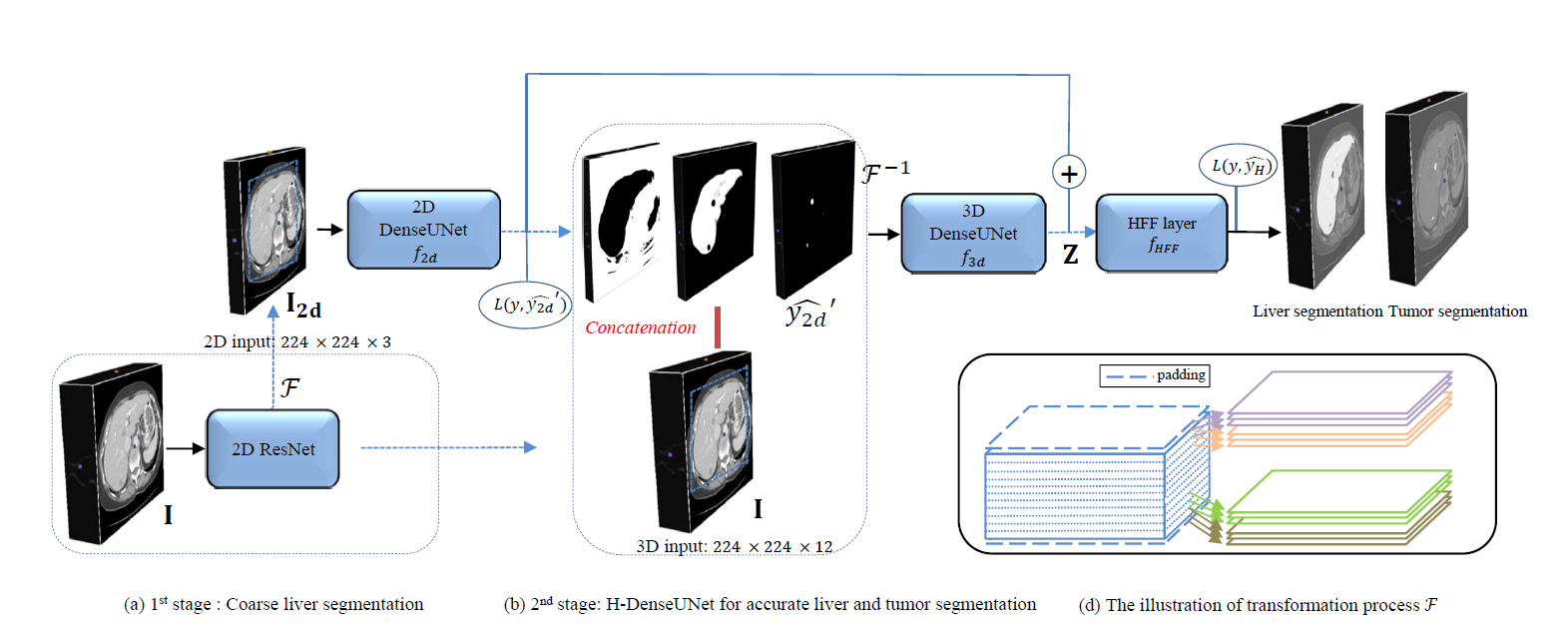

2019 - H-DenseUNet: Hybrid Densely Connected UNet for Liver and Tumor Segmentation from CT Volumes

2020 - [TorchIO]: a Python library for efficient loading, preprocessing, augmentation and patch-based sampling of medical images in deep learning [github]

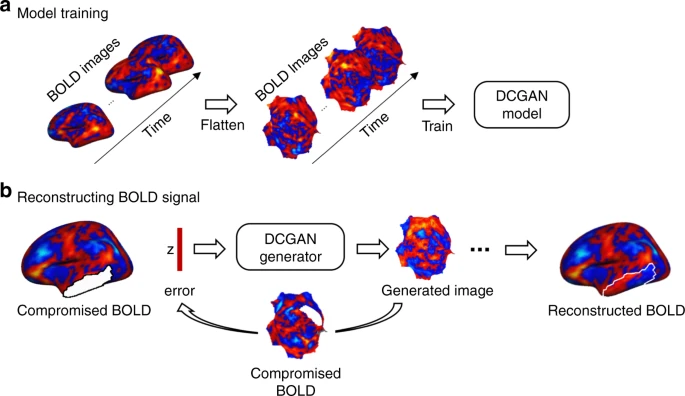

2020 - Reconstructing lost BOLD signal in individual participants using deep machine learning

Low-dose X-ray tomography through a deep convolutional neural network https://www.nature.com/articles/s41598-018-19426-7

In synchrotron-based XRT, CNN-based processing improves the SNR in the data by an order of magnitude, which enables low-dose fast acquisition of radiation-sensitive samples

2019 - Deep learning optoacoustic tomography with sparse data

2019 - A deep learning reconstruction framework for X-ray computed tomography with incomplete data

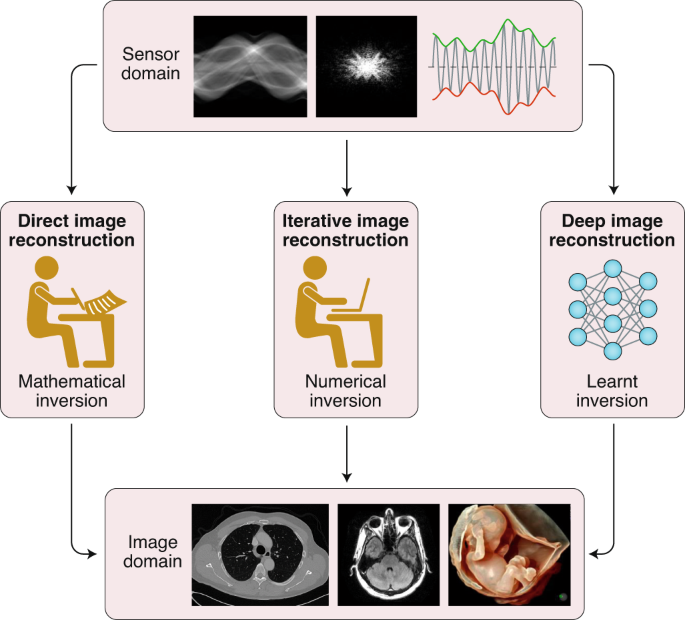

2020 - Deep Learning Techniques for Inverse Problems in Imaging ⭕

2020 - [Review]: Deep learning for tomographic image reconstruction (closed)

2020 - Differentiated Backprojection Domain Deep Learning for Conebeam Artifact Removal

2020 - Extreme Sparse X-ray Computed Laminography Via Convolutional Neural Networks

2021 - [SliceGAN]: Generating 3D structures from a 2D slice with GAN-based dimensionality expansion

Generative adversarial networks (GANs) can be trained to generate 3D image data, which is useful for design optimisation. However, this conventionally requires 3D training data, which is challenging to obtain. 2D imaging techniques tend to be faster, higher resolution, better at phase identification and more widely available. Here, we introduce a generative adversarial network architecture, SliceGAN, which is able to synthesise high fidelity 3D datasets using a single representative 2D image. This is especially relevant for the task of material microstructure generation, as a cross-sectional micrograph can contain sufficient information to statistically reconstruct 3D samples. Our architecture implements the concept of uniform information density, which both ensures that generated volumes are equally high quality at all points in space, and that arbitrarily large volumes can be generated. SliceGAN has been successfully trained on a diverse set of materials, demonstrating the widespread applicability of this tool. The quality of generated micrographs is shown through a statistical comparison of synthetic and real datasets of a battery electrode in terms of key microstructural metrics. Finally, we find that the generation time for a 108 voxel volume is on the order of a few seconds, yielding a path for future studies into high-throughput microstructural optimisation.

2021 - DeepPhase: Learning phase contrast signal from dual energy X-ray absorption images

2022 - Machine learning denoising of high-resolution X-ray nanotomography data

High-resolution X-ray nanotomography is a quantitative tool for investigating specimens from a wide range of research areas. However, the quality of the reconstructed tomogram is often obscured by noise and therefore not suitable for automatic segmentation. Filtering methods are often required for a detailed quantitative analysis. However, most filters induce blurring in the reconstructed tomograms. Here, machine learning (ML) techniques offer a powerful alternative to conventional filtering methods. In this article, we verify that a self-supervised denoising ML technique can be used in a very efficient way for eliminating noise from nanotomography data. The technique presented is applied to high-resolution nanotomography data and compared to conventional filters, such as a median filter and a nonlocal means filter, optimized for tomographic data sets. The ML approach proves to be a very powerful tool that outperforms conventional filters by eliminating noise without blurring relevant structural features, thus enabling efficient quantitative analysis in different scientific fields.

2014 - Do Convnets Learn Correspondence?

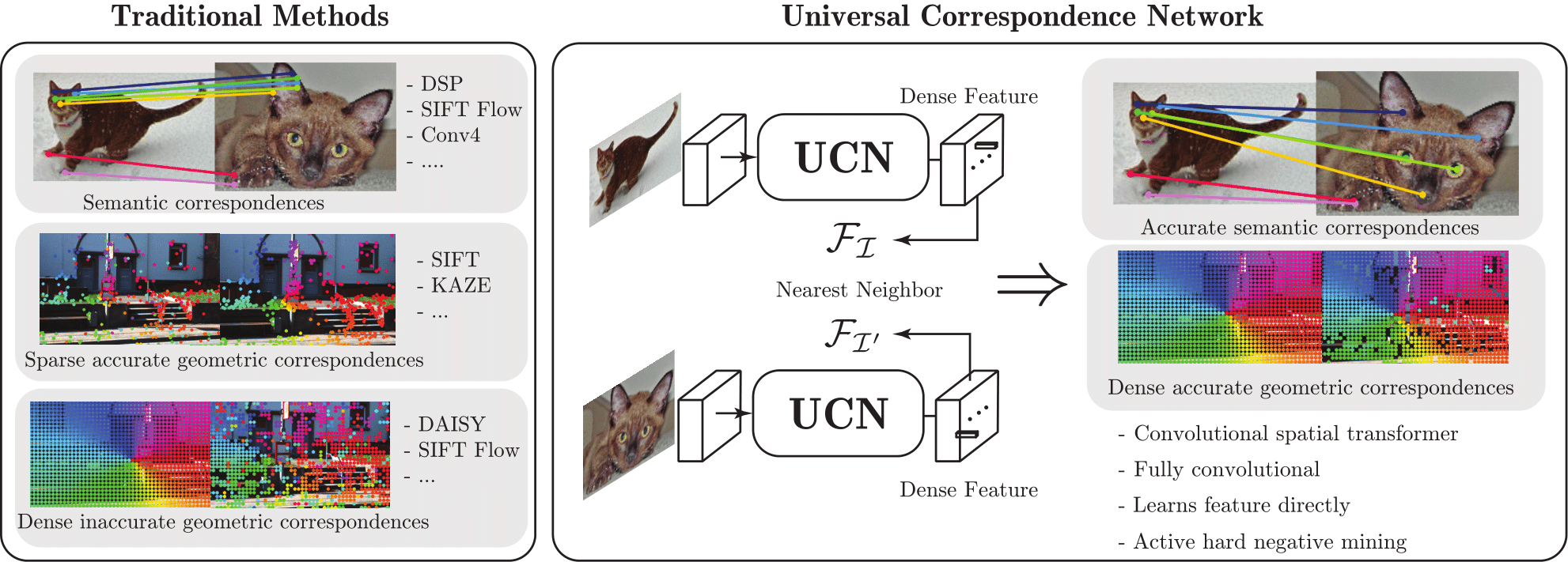

2016 - Universal Correspondence Network

We present a deep learning framework for accurate visual correspondences and demonstrate its effectiveness for both geometric and semantic matching, spanning across rigid motions to intra-class shape or appearance variations. In contrast to previous CNN-based approaches that optimize a surrogate patch similarity objective, we use deep metric learning to directly learn a feature space that preserves either geometric or semantic similarity. Our fully convolutional architecture, along with a novel correspondence contrastive loss allows faster training by effective reuse of computations, accurate gradient computation through the use of thousands of examples per image pair and faster testing with O(n) feed forward passes for n keypoints, instead of O(n2) for typical patch similarity methods. We propose a convolutional spatial transformer to mimic patch normalization in traditional features like SIFT, which is shown to dramatically boost accuracy for semantic correspondences across intra-class shape variations. Extensive experiments on KITTI, PASCAL, and CUB-2011 datasets demonstrate the significant advantages of our features over prior works that use either hand-constructed or learned features.

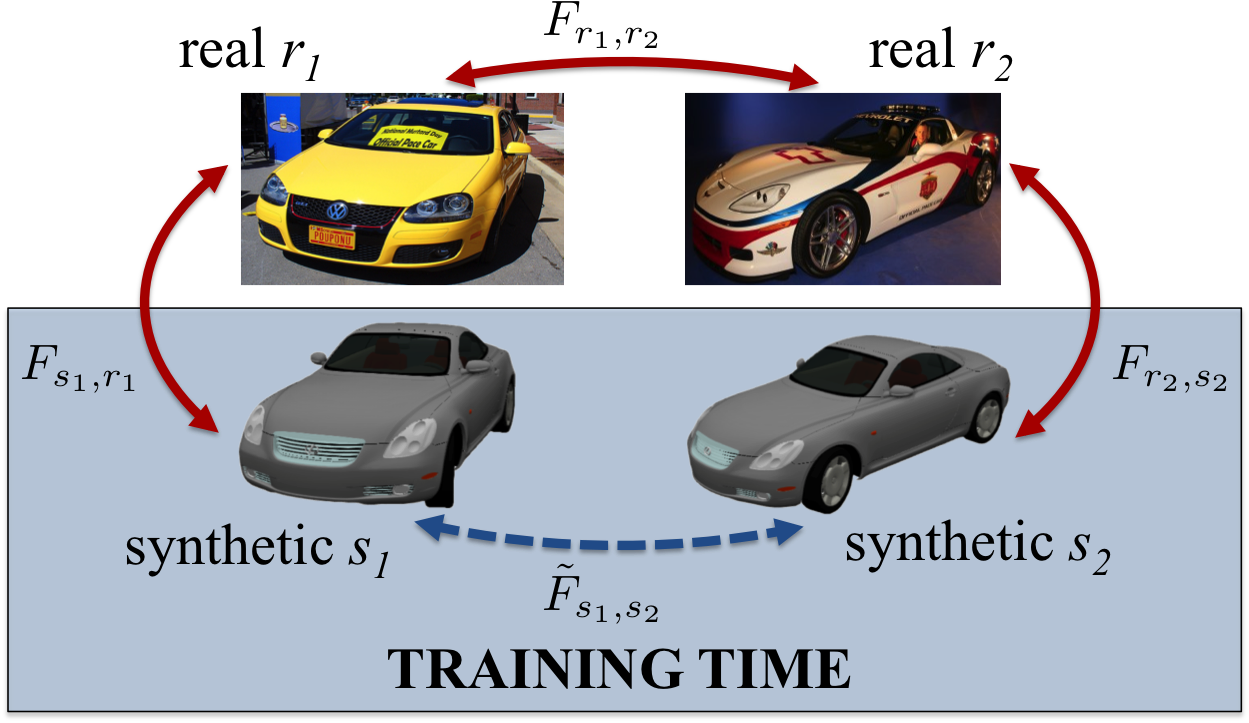

2016 - Learning Dense Correspondence via 3D-guided Cycle Consistency

Discriminative deep learning approaches have shown impressive results for problems where human-labeled ground truth is plentiful, but what about tasks where labels are difficult or impossible to obtain? This paper tackles one such problem: establishing dense visual correspondence across different object instances. For this task, although we do not know what the ground-truth is, we know it should be consistent across instances of that category. We exploit this consistency as a supervisory signal to train a convolutional neural network to predict cross-instance correspondences between pairs of images depicting objects of the same category. For each pair of training images we find an appropriate 3D CAD model and render two synthetic views to link in with the pair, establishing a correspondence flow 4-cycle. We use ground-truth synthetic-to-synthetic correspondences, provided by the rendering engine, to train a ConvNet to predict synthetic-to-real, real-to-real and real-to-synthetic correspondences that are cycle-consistent with the ground-truth. At test time, no CAD models are required. We demonstrate that our end-to-end trained ConvNet supervised by cycle-consistency outperforms state-of-the-art pairwise matching methods in correspondence-related tasks.

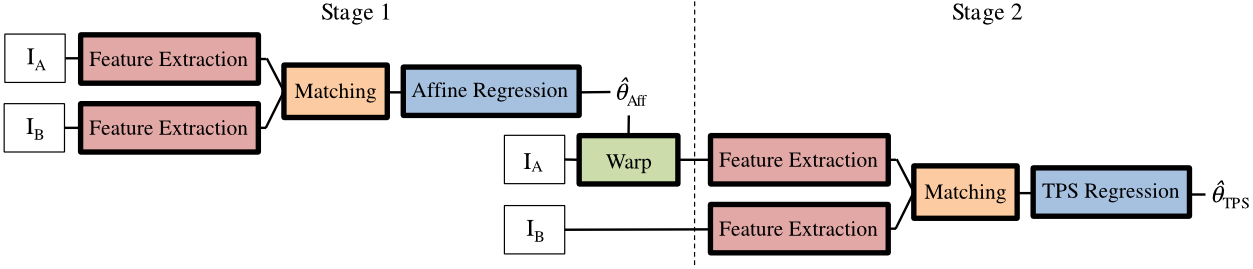

2017 - Convolutional neural network architecture for geometric matching[github]

2018 - [DGC-Net]: Dense Geometric Correspondence Network [github]

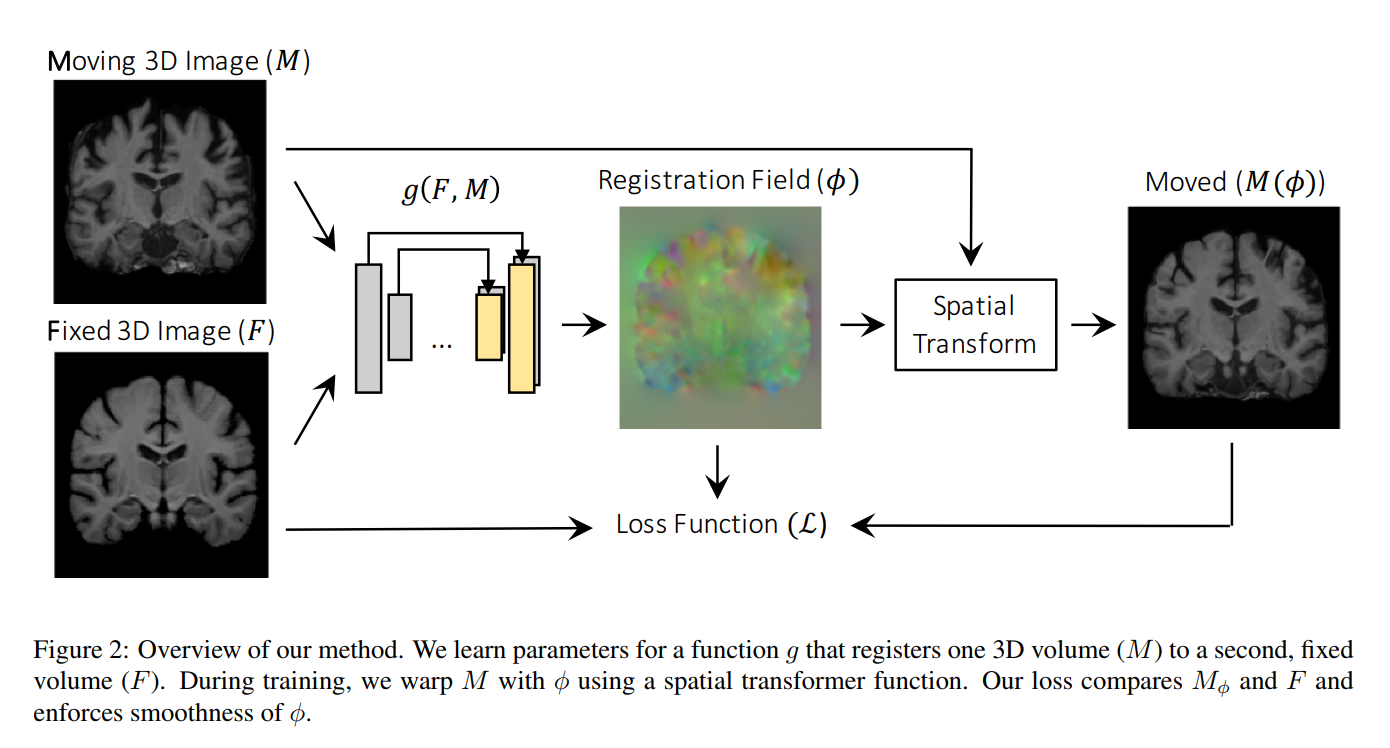

2018 - An Unsupervised Learning Model for Deformable Medical Image Registration

2018 - VoxelMorph: A Learning Framework for Deformable Medical Image Registration [github]

2019 - A Deep Learning Framework for Unsupervised Affine and Deformable Image Registration

2019 - Unsupervised Learning of Probabilistic Diffeomorphic Registration for Images and Surfaces

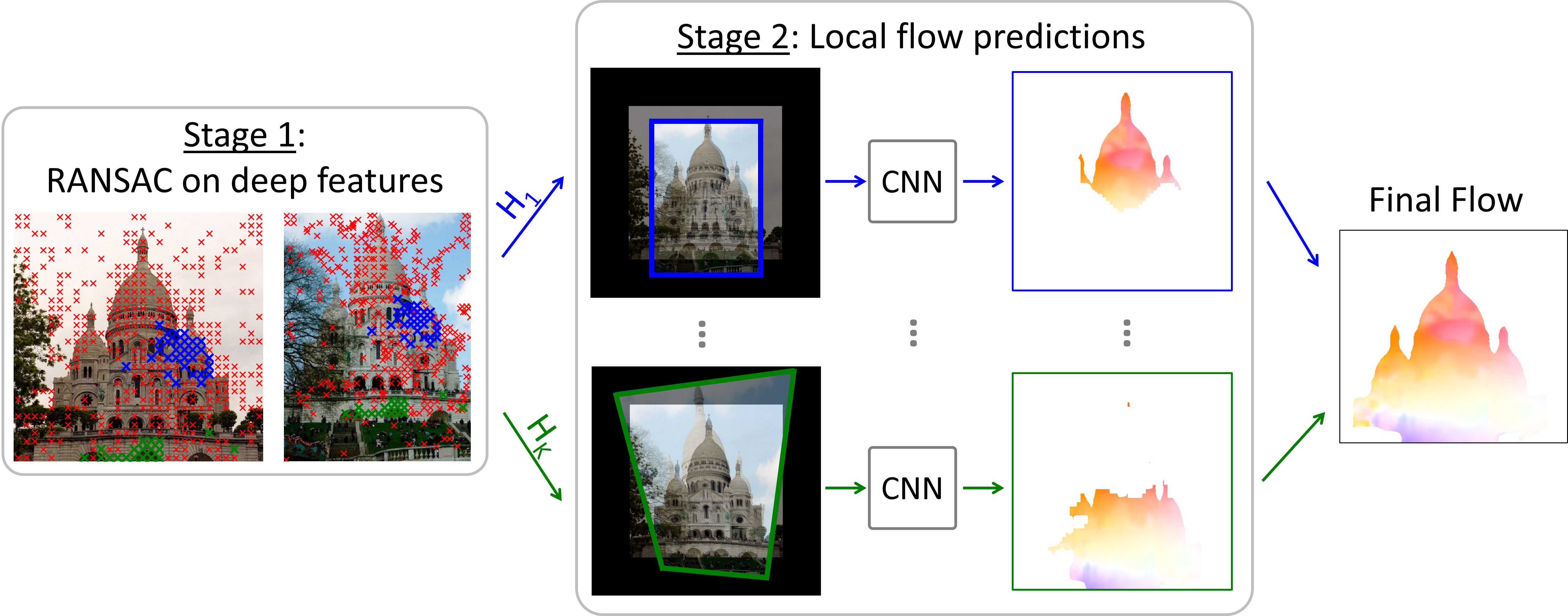

2020 - RANSAC-Flow: generic two-stage image alignment

Flow-Guided Feature Aggregation for Video Object Detection https://arxiv.org/abs/1703.10025

Deep Feature Flow for Video Recognition https://arxiv.org/abs/1611.07715

Video-to-Video Synthesis (2018) [github]

2017 - PWC-Net: CNNs for Optical Flow Using Pyramid, Warping, and Cost Volume[github]

2020 - Softmax Splatting for Video Frame Interpolation [github]

2017 - [TOFlow] Video Enhancement with Task-Oriented Flow

Many video enhancement algorithms rely on optical flow to register frames in a video sequence. Precise flow estimation is however intractable; and optical flow itself is often a sub-optimal representation for particular video processing tasks. In this paper, we propose task-oriented flow (TOFlow), a motion representation learned in a self-supervised, task-specific manner. We design a neural network with a trainable motion estimation component and a video processing component, and train them jointly to learn the task-oriented flow. For evaluation, we build Vimeo-90K, a large-scale, high-quality video dataset for low-level video processing. TOFlow outperforms traditional optical flow on standard benchmarks as well as our Vimeo-90K dataset in three video processing tasks: frame interpolation, video denoising/deblocking, and video super-resolution.

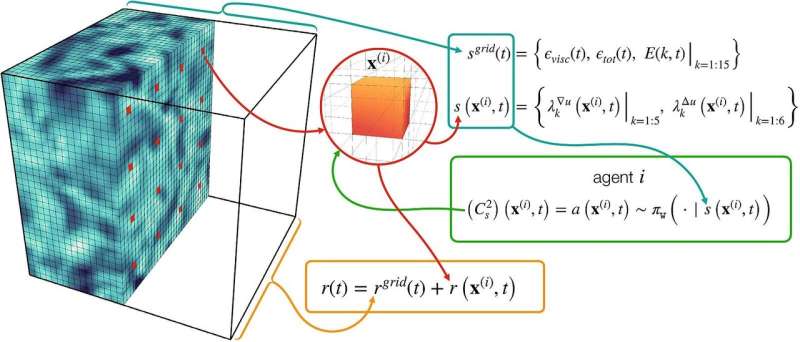

2020 - Automating turbulence modelling by multi-agent reinforcement learning

2017 - "Zero-Shot" Super-Resolution using Deep Internal Learning

2018 - Residual Dense Network for Image Restoration [github]

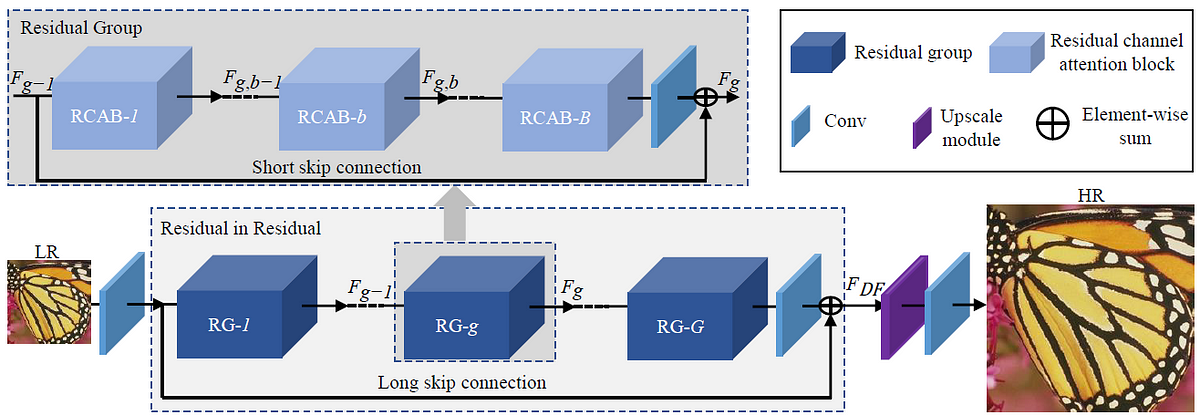

2018 - Image Super-Resolution Using Very Deep Residual Channel Attention Networks

2019 - Noise2Self: Blind Denoising by Self-Supervision

We propose a general framework for denoising high-dimensional measurements which requires no prior on the signal, no estimate of the noise, and no clean training data. The only assumption is that the noise exhibits statistical independence across different dimensions of the measurement, while the true signal exhibits some correlation. For a broad class of functions ("$\mathcal{J}$-invariant"), it is then possible to estimate the performance of a denoiser from noisy data alone. This allows us to calibrate

2020 - Improving Blind Spot Denoising for Microscopy

Many microscopy applications are limited by the total amount of usable light and are consequently challenged by the resulting levels of noise in the acquired images. This problem is often addressed via (supervised) deep learning based denoising. Recently, by making assumptions about the noise statistics, self-supervised methods have emerged. Such methods are trained directly on the images that are to be denoised and do not require additional paired training data. While achieving remarkable results, self-supervised methods can produce high-frequency artifacts and achieve inferior results compared to supervised approaches. Here we present a novel way to improve the quality of self-supervised denoising. Considering that light microscopy images are usually diffraction-limited, we propose to include this knowledge in the denoising process. We assume the clean image to be the result of a convolution with a point spread function PSF) and explicitly include this operation at the end of our neural network. As a consequence, we are able to eliminate high-frequency artifacts and achieve self-supervised results that are very close to the ones achieved with traditional supervised methods.

2021 - Denoising-based Image Compression for Connectomics

2018 - Image Inpainting for Irregular Holes Using Partial Convolutions[github official], [github]

2017 - Globally and Locally Consistent Image Completion[github]

2017 - Generative Image Inpainting with Contextual Attention[github]

2018 - Free-Form Image Inpainting with Gated Convolution

Photo-realistic single image super-resolution using a generative adversarial network (2016)[github]

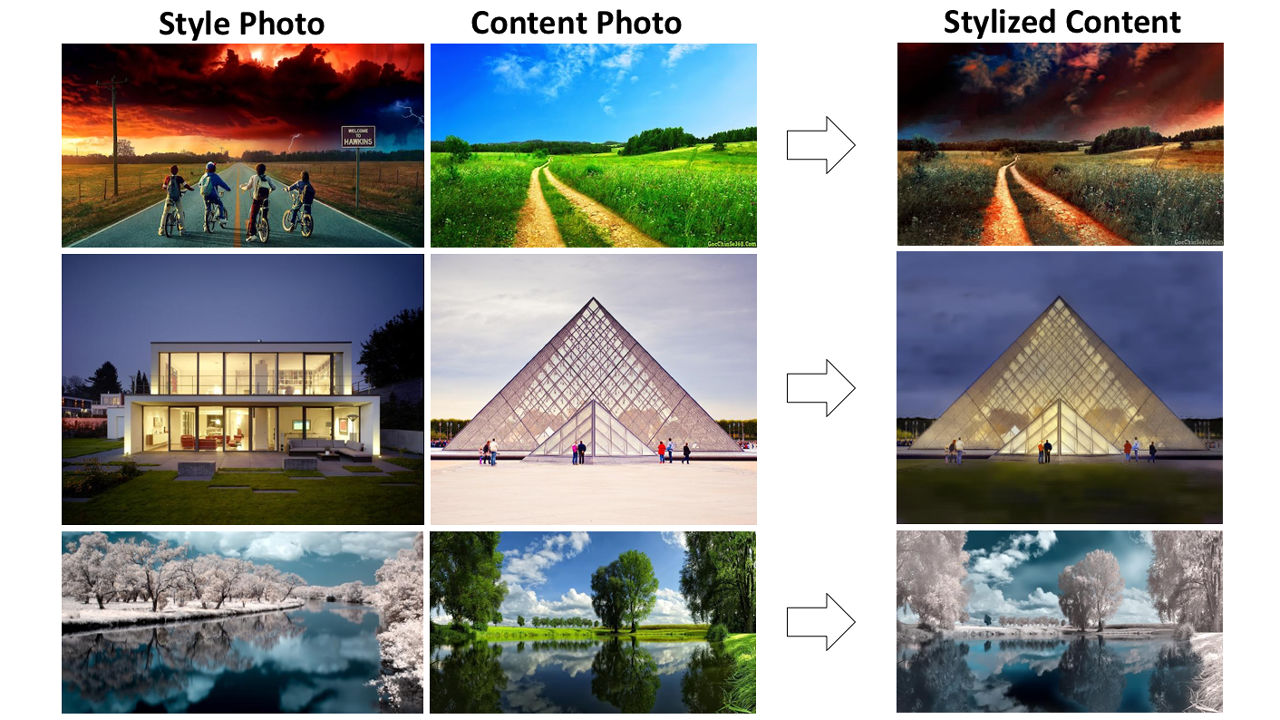

A Closed-form Solution to Photorealistic Image Stylization (2018)[github]

2021 - COIN: COmpression with Implicit Neural representations

[pix2code]: Generating Code from a Graphical User Interface Screenshot [github]

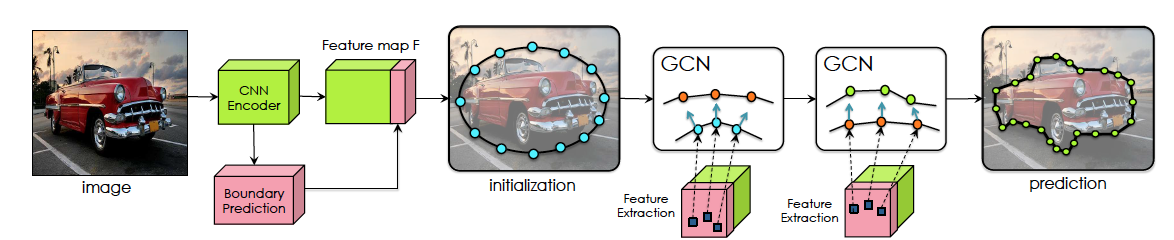

Fast Interactive Object Annotation with Curve-GCN (2019)

2017 - Learning Fashion Compatibility with Bidirectional LSTMs[github]

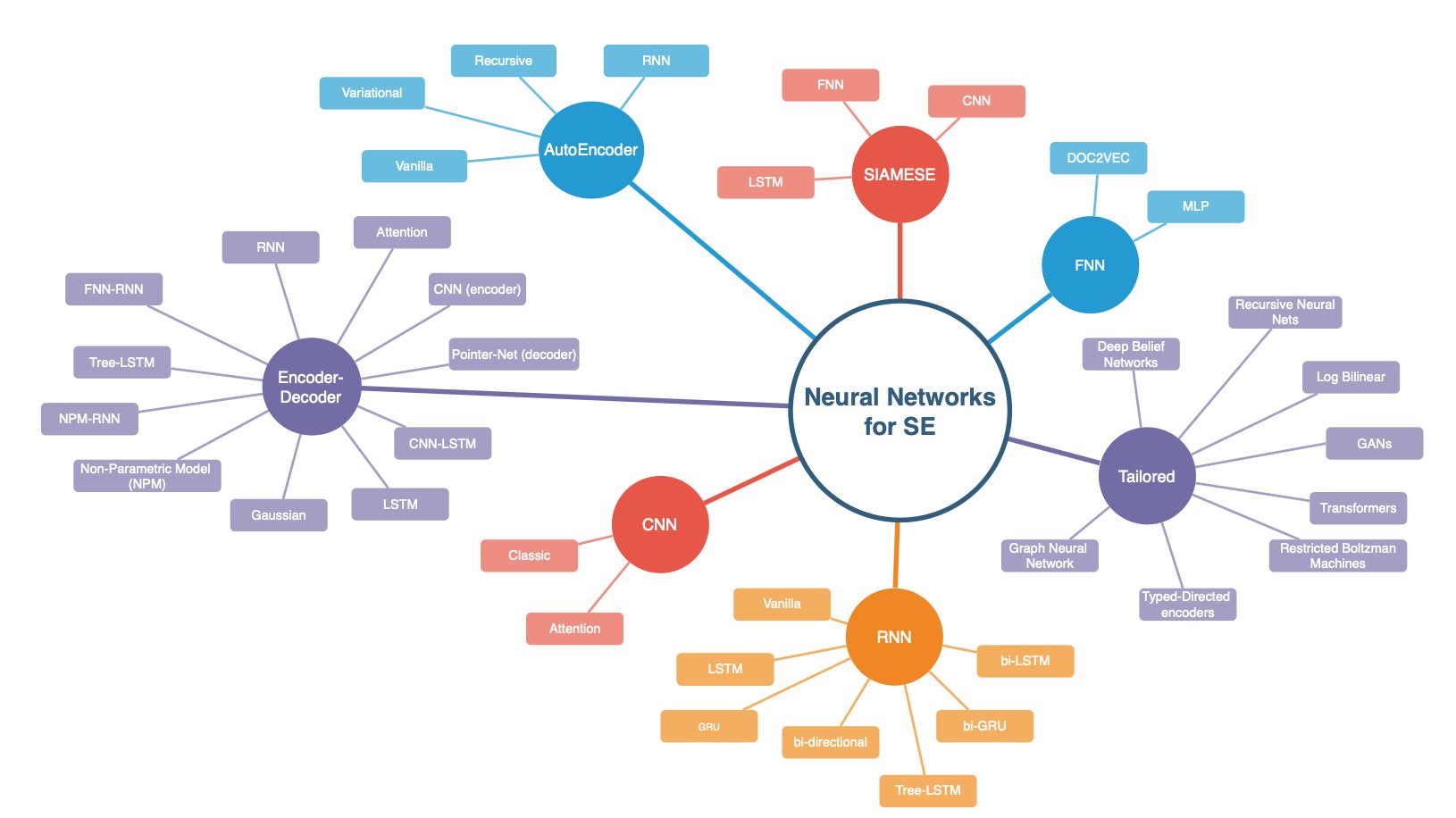

2020 - A Systematic Literature Review on the Use of Deep Learning in Software Engineering Research

2020 - Fourier Neural Operator for Parametric Partial Differential Equations

Caffe: Convolutional Architecture for Fast Feature Embedding

Tune: A Research Platform for Distributed Model Selection and Training (2018) [github]

Glow: Compiler for Neural Network hardware accelerators

Lucid: A collection of infrastructure and tools for research in neural network interpretability

PySyft: A generic framework for privacy preserving deep learning [github]

Crypten: A framework for Privacy Preserving Machine Learning [github]

[Snorkel]: Programmatically Building and Managing Training Data

[Netron ] Visualizer for deep learning and machine learning models

[Interactive Tools] for ML, DL and Math

[mlflow] - An open source platform for the machine learning lifecycle

[TorchIO] - Medical image preprocessing and augmentation toolkit for deep learning

[Ignite] - high-level library to help with training and evaluating neural networks

[Cadene] - Pretrained models for Pytorch

[Rapid] - Open GPU Data Science

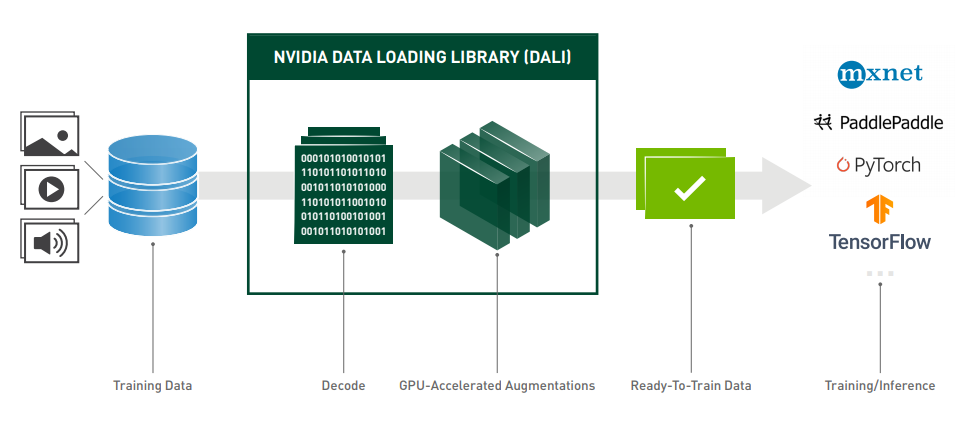

[DALI] - NVIDIA Data Loading Library

[Ray] - Fast and Simple Distributed Computing

[PhotonAI] - A high level Python API for designing and optimizing machine learning pipelines.

[DeepImageJ]: A user-friendly environment to run deep learning models in ImageJ

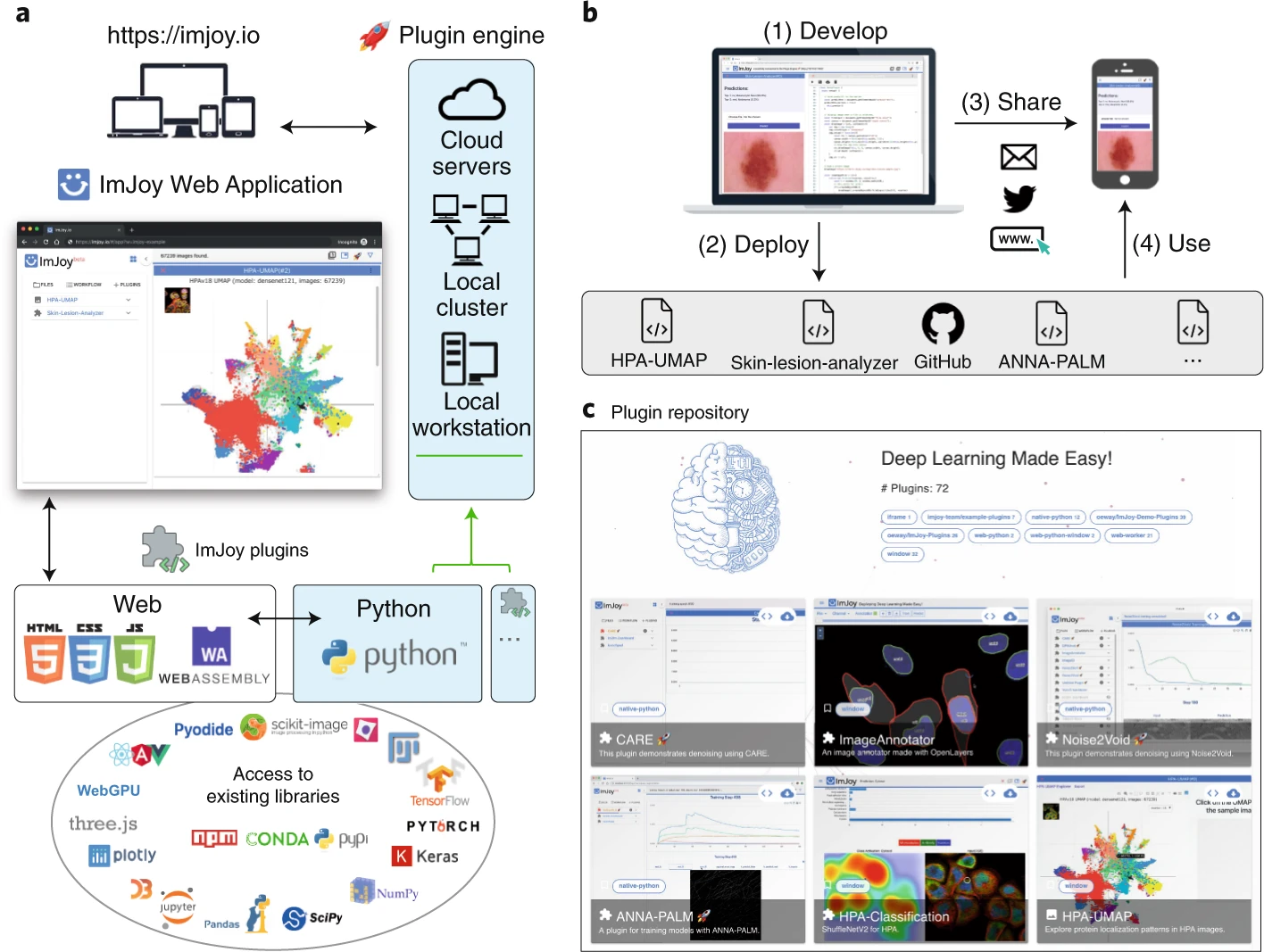

[ImJoy]: Deep Learning Made Easy!

[BioImage.IO]: Bioimage Model Zoo

Analytics Zoo (Intel): Distributed TensorFlow, PyTorch, Keras and BigDL on Apache Spark & Ray

Analytics Zoo is an open source Big Data AI platform, and includes the following features for scaling end-to-end AI to distributed Big Data:

Orca: seamlessly scale out TensorFlow and PyTorch for Big Data (using Spark & Ray)

RayOnSpark: run Ray programs directly on Big Data clusters

BigDL Extensions: high-level Spark ML pipeline and Keras-like APIs for BigDL

Chronos: scalable time series analysis using AutoML

PPML: privacy preserving big data analysis and machine learning (experimental)

[OpenMMLab] - Open source projects for academic research and industrial applications

2021 - BTrack: Bayesian Tracker

BayesianTracker (btrack) is a Python library for multi object tracking, used to reconstruct trajectories in crowded fields. Here, we use a probabilistic network of information to perform the trajectory linking. This method uses spatial information as well as appearance information for track linking.

2016 - An Analysis of Deep Neural Network Models for Practical Applications

2017 - Revisiting Unreasonable Effectiveness of Data in Deep Learning Era

2019 - High-performance medicine: the convergence of human and artificial intelligence

2020 - Maithra Raghu, Eric Schmidt. A Survey of Deep Learning for Scientific Discovery

[ml-surveys [github]] - a selection of survey papers summarizing the advances in the field 📜

[DALI] - NVIDIA Data Loading Library

2016 - Building Machines That Learn and Think Like People ⭕

2016 - A Berkeley View of Systems Challenges for AI

2018 - Deep Learning: A Critical Appraisal

2018 - Human-level intelligence or animal-like abilities?

2018 - When Will AI Exceed Human Performance? Evidence from AI Experts

2018 - The Malicious Use of Artificial Intelligence: Forecasting, Prevention, and Mitigation

Biological evolution provides a creative fount of complex and subtle adaptations, often surprising the scientists who discover them. However, because evolution is an algorithmic process that transcends the substrate in which it occurs, evolution's creativity is not limited to nature. Indeed, many researchers in the field of digital evolution have observed their evolving algorithms and organisms subverting their intentions, exposing unrecognized bugs in their code, producing unexpected adaptations, or exhibiting outcomes uncannily convergent with ones in nature. Such stories routinely reveal creativity by evolution in these digital worlds, but they rarely fit into the standard scientific narrative. Instead they are often treated as mere obstacles to be overcome, rather than results that warrant study in their own right. The stories themselves are traded among researchers through oral tradition, but that mode of information transmission is inefficient and prone to error and outright loss. Moreover, the fact that these stories tend to be shared only among practitioners means that many natural scientists do not realize how interesting and lifelike digital organisms are and how natural their evolution can be. To our knowledge, no collection of such anecdotes has been published before. This paper is the crowd-sourced product of researchers in the fields of artificial life and evolutionary computation who have provided first-hand accounts of such cases. It thus serves as a written, fact-checked collection of scientifically important and even entertaining stories. In doing so we also present here substantial evidence that the existence and importance of evolutionary surprises extends beyond the natural world, and may indeed be a universal property of all complex evolving systems.

2019 - Deep Nets: What have they ever done for Vision? ✅

This is an opinion paper about the strengths and weaknesses of Deep Nets for vision. They are at the center of recent progress on artificial intelligence and are of growing importance in cognitive science and neuroscience. They have enormous successes but also clear limitations. There is also only partial understanding of their inner workings. It seems unlikely that Deep Nets in their current form will be the best long-term solution either for building general purpose intelligent machines or for understanding the mind/brain, but it is likely that many aspects of them will remain. At present Deep Nets do very well on specific types of visual tasks and on specific benchmarked datasets. But Deep Nets are much less general purpose, flexible, and adaptive than the human visual system. Moreover, methods like Deep Nets may run into fundamental difficulties when faced with the enormous complexity of natural images which can lead to a combinatorial explosion. To illustrate our main points, while keeping the references small, this paper is slightly biased towards work from our group.

2020 - State of AI Report 2020

2020 - The role of artificial intelligence in achieving the Sustainable Development Goals

2020 - The Next Decade in AI: Four Steps Towards Robust Artificial Intelligence ⭕

Recent research in artificial intelligence and machine learning has largely emphasized general-purpose learning and ever-larger training sets and more and more compute. In contrast, I propose a hybrid, knowledge-driven, reasoning-based approach, centered around cognitive models, that could provide the substrate for a richer, more robust AI than is currently possible.

2021 - Why AI is Harder Than We Think by Melanie Mitchell

Since its beginning in the 1950s, the field of artificial intelligence has cycled several times between periods of optimistic predictions and massive investment ("AI spring") and periods of disappointment, loss of confidence, and reduced funding ("AI winter"). Even with today's seemingly fast pace of AI breakthroughs, the development of long-promised technologies such as self-driving cars, housekeeping robots, and conversational companions has turned out to be much harder than many people expected. One reason for these repeating cycles is our limited understanding of the nature and complexity of intelligence itself. In this paper I describe four fallacies in common assumptions made by AI researchers, which can lead to overconfident predictions about the field. I conclude by discussing the open questions spurred by these fallacies, including the age-old challenge of imbuing machines with humanlike common sense.