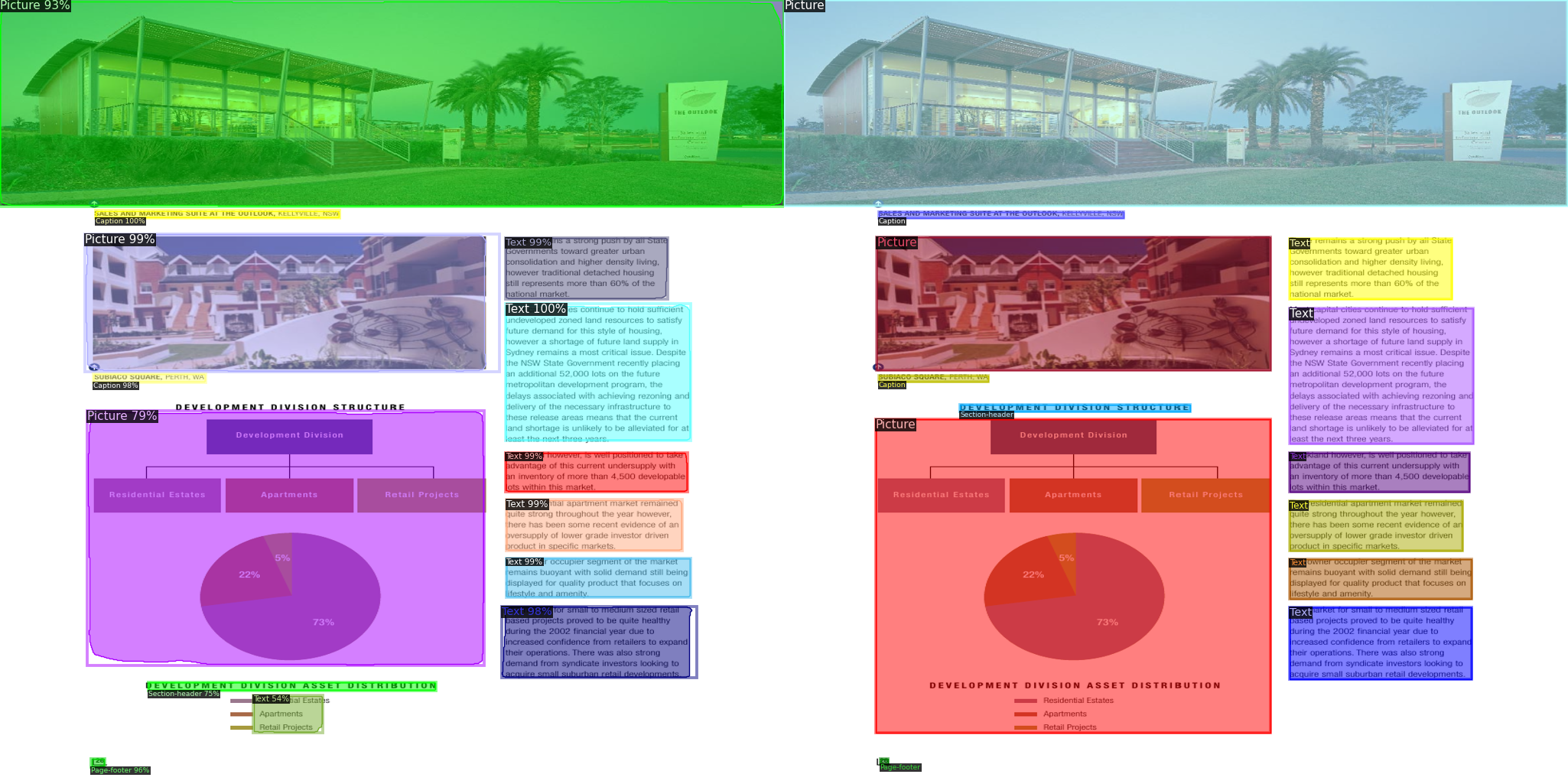

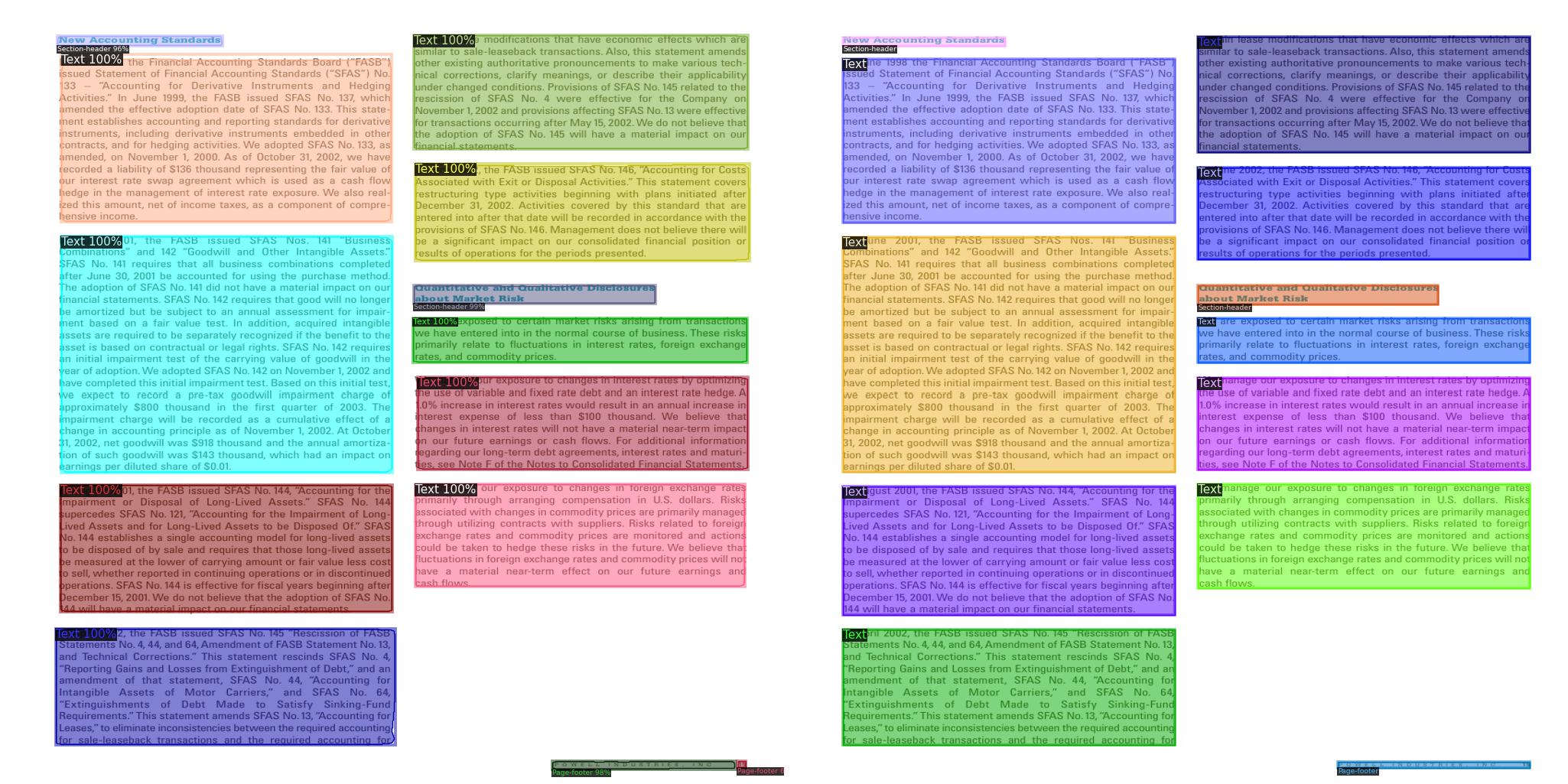

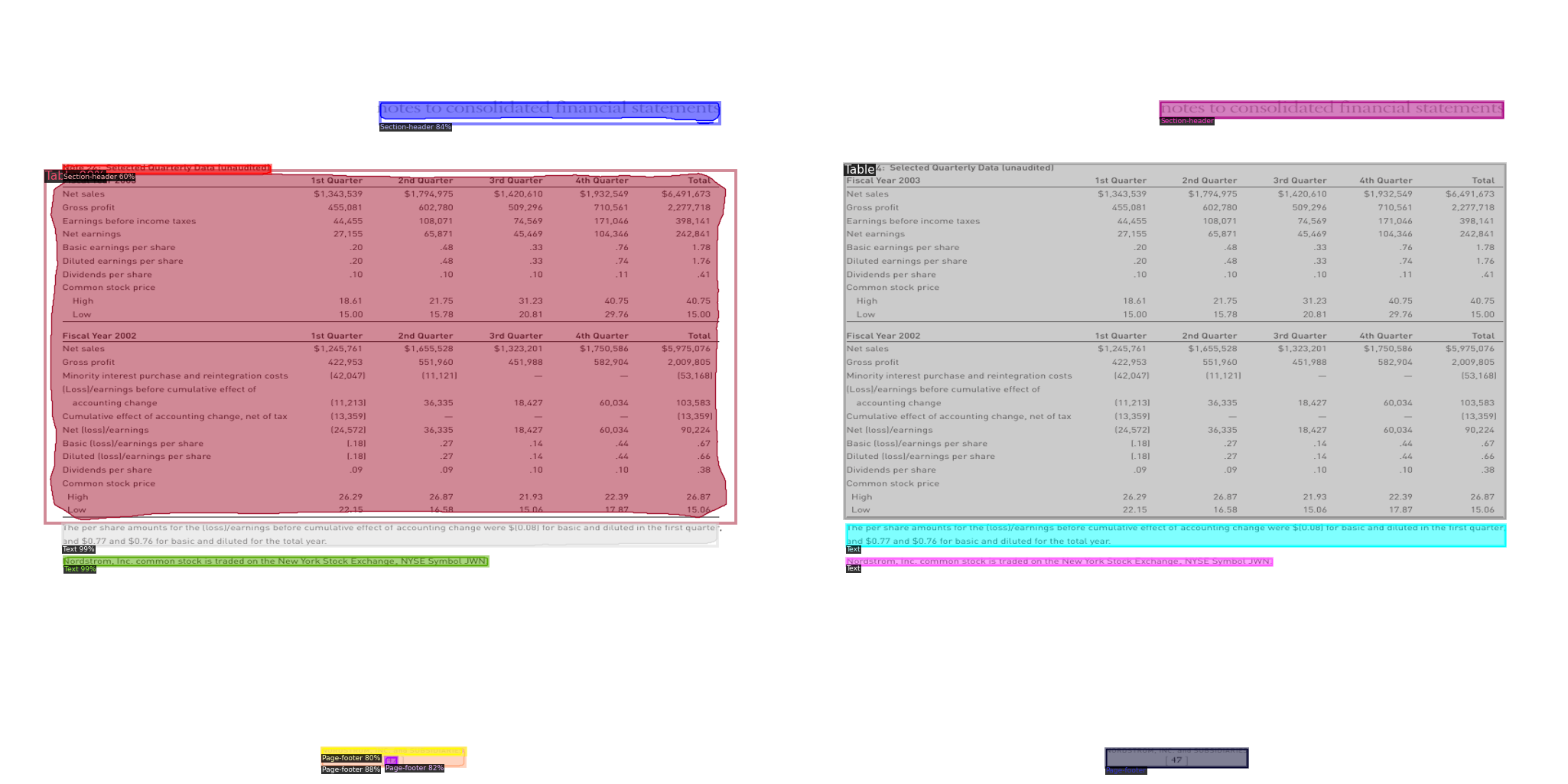

PyTorch implementation of the paper SwinDocSegmenter: An End-to-End Unified Domain Adaptive Transformer for Document Instance Segmentation. The model is built on top of the detectron2 framework. It can be used to analyze complex layouts, including magazines, scientific reports, historical documents, and patents, as shown in the following examples.

[Example segmentation outputs: Magazines, Scientific Reports, Tables, Others]

    git clone https://github.com/ayanban011/SwinDocSegmenter.git
    cd SwinDocSegmenter

Then follow the installation instructions.

To evaluate a trained model:

    python ./train_net.py \
        --config-file maskdino_R50_bs16_50ep_4s_dowsample1_2048.yaml \
        --eval-only \
        --num-gpus 1 \
        MODEL.WEIGHTS ./model_final.pth

To train:

    python train_net.py --num-gpus 1 --config-file config_path SOLVER.IMS_PER_BATCH SET_TO_SOME_REASONABLE_VALUE SOLVER.BASE_LR SET_TO_SOME_REASONABLE_VALUE

In train_net.py:

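    # Needed only if train_net.py does not already import these names.
    from detectron2.data import MetadataCatalog
    from detectron2.data.datasets import register_coco_instances

    def main(args):
        # Register the COCO-format training and validation sets.
        register_coco_instances("dataset_train", {}, "path to the ground truth json file", "path to the training image folder")
        register_coco_instances("dataset_val", {}, "path to the ground truth json file", "path to the validation image folder")
        # Replace the placeholder with the list of class names of your dataset.
        MetadataCatalog.get("dataset_train").thing_classes = ['name of the classes']
        MetadataCatalog.get("dataset_val").thing_classes = ['name of the classes']
        ...

    if __name__ == "__main__":
        ...
        MetadataCatalog.get("dataset_train").thing_classes = ['name of the classes']
        MetadataCatalog.get("dataset_val").thing_classes = ['name of the classes']
        ...

In the config file: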

    ...
    SEM_SEG_HEAD:
      ...
      NUM_CLASSES: # no. of classes
    ...
    DATASETS:
      TRAIN: ("dataset_train",)
      TEST: ("dataset_val",)
    ...

In this section, we release the pre-trained weights for the best SwinDocSegmenter model variants trained on benchmark datasets.

| Dataset | Config file | Weights | AP |
|---|---|---|---|
| PubLayNet | config-publay | model | 93.72 |
| PRImA | config-prima | model | 54.39 |
| HJ Dataset | config-hj | model | 84.65 |
| TableBank | config-table | model | 98.04 |
| DocLayNet | config-doclay | model | 76.85 |

To test on custom images, put all the images in one folder and create a JSON file that lists the categories of the dataset together with the file name and image ID of every image. We have created a sample test set for DocLayNet. You can build test sets for other datasets by following the same structure.

Dataset Link: Custom DocLayNet Test Set

Annotations Link: A little annotations
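As a rough illustration of the JSON file described above, the following sketch builds one from a folder of images. It is not taken from this repository: the helper name `build_test_json` is hypothetical, and the key names (`categories`, `images`, `file_name`, `id`, `annotations`) are assumed from the COCO convention. The sample DocLayNet test set linked above remains the reference for the exact structure. COCO-style loaders usually also expect `height` and `width` for each image; they are left out here to keep the sketch dependency-free.

```python
import json
from pathlib import Path


def build_test_json(image_dir, class_names, out_path,
                    extensions=(".png", ".jpg", ".jpeg")):
    """Write a COCO-style JSON listing categories and images (no annotations).

    Hypothetical helper: key names are assumed from the COCO convention.
    """
    image_dir = Path(image_dir)
    # Collect image files in a stable (sorted) order so that IDs are reproducible.
    files = sorted(
        p for p in image_dir.iterdir()
        if p.is_file() and p.suffix.lower() in extensions
    )
    data = {
        # Category IDs start at 1 and follow the order of class_names.
        "categories": [
            {"id": i + 1, "name": name} for i, name in enumerate(class_names)
        ],
        # One entry per image: a unique ID plus the file name inside image_dir.
        "images": [
            {"id": i + 1, "file_name": p.name} for i, p in enumerate(files)
        ],
        # Test images carry no ground truth, so the annotation list stays empty.
        "annotations": [],
    }
    Path(out_path).write_text(json.dumps(data, indent=2))
    return data
```

A call such as `build_test_json("custom_images", ["Text", "Table"], "custom_test.json")` would write the file, and the resulting JSON path and image folder could then be passed to `register_coco_instances` in `train_net.py`. The folder name, class names, and output name in that call are placeholders.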

Please refer to the SemiDocSeg folder for generating support sets and new_annotations. The rest of the training and testing procedure is the same as for SwinDocSegmenter.

If you find this useful for your research, please cite it as follows:

    @article{banerjee2023swindocsegmenter,
      title={SwinDocSegmenter: An End-to-End Unified Domain Adaptive Transformer for Document Instance Segmentation},
      author={Banerjee, Ayan and Biswas, Sanket and Llad{\'o}s, Josep and Pal, Umapada},
      journal={arXiv preprint arXiv:2305.04609},
      year={2023}
    }

    @article{banerjee2023semidocseg,
      title={SemiDocSeg: Harnessing Semi-Supervised Learning for Document Layout Analysis},
      author={Banerjee, Ayan and Biswas, Sanket and Llad{\'o}s, Josep and Pal, Umapada},
      year={2023}
    }

Many thanks to these excellent open-source projects.

Thank you for your interest in our work, and sorry if there are any bugs.