Final project for the MLOps Zoomcamp course hosted by DataTalksClub. This project can be considered an example of how to develop an end-to-end ML-based system.

Disclaimer: the ML here is very simple because the goal is to try out MLOps tools, not to beat SOTA :)

- mlops_zoomcamp_project

The task is to determine the music genre of a WAV recording: the input is a recording, and the output is the predicted musical genre (class). For this project the following genres were analyzed: {blues, classical, country, disco, hip-hop, jazz, metal, pop, reggae, rock}.

For training and validation I used the GTZAN dataset, which is a sort of MNIST for sound. Some genres were then supplemented with recordings from the ISMIR dataset.

For testing, I collected some audio recordings manually (to detect data drift). Only a piece of the training data, cropped test audios and cropped random audios without labels are provided in the repo, because audio files take up a lot of disk space; also, working with the full dataset version is resource-hungry and takes about half an hour (depending on hardware). If you wish, the full training dataset can be downloaded from here. You can also use your own audio files: the code is designed to work with wav, mp3 and mp4. Note that the data folder should have the following structure (with .mp3 or .mp4 instead of .wav if needed), e.g.:

data_folder_name (e.g., genres_original_subset)

|-- genre_name

| |-- genre_name.xxxxx.wav

| |-- genre_name.xxxxx.wav

|-- another_genre_name

| |-- another_genre_name.xxxxx.wav

| |-- another_genre_name.xxxxx.wav

Extracted features from the whole dataset are located in the data/processed folder. These features plus the target encoder are enough to train ML models.
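Because the genre label comes from the parent folder name, the layout above is enough to build a labeled file list. A minimal sketch, assuming exactly the folder structure described above; the function name list_labeled_audio is hypothetical and not taken from the project's src/preprocessing code:

```python
from __future__ import annotations

from pathlib import Path

# Extensions the README says the code accepts.
AUDIO_EXTENSIONS = {".wav", ".mp3", ".mp4"}


def list_labeled_audio(data_folder: str | Path) -> list[tuple[Path, str]]:
    """Return (audio_path, genre) pairs, taking the genre from the subfolder name."""
    root = Path(data_folder)
    samples = []
    # One subfolder per genre; files of other types are ignored.
    for genre_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        for audio in sorted(genre_dir.iterdir()):
            if audio.is_file() and audio.suffix.lower() in AUDIO_EXTENSIONS:
                samples.append((audio, genre_dir.name))
    return samples
```

Called on a folder such as genres_original_subset, it returns pairs of the form (path, "blues"); any file that is not wav, mp3 or mp4 is skipped.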

The task of genre prediction can be treated as multiclass classification. To keep things simple, a random 30-second segment was selected from each audio file and widely used audio features were extracted (mel-frequency cepstral coefficients, spectral bandwidth, root mean square energy, etc.). For each vectorized feature, the mean and variance were calculated, and three types of classifiers were trained (RandomForest, XGBoost and KNearestNeighbors).
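The two preprocessing ideas in this paragraph, a random 30-second crop and mean/variance pooling of frame-level features, can be sketched with NumPy. This assumes the frame-level features were already computed, e.g., with librosa.feature.mfcc, librosa.feature.spectral_bandwidth or librosa.feature.rms, which return arrays shaped (n_coefficients, n_frames); the function names and the feature-naming scheme are hypothetical:

```python
from __future__ import annotations

import numpy as np


def random_crop(signal: np.ndarray, sr: int, seconds: float = 30.0,
                rng: np.random.Generator | None = None) -> np.ndarray:
    """Take a random contiguous window of `seconds` from a 1-D signal (whole signal if shorter)."""
    rng = rng or np.random.default_rng()
    window = int(seconds * sr)
    if len(signal) <= window:
        return signal
    # integers() excludes the upper bound, so the window always fits.
    start = int(rng.integers(0, len(signal) - window + 1))
    return signal[start:start + window]


def summarize(features: dict[str, np.ndarray]) -> dict[str, float]:
    """Collapse (n_coeffs, n_frames) feature matrices into mean and variance per coefficient."""
    row = {}
    for name, matrix in features.items():
        matrix = np.atleast_2d(matrix)  # 1-D features become a single coefficient row
        for i, (mean, var) in enumerate(zip(matrix.mean(axis=1), matrix.var(axis=1))):
            row[f"{name}_{i}_mean"] = float(mean)
            row[f"{name}_{i}_var"] = float(var)
    return row
```

Pooling turns variable-length frame sequences into one fixed-length row per recording, which is the tabular input that RandomForest, XGBoost and KNN expect.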

The GTZAN dataset is balanced (100 recordings per genre), but after some classes were supplemented with data from ISMIR, the dataset was no longer balanced. Therefore the G-Mean score was used to measure model performance.
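G-Mean here is taken to be the geometric mean of per-class recall, the usual multiclass definition: a model cannot score well by favoring the majority genres, because a single poorly recalled class pulls the whole score down. A pure-Python sketch of that definition (the README does not say which implementation the project uses; imbalanced-learn provides a ready-made geometric_mean_score in imblearn.metrics):

```python
from __future__ import annotations

import math
from collections import Counter
from typing import Hashable, Sequence


def g_mean_score(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Geometric mean of per-class recall over the classes present in y_true."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    support = Counter(y_true)
    if not support:
        raise ValueError("empty input")
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    recalls = [hits[c] / n for c, n in support.items()]
    # A class with zero recall drives the whole score to 0.
    if min(recalls) == 0:
        return 0.0
    # exp(mean(log r)) is the geometric mean, computed in log space for stability.
    return math.exp(sum(math.log(r) for r in recalls) / len(recalls))
```

For example, with y_true = [0, 0, 1, 1] and y_pred = [0, 1, 1, 1], the per-class recalls are 0.5 and 1.0, so the G-Mean is sqrt(0.5), roughly 0.71, while plain accuracy would be 0.75.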

.dockerignore

.github

|-- workflows # CI/CD

| |-- test.yaml

.gitignore

.pre-commit-config.yaml # pre-commit hooks

LICENSE

Makefile # useful commands for quality checks, running unit tests, etc.

Pipfile # requirements for audioprocessor_dev image

Pipfile.lock

README.md

configs

|-- grafana_dashboards.yaml # dashboards config

|-- grafana_datasources.yaml # datasources config

|-- init.sql # creates databases when PostgreSQL starts

|-- prometheus.yaml # prometheus datasources

dashboards

|-- model_metrics.json # Grafana Dashboard

data

|-- artifacts # registered models from mlflow

| |-- 1 # the best model

| |-- 2 # second best model

|-- processed # vectorized features to train ML models

| |-- feature_names.pkl # feature names (w/o target)

| |-- target_encoder.pkl # str->int

| |-- test.pkl # test data

| |-- train.pkl # train data

| |-- train_subset.pkl # train subset

| |-- val.pkl # validation data

|-- raw

| |-- genres_original_eval # test data

| | |-- blues

| | |-- classical

| | |-- country

| | |-- disco

| | |-- hiphop

| | |-- jazz

| | |-- metal

| | |-- pop

| | |-- reggae

| | |-- rock

| |-- genres_original_subset # same structure as genres_original_eval; training subset for quick code health checks

| |-- genres_original # not present in the repo; the full dataset version should be placed here

| |-- random_data_cut # random data to check inference

docker-compose.yaml # main docker compose file to collect all the images

docker_env # env variables for images

|-- audio_processor.env

|-- aws_credentials.env

|-- localstack.env

|-- mlflow.env

|-- postgres.env

|-- prefect.env # prefect cloud keys

|-- tritonserver.env

docker_makefiles # mounted as ordinary Makefiles into the corresponding images

|-- Makefile_audioprocessor

|-- Makefile_tritonclient

|-- Makefile_tritonserver

dockerfiles

|-- Dockerfile.audio # dev image

|-- Dockerfile.mlflow # mlflow service

|-- Dockerfile.postgres # PostgreSQL service

|-- Dockerfile.tritonclient # tritonclient service

|-- Dockerfile.tritonserver # tritonserver service

images

|-- stack.png

|-- system_arch.png

src

|-- __init__.py

|-- deploy

| |-- client # sends requests from the client

| | |-- client.py

| |-- conda-pack # conda environment for triton models

| | |-- Dockerfile.condapack

| | |-- conda.yaml

| |-- triton_models # models to be deployed

| | |-- ensemble_1

| | | |-- config.pbtxt

| | |-- post_processor_1

| | | |-- 1

| | | | |-- model.py

| | | | |-- target_encoder.pkl

| | | |-- config.pbtxt

| | |-- pre_processor_1

| | | |-- 1

| | | | |-- model.py

| | | |-- config.pbtxt

| | |-- predictor_1

| | | |-- 1

| | | | |-- model.pkl

| | | | |-- model.py

| | | |-- config.pbtxt

| | |-- predictor_2

| | | |-- 1

| | | | |-- model.pkl

| | | | |-- model.py

| | | |-- config.pbtxt

|-- monitoring # monitoring module

| |-- monitor.py

|-- preprocessing # feature extraction routines

| |-- audio.py

| |-- configs

| | |-- audio_config.yaml

| |-- feature_extractor.py

| |-- get_data_from_s3.py

| |-- preprocessing_workflows.py

| |-- put_data_to_s3.py

| |-- utils.py

|-- register # promotes models to the mlflow registry

| |-- register.py

|-- scripts # scripts to run inside images

| |-- create_data_buckets.sh

| |-- create_server_buckets.sh

| |-- run_mlflow.sh

| |-- run_prefect.sh

|-- training # training routines

| |-- trainer.py

| |-- training_workflows.py

tests # unit tests

|-- test_audios.py

|-- test_data

| |-- audio_config.yaml

| |-- dustbus.mp3

| |-- dustbus.wav

The system is deployed with Docker and can be run locally using docker-compose. If you don't have docker-compose, check these instructions. You will also need to create a Prefect account before running docker-compose (Prefect Cloud is used for orchestration). First register or log in to Prefect Cloud, create a workspace, create a work pool with any name you like inside the workspace, and finally generate an access key. Then assign the generated access key and the pool and workspace names to PREFECT_KEY, PREFECT_POOL and PREFECT_WORKSPACE in prefect.env.
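A quick sanity check before starting docker-compose can save a failed Prefect login. A minimal sketch, assuming prefect.env (in docker_env) uses plain KEY=VALUE lines as docker-compose env files do; the parser is deliberately simple and the helper names are hypothetical:

```python
from __future__ import annotations

from pathlib import Path

REQUIRED_KEYS = ("PREFECT_KEY", "PREFECT_POOL", "PREFECT_WORKSPACE")


def read_env_file(path: str | Path) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for line in Path(path).read_text().splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop optional surrounding quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_prefect_settings(path: str | Path) -> list[str]:
    """Return the required Prefect keys that are absent or empty in the env file."""
    values = read_env_file(path)
    return [key for key in REQUIRED_KEYS if not values.get(key)]
```

missing_prefect_settings("docker_env/prefect.env") returns an empty list when all three values are filled in.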

If you want to work with the full dataset version (this is not required for code testing), download it from here and save it into src/data/raw. Check that the downloaded genres_original folder has the same structure as genres_original_subset.
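That structure check can be automated. A minimal sketch, assuming the naming convention shown earlier (genre_name.xxxxx.ext inside a folder named after the genre); the function name structure_problems is hypothetical:

```python
from __future__ import annotations

from pathlib import Path

AUDIO_EXTENSIONS = {".wav", ".mp3", ".mp4"}


def structure_problems(reference: str | Path, candidate: str | Path) -> list[str]:
    """Compare candidate's genre folders with reference's and flag misnamed audio files."""
    ref_genres = {p.name for p in Path(reference).iterdir() if p.is_dir()}
    cand_root = Path(candidate)
    cand_genres = {p.name for p in cand_root.iterdir() if p.is_dir()}
    problems = [f"missing genre folder: {g}" for g in sorted(ref_genres - cand_genres)]
    problems += [f"unexpected genre folder: {g}" for g in sorted(cand_genres - ref_genres)]
    # Inside shared genre folders, audio files should start with "<genre>.".
    for genre in sorted(cand_genres & ref_genres):
        for f in sorted((cand_root / genre).iterdir()):
            if f.suffix.lower() in AUDIO_EXTENSIONS and not f.name.startswith(f"{genre}."):
                problems.append(f"file not named after its genre: {genre}/{f.name}")
    return problems
```

Calling it with the genres_original_subset folder as the reference and the downloaded genres_original folder as the candidate returns an empty list when the layouts match.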

All required services can be started with the docker-compose file:

make build_and_up

Note that there are 9 services in docker-compose, so pulling and building them can take about an hour if you don't have any of the images yet.

For development and code checks, go inside the audioprocessor_dev container (you will need two separate terminal windows attached to this container):

docker exec -it audioprocessor_dev bash

Then run the following steps inside the container:

make create_buckets run_prefect

The create_buckets step creates buckets for raw data and extracted features, and the run_prefect step performs authorization and then starts the pool. Once the Prefect agent has started, switch to the second audioprocessor_dev window; all commands below must be executed inside this container.
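make create_buckets is the project's way to do this. For readers who want to poke at LocalStack from Python, here is a minimal, idempotent sketch with boto3 that creates only the buckets that don't exist yet. The endpoint http://localhost:4566 (LocalStack's default edge port) and the bucket names in the usage note are assumptions, not values taken from the project's env files:

```python
from __future__ import annotations

from typing import Any, Iterable


def make_localstack_s3(endpoint_url: str = "http://localhost:4566") -> Any:
    """Create a boto3 S3 client pointed at LocalStack (credentials come from the usual AWS env vars)."""
    import boto3  # imported lazily so ensure_buckets works with any client-like object

    return boto3.client("s3", endpoint_url=endpoint_url)


def ensure_buckets(s3: Any, names: Iterable[str]) -> list[str]:
    """Create every bucket in `names` that does not exist yet; return the ones created."""
    existing = {b["Name"] for b in s3.list_buckets().get("Buckets", [])}
    created = []
    for name in names:
        if name not in existing:
            # Outside us-east-1, real S3 also requires a CreateBucketConfiguration.
            s3.create_bucket(Bucket=name)
            existing.add(name)
            created.append(name)
    return created
```

ensure_buckets(make_localstack_s3(), ["raw-data", "features"]) would create the two (hypothetical) buckets on the first call and do nothing on later calls.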

Code quality and health can be checked either locally (outside the container):

make setup

make quality_checks unit_tests

or from the audioprocessor_dev container:

make setup

make quality_checks unit_tests

To check code quality before commits, run outside the container:

make pre_commit

Feature extraction is implemented as a separate Prefect deployment and uses prefect-dask for parallelization. In practice, feature extraction and model training don't need to run together; a more common use case is to extract features once and then experiment with different models on a fixed feature set.
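The "extract once, experiment many times" pattern can be sketched in plain Python: features are computed in parallel on the first call and pickled, and later training runs just load the cache. ThreadPoolExecutor stands in here for the prefect-dask task runner the project actually uses, and the cache path and extractor are hypothetical:

```python
from __future__ import annotations

import pickle
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def extract_all(items: Sequence[T], extract: Callable[[T], R], max_workers: int = 4) -> list[R]:
    """Apply `extract` to every item in parallel; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(extract, items))


def load_or_extract(cache: str | Path, items: Sequence[T], extract: Callable[[T], R]) -> list[R]:
    """Reuse pickled features if the cache file exists; otherwise extract once and save them."""
    cache = Path(cache)
    if cache.exists():
        with cache.open("rb") as fh:
            return pickle.load(fh)
    features = extract_all(items, extract)
    cache.parent.mkdir(parents=True, exist_ok=True)
    with cache.open("wb") as fh:
        pickle.dump(features, fh)
    return features
```

Trying a different classifier then costs only the training time, not another pass over the audio.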

Progress and logs can be tracked in the terminal running the Prefect agent (the first audioprocessor_dev window). After the first run, this deployment can also be launched from the Prefect Cloud UI.

There are three ways to do feature extraction:

- Run the full feature extraction (resource-hungry, takes about half an hour depending on the hardware). This step requires the full dataset version; download it from Google Drive using the link provided above

- Run feature extraction on a piece of the dataset, just for testing purposes

- Use the already extracted features from the full dataset

For the full feature extraction, it's assumed that the full dataset version from Google Drive has been downloaded into src/data/raw/genres_original. Check that the /data/raw/genres_original folder exists inside the container and is not empty. Then run the feature extraction pipelines:

# put wav files to s3

make put_raw_train_data_to_s3 put_raw_test_data_to_s3

# run feature extraction and save to features bucket

make preprocess_raw_train_data preprocess_raw_test_data

For debugging purposes, the data can be downloaded from s3 into /data/processed inside the container (this folder is mounted as a volume for /src/data/processed outside the container):

make get_features_from_s3

To run feature extraction on a piece of the dataset only:

# put wav files to s3

make put_raw_train_subset_to_s3 put_raw_test_data_to_s3

# run feature extraction and save to features bucket

make preprocess_raw_train_subset preprocess_raw_test_data

To use the already extracted features from the full dataset instead:

# put extracted features from full dataset version to s3

make put_processed_data_to_s3

Model training is implemented as a separate Prefect deployment that operates on previously extracted features. It's assumed that the features are stored in a separate s3 bucket. There are three pipelines (XGBClassifier, RandomForestClassifier and KNearestNeighborsClassifier):

make run_xgboost_training

make run_random_forest_training

make run_kneighbors_neighbors_classifier_training

Each run saves the input data, tunes model hyperparameters with Optuna and logs experiment parameters (e.g., metrics, model parameters) to MLflow. Note that only the best model's weights are saved to s3 within one experiment.

After the training stage, the two best models by validation G-Mean score are registered and promoted to the Production stage. This stage is implemented as a separate Prefect deployment as well:

make register_best_model

make copies

Checkpoints of the two best registered models are downloaded from s3 and copied into the folder with deployment-ready models. To see the registered models, check localhost:5001. After the register stage, audioprocessor_dev is no longer needed; the commands below are run in the inference containers.
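Promoting "the two best models by validation G-Mean" boils down to ranking runs by one metric. A minimal sketch over plain dictionaries; the metric name val_g_mean and the run record shape are hypothetical. Against a real tracking server, MlflowClient.search_runs with order_by=["metrics.val_g_mean DESC"] and max_results=2 would do the same ranking server-side:

```python
from __future__ import annotations

from typing import Any, Mapping, Sequence


def top_runs(runs: Sequence[Mapping[str, Any]], metric: str = "val_g_mean", k: int = 2) -> list[str]:
    """Return the IDs of the k runs with the highest `metric`; runs that never logged it are skipped."""
    scored = [r for r in runs if metric in r["metrics"]]
    scored.sort(key=lambda r: r["metrics"][metric], reverse=True)
    return [r["run_id"] for r in scored[:k]]
```

The returned run IDs are what a registration step would then turn into registered model versions.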

Models are deployed using Triton Inference Server:

Triton Inference Server is an open source inference serving software that streamlines AI inferencing. Triton enables teams to deploy any AI model from multiple deep learning and machine learning frameworks, including TensorRT, TensorFlow, PyTorch, ONNX, OpenVINO, Python, RAPIDS FIL, and more.

Triton is like an "advanced" Flask: it supports HTTP/gRPC requests and exposes service health metrics that can be collected with Prometheus. Other important features are efficient GPU usage, horizontal scaling of different parts of large models, an isolated conda environment for each model, and many more. For this project Triton is probably overkill, but it supports all the features I needed here, so I decided to use it.

Triton is not intended to be used with Prefect, and it provides all the necessary tools for both batch and streaming inference; this is the main reason why I didn't make a Prefect deployment for this stage, not because I can't :) By the way, I implemented batch inference.

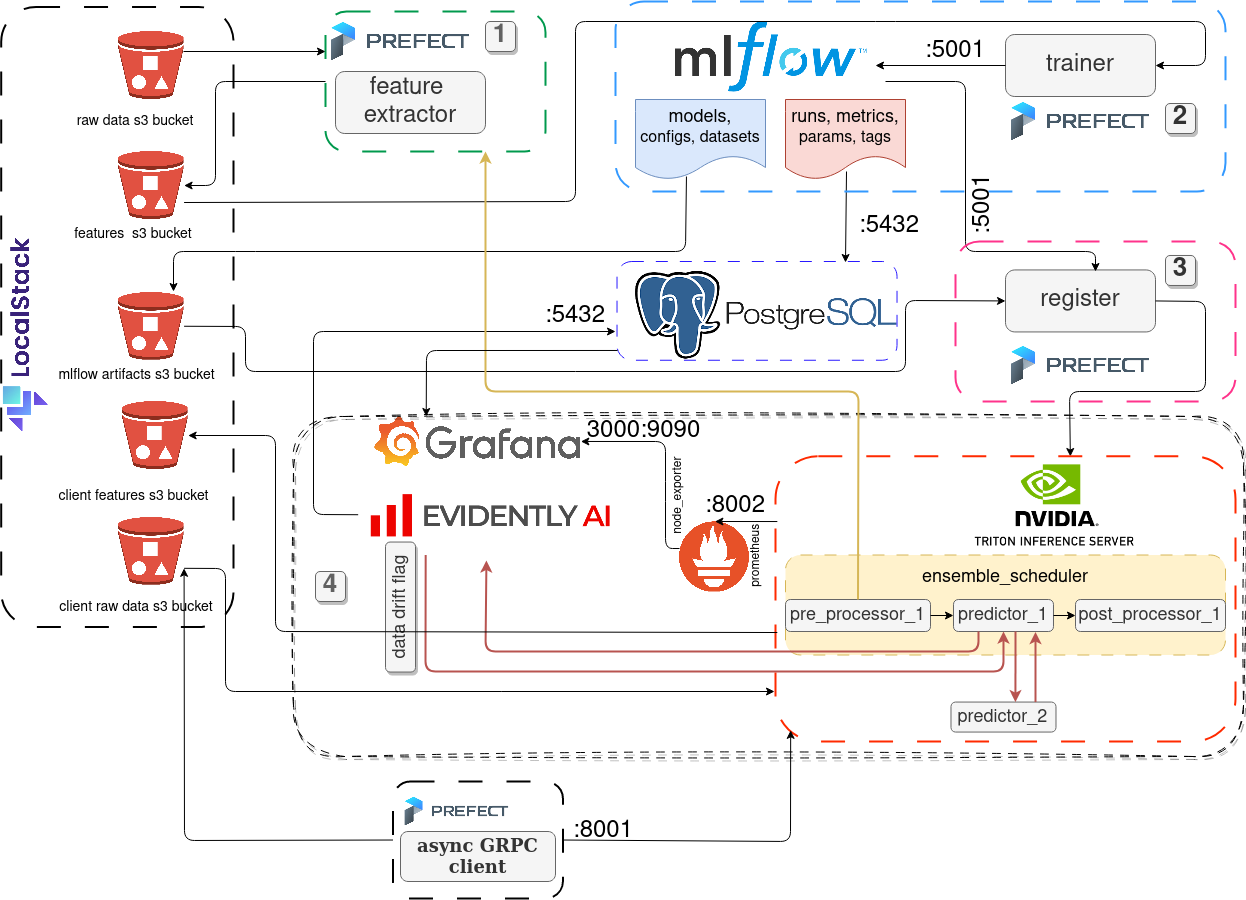

Inference is divided into three stages and implemented with five "models" (in Triton, each folder with a config.pbtxt file is called a model; config.pbtxt describes the model configuration: batch size, number of model instances, input/output types and names, model backend and other parameters):

- pre_processor_1 extracts a batch of features from the input audios and passes the vectorized features to predictor_1

- predictor_1 outputs a batch of integer predictions (class numbers) for the batch of vectorized features, checks for data drift, and logs the results to a PostgreSQL database. If data drift occurred, the batch of features is passed to predictor_2. This is a sort of mock of real monitoring; the second predictor is not actually better than the first

- predictor_2 is another model that makes predictions for a batch of vectorized features. It is the same as the first predictor (the model type may differ) except for monitoring; this model only outputs predictions

- post_processor_1 decodes integer predictions into human-readable music genre names

- ensemble_1 is the ensemble scheduler that orchestrates pre_processor, predictor and post_processor
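The data flow through the five Triton models can be summarized as plain function composition. This is only a sketch of the control flow: in the project each stage is a separate Triton model wired together by ensemble_1's config.pbtxt, and every function name below is a hypothetical stand-in:

```python
from __future__ import annotations

from typing import Callable, Sequence


def run_ensemble(
    audios: Sequence[str],
    pre_process: Callable[[Sequence[str]], list],
    predictor_1: Callable[[list], list],
    predictor_2: Callable[[list], list],
    drift_detected: Callable[[list], bool],
    genres: Sequence[str],
) -> list[str]:
    """Mimic ensemble_1: pre-process, predict, fall back on drift, decode class ids."""
    features = pre_process(audios)          # pre_processor_1: audio -> feature vectors
    predictions = predictor_1(features)     # predictor_1: feature vectors -> class ids
    if drift_detected(features):            # monitoring step inside predictor_1
        predictions = predictor_2(features) # predictor_2 handles drifted batches
    return [genres[i] for i in predictions] # post_processor_1: class ids -> genre names
```

Passing a drift check that always returns True routes every batch through predictor_2, which is a handy way to exercise the fallback path.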

As mentioned above, Triton supports an individual environment for each model, but here a single environment is shared between the models, just to demonstrate this feature. Triton accepts a conda environment packed with conda-pack and specified as a parameter inside the config file (e.g., the config for the ensemble):

parameters: [
  {
    key: "EXECUTION_ENV_PATH",
    value: {string_value: "/models/conda-pack.tar.gz"}
  }
]

For reproducibility, building the environment is implemented in Dockerfile.condapack, and it can be done locally as follows:

make build_conda_pack

Building the conda environment is also implemented as a continuous deployment pipeline: it outputs the models folder with the conda-pack archive, ready to be used as-is with Triton. If the artifact has expired, re-run the build_conda_pack job.

To launch Triton Server, go inside the audioprocessor_server container and start it:

docker exec -it audioprocessor_server bash

# prepare stage: create buckets shared between client and server for raw data and extracted features

make make_buckets

# run the web service

make start_triton_server

When the service is ready, you will see the following messages:

[I0819 16:30:44.071854 62 grpc_server.cc:4819] Started GRPCInferenceService at 0.0.0.0:8001

[I0819 16:30:44.072438 62 http_server.cc:3477] Started HTTPService at 0.0.0.0:8000

[I0819 16:30:44.114924 62 http_server.cc:184] Started Metrics Service at 0.0.0.0:8002
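Instead of watching the logs, readiness can be polled over HTTP: Triton implements the KServe v2 health endpoint GET /v2/health/ready on its HTTP port (8000 above), which returns 200 once the server is ready to accept inference requests. A stdlib-only sketch; the timeout and polling interval are arbitrary choices:

```python
from __future__ import annotations

import time
import urllib.request


def wait_until_ready(base_url: str = "http://localhost:8000", timeout: float = 60.0,
                     interval: float = 1.0) -> bool:
    """Poll the readiness endpoint until it answers 200 or the timeout expires."""
    # Talk to the server directly, ignoring any proxy settings in the environment.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    deadline = time.monotonic() + timeout
    while True:
        try:
            with opener.open(f"{base_url}/v2/health/ready", timeout=interval) as resp:
                if resp.status == 200:
                    return True
        except OSError:  # connection refused, timeout or non-2xx (HTTPError): not ready yet
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

wait_until_ready() returns True as soon as the endpoint answers 200 and False if it never does within the timeout, so it can serve as a guard before starting the client.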

The Metrics Service at localhost:8002 is specified as a Prometheus data source in this config.
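Prometheus scrapes the Metrics Service in its plain-text exposition format from http://localhost:8002/metrics. A small sketch that sums one metric across label sets, e.g., Triton's nv_inference_request_success counter; the parser only handles simple sample lines (no braces inside label values), so treat it as a debugging aid rather than a replacement for Prometheus:

```python
from __future__ import annotations

import re

# Matches "name{labels} value" or "name value" sample lines.
_SAMPLE = re.compile(r"^([a-zA-Z_:][a-zA-Z0-9_:]*)(\{[^}]*\})?\s+(\S+)")


def metric_total(exposition: str, name: str) -> float:
    """Sum all samples of metric `name` (across label sets) in Prometheus text format."""
    total = 0.0
    for line in exposition.splitlines():
        line = line.strip()
        if line.startswith("#"):  # skip HELP/TYPE comments
            continue
        m = _SAMPLE.match(line)
        if m and m.group(1) == name:
            total += float(m.group(3))
    return total
```

To try it against the running server, fetch the page (e.g., with urllib.request.urlopen("http://localhost:8002/metrics").read().decode()) and pass the text to metric_total.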

After that, go inside the client container and run inference on the test data:

docker exec -it audioprocessor_client bash

# log in to Prefect

make run_prefect

make run_client_eval

make run_client_random

The data is chosen so that data drift occurs, so poor model performance is the expected behavior.
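The client in the project uses Triton's client library. As a dependency-free illustration of what an HTTP request to the ensemble looks like, here is a sketch of the KServe v2 REST protocol that Triton's HTTP endpoint implements. The input name AUDIO_PATHS, sending file paths as a BYTES tensor, and the [batch, 1] shape are hypothetical; the real names and shapes are whatever ensemble_1's config.pbtxt declares:

```python
from __future__ import annotations

import json
import urllib.request
from typing import Any, Sequence


def build_infer_request(audio_paths: Sequence[str], input_name: str = "AUDIO_PATHS") -> dict[str, Any]:
    """Build a KServe v2 JSON inference body with one BYTES input of shape [batch, 1]."""
    return {
        "inputs": [
            {
                "name": input_name,  # hypothetical; must match the model's config.pbtxt
                "shape": [len(audio_paths), 1],
                "datatype": "BYTES",
                "data": list(audio_paths),  # flat, row-major tensor contents
            }
        ]
    }


def parse_outputs(response: dict[str, Any]) -> dict[str, list]:
    """Map each output tensor name to its data list."""
    return {out["name"]: out["data"] for out in response.get("outputs", [])}


def infer(base_url: str, model: str, body: dict[str, Any]) -> dict[str, list]:
    """POST the body to /v2/models/<model>/infer and return the parsed outputs."""
    request = urllib.request.Request(
        f"{base_url}/v2/models/{model}/infer",
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(request, timeout=30) as resp:
        return parse_outputs(json.load(resp))
```

Against a running server this would be used as infer("http://localhost:8000", "ensemble_1", build_infer_request(paths)), with paths pointing to audio the server can reach.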

Model monitoring is implemented inside predictor_1 using Evidently AI and Grafana. The PostgreSQL database and Prometheus are specified as Grafana data sources in configs; the dashboard config is located here. To see the Grafana dashboard, open localhost:3000 and go to Dashboards -> model_metrics (the default username is admin, and the password is the same).

As mentioned above, when the first model makes a prediction for a batch, Evidently calculates data drift and saves the result to the PostgreSQL database. If data drift is detected, the prediction is made by the second model. Default Grafana alerting was also set up: it sends notifications inside Grafana if data drift occurs in a batch (I exported the alert, but for some reason I couldn't import it into the new image).
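The project computes drift with Evidently inside predictor_1. As a dependency-free illustration of the idea, not Evidently's implementation, the sketch below flags a batch as drifted when a large enough share of features changed distribution, measuring the change with the two-sample Kolmogorov-Smirnov statistic. Both thresholds are hypothetical, and real drift detectors usually compare a test's p-value (or another distance) against a threshold instead of a raw statistic cutoff:

```python
from __future__ import annotations

from bisect import bisect_right
from typing import Sequence


def ks_statistic(reference: Sequence[float], current: Sequence[float]) -> float:
    """Two-sample KS statistic: largest gap between the two empirical CDFs."""
    if not reference or not current:
        raise ValueError("both samples must be non-empty")
    ref, cur = sorted(reference), sorted(current)
    # Empirical CDFs only jump at observed values, so checking those points finds the maximum.
    points = sorted(set(ref) | set(cur))
    return max(abs(bisect_right(ref, x) / len(ref) - bisect_right(cur, x) / len(cur)) for x in points)


def dataset_drift(reference: dict[str, Sequence[float]], current: dict[str, Sequence[float]],
                  stat_threshold: float = 0.3, drift_share: float = 0.5) -> bool:
    """Flag the batch as drifted when at least `drift_share` of features exceed the KS threshold."""
    drifted = [name for name in reference if ks_statistic(reference[name], current[name]) > stat_threshold]
    return len(drifted) / len(reference) >= drift_share
```

When dataset_drift returns True for a batch, the prediction would come from the second model, mirroring the switch described above.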

- Problem description: a detailed description is provided

- Cloud: the project uses LocalStack and Docker images for the required services, brought together with docker-compose

- Experiment tracking and model registry: both experiment tracking and the model registry are used

- Workflow orchestration: the workflow is fully deployed but requires some manual actions due to the nature of Triton Inference Server

- Model deployment: special tools for model deployment are used (Triton Inference Server, and Triton Inference Client for requests)

- Model monitoring: comprehensive model monitoring that runs a conditional workflow (switching to a different model if data drift occurs) and generates dashboards for service health and data drift metrics

- Reproducibility: the instructions are clear (I hope so :)), it's easy to run the code (I hope so too :)) and it should work; I tested it

- Best practices:

  - There are unit tests

  - There is an integration test [?]: there is no separate integration test; I just ran all the code inside Docker containers and it worked. I don't understand what else is expected beyond unit tests.

  - Linters (flake8, pylint) and a code formatter (black) are used

  - There are a lot of Makefiles (a Makefile for the project and Makefiles for the services)

  - There are pre-commit hooks for quality checks

  - There's a CI/CD pipeline:

    - build_dev_image builds and pushes the audioprocessor_dev image to Docker Hub (CD)

    - run_unit_tests runs unit tests and computes code coverage from the built image (CI)

    - build_conda_pack builds the conda environment for Triton Inference Server and returns the archive as an artifact (CD)