This repository provides the code for the paper:

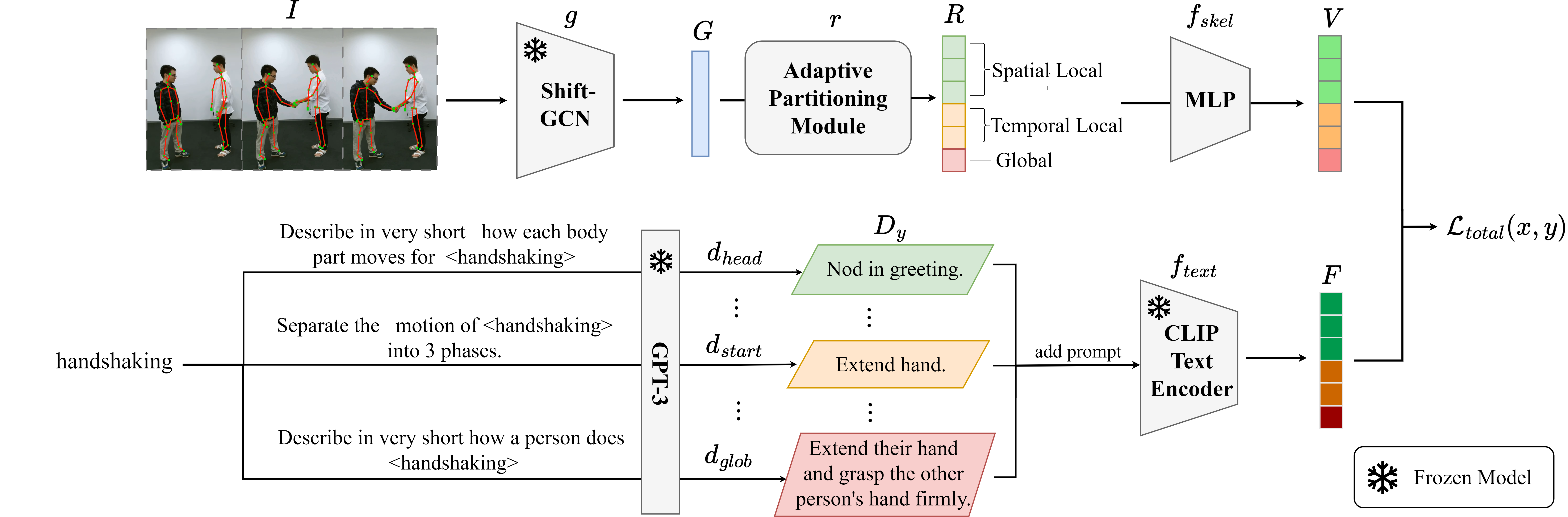

Part-aware Unified Representation of Language and Skeleton for Zero-shot Action Recognition by Anqi Zhu, Qiuhong Ke, Mingming Gong, James Bailey.

- Uploaded the main model architecture and its relevant package functions. Please visit model/purls.py. (19/06/2024)

- Released pre-print version on arXiv. Available on 21/06/2024. (19/06/2024)

- docs for
  - Prerequisites
  - Demo
  - Data Preparation
  - Testing Pre-trained Models
  - Training
  - Citation
- code for
  - basic organization and porting from the implemented code
  - pre-trained model data
  - data preprocessing

main.py also supports training a new model with customized configs. The script accepts the following parameters:

| Argument | Possible Values | Description |
|---|---|---|
| ntu | 60; 120 | Which NTU dataset to use |
| ss | 5; 12 (for NTU-60); 24 (for NTU-120) | Which split to use |
| st | r (for random) | Split type |
| phase | train; val | train (required for ZSL); for GZSL, run once with train and once with val |
| ve | shift; msg3d | Select the visual embedding model |
| le | w2v; bert | Select the language embedding model |
| num_cycles | Integer | Number of cycles (train for 10 cycles) |
| num_epoch_per_cycle | Integer | Number of epochs per cycle (1700 for split 5 (random), 1900 for the others) |
| latent_size | Integer | Size of the skeleton latent dimension (100 for NTU-60, 200 for NTU-120) |
| load_epoch | Integer | The epoch to load |
| load_classifier | - | Set if the pre-trained classifier is to be loaded |
| dataset | - | Path to the generated visual features |
| wdir | - | Path to the directory in which to store the weights |
| mode | train; eval | train to train the model; eval to evaluate a pre-trained model |
| gpu | - | Which GPU device number to train on |
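As a rough illustration of how these arguments fit together, here is a minimal sketch assuming they are exposed as `--name` command-line flags. This is not the repository's actual `main.py`, which may read them differently (the example command in this README passes a YAML config via `-c`). `build_parser`, `fill_recommended` and `VALID_SPLITS` are hypothetical names; the defaults only encode the recommendations stated in the table.

```python
import argparse

# Hypothetical: split sizes allowed per dataset, taken from the table
# (5 is assumed valid for both datasets; 12 is NTU-60 only, 24 is NTU-120 only).
VALID_SPLITS = {60: {5, 12}, 120: {5, 24}}


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser mirroring the argument table (not the real main.py)."""
    p = argparse.ArgumentParser(description="PURLS training/evaluation (sketch)")
    p.add_argument("--ntu", type=int, choices=[60, 120], default=60)
    p.add_argument("--ss", type=int, choices=[5, 12, 24], default=5)
    p.add_argument("--st", choices=["r"], default="r")  # r = random split
    p.add_argument("--phase", choices=["train", "val"], default="train")
    p.add_argument("--ve", choices=["shift", "msg3d"], default="shift")
    p.add_argument("--le", choices=["w2v", "bert"], default="w2v")
    p.add_argument("--num_cycles", type=int, default=10)
    p.add_argument("--num_epoch_per_cycle", type=int, default=None)
    p.add_argument("--latent_size", type=int, default=None)
    p.add_argument("--load_epoch", type=int, default=None)
    p.add_argument("--load_classifier", action="store_true")
    p.add_argument("--dataset", type=str)  # path to generated visual features
    p.add_argument("--wdir", type=str)  # directory to store weights in
    p.add_argument("--mode", choices=["train", "eval"], default="train")
    p.add_argument("--gpu", type=str, default="0")
    return p


def fill_recommended(args: argparse.Namespace) -> argparse.Namespace:
    """Validate the split and fill unset values with the table's recommendations."""
    if args.ss not in VALID_SPLITS[args.ntu]:
        raise ValueError(f"split {args.ss} is not listed for NTU-{args.ntu}")
    if args.latent_size is None:
        # 100 for NTU-60, 200 for NTU-120
        args.latent_size = 100 if args.ntu == 60 else 200
    if args.num_epoch_per_cycle is None:
        # 1700 for split 5 (random), 1900 for the others
        args.num_epoch_per_cycle = 1700 if (args.ss == 5 and args.st == "r") else 1900
    return args


if __name__ == "__main__":
    args = fill_recommended(build_parser().parse_args(["--ntu", "120", "--ss", "24"]))
    print(args.latent_size, args.num_epoch_per_cycle)  # 200 1900
```

Rejecting invalid dataset/split combinations up front (for example `--ntu 60 --ss 24`) avoids starting a long run on a split that the table does not define for that dataset.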

For example, to train PURLS for ZSL on the 55/5 split of NTU-60, use the following command:

```shell
python main.py -c configs/adaptive_purls_5r_clip_gb.yml
```

For any questions, feel free to open a new issue or contact:

Qiuhong Ke : qiuhong.ke@monash.edu

Anqi Zhu : azzh1@student.unimelb.edu.au