A BERT-powered Tweet text & metadata model for authoritarian platform manipulation detection in India

By: Hemanth Bharatha Chakravarthy & Em McGlone

2 May, 2022. All errors are our own.

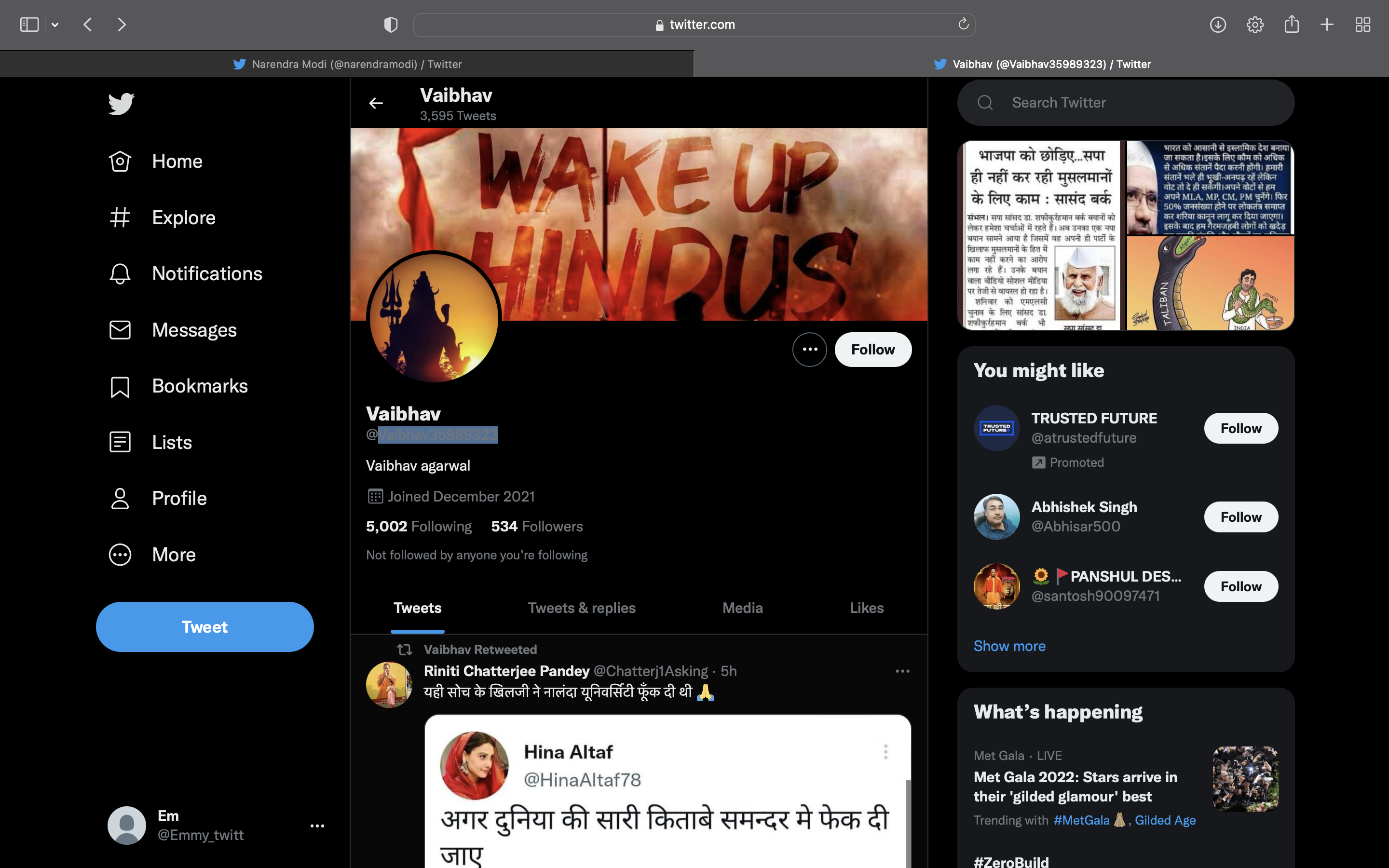

As politics moves onto Twitter, authoritarians must update the tools they use to manipulate discourse and amplify sentiments. While much attention has been given to automated “robots” that violate platform rules and post junk content, too little has been given to the paid armies of real users that strongmen leaders deploy to control trending charts and manipulate voter timelines. Our thesis is that the Indian paid armies of political Tweeters are exploited to add vitriol to the platform, drive nationalistic sentiments, and attack critics; that is, they change the nature of the text corpus on Twitter. Thus, we are interested in predicting whether an account is a platform manipulator based on its Tweets’ word embeddings and user metadata. An ideal dataset collected by scraping millions of Tweets during the peak of the 2019 Indian national election campaigns is used to subset-train the Google BERT base model and then test subsequent models built on the word embeddings. Through model tuning, comparison, and evaluation, we arrive at a random forest natural language processing model for Twitter platform manipulation prediction. The model is trained on the most relevant principal components of Tweet word embeddings and on metadata, such as likes or the number of days the account has existed, to predict a coordination indicator. The coordination indicator is true when the Tweet is associated with Tweets that were copy-pasted by multiple unique users. On the test set, the random forest model of choice has an accuracy of 74.07%, a sensitivity rate of 19.74%, and a specificity rate of 82.55%. This paper accompanies our NLP web application product, a Twitter “botocrat” detector, which is built upon this random forest model and is available here.
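The coordination indicator described above can be illustrated with a minimal Python sketch. It is not the labeling code used in the paper: the `normalize` step, the `min_users` threshold, and the toy Tweets are all assumptions made for illustration. It only shows the general idea of flagging text that several distinct users posted verbatim.

```python
from collections import defaultdict


def normalize(text: str) -> str:
    """Collapse case and whitespace so trivially different copies match.

    Assumption: the paper may define "copy-pasted" differently.
    """
    return " ".join(text.lower().split())


def coordination_labels(tweets, min_users=2):
    """Return one 0/1 label per Tweet.

    `tweets` is a list of (user, text) pairs. A Tweet is labeled 1 when
    its normalized text was posted by at least `min_users` distinct users.
    """
    users_by_text = defaultdict(set)
    for user, text in tweets:
        users_by_text[normalize(text)].add(user)
    return [
        1 if len(users_by_text[normalize(text)]) >= min_users else 0
        for _, text in tweets
    ]


# Hypothetical toy data, not taken from the paper's dataset.
sample = [
    ("user_a", "Vote for progress!"),
    ("user_b", "vote for  progress!"),
    ("user_a", "Good morning"),
    ("user_a", "Good morning"),  # the same user repeating itself
]
labels = coordination_labels(sample)
```

In this sketch a single user repeating their own Tweet is not flagged, since the definition above depends on multiple unique users posting the same text.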

- `botocracy.PDF` is the final paper deliverable. It is a self-standing document that outlines the data, wrangling, feature engineering, models tried, model evaluation, and the model choice and its discussion. It is our primary output.
- `/shiny/` is the final deliverable Shiny app. As we use a unique Python-R hybrid back-end, Shiny's default domain API does not have the capacity to run this app. Hence, the app is run locally and screen recordings are submitted in `/demo/`.
  - Demo screen recording video and screenshots are embedded below.

- `/data/` contains the raw data files and sampling code
- `/shiny/` contains the Shiny app, which can be run locally with the included installs and the setup of a Python Conda environment via an R `reticulate` environment
- `/paper/` contains the Rmd with the model evaluation that yields the final PDF, as well as \LaTeX style files
- `/demo/` contains recorded demos of the Shiny app

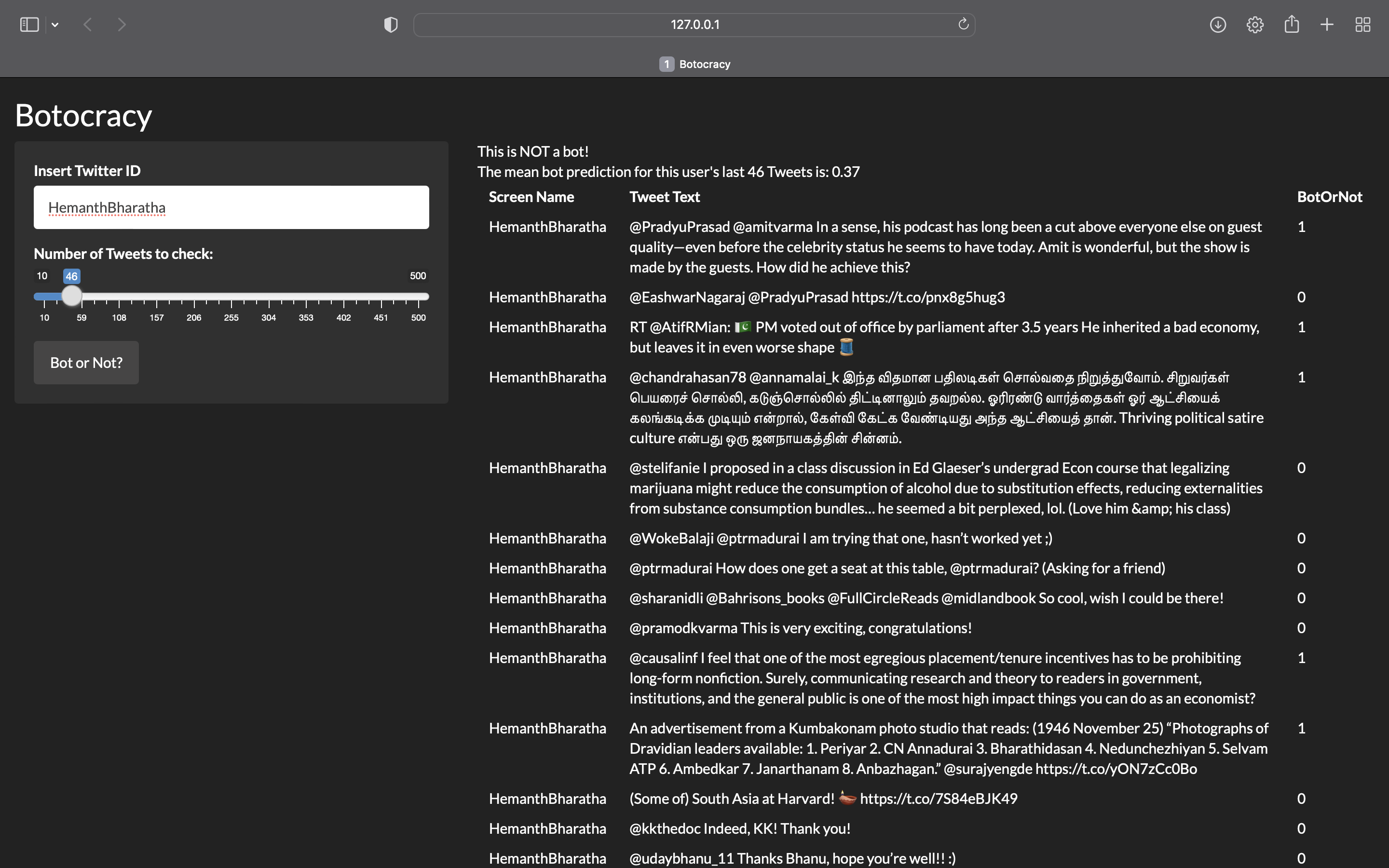

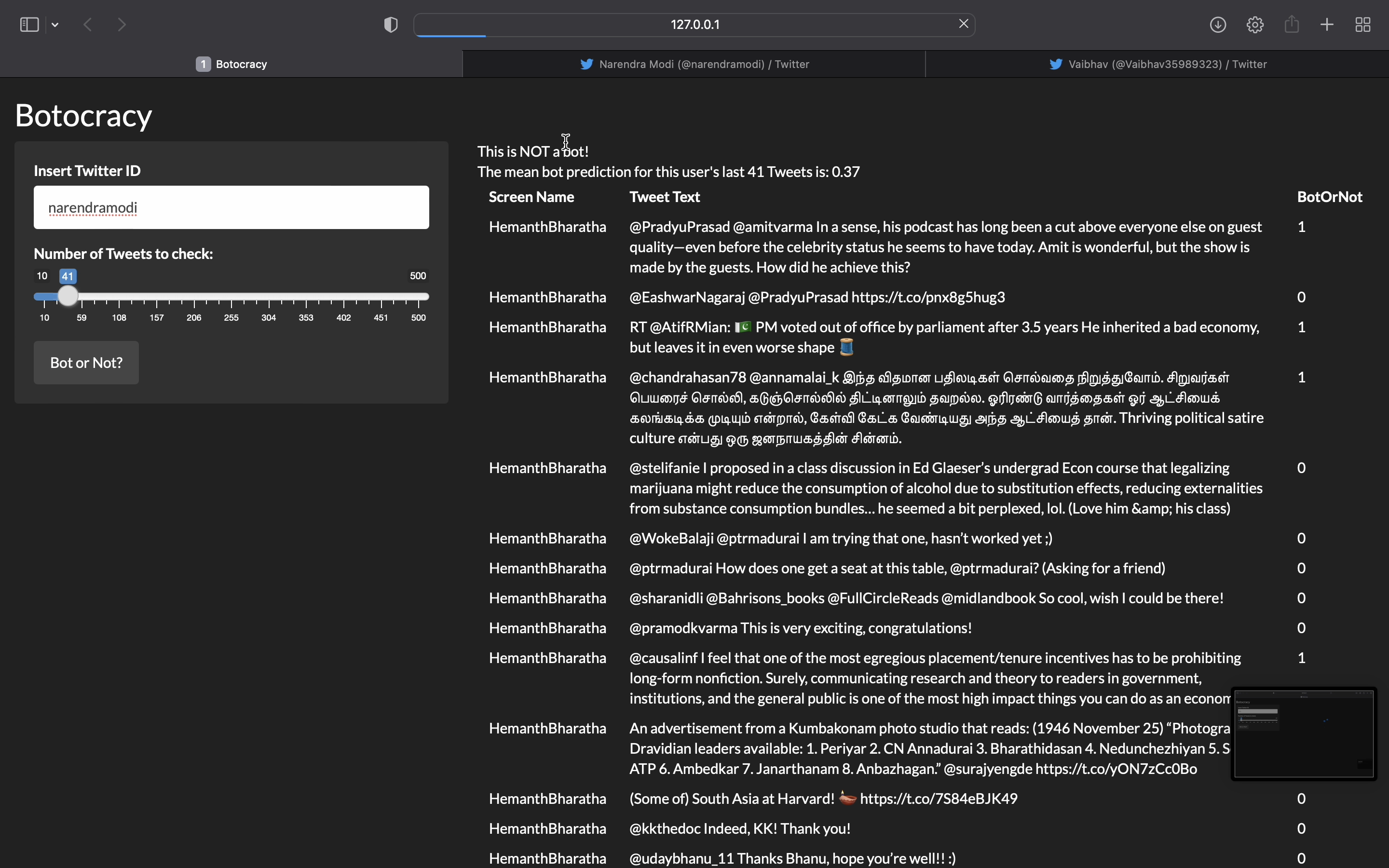

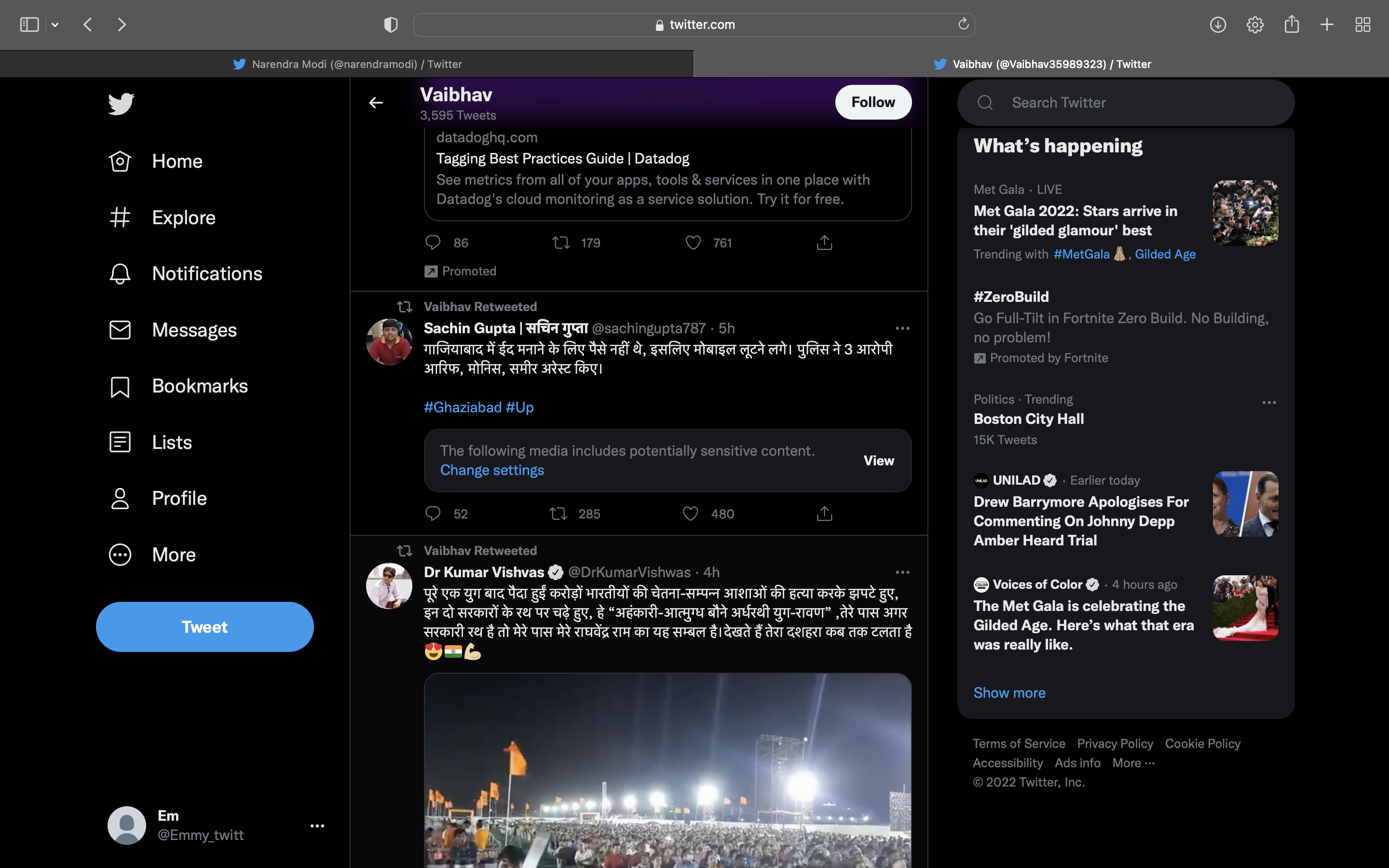

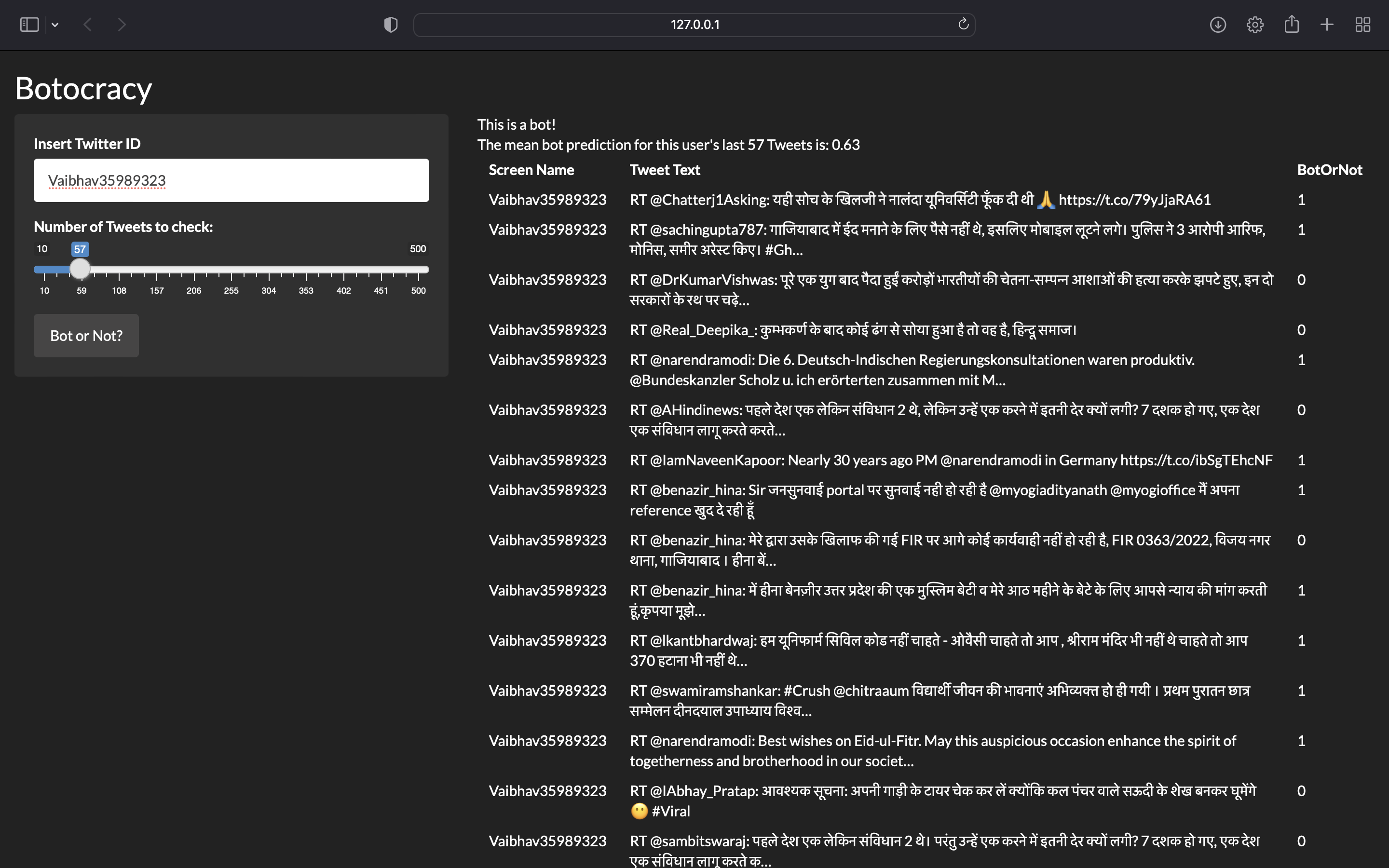

The Shiny App takes a Twitter username and a number of Tweets to check and returns:

- An overall prediction of whether the user is a bot, and the mean bot score they attained from the tuned random forest model run on the important principal components of the BERT word embeddings of the user's Tweets, combined with their metadata such as followers count, friends count, favorites count, number of days the account has existed, whether the username has the 8-digit serial number that Twitter auto-generates for non-custom usernames, and so on.

- The set of requested

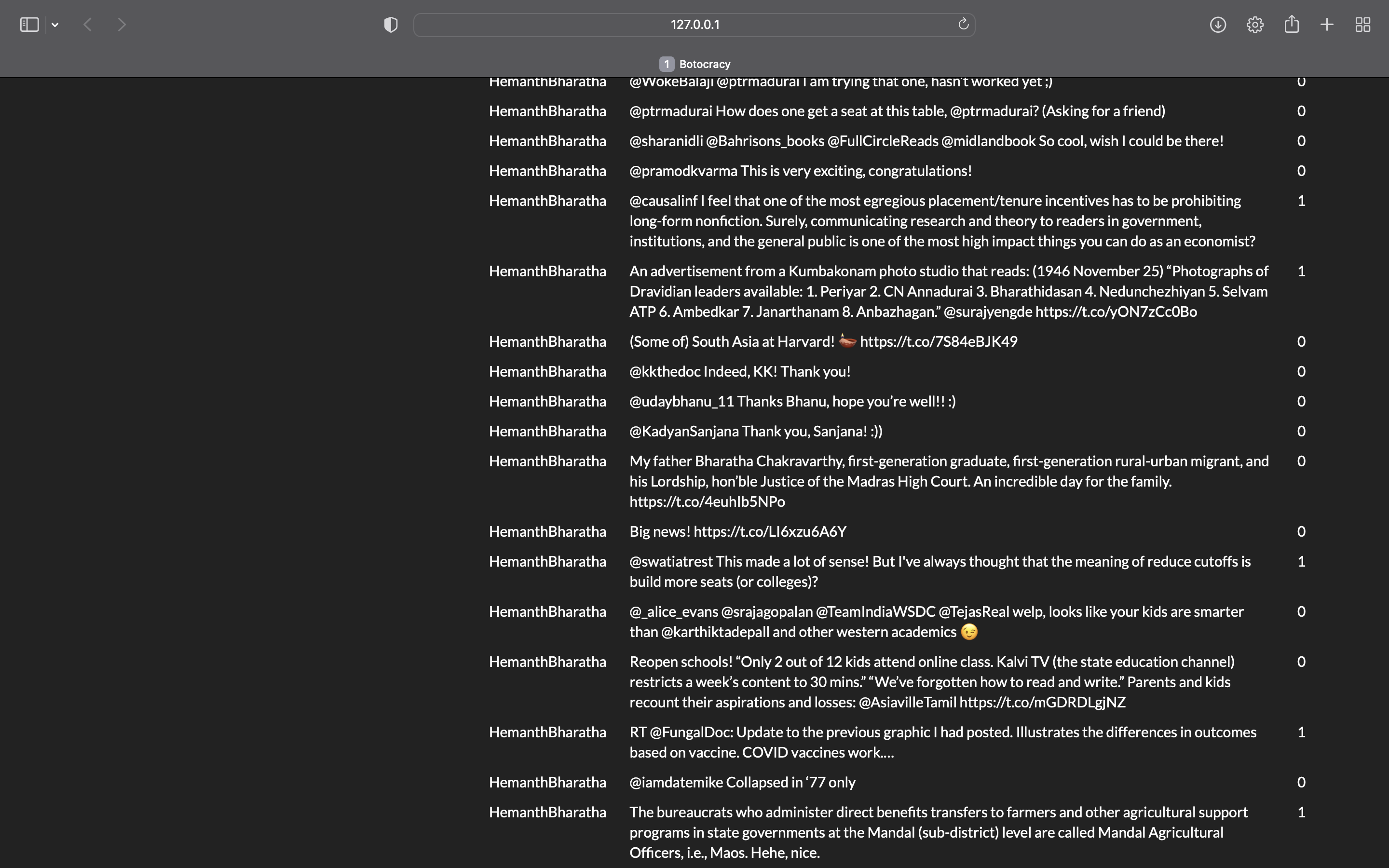

ntweets from the user and the bot prediction (1 or 0) for each Tweet (based along with the relevant metadata of each tweet)
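The two outputs listed above can be sketched in Python as follows. This is a hedged illustration rather than the app's actual code: it assumes that each Tweet receives a score in [0, 1], that the overall prediction is a 0.5 threshold on the mean score, and that an auto-generated username is one ending in exactly eight digits. The names `has_serial_suffix` and `summarize_user` are hypothetical.

```python
import re
from statistics import mean

# Assumption: an auto-generated handle ends in exactly eight digits.
SERIAL_SUFFIX = re.compile(r"(?<!\d)\d{8}$")


def has_serial_suffix(username: str) -> bool:
    """Metadata flag: does the username end in an 8-digit serial number?"""
    return SERIAL_SUFFIX.search(username) is not None


def summarize_user(tweet_scores, threshold=0.5):
    """Aggregate per-Tweet bot scores into the two outputs listed above.

    `tweet_scores` are assumed to be per-Tweet scores in [0, 1]; the
    threshold of 0.5 is an illustrative choice, not the app's setting.
    """
    if not tweet_scores:
        raise ValueError("need at least one Tweet score")
    per_tweet = [1 if score >= threshold else 0 for score in tweet_scores]
    mean_score = mean(tweet_scores)
    return {
        "per_tweet": per_tweet,             # 1 or 0 for each Tweet
        "mean_score": mean_score,           # mean bot score for the user
        "is_bot": mean_score >= threshold,  # overall prediction
    }


# Hypothetical scores for three Tweets from one user.
summary = summarize_user([0.9, 0.2, 0.7])
```

Thresholding the mean score is only one possible aggregation; a majority vote over the per-Tweet predictions would be another.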

The model predicts a fair number of 1s, where a 1 means the random forest predicts the Tweet to be part of coordinated platform manipulation. This should be interpreted against the background that the majority of Indian political Tweets come from bots or are coordinated platform manipulation.
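When reading these 1s and 0s, it may help to recall how the accuracy, sensitivity, and specificity reported in the abstract are defined. The Python sketch below computes the three rates from true and predicted labels. The labels are made-up toy values, not the paper's test set, and the function names are hypothetical.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for 0/1 labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, fp, tn, fn


def rates(y_true, y_pred):
    """Accuracy, sensitivity, and specificity.

    Assumes both classes appear in `y_true`, so no denominator is zero.
    """
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": tp / (tp + fn),  # share of true 1s predicted as 1
        "specificity": tn / (tn + fp),  # share of true 0s predicted as 0
    }


# Toy labels for illustration only: 2 coordinated Tweets, 8 ordinary ones.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 1, 0, 0, 0, 0, 0, 0, 0]
result = rates(y_true, y_pred)
```

Sensitivity describes how many truly coordinated Tweets are caught, while specificity describes how many ordinary Tweets are correctly left unflagged, so the two should be read together with accuracy.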