Qiying Yu1,2*, Quan Sun2*, Xiaosong Zhang2, Yufeng Cui2, Fan Zhang2

Yue Cao3, Xinlong Wang2, Jingjing Liu1

1 Tsinghua, AIR, 2 BAAI, 3 Independent Researcher

* Equal Contribution

CapsFusion is a straightforward and scalable framework for generating high-quality captions for image-text pairs. This framework leverages large language models (LLMs) to organically incorporate the strengths of both real image-text pairs and synthetic captions generated by captioning models, to address the severe Scalability Deficiency and World Knowledge Loss issues in large multimodal models (LMMs) trained with synthetic captions.

- Feb 27, 2024: CapsFusion is accepted by CVPR 2024! 🎉🍻
- Jan 9, 2024: Released the CapsFusion-120M caption data.
- Nov 29, 2023: Released the model and distributed inference code of CapsFus-LLaMA.

We release the CapsFusion-120M dataset, a high-quality resource for large-scale multimodal pretraining. This release includes corresponding captions from the LAION-2B and LAION-COCO datasets, facilitating comparative analyses and further in-depth investigations into the quality of image-text data.

The dataset can be downloaded from 🤗 Hugging Face. Each data entry has four fields:

- Image URL

- LAION-2B caption (raw alt-text from the web)

- LAION-COCO caption (synthesized by BLIP)

- CapsFusion caption (ours)

The following code snippet extracts caption data from the released parquet files and prints the image URL together with the LAION-2B, LAION-COCO, and CapsFusion captions for the first three entries:

```python
import pandas as pd

# Load one parquet shard of the CapsFusion-120M release
data = pd.read_parquet("capsfusion_1.parquet")

# Print the image URL and all three captions for the first three entries
# (the f"{expr=}" syntax requires Python 3.8+)
for _, item in data.head(3).iterrows():
    print(f"{item['image_url']=}")
    print(f"{item['laion_2b']=}")
    print(f"{item['laion_coco']=}")
    print(f"{item['capsfusion']=}")
    print('\n')
```

Please note that because not all captions could be paired with their corresponding image URLs, the released set contains 113 million captions. We nevertheless expect training on this set to perform comparably to training on the full 120 million captions.
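To compare the three caption sources at a glance, one can compute the average caption length per source. The sketch below is an illustrative assumption, not part of the official tooling: it operates on a list of row dictionaries (for example, what data.to_dict("records") returns for the DataFrame loaded above), counts words by simple whitespace splitting, and skips missing captions. The helper name `mean_word_counts` and the toy rows are hypothetical.

```python
from statistics import mean

# Caption columns as named in the CapsFusion-120M parquet files
CAPTION_COLUMNS = ("laion_2b", "laion_coco", "capsfusion")


def mean_word_counts(rows):
    """Hypothetical helper: average whitespace-token count per caption source.

    `rows` is an iterable of dicts keyed by the caption column names.
    Missing or non-string captions are skipped; a source with no valid
    captions gets 0.0.
    """
    rows = list(rows)
    stats = {}
    for column in CAPTION_COLUMNS:
        lengths = [
            len(row[column].split())
            for row in rows
            if isinstance(row.get(column), str)
        ]
        stats[column] = mean(lengths) if lengths else 0.0
    return stats


if __name__ == "__main__":
    # Toy rows for illustration only; they are not taken from the dataset
    toy_rows = [
        {"laion_2b": "red bike sale",
         "laion_coco": "a red bicycle",
         "capsfusion": "a red bicycle is for sale on a street"},
        {"laion_2b": "IMG_0012.jpg",
         "laion_coco": "a dog on grass",
         "capsfusion": "a dog lying on green grass"},
    ]
    print(mean_word_counts(toy_rows))
```

Whitespace splitting is a deliberately crude length measure; swap in a real tokenizer if you need token counts that match a specific model.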

We provide instructions below for employing the CapsFus-LLaMA model to generate CapsFusion captions given raw captions from LAION-2B and synthetic captions from LAION-COCO.
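Conceptually, CapsFus-LLaMA receives both captions of an image-text pair and produces a single fused caption. The sketch below shows how such an input could be assembled from one sample. The instruction wording in `FUSION_TEMPLATE` and the helper `build_fusion_prompt` are hypothetical placeholders, not the prompt CapsFus-LLaMA was trained with; the actual prompt format is defined by the released inference code.

```python
# Hypothetical instruction text; the real CapsFus-LLaMA prompt may differ.
FUSION_TEMPLATE = (
    "Please merge the following two captions of the same image into one "
    "fluent caption that keeps the real-world knowledge of the raw caption "
    "and the visual details of the synthetic caption.\n"
    "Raw caption: {raw}\n"
    "Synthetic caption: {synthetic}\n"
    "Merged caption:"
)


def build_fusion_prompt(sample):
    """Assemble an LLM input from one sample with 'laion_2b' and 'laion_coco' keys."""
    # Collapse stray whitespace, which is common in raw web alt-text
    raw = " ".join(str(sample["laion_2b"]).split())
    synthetic = " ".join(str(sample["laion_coco"]).split())
    return FUSION_TEMPLATE.format(raw=raw, synthetic=synthetic)


if __name__ == "__main__":
    print(build_fusion_prompt({
        "laion_2b": "  Eiffel   Tower at night ",
        "laion_coco": "a tall tower lit up at night",
    }))
```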

```shell
pip install -r requirements.txt
```

We provide 10,000 samples in ./data/example_data.json; you can organize your own data in the same structure. Each sample contains the captions from LAION-2B and LAION-COCO:

```json
{
    "laion_2b": ...,
    "laion_coco": ...
}
```

Each sample in ./data/example_data.json also includes a capsfusion_official field, which holds the CapsFusion caption generated by CapsFus-LLaMA.
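If you want to refine your own captions, the snippet below writes them in the structure shown above. It assumes that ./data/example_data.json is a JSON list of such objects; check the provided file and adjust the top-level layout if it differs. The helper `write_caption_file` is hypothetical.

```python
import json
from pathlib import Path


def write_caption_file(pairs, path):
    """Write (raw_caption, synthetic_caption) pairs as a JSON list of samples.

    Assumes the inference script expects a list of objects with
    'laion_2b' and 'laion_coco' keys, mirroring ./data/example_data.json.
    Returns the number of samples written.
    """
    samples = [
        {"laion_2b": raw, "laion_coco": synthetic}
        for raw, synthetic in pairs
    ]
    path = Path(path)
    path.parent.mkdir(parents=True, exist_ok=True)  # create e.g. ./data if missing
    with path.open("w", encoding="utf-8") as f:
        # ensure_ascii=False keeps non-English alt-text readable in the file
        json.dump(samples, f, ensure_ascii=False, indent=2)
    return len(samples)
```

For example, write_caption_file(pairs, "data/my_data.json") produces a file you could point the inference script at, where my_data.json is an illustrative name.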

```shell
torchrun --nnodes 1 --nproc_per_node 8 capsfusion_inference.py
```

Refining the 10,000 samples takes about 20 minutes on 8 A100-40G GPUs. You can change the values of nnodes and nproc_per_node to match your available GPUs.
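torchrun launches nnodes × nproc_per_node worker processes and sets the RANK and WORLD_SIZE environment variables for each of them. One common way to divide the samples among workers is strided sharding, sketched below. This is an assumption about how such a script could split its input, not the code of capsfusion_inference.py; the helper `shard_for_rank` is hypothetical. With 8 processes, the 10,000 example samples become 1,250 per GPU.

```python
import os


def shard_for_rank(samples, rank=None, world_size=None):
    """Return the slice of `samples` this process should handle.

    Falls back to the RANK / WORLD_SIZE environment variables that torchrun
    sets for every worker; defaults to a single process when they are absent.
    """
    if rank is None:
        rank = int(os.environ.get("RANK", 0))
    if world_size is None:
        world_size = int(os.environ.get("WORLD_SIZE", 1))
    if not 0 <= rank < world_size:
        raise ValueError(f"rank {rank} out of range for world size {world_size}")
    # Strided split: rank r takes samples r, r + world_size, r + 2 * world_size, ...
    return samples[rank::world_size]


if __name__ == "__main__":
    samples = list(range(10_000))
    shards = [shard_for_rank(samples, r, 8) for r in range(8)]
    print([len(s) for s in shards])  # 1250 samples per process
```

Strided slicing keeps shard sizes within one sample of each other even when the dataset size is not divisible by the number of processes; contiguous chunks work equally well but make the last shard absorb the remainder.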

The CapsFus-LLaMA model is downloaded automatically from Hugging Face. Alternatively, you can download the model manually from the Hugging Face model repo and set model_name in config.yaml to your local model directory.

The result files will be saved at ./data.

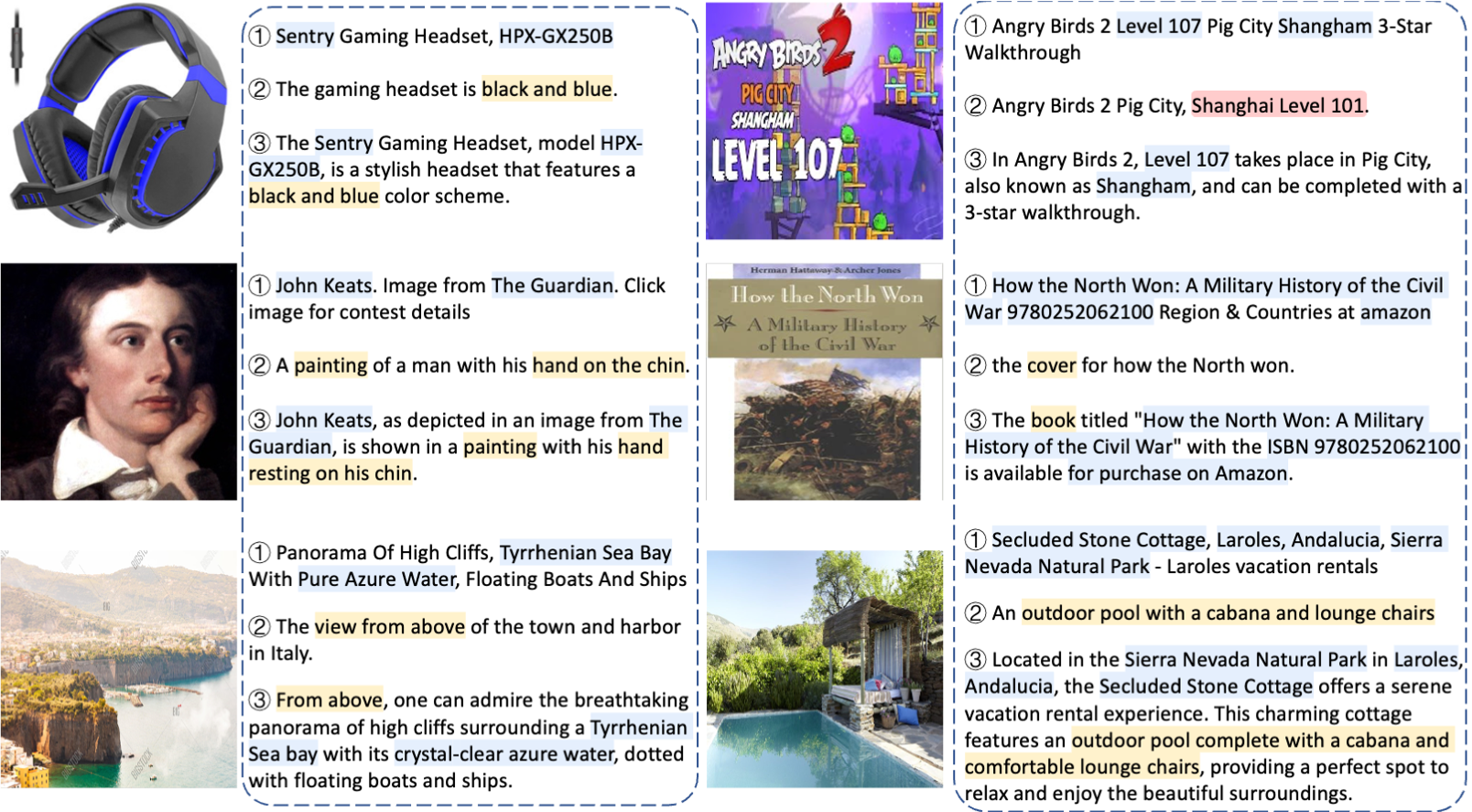

Examples generated by CapsFusion are shown in the figure: ① real web-based captions (from LAION-2B, which are noisy), ② synthetic captions (from LAION-COCO, generated by BLIP, which are syntactically and semantically simplistic), and ③ the corresponding CapsFusion captions.

Knowledge from raw captions (in blue) and information from synthetic captions (in yellow) are organically fused into integral CapsFusion captions. More captions and detailed analysis can be found in our paper.

Models trained on CapsFusion captions exhibit a wealth of real-world knowledge (shown in the figure below), while also outperforming models trained on real or synthetic captions in benchmark evaluations (details can be found in the paper).

Please stay tuned for upcoming releases. Thank you for your understanding.

- CapsFus-LLaMA model with distributed inference code
- CapsFusion-10M subset: images with raw (from LAION-2B), synthetic (from LAION-COCO), and CapsFusion captions
- CapsFusion-120M full set: image URLs with CapsFusion captions

CapsFusion: Rethinking Image-Text Data at Scale -- https://arxiv.org/abs/2310.20550

```bibtex
@article{yu2023capsfusion,
  title={CapsFusion: Rethinking Image-Text Data at Scale},
  author={Yu, Qiying and Sun, Quan and Zhang, Xiaosong and Cui, Yufeng and Zhang, Fan and Cao, Yue and Wang, Xinlong and Liu, Jingjing},
  journal={arXiv preprint arXiv:2310.20550},
  year={2023}
}
```

Part of the code is adapted from Alpaca and FastChat. Thanks for their great work.