The official repository for Fine-grained Appearance Transfer with Diffusion Models.

- 2023.11 Code will be released soon.

Results of fine-grained appearance transfer using our method. The leftmost column shows the source images. The columns to its right show the output images, each obtained by transferring the detailed appearance of the corresponding target image (outlined in black) while preserving structural integrity. The examples at the bottom demonstrate that our method can transfer appearance across various domains and categories.

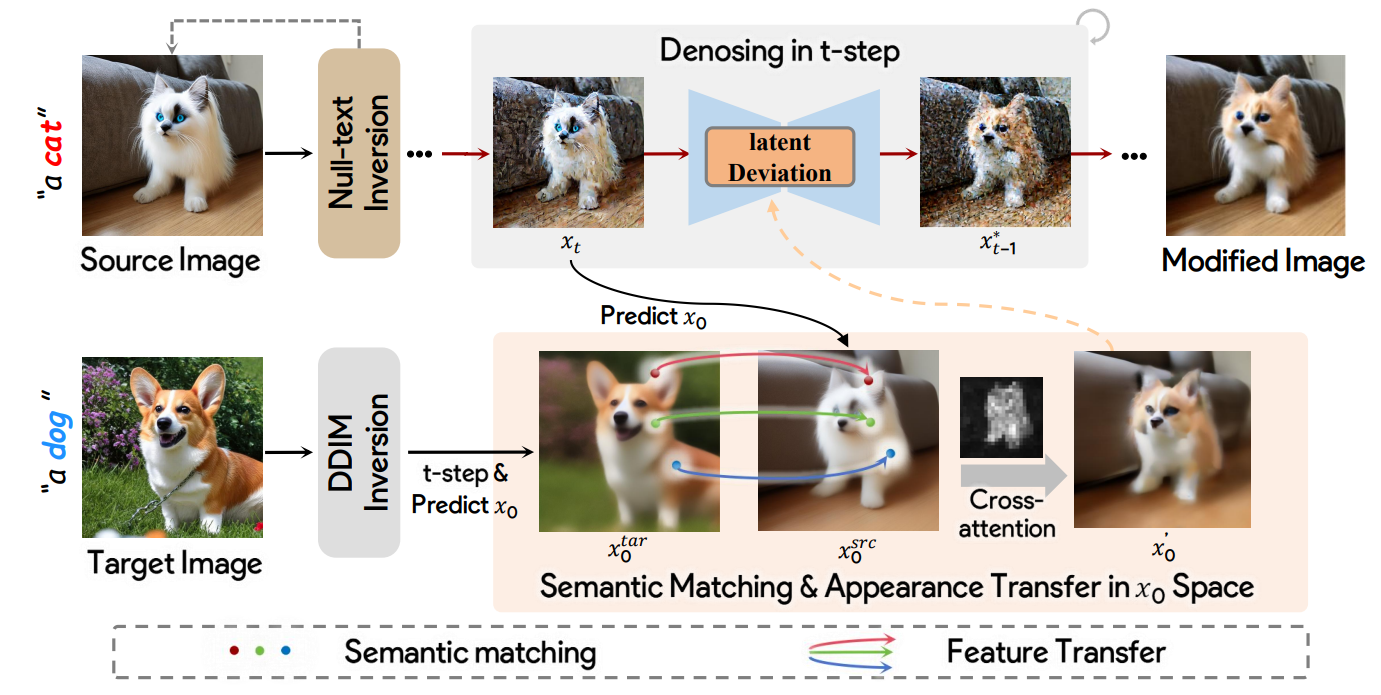

This figure illustrates our pipeline. It starts with null-text inversion applied to the source image $I$, which creates a latent path for reconstructing the image. During the diffusion denoising stage, Latent Deviation is performed, producing a modified image that aligns with the target image $T$. Specifically, the process begins with semantic alignment in the $x_0$ space between $x_0^{src}$ and $x_0^{tar}$, where $x_0^{tar}$ is obtained through DDIM inversion of $T$. Based on the resulting semantic relations, features from $x_0^{tar}$ are transferred to $x_0^{src}$, guided by an attention mask of $I$, resulting in $x_0'$. Finally, $x_0'$ is processed in the latent space to synthesize the final modified image.
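
The alignment and masked transfer step described above can be sketched roughly as follows. This is only an illustrative sketch, not the released implementation: the function name `transfer_appearance`, the use of per-pixel feature maps, and cosine-similarity nearest-neighbor matching are all assumptions made for the example, since the description does not specify how the semantic relations are computed. The null-text inversion, DDIM inversion, and Latent Deviation steps are not shown.

```python
import numpy as np


def transfer_appearance(x0_src, x0_tar, feat_src, feat_tar, mask):
    """Hypothetical sketch of semantic alignment followed by masked transfer.

    x0_src, x0_tar: (H, W, C) predicted clean images/latents (the "x0 space").
    feat_src, feat_tar: (H, W, D) per-pixel semantic features (assumed given).
    mask: (H, W) values in [0, 1], standing in for the attention mask of I.
    """
    h, w, c = x0_src.shape

    # Flatten spatial dimensions so every pixel becomes one feature row.
    fs = feat_src.reshape(h * w, -1)
    ft = feat_tar.reshape(-1, feat_tar.shape[-1])

    # L2-normalize so the dot product below is cosine similarity.
    fs = fs / (np.linalg.norm(fs, axis=1, keepdims=True) + 1e-8)
    ft = ft / (np.linalg.norm(ft, axis=1, keepdims=True) + 1e-8)

    # Assumed alignment rule: each source pixel picks its most similar
    # target pixel.
    similarity = fs @ ft.T
    nearest = similarity.argmax(axis=1)

    # Gather target content at the matched locations.
    warped_tar = x0_tar.reshape(-1, c)[nearest].reshape(h, w, c)

    # Transfer only where the mask is active; keep the source elsewhere.
    m = mask[..., None]
    return m * warped_tar + (1.0 - m) * x0_src
```

In this sketch the mask restricts the transfer to the object region, so pixels outside it keep their source values. The dense similarity matrix grows quadratically with the number of pixels, so it is only practical at low latent resolutions.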