This project shows you how to set up OpenShift on AWS using Terraform. This is the companion project to my article Get up and running with OpenShift on AWS.

I am also adding some 'recipes' which you can use to mix in more advanced features:

Index

- Overview

- Prerequisites

- Creating the Cluster

- Installing OpenShift

- Accessing and Managing OpenShift

- Connecting to the Docker Registry

- Additional Configuration

- Choosing the OpenShift Version

- Destroying the Cluster

- Makefile Commands

- Pricing

- Recipes

- Troubleshooting

- Developer Guide

- References

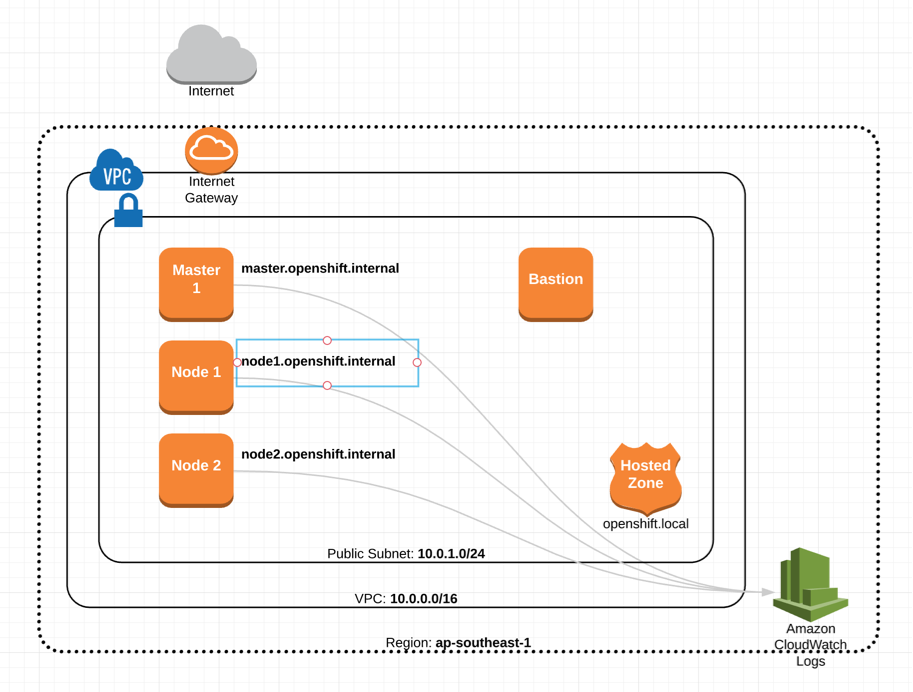

Terraform is used to create infrastructure as shown:

Once the infrastructure is set up an inventory of the system is dynamically created, which is used to install the OpenShift Origin platform on the hosts.

You need:

- Terraform (0.12 or greater):

brew update && brew install terraform

- An AWS account, configured with the CLI locally. To install the CLI:

# Detect the operating system (uname prints 'Darwin' on macOS).

unamestr=$(uname)

if [[ "$unamestr" == 'Linux' ]]; then

dnf install -y awscli || yum install -y awscli

elif [[ "$unamestr" == 'Darwin' ]]; then

brew install awscli

fi

Create the infrastructure first:

# Make sure ssh agent is on, you'll need it later.

eval `ssh-agent -s`

# Create the infrastructure.

make infrastructure

You will be asked for a region to deploy in; use us-east-1 or your preferred region. You can configure the details of how the cluster is created in the main.tf file. Once created, you will see a message like:

$ make infrastructure

var.region

Region to deploy the cluster into

Enter a value: ap-southeast-1

...

Apply complete! Resources: 20 added, 0 changed, 0 destroyed.

That's it! The infrastructure is ready and you can install OpenShift. Leave about five minutes for everything to start up fully.

To install OpenShift on the cluster, just run:

make openshift

You will be asked to accept the host key of the bastion server (this is so that the install script can be copied onto the cluster and run). Type yes and hit enter to continue.

It can take up to 30 minutes to deploy. If this fails with an ansible not found error, just run it again.
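The "just run it again" advice above can be wrapped in a small retry helper. This is a minimal sketch, not part of the project's makefile: the function name `retry`, the `RETRY_DELAY` variable and the 10 second default are all assumptions made for illustration.

```shell
# retry MAX COMMAND [ARGS...]
# Runs COMMAND until it succeeds, at most MAX times.
# RETRY_DELAY (hypothetical, default 10) is the pause in seconds between attempts.
# Usage: retry 3 make openshift
retry() {
  retry_max="$1"
  shift
  retry_n=1
  until "$@"; do
    if [ "$retry_n" -ge "$retry_max" ]; then
      echo "giving up after $retry_n attempts: $*" >&2
      return 1
    fi
    retry_n=$((retry_n + 1))
    sleep "${RETRY_DELAY:-10}"
  done
}
```

Since a full install can take up to 30 minutes, a small attempt count is probably the sensible choice here.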

Once the setup is complete, just run:

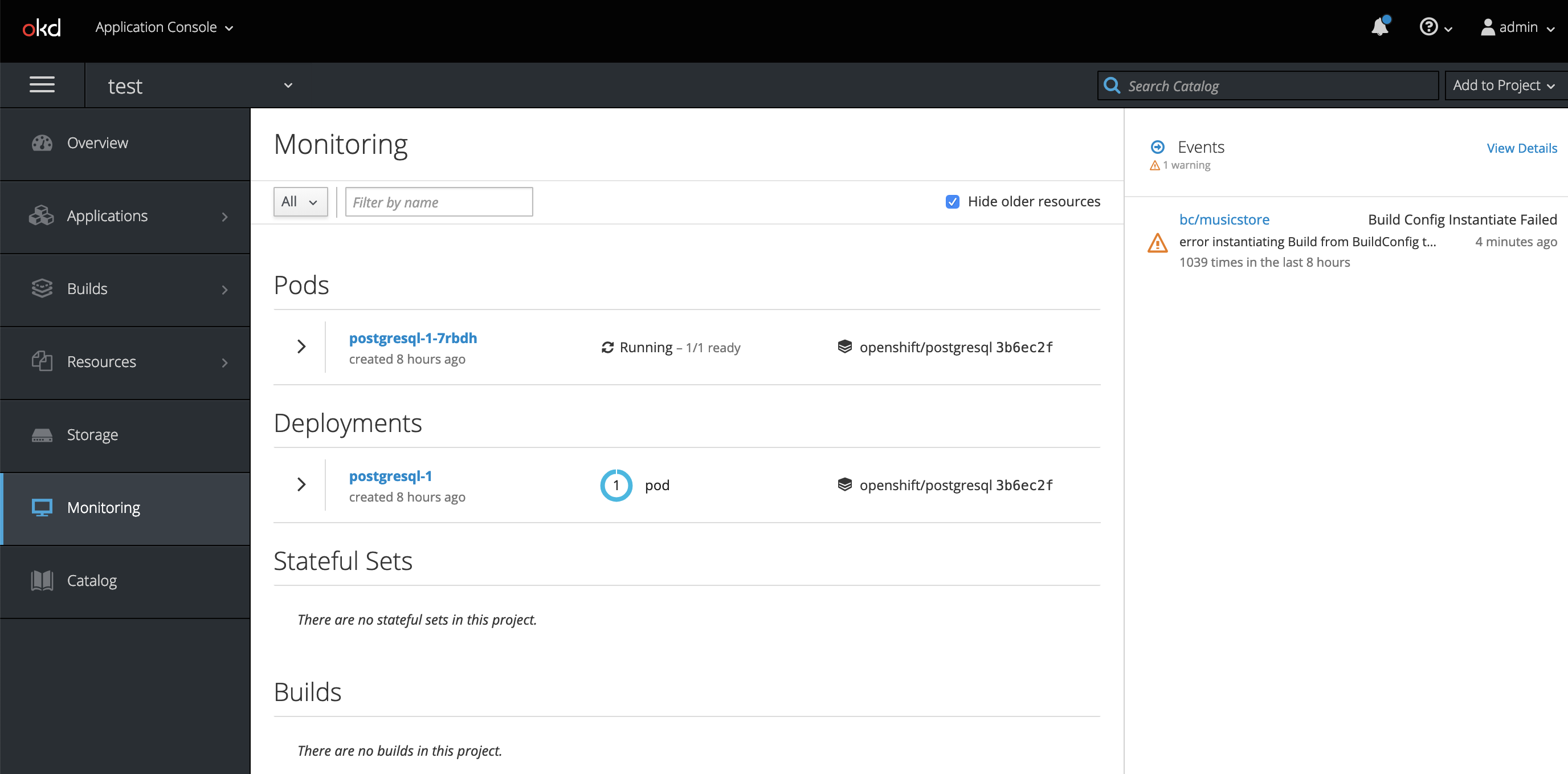

make browse-openshiftTo open a browser to admin console, use the following credentials to login:

Username: admin

Password: 123

There are a few ways to access and manage the OpenShift Cluster.

You can log into the OpenShift console by hitting the console webpage:

make browse-openshift

# the above is really just an alias for this!

open $(terraform output master-url)

The url will be something like https://a.b.c.d.xip.io:8443.

The master node has the OpenShift client installed and is authenticated as a cluster administrator. If you SSH onto the master node via the bastion, then you can use the OpenShift client and have full access to all projects:

$ make ssh-master # or if you prefer: ssh -t -A ec2-user@$(terraform output bastion-public_ip) ssh master.openshift.local

$ oc get pods

NAME READY STATUS RESTARTS AGE

docker-registry-1-d9734 1/1 Running 0 2h

registry-console-1-cm8zw 1/1 Running 0 2h

router-1-stq3d 1/1 Running 0 2h

Notice that the default project is in use and the core infrastructure components (router etc) are available.

You can also use the oadm tool to perform administrative operations:

$ oadm new-project test

Created project test

From the OpenShift Web Console 'about' page, you can install the oc client, which gives command-line access. Once the client is installed, you can login and administer the cluster via your local machine's shell:

oc login $(terraform output master-url)

Note that you won't be able to run OpenShift administrative commands this way. To administer the cluster, you'll need to SSH onto the master node. Use the same credentials (admin/123) when logging in through the command line.

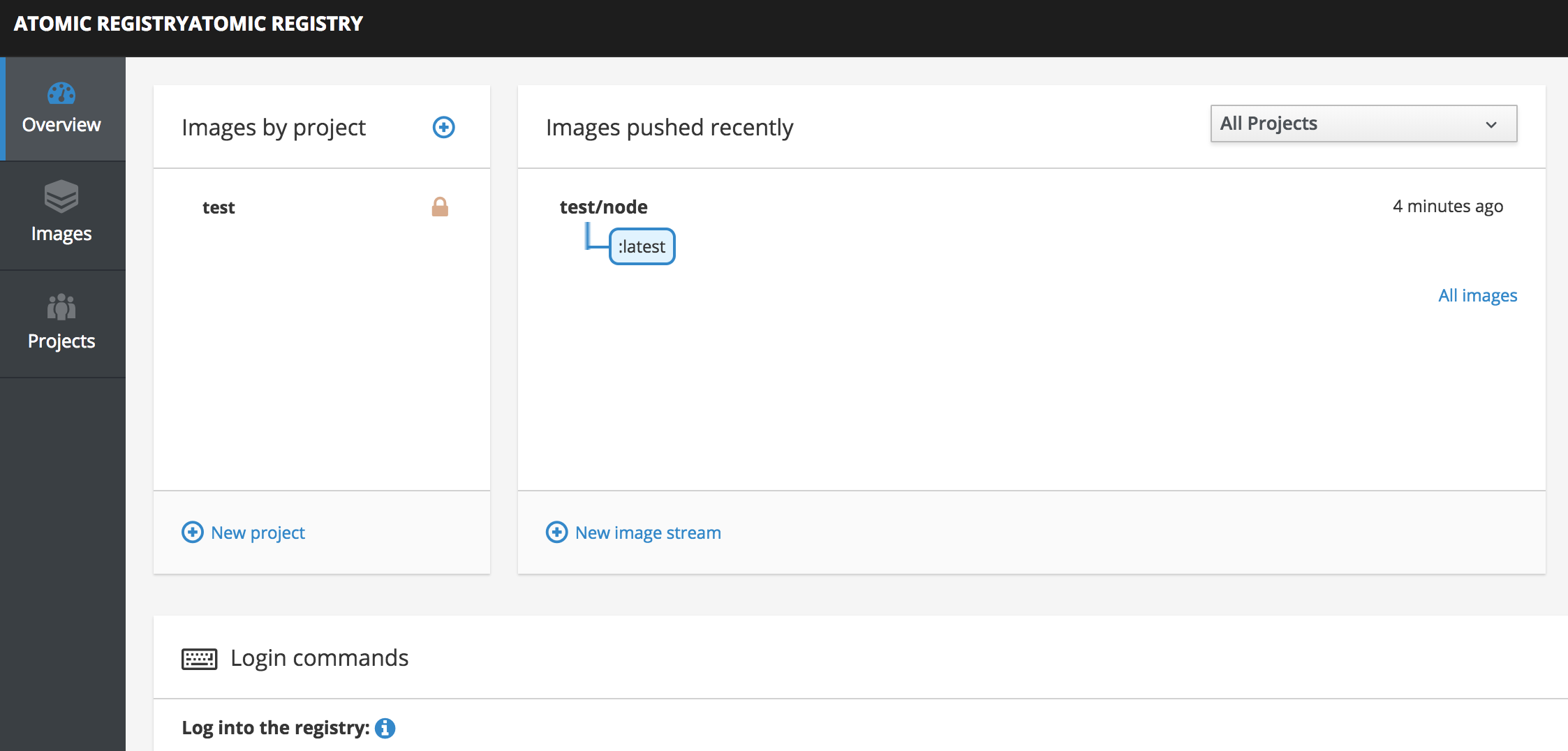

The OpenShift cluster contains a Docker Registry by default. You can connect to the Docker Registry, to push and pull images directly, by following the steps below.

First, make sure you are connected to the cluster with the OpenShift client:

oc login $(terraform output master-url)

Now check the address of the Docker Registry. Your Docker Registry url is just your master url with docker-registry-default. at the beginning of the host name and the port removed:

% echo $(terraform output master-url)

https://54.85.76.73.xip.io:8443

In the example above, my registry url is https://docker-registry-default.54.85.76.73.xip.io. You can also get this url by running oc get routes -n default on the master node.
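The same derivation can be done in the shell. The sketch below is an illustration only: the function name `registry_host_from_master_url` is hypothetical, and it assumes the master url always has the scheme://host:port shape shown in the example.

```shell
# Print the registry host name for a given master url.
# The body runs in a subshell, so the helper variable does not leak.
registry_host_from_master_url() (
  host="${1#*://}"   # strip the scheme, e.g. https://
  host="${host%%/*}" # strip any path
  host="${host%%:*}" # strip the port, e.g. :8443
  printf 'docker-registry-default.%s\n' "$host"
)
```

For example, `registry_host_from_master_url "$(terraform output master-url)"` would print the host name to pass to docker login.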

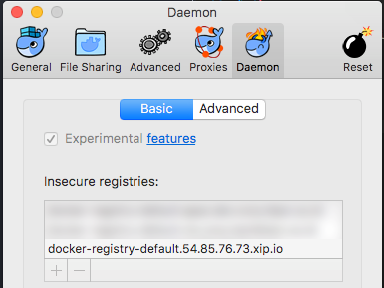

You will need to add this registry to the list of untrusted registries. The documentation for how to do this here https://docs.docker.com/registry/insecure/. On a Mac, the easiest way to do this is open the Docker Preferences, go to 'Daemon' and add the address to the list of insecure regsitries:

Finally you can log in. Your Docker Registry username is your OpenShift username (admin by default) and your password is your short-lived OpenShift login token, which you can get with oc whoami -t:

% docker login docker-registry-default.54.85.76.73.xip.io -u admin -p `oc whoami -t`

Login Succeeded

You are now logged into the registry. You can also use the registry web interface, which in the example above is at: https://registry-console-default.54.85.76.73.xip.io

The cluster is set up with support for dynamic provisioning of AWS EBS volumes. This means that persistent volumes are supported. By default, when a user creates a PVC, an EBS volume will automatically be set up to fulfil the claim.
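To see dynamic provisioning in action, you can create a small claim and watch for the volume to appear. The following is a sketch under assumptions: the helper name `pvc_manifest`, the claim name and the size are hypothetical, and the claim relies on the cluster's default storage class because it does not name one.

```shell
# Print a minimal PersistentVolumeClaim manifest.
# $1 = claim name, $2 = requested size (for example 1Gi).
pvc_manifest() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: $2
EOF
}
```

Once logged in with oc, something like `pvc_manifest data 1Gi | oc create -f -` should create the claim, and `oc get pvc` should show whether it has been bound.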

More details are available at:

- https://blog.openshift.com/using-dynamic-provisioning-and-storageclasses/

- https://docs.openshift.org/latest/install_config/persistent_storage/persistent_storage_aws.html

No additional configuration should be required for the operator to set up the cluster.

Note that dynamically provisioned EBS volumes will not be destroyed when running terraform destroy. They will have to be destroyed manually when bringing down the cluster.

The easiest way to configure the installation is to change the settings in the ./inventory.template.cfg file, based on settings in the OpenShift Origin - Advanced Installation guide.

When you run make openshift, all that happens is the inventory.template.cfg is copied to inventory.cfg, with the correct IP addresses loaded from terraform for each node. Then the inventory is copied to the master and the setup script runs. You can see the details in the makefile.
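The template step can be pictured as a plain text substitution. The sketch below is not the project's actual implementation (the makefile is the source of truth): the `@MASTER@`, `@NODE1@` and `@NODE2@` tokens and the function name `render_inventory` are hypothetical placeholders chosen for illustration.

```shell
# Render an inventory template to stdout.
# $1 = template path, $2 = master address, $3 = node 1 address, $4 = node 2 address.
# The @...@ tokens are illustrative; the real template may use a different syntax.
render_inventory() {
  sed -e "s|@MASTER@|$2|g" \
      -e "s|@NODE1@|$3|g" \
      -e "s|@NODE2@|$4|g" \
      "$1"
}
```

The addresses would come from terraform outputs, and the result would be redirected to inventory.cfg.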

Currently, OKD 3.11 is installed.

To change the version, you can attempt to update the version identifier in this line of the ./install-from-bastion.sh script:

git clone -b release-3.11 https://github.com/openshift/openshift-ansible

However, this may not work if the version you change to requires a different setup. To allow people to install earlier versions, stable branches are available. The available versions are listed below:

| Version | Status | Branch |
|---|---|---|
| 3.11 | Tested successfully | release/okd-3.11 |
| 3.10 | Tested successfully | release/okd-3.10 |
| 3.9 | Tested successfully | release/ocp-3.9 |
| 3.8 | Untested | |
| 3.7 | Untested | |
| 3.6 | Tested successfully | release/openshift-3.6 |
| 3.5 | Tested successfully | release/openshift-3.5 |
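If you switch versions often, the table above can be captured in a small lookup. This is only a convenience sketch; the function name `branch_for_version` is hypothetical, and the branch names are copied from the table.

```shell
# Print the stable branch for a tested OpenShift version.
# Returns non-zero for versions without a tested branch.
branch_for_version() {
  case "$1" in
    3.11) echo 'release/okd-3.11' ;;
    3.10) echo 'release/okd-3.10' ;;
    3.9)  echo 'release/ocp-3.9' ;;
    3.6)  echo 'release/openshift-3.6' ;;
    3.5)  echo 'release/openshift-3.5' ;;
    *)    echo "no tested branch for version $1" >&2; return 1 ;;
  esac
}
```

For example, `git checkout "$(branch_for_version 3.10)"` would switch to the 3.10 branch.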

Bring everything down with:

terraform destroy

Resources which are dynamically provisioned by Kubernetes will not automatically be destroyed. This means that if you want to clean up the entire cluster, you must manually delete all of the EBS Volumes which have been provisioned to serve Persistent Volume Claims.
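The manual cleanup can be partly scripted with the AWS CLI. The sketch below rests on an assumption: that dynamically provisioned volumes carry the `kubernetes.io/created-for/pvc/name` tag, which is what the Kubernetes AWS provisioner normally sets. Check this against your own volumes before deleting anything. The function names are hypothetical, and the second function only prints commands so they can be reviewed first.

```shell
# List the IDs of EBS volumes that carry the PVC tag, one per line.
# Assumes the AWS CLI is configured; $1 is the region.
list_pvc_volumes() {
  aws ec2 describe-volumes \
    --region "$1" \
    --filters Name=tag-key,Values=kubernetes.io/created-for/pvc/name \
    --query 'Volumes[].VolumeId' \
    --output text | tr '\t' '\n'
}

# Turn volume IDs on stdin into delete commands on stdout.
# Nothing is deleted here; $1 is the region.
print_delete_commands() {
  while read -r volume_id; do
    if [ -n "$volume_id" ]; then
      printf 'aws ec2 delete-volume --region %s --volume-id %s\n' "$1" "$volume_id"
    fi
  done
}
```

Running `list_pvc_volumes us-east-1 | print_delete_commands us-east-1` prints one delete command per volume; review the list, then run the commands you are happy with.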

There are some commands in the makefile which make common operations a little easier:

| Command | Description |
|---|---|
| make infrastructure | Runs the terraform commands to build the infra. |
| make openshift | Installs OpenShift on the infrastructure. |
| make browse-openshift | Opens the OpenShift console in the browser. |
| make ssh-bastion | SSH to the bastion node. |
| make ssh-master | SSH to the master node. |
| make ssh-node1 | SSH to node 1. |
| make ssh-node2 | SSH to node 2. |
| make sample | Creates a simple sample project. |
| make lint | Lints the terraform code. |

You'll be paying for:

- 1 x m4.xlarge instance

- 2 x t2.large instances

Your installation can be extended with recipes.

You can quickly add Splunk to your setup using the Splunk recipe:

To integrate with Splunk, merge the recipes/splunk branch, then run make splunk after creating the infrastructure and installing OpenShift:

git merge recipes/splunk

make infrastructure

make openshift

make splunk

There is a full guide at:

http://www.dwmkerr.com/integrating-openshift-and-splunk-for-logging/

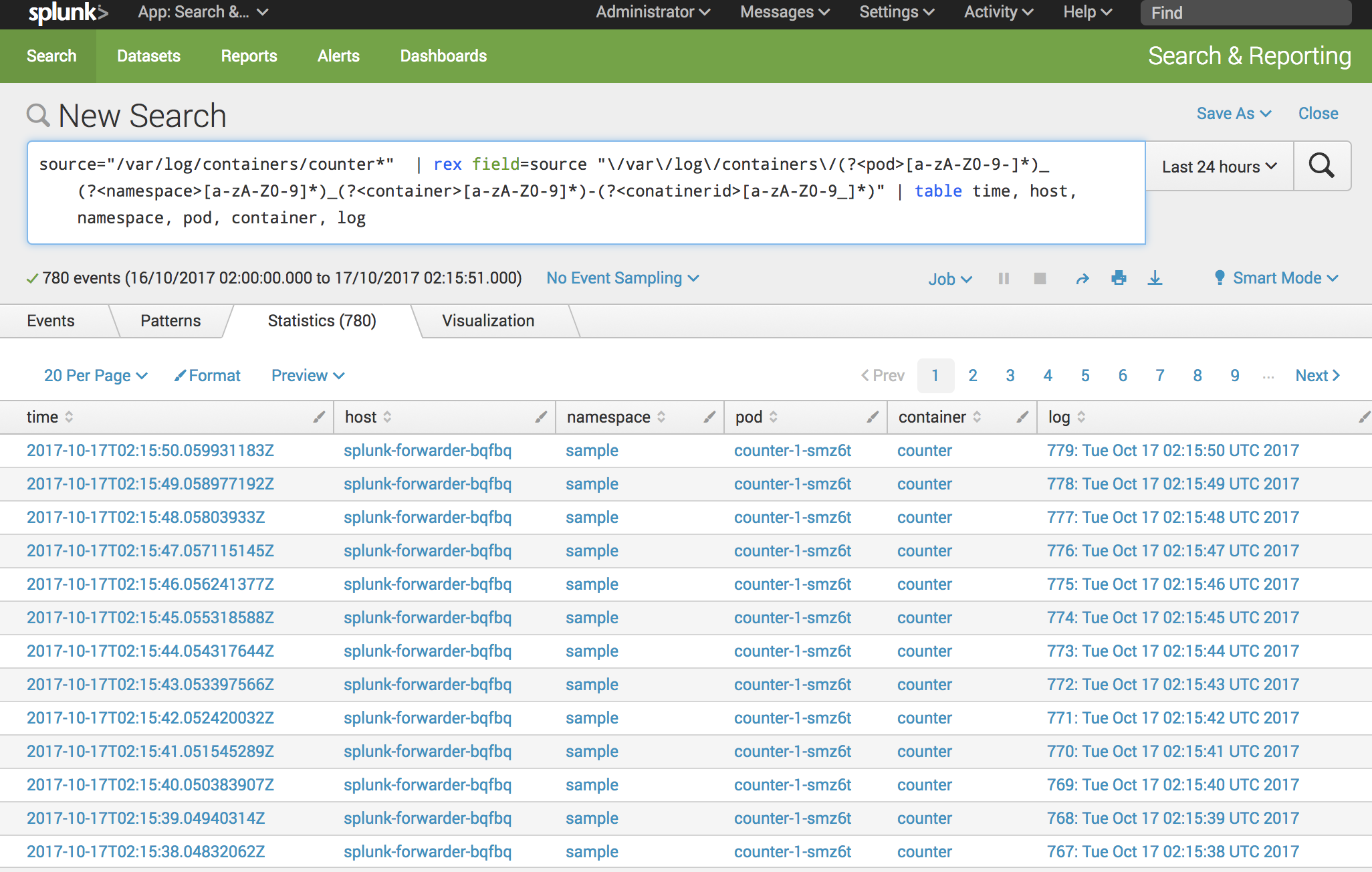

You can quickly rip out container details from the log files with this filter:

source="/var/log/containers/counter-1-*" | rex field=source "\/var\/log\/containers\/(?<pod>[a-zA-Z0-9-]*)_(?<namespace>[a-zA-Z0-9]*)_(?<container>[a-zA-Z0-9]*)-(?<containerid>[a-zA-Z0-9_]*)" | table time, host, namespace, pod, container, log
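The filter works because the container log file names follow the pattern pod_namespace_container-containerid.log. To make that structure concrete, here is the same split done in the shell. It is a sketch for illustration only: the function name `parse_container_log_path` and the sample path in the usage note are made up.

```shell
# Split a container log path into its parts and print them on one line.
# The body runs in a subshell, so the helper variables do not leak.
parse_container_log_path() (
  base="${1##*/}"          # drop the directory
  base="${base%.log}"      # drop the extension
  pod="${base%%_*}"        # everything before the first underscore
  rest="${base#*_}"
  namespace="${rest%%_*}"  # between the first and second underscore
  rest="${rest#*_}"
  container="${rest%-*}"   # everything before the last hyphen
  container_id="${rest##*-}"
  printf 'pod=%s namespace=%s container=%s container_id=%s\n' \
    "$pod" "$namespace" "$container" "$container_id"
)
```

For the made-up path /var/log/containers/counter-1-abcde_default_counter-0123abcd.log this prints pod=counter-1-abcde namespace=default container=counter container_id=0123abcd.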

Image pull back off, Failed to pull image, unsupported schema version 2

Ugh, stupid OpenShift docker version vs registry version issue. There's a workaround. First, ssh onto the master:

$ ssh -A ec2-user@$(terraform output bastion-public_ip)

$ ssh master.openshift.local

Now elevate privileges, enable v2 of the registry schema and restart:

sudo su

oc set env dc/docker-registry -n default REGISTRY_MIDDLEWARE_REPOSITORY_OPENSHIFT_ACCEPTSCHEMA2=true

systemctl restart origin-master.service

You should now be able to deploy. More info here.

OpenShift Setup Issues

TASK [openshift_manage_node : Wait for Node Registration] **********************

FAILED - RETRYING: Wait for Node Registration (50 retries left).

fatal: [node2.openshift.local -> master.openshift.local]: FAILED! => {"attempts": 50, "changed": false, "failed": true, "results": {"cmd": "/bin/oc get node node2.openshift.local -o json -n default", "results": [{}], "returncode": 0, "stderr": "Error from server (NotFound): nodes \"node2.openshift.local\" not found\n", "stdout": ""}, "state": "list"}

to retry, use: --limit @/home/ec2-user/openshift-ansible/playbooks/byo/config.retry

This issue appears to be due to a bug in the Kubernetes / AWS cloud provider configuration, which is documented here:

dwmkerr/terraform-aws-openshift#40

At this stage if the AWS generated hostnames for OpenShift nodes are specified in the inventory, then this problem should disappear. If internal DNS names are used (e.g. node1.openshift.internal) then this issue will occur.
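For reference, the AWS generated private host name can be worked out from a node's private IP address. The sketch below assumes the default IP-based naming scheme (ip-a-b-c-d.ec2.internal in us-east-1, ip-a-b-c-d.REGION.compute.internal elsewhere); the function name `aws_private_hostname` is hypothetical.

```shell
# Print the default AWS private DNS name for an instance.
# $1 = private IPv4 address, $2 = region.
# The body runs in a subshell, so the helper variable does not leak.
aws_private_hostname() (
  ip_dashed=$(printf '%s' "$1" | tr '.' '-')
  if [ "$2" = 'us-east-1' ]; then
    printf 'ip-%s.ec2.internal\n' "$ip_dashed"
  else
    printf 'ip-%s.%s.compute.internal\n' "$ip_dashed" "$2"
  fi
)
```

These are the names that would go into the inventory in place of internal DNS names such as node1.openshift.internal.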

Unable to restart service origin-master-api

Failure summary:

1. Hosts: ip-10-0-1-129.ec2.internal

Play: Configure masters

Task: restart master api

Message: Unable to restart service origin-master-api: Job for origin-master-api.service failed because the control process exited with error code. See "systemctl status origin-master-api.service" and "journalctl -xe" for details.

This section is intended for those who want to update or modify the code.

CircleCI 2 is used to run builds. You can run a CircleCI build locally with:

make circleci

Currently, this build will lint the code (no tests are run).

tflint is used to lint the code on the CI server. You can lint the code locally with:

make lint

- https://www.udemy.com/openshift-enterprise-installation-and-configuration - The basic structure of the network is based on this course.

- https://blog.openshift.com/openshift-container-platform-reference-architecture-implementation-guides/ - Detailed guide on highly available solutions, including production grade AWS setup.

- https://access.redhat.com/sites/default/files/attachments/ocp-on-gce-3.pdf - Some useful info on using the bastion for installation.

- http://dustymabe.com/2016/12/07/installing-an-openshift-origin-cluster-on-fedora-25-atomic-host-part-1/ - Great guide on cluster setup.

- Deploying OpenShift Container Platform 3.5 on AWS