
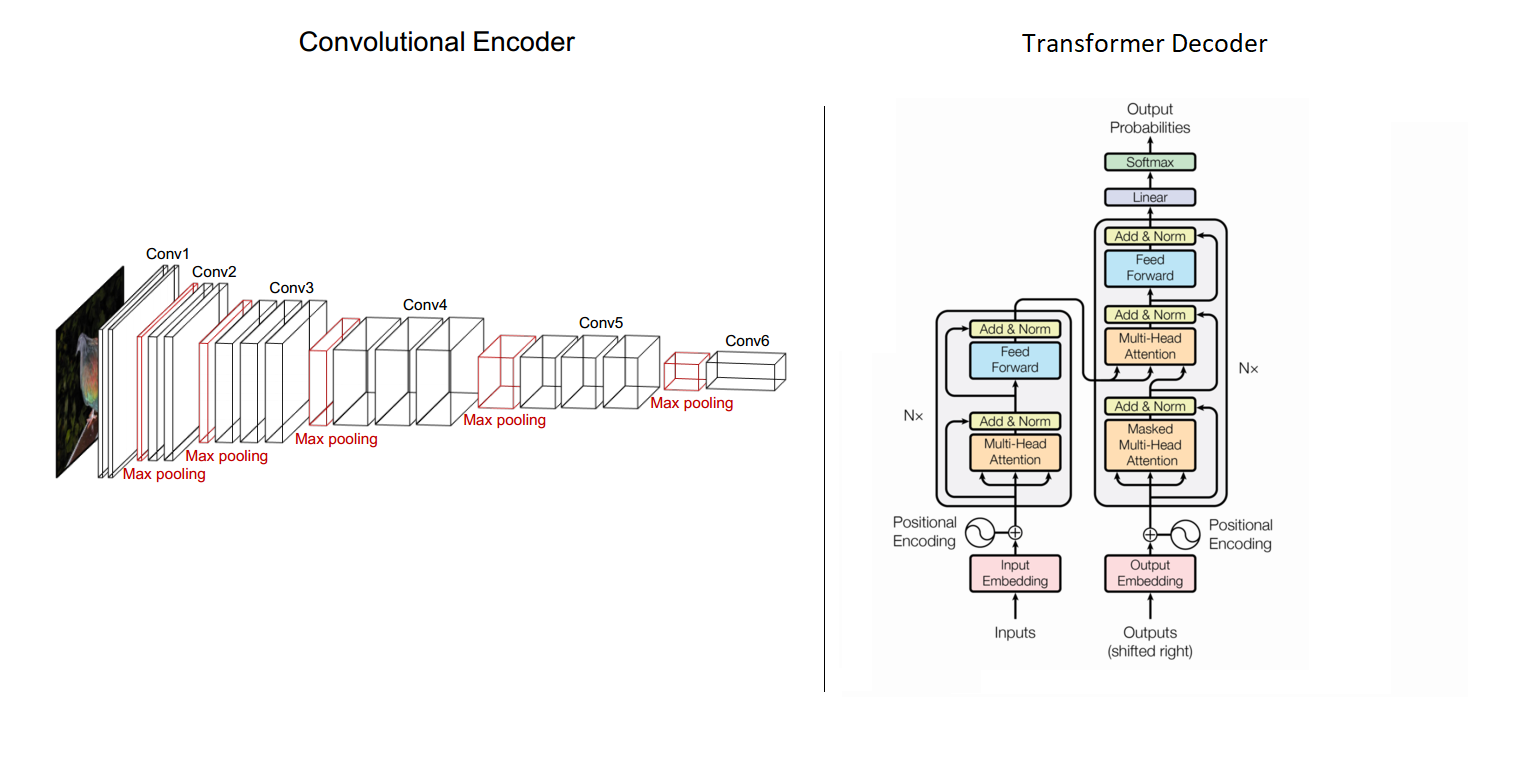

This is an implementation of the attention mechanism for image captioning. The model uses an encoder-decoder architecture, where a CNN (EfficientNet) acts as the encoder and a Transformer acts as the decoder.

The model extracts features/representations from an input image, and uses attention to generate a caption from relevant parts of these features.


At each step of the output sequence, attention lets the model generate the next token mainly from the parts of the input that are relevant to it.
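As a rough illustration of that idea, here is a minimal NumPy sketch of scaled dot-product attention between caption positions and image features. It is not the code from this repository: the shapes (a 7x7 feature grid flattened to 49 vectors, 5 caption positions) and all names are assumptions made for the example, and the learned query/key/value projections of a real Transformer are left out.

```python
import numpy as np

def scaled_dot_product_attention(query, key, value):
    """query: (T, d); key and value: (S, d). Returns (output, weights)."""
    d = query.shape[-1]
    scores = query @ key.T / np.sqrt(d)                    # (T, S) similarity scores
    scores = scores - scores.max(axis=-1, keepdims=True)   # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # softmax over image regions
    return weights @ value, weights

rng = np.random.default_rng(0)
image_features = rng.normal(size=(49, 256))  # hypothetical 7x7 grid, flattened
token_queries = rng.normal(size=(5, 256))    # hypothetical 5 caption positions

context, attn_weights = scaled_dot_product_attention(
    token_queries, image_features, image_features
)
```

Each row of `attn_weights` sums to 1 and says how much each image region contributes to the token at that position.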


Parallel processing gives the Transformer an advantage over recurrent attention models such as Bahdanau attention. In a recurrent model, everything learned about earlier positions has to pass through the hidden state at each step, so every step becomes a bottleneck. This leads to worse performance on longer sequences.
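One way to picture the parallelism: with a causal (look-ahead) mask, a Transformer decoder can process all caption positions in one matrix operation during training, instead of stepping through them one at a time. The sketch below assumes the standard causal-mask setup and is not taken from this repository. It omits the learned projections and multiple heads, and the sequence length of 6 is arbitrary.

```python
import numpy as np

def causal_mask(length):
    """Lower-triangular mask: position t may attend to positions <= t only."""
    return np.tril(np.ones((length, length), dtype=bool))

def masked_self_attention(x):
    """x: (T, d). All T positions are handled in a single batch of matmuls."""
    T, d = x.shape
    scores = x @ x.T / np.sqrt(d)
    scores = np.where(causal_mask(T), scores, -1e9)   # block future positions
    scores -= scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ x, weights

rng = np.random.default_rng(0)
caption_embeddings = rng.normal(size=(6, 256))  # hypothetical embedded caption
outputs, weights = masked_self_attention(caption_embeddings)
```

No position can see a later token, yet nothing in the computation waits on a previous step's hidden state.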


2D positional encoding lets the model keep track of the spatial layout of the image features, i.e. which region of the image each feature vector corresponds to.
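A common way to build such an encoding is to use half of the channels for the row index and the other half for the column index, each with the usual sinusoidal scheme. The sketch below assumes that variant and a hypothetical 7x7 feature grid. The encoding actually used in this project may differ.

```python
import numpy as np

def sinusoid_1d(positions, dim):
    """Standard sinusoidal encoding. dim must be even. Returns (len(positions), dim)."""
    i = np.arange(dim // 2)
    freqs = 1.0 / (10000 ** (2 * i / dim))
    angles = positions[:, None] * freqs[None, :]
    enc = np.zeros((len(positions), dim))
    enc[:, 0::2] = np.sin(angles)
    enc[:, 1::2] = np.cos(angles)
    return enc

def positional_encoding_2d(height, width, dim):
    """First half of the channels encodes the row, second half the column."""
    assert dim % 4 == 0, "dim must be divisible by 4"
    half = dim // 2
    row_enc = sinusoid_1d(np.arange(height), half)  # (H, half)
    col_enc = sinusoid_1d(np.arange(width), half)   # (W, half)
    pe = np.zeros((height, width, dim))
    pe[:, :, :half] = row_enc[:, None, :]   # same along a row
    pe[:, :, half:] = col_enc[None, :, :]   # same along a column
    return pe

pe = positional_encoding_2d(7, 7, 256)  # added to the (7, 7, 256) feature map
```

Two cells in the same row share the first 128 channels, and two cells in the same column share the last 128, so every grid cell ends up with a distinct code.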

The Flickr 8k Dataset was used.

The vocabulary is built from the top-k most frequent words/tokens in the dataset. This way the model learns on words that appear often enough to be learned well, which improves the chance of convergence.
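A minimal sketch of top-k vocabulary building with the standard library is shown below. The special tokens, the whitespace tokenizer, and the function names are assumptions for the example, not necessarily what this repository uses.

```python
from collections import Counter

def build_vocab(captions, top_k, specials=("<pad>", "<start>", "<end>", "<unk>")):
    """Map the most frequent words to ids; the special tokens count toward top_k."""
    counts = Counter(word for caption in captions for word in caption.lower().split())
    keep = top_k - len(specials)
    words = [word for word, _ in counts.most_common(keep)]
    return {token: idx for idx, token in enumerate(list(specials) + words)}

def encode(caption, vocab):
    """Turn a caption into ids; words outside the vocabulary become <unk>."""
    unk = vocab["<unk>"]
    body = [vocab.get(word, unk) for word in caption.lower().split()]
    return [vocab["<start>"]] + body + [vocab["<end>"]]

captions = [
    "a dog runs on the grass",
    "a dog jumps over a log",
    "a child plays on the grass",
]
vocab = build_vocab(captions, top_k=8)
ids = encode("a dog rides a skateboard", vocab)
```

Rare words such as "rides" and "skateboard" fall outside the vocabulary and are mapped to `<unk>`, so the model never has to learn an embedding from only one or two examples.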

The encoder part of the model is a pretrained EfficientNet. I trained the Transformer myself. With a vocabulary size of 5000, an embedding size of 256, 4 encoder/decoder layers (Nx), and 8 heads, the model takes about 30 epochs to converge.
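For reference, the hyperparameters above can be collected in a small config object. This is only a sketch: the class and field names are hypothetical and do not come from the source code. It also shows two quantities that follow from those numbers: the per-head dimension and the size of the token embedding table.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class CaptionerConfig:
    # Values taken from the README; the names are hypothetical.
    vocab_size: int = 5000
    embed_dim: int = 256
    num_layers: int = 4
    num_heads: int = 8

    @property
    def head_dim(self) -> int:
        """Each attention head works on embed_dim / num_heads channels."""
        if self.embed_dim % self.num_heads != 0:
            raise ValueError("embed_dim must be divisible by num_heads")
        return self.embed_dim // self.num_heads

    @property
    def embedding_params(self) -> int:
        """Number of weights in the token embedding table."""
        return self.vocab_size * self.embed_dim

config = CaptionerConfig()
print(config.head_dim, config.embedding_params)
```

With these settings each head attends over 32 channels, and the embedding table alone holds 1,280,000 weights.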

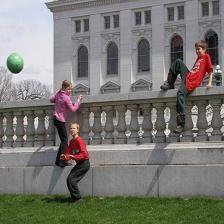

Below are some of the predicted outputs.

This model has been deployed to a mobile application. Feel free to use it if you do not have the Python environment needed to run the source code.