deep-learning computer-vision deep-generative-modeling generative-adversarial-networks gan NVIDIA stylegan tensorflow

This is a basic, unofficial implementation of the research paper "A Style-Based Generator Architecture for Generative Adversarial Networks" (StyleGAN), published by NVIDIA in 2018. Here is the official implementation.

- Mapping of the latent vector to an intermediate latent vector, to reduce feature entanglement

- Use of bilinear upsampling followed by regular convolutions instead of transposed convolutions

- Adaptive Instance Normalization

- Constant generator input

- Application of the intermediate latent vector (the style) to each block in the generator

- Addition of noise to each block in the generator

- Gradient penalty loss

- Mixing regularization
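
Two of the items above, adaptive instance normalization and per-block noise, can be illustrated with a minimal sketch. It is written in plain NumPy rather than TensorFlow to stay framework-neutral and is not taken from this repository; the function names `adain` and `add_noise`, the channels-last layout, and the tensor shapes are all assumptions made for illustration. In a real generator the style scale and bias would come from a learned affine transform of the intermediate latent vector, and the noise strength would be a learned per-channel parameter.

```python
import numpy as np

def adain(x, style_scale, style_bias, eps=1e-8):
    """Adaptive instance normalization (illustrative, not the repo's code).

    x:           feature maps, shape (N, H, W, C)
    style_scale: per-sample, per-channel scale, shape (N, C)
    style_bias:  per-sample, per-channel bias, shape (N, C)
    """
    # Normalize each channel of each sample over its spatial dimensions.
    mean = x.mean(axis=(1, 2), keepdims=True)
    std = x.std(axis=(1, 2), keepdims=True)
    normalized = (x - mean) / (std + eps)
    # Re-scale and shift with the style-derived statistics.
    return style_scale[:, None, None, :] * normalized + style_bias[:, None, None, :]

def add_noise(x, noise_strength, rng):
    """Add single-channel Gaussian noise, scaled per channel, to x.

    noise_strength has shape (C,); it is fixed here but would be learned.
    """
    noise = rng.standard_normal(size=(x.shape[0], x.shape[1], x.shape[2], 1))
    return x + noise * noise_strength

rng = np.random.default_rng(0)
features = rng.standard_normal(size=(2, 4, 4, 8))   # hypothetical 4x4 feature maps
scale = np.full((2, 8), 2.0)                        # stand-in for style outputs
bias = np.full((2, 8), 0.5)

noisy = add_noise(features, np.full(8, 0.1), rng)
styled = adain(noisy, scale, bias)
```

After `adain`, every channel of every sample has (up to `eps`) the mean and standard deviation given by the style, regardless of the statistics of the incoming features.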

The dataset used was CelebA-HQ, with images resized to 256x256 pixels.

Below are samples generated after 230,000 iterations of training with a batch size of 12, a channel coefficient of 48, and a base learning rate of 1e-4.

Training on a Tesla P100 GPU with the above configuration takes approximately 24 hours.

Click here to get the pretrained weights

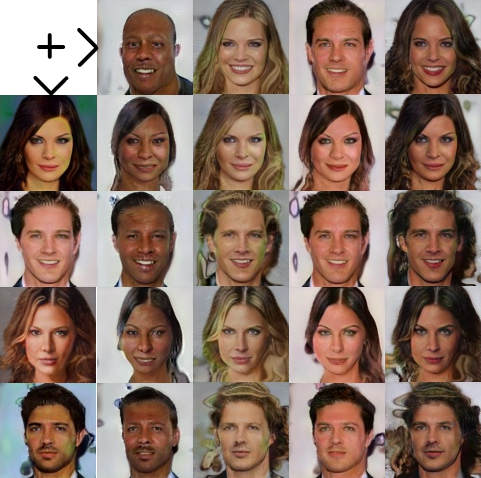

The following images were generated by mixing coarse styles (pose, gender, smile) from the images in the left column with fine styles (skin color, hair color, etc.) from the images in the top row.
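
As a rough sketch of how such mixing can be set up, the snippet below builds one style vector per generator block, taking the early (coarse) blocks from one intermediate latent and the remaining (fine) blocks from another. This is an illustration in NumPy, not this repository's code: the helper `mix_styles`, the latent size of 512, the count of 7 blocks (assuming resolutions double from 4x4 to 256x256), and the crossover point of 3 are all assumptions.

```python
import numpy as np

def mix_styles(w_coarse, w_fine, num_blocks, crossover):
    """Return per-block styles of shape (num_blocks, latent_dim).

    Blocks [0, crossover) use w_coarse; blocks [crossover, num_blocks) use w_fine.
    """
    use_coarse = np.arange(num_blocks) < crossover
    return np.where(use_coarse[:, None], w_coarse[None, :], w_fine[None, :])

rng = np.random.default_rng(0)
w_a = rng.standard_normal(512)   # stand-in for the left-column image's intermediate latent
w_b = rng.standard_normal(512)   # stand-in for the top-row image's intermediate latent

num_blocks = 7                   # assumed: 4x4, 8x8, ..., 256x256
per_block_styles = mix_styles(w_a, w_b, num_blocks, crossover=3)
```

Each row of `per_block_styles` would then drive the normalization in the corresponding generator block. During training, mixing regularization picks the crossover point at random for a fraction of the samples.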

Here's an interpolation video of various generated images.
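
The README does not say how the video was produced. One common approach, sketched here under that assumption, is to interpolate linearly between two latent vectors and render one frame per intermediate vector. The helper `interpolate_latents`, the latent size of 512, and the frame count are hypothetical.

```python
import numpy as np

def interpolate_latents(start, end, num_frames):
    """Linearly interpolate from start to end, returning (num_frames, latent_dim)."""
    t = np.linspace(0.0, 1.0, num_frames)[:, None]   # interpolation weights in [0, 1]
    return (1.0 - t) * start[None, :] + t * end[None, :]

rng = np.random.default_rng(0)
z_start = rng.standard_normal(512)
z_end = rng.standard_normal(512)

# Each row would be passed through the generator to produce one video frame.
frames = interpolate_latents(z_start, z_end, num_frames=5)
```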

© 2020 Moses Odhiambo