by

Bagus Tris Atmaja,

Masato Akagi

Email: bagus@ep.it.ac.id

This repository accompanies the above paper and consists of Python code, LaTeX sources, and figures.

This paper has been accepted for publication in APSIPA ASC 2020.

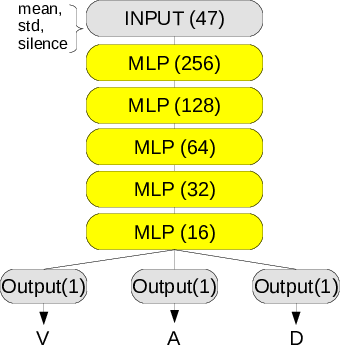

Architecture of the proposed method.

Modern deep learning architectures are ordinarily run on high-performance computing facilities because of the large size of their input features and the complexity of their models. This paper proposes traditional multilayer perceptrons (MLP) with deep layers and a small input size to address that computational requirement. The results show that the proposed deep MLP outperformed modern deep learning architectures, i.e., LSTM and CNN, with the same number of layers and parameter values. The deep MLP exhibited the highest performance in both speaker-dependent and speaker-independent scenarios on the IEMOCAP and MSP-IMPROV corpora.
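To make the effect of a small input size concrete, the sketch below counts the trainable parameters of a fully connected network from its layer widths. It is only an illustration: the function name `mlp_param_count` and all layer sizes are hypothetical and are not taken from the paper.

```python
def mlp_param_count(layer_sizes):
    """Count weights and biases of a fully connected network.

    layer_sizes lists the width of every layer, input first and output last.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        # Each dense layer has n_in * n_out weights plus n_out biases.
        total += n_in * n_out + n_out
    return total


# Hypothetical sizes: a small input vector, five hidden layers, three outputs.
small_input = mlp_param_count([32, 64, 64, 64, 64, 64, 3])
# Same hidden layers with a much larger (hypothetical) input vector.
large_input = mlp_param_count([4096, 64, 64, 64, 64, 64, 3])
print(small_input, large_input)
```

With the hidden layers held fixed, the first layer dominates the count once the input grows, which is why a compact input keeps a deep MLP cheap.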

All source code used to generate the results and figures in the paper is in the code folder. The calculations and figures are produced by running each Python script. The data used in this study is provided in data, and the sources for the manuscript text and figures are in latex. Results generated by the code, if any, are saved in results. See the README.md files in each directory for a full description.

You can download a copy of all the files in this repository by cloning the git repository:

git clone https://github.com/bagustris/deep_mlp_ser.git

You'll need a working Python environment to run the code. The recommended way to set up your environment is through the Anaconda Python distribution, which provides the conda package manager. Anaconda can be installed in your user directory and does not interfere with the system Python installation. The required dependencies are specified in the file requirements.txt.

We use pip virtual environments to manage the project dependencies in isolation. Thus, you can install our dependencies without causing conflicts with your setup (even with different Python versions).

Run the following command in the repository folder (where requirements.txt is located) to create a separate environment:

python3.6 -m venv REPO_NAME

Before installing the dependencies or running any code, you must activate the environment:

source REPO_NAME/bin/activate

Then install all required dependencies in it:

pip install -r requirements.txt
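Whether an environment is active can also be checked from Python itself. The snippet below is a minimal sketch, not part of the repository: the helper name `in_virtualenv` is hypothetical, and the check targets environments created with `venv`. A conda environment may not be detected this way.

```python
import sys


def in_virtualenv():
    """Return True when the interpreter runs inside a venv-style environment."""
    # Inside a venv, sys.prefix points at the environment while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


print("virtual environment active:", in_virtualenv())
```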

To reproduce the results, run each related Python file in the code folder.
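Running the files one by one can be automated with a small helper. The following is a sketch under stated assumptions rather than a script shipped with the repository: it assumes the scripts sit directly inside one folder and can run independently of each other, and the names `find_scripts` and `run_scripts` are hypothetical.

```python
import subprocess
import sys
from pathlib import Path


def find_scripts(folder):
    """Return the Python files directly inside folder, sorted by name."""
    return sorted(Path(folder).glob("*.py"))


def run_scripts(folder):
    """Run every script in folder with the current interpreter.

    Returns a dict mapping each script name to its exit code.
    """
    exit_codes = {}
    for script in find_scripts(folder):
        # cwd is set to the script's folder so relative paths resolve there.
        result = subprocess.run([sys.executable, script.name], cwd=script.parent)
        exit_codes[script.name] = result.returncode
    return exit_codes
```

Calling `run_scripts("code")` from the repository root would run each script in turn with the interpreter of the active environment. A non-zero exit code marks a script that failed.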

All source code is made available under a BSD 3-clause license. You can freely use and modify the code, without warranty, so long as you provide attribution to the authors. See LICENSE.md for the full license text.

The manuscript text is not public; its distribution is subject to IEEE policy. The authors reserve the rights to the article content, which has been accepted for publication in APSIPA ASC 2020.

[1] B. T. Atmaja and M. Akagi, “Deep Multilayer Perceptrons for Dimensional Speech Emotion Recognition,” in 2020 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference, APSIPA ASC 2020 - Proceedings, 2020, pp. 325–331.