arXiv | PDF | Website | Gradio Demo

Hadas Orgad*, Bahjat Kawar*, and Yonatan Belinkov, Technion.

* Equal Contribution.

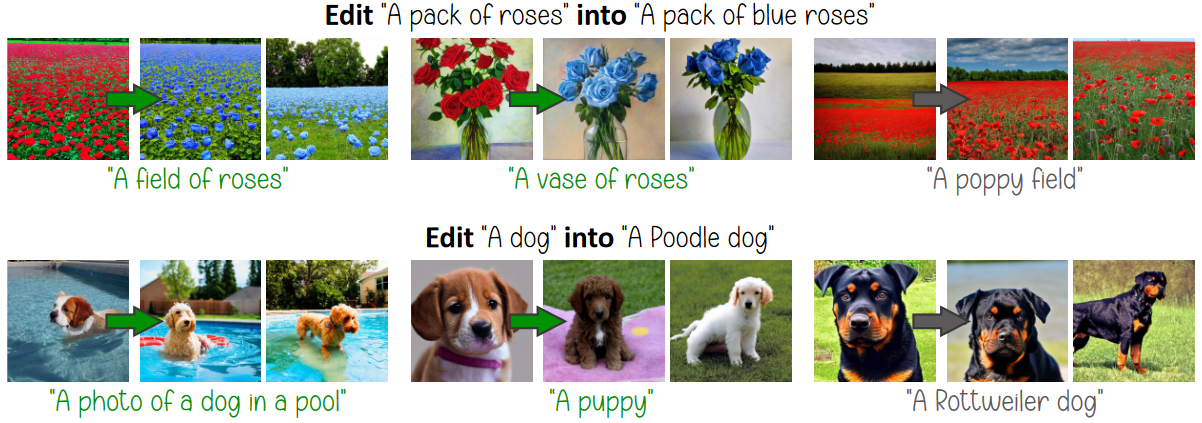

We introduce TIME (Text-to-Image Model Editing), a method for editing implicit assumptions in text-to-image diffusion models.

New: Check out the Gradio demo and edit text-to-image models from your browser!

This repo was tested with PyTorch 1.13.1, CUDA 11.6.2, NumPy 1.23.4, and Diffusers 0.9.0.

An example environment is given in environment.yml.

The general command to apply TIME and see results:

python apply_time.py {--with_to_k} {--with_augs} --train_func {TRAIN_FUNC} --lamb {LAMBDA} --save_dir {SAVE_DIR} --dataset {DATASET} --begin_idx {BEGIN} --end_idx {END} --num_seeds {SEEDS}

where the options are:

- `--with_to_k`: whether to edit the key projection matrix along with the value projection matrix.
- `--with_augs`: whether to apply textual augmentations for editing.
- `TRAIN_FUNC`: the name of the editing function to use (`train_closed_form` or `baseline`).
- `LAMBDA`: the regularization hyperparameter to be used in `train_closed_form` (default: `0.1`).
- `SAVE_DIR`: the directory name to save into.
- `DATASET`: the dataset csv file name (default: `TIMED_test_set_filtered_SD14.csv`).
- `BEGIN`: the index to begin from in the dataset (inclusive).
- `END`: the index to end on in the dataset (exclusive).
- `SEEDS`: the number of seeds to generate images for in each prompt.
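To give some intuition for what `LAMBDA` controls, here is a minimal NumPy sketch of a ridge-regularized closed-form update for a single projection matrix. It is an illustration under stated assumptions, not the code in `apply_time.py`: the objective (fit the target outputs while staying close to the original weights), the function name `closed_form_edit`, and the argument names are all hypothetical.

```python
import numpy as np


def closed_form_edit(W, C, V_target, lamb=0.1):
    """Return W' minimizing  sum_i ||W' c_i - v_i||^2 + lamb * ||W' - W||_F^2.

    W        : (d_out, d_in) original projection matrix.
    C        : (n, d_in) input embeddings c_i, one per row.
    V_target : (n, d_out) desired outputs v_i, one per row.
    lamb     : regularization strength (assumed to play the role of --lamb).
    """
    d_in = W.shape[1]
    # Setting the gradient to zero gives:
    #   W' (lamb * I + C^T C) = lamb * W + V_target^T C
    A = lamb * np.eye(d_in) + C.T @ C   # (d_in, d_in), symmetric, invertible for lamb > 0
    B = lamb * W + V_target.T @ C       # (d_out, d_in)
    # Solve W' A = B. Since A is symmetric, this is A W'^T = B^T.
    return np.linalg.solve(A, B.T).T


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    W = rng.normal(size=(4, 6))
    C = rng.normal(size=(2, 6))
    V_target = rng.normal(size=(2, 4))
    W_new = closed_form_edit(W, C, V_target, lamb=0.1)
    print("fit error:", np.linalg.norm(W_new @ C.T - V_target.T))
    print("change in W:", np.linalg.norm(W_new - W))
```

Under this assumed objective, a larger `lamb` keeps the edited matrix closer to the original, while a smaller `lamb` fits the target outputs more tightly.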

For example, to run the main experiment on TIMED presented in the paper:

python apply_time.py --with_to_k --with_augs --train_func train_closed_form --lamb 0.1 --save_dir results --begin_idx 0 --end_idx 104 --num_seeds 24
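Because `--begin_idx` is inclusive and `--end_idx` is exclusive, a run like the one above can be split into contiguous index ranges. The sketch below only builds the command strings, using the flags documented in this README. The helper name `chunked_commands` and its parameters are hypothetical, and it assumes that separate index ranges can be run independently, which this README does not state.

```python
import shlex


def chunked_commands(begin, end, chunk_size, save_dir="results",
                     num_seeds=24, lamb=0.1):
    """Build one apply_time.py command per [start, stop) slice of the dataset."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    commands = []
    for start in range(begin, end, chunk_size):
        stop = min(start + chunk_size, end)  # end index stays exclusive
        argv = [
            "python", "apply_time.py",
            "--with_to_k", "--with_augs",
            "--train_func", "train_closed_form",
            "--lamb", str(lamb),
            "--save_dir", save_dir,
            "--begin_idx", str(start),
            "--end_idx", str(stop),
            "--num_seeds", str(num_seeds),
        ]
        commands.append(shlex.join(argv))
    return commands


if __name__ == "__main__":
    # Split the 0..104 range from the example above into four slices.
    for cmd in chunked_commands(0, 104, 26):
        print(cmd)
```

Each printed line can be pasted into a shell or dispatched to a separate job. Whether results from several runs can share one `SAVE_DIR` depends on the script, so using a distinct directory per slice is the cautious choice.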

@article{orgad2023editing,
  title={Editing Implicit Assumptions in Text-to-Image Diffusion Models},
  author={Orgad, Hadas and Kawar, Bahjat and Belinkov, Yonatan},
  journal={arXiv:2303.08084},
  year={2023}
}

This implementation is inspired by: