This repository contains the code for Pre-trained Adversarial Perturbations (PAPs), introduced in the paper Pre-trained Adversarial Perturbations (NeurIPS 2022).

If you find this paper useful in your research, please consider citing:

    @inproceedings{
    ban2022pretrained,
    title={Pre-trained Adversarial Perturbations},
    author={Yuanhao Ban and Yinpeng Dong},
    booktitle={Thirty-Sixth Conference on Neural Information Processing Systems},
    year={2022},
    url={https://openreview.net/forum?id=ZLcwSgV-WKH}
    }

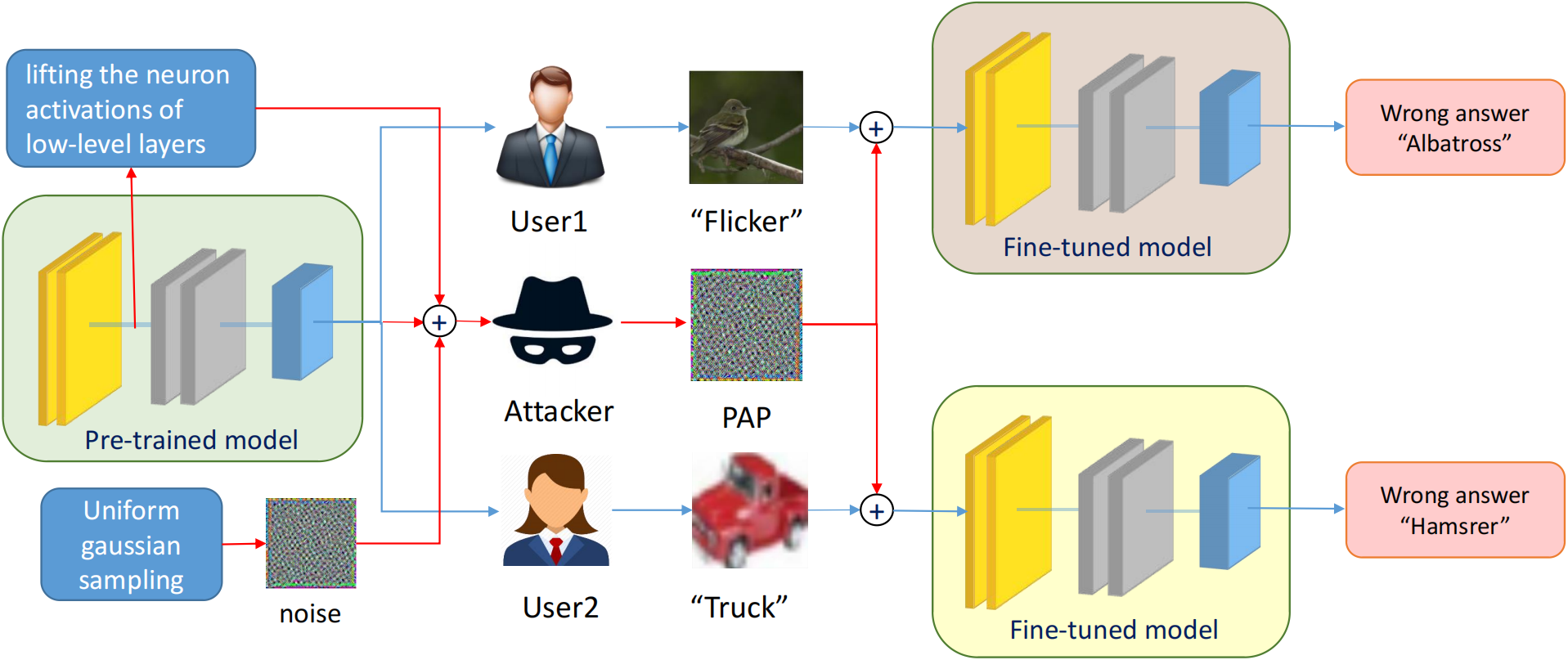

In this paper, we study the robustness of pre-trained models by introducing Pre-trained Adversarial Perturbations (PAPs): universal perturbations crafted on a pre-trained model that remain effective when attacking its fine-tuned versions, without any knowledge of the downstream tasks.

To this end, we propose a Low-Level Layer Lifting Attack (L4A) method to generate effective PAPs by lifting the neuron activations of low-level layers of the pre-trained models. Equipped with an enhanced noise augmentation strategy, L4A is effective at generating more transferable PAPs against the fine-tuned models. Extensive experiments on typical pre-trained vision models and ten downstream tasks demonstrate that our method improves the attack success rate by a large margin compared to the state-of-the-art methods.
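The idea of "lifting" low-level activations can be illustrated with a toy example. The sketch below is not the repository's implementation: it assumes a fixed linear map `W` as a stand-in for a low-level layer, uses the mean squared activation norm as the quantity to lift, and runs sign-gradient ascent under an L-infinity budget. All function names, the toy data, and the values of `eps`, `step`, and `iters` are hypothetical.

```python
# Toy illustration only: a fixed linear map stands in for a low-level layer.
# The repository's attacks.py works on real PyTorch models instead.

def layer(W, x):
    """Apply the stand-in 'low-level layer': a plain matrix-vector product."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]

def lifted_energy(W, xs, delta):
    """Mean squared activation norm over the batch for inputs x + delta."""
    total = 0.0
    for x in xs:
        act = layer(W, [a + d for a, d in zip(x, delta)])
        total += sum(a * a for a in act)
    return total / len(xs)

def energy_gradient(W, xs, delta):
    """Gradient of lifted_energy w.r.t. delta: mean of 2 * W^T W (x + delta)."""
    grad = [0.0] * len(delta)
    for x in xs:
        act = layer(W, [a + d for a, d in zip(x, delta)])
        for j in range(len(delta)):
            grad[j] += 2.0 * sum(W[i][j] * act[i] for i in range(len(W)))
    return [g / len(xs) for g in grad]

def craft_perturbation(W, xs, eps=0.05, step=0.01, iters=20):
    """Sign-gradient ascent on the activation energy under an L-inf budget."""
    delta = [0.0] * len(xs[0])
    for _ in range(iters):
        grad = energy_gradient(W, xs, delta)
        for j, g in enumerate(grad):
            sign = (g > 0) - (g < 0)
            # Ascend, then project back into the [-eps, eps] box.
            delta[j] = max(-eps, min(eps, delta[j] + step * sign))
    return delta

# Hypothetical toy layer weights and a batch of three 3-dimensional "images".
W = [[1.0, -0.5, 0.2], [0.3, 0.8, -0.6]]
xs = [[0.2, 0.4, 0.1], [0.5, 0.1, 0.3], [0.3, 0.3, 0.3]]

delta = craft_perturbation(W, xs)
before = lifted_energy(W, xs, [0.0] * 3)
after = lifted_energy(W, xs, delta)
print(before, after)
```

A single `delta` is shared by every input in the batch, which is what makes the perturbation universal. The sketch leaves out the clipping of perturbed images to the valid pixel range and the noise augmentation mentioned above.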

Clone this repo: git clone https://github.com/banyuanhao/PAP.git

- Python 3.8
- PyTorch 1.8.1
- Torchvision
- tqdm
- timm 0.3.2

Please download the ImageNet, CARS, PETS, FOOD, DTD, CIFAR10, CIFAR100, FGVC, CUB, SVHN, and STL10 datasets and put them in a single folder. Pass that folder to the scripts with --data_path.
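Before launching a long run, it can help to check that the data folder is complete. The helper below is a hypothetical sketch, not part of the repository. The sub-folder names in `EXPECTED` are assumptions, since the exact layout the scripts expect is not documented here, so adjust them to your own folder.

```python
import os
import tempfile

# Hypothetical sub-folder names: adjust these to match your own data folder.
EXPECTED = ["imagenet", "cars", "pets", "food", "dtd", "cifar10",
            "cifar100", "fgvc", "cub", "svhn", "stl10"]

def missing_datasets(data_path, expected=EXPECTED):
    """Return the expected sub-folders that are absent under data_path."""
    return [name for name in expected
            if not os.path.isdir(os.path.join(data_path, name))]

# Small demonstration on a temporary folder that only contains "cifar10".
with tempfile.TemporaryDirectory() as root:
    os.makedirs(os.path.join(root, "cifar10"))
    still_missing = missing_datasets(root, ["cifar10", "svhn"])

print(still_missing)
```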

The repo needs the pre-trained models and the fine-tuned ones.

To get the SimCLRv2 pre-trained models, please follow this repo to convert the TensorFlow models provided here into PyTorch ones. Please download the MAE pre-trained models from here.

To fine-tune models on downstream datasets, please follow this repo.

Please configure the paths of the pre-trained and fine-tuned models in tools.py.

We provide the testing code for several baselines: STD, SSP, FFF, UAP, and UAPEPGD. We omit ASV because it is hard to integrate into this framework. Anyone interested in it can download the official code and try it.

Performing L4A_base on ResNet50 pre-trained by SimCLRv2.

python attacks.py --mode l4a_base --model_name r50_1x_sk1 --model_arch simclr --data_path your_data_folder --target_layer 0 --save_path your_save_path

Performing L4A_ugs on ViT-B pre-trained by MAE.

python attacks.py --mode l4a_ugs --model_name vit_base_patch16 --data_path your_data --mean_std uniform --mean_hi 0.6 --mean_lo 0.4 --std_hi 0.10 --std_lo 0.05 --lamuda 0.01 --save_path your_save_path
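The `--mean_lo`, `--mean_hi`, `--std_lo`, and `--std_hi` flags suggest that, with `--mean_std uniform`, the noise augmentation draws a mean and a standard deviation uniformly from these ranges and then samples Gaussian noise with them. The sketch below illustrates that reading only. It is an assumption about the flags' semantics, the function name is hypothetical, and clipping to [0, 1] is an added choice rather than something the source confirms. `--lamuda` presumably weights the noise term in the loss and is not modeled here.

```python
import random

def sample_noise_image(num_pixels, mean_lo, mean_hi, std_lo, std_hi, rng):
    """Draw mean and std uniformly, then draw Gaussian pixels clipped to [0, 1]."""
    mean = rng.uniform(mean_lo, mean_hi)
    std = rng.uniform(std_lo, std_hi)
    pixels = [min(1.0, max(0.0, rng.gauss(mean, std)))
              for _ in range(num_pixels)]
    return mean, std, pixels

# Ranges copied from the command above; the seed is arbitrary.
rng = random.Random(0)
mean, std, pixels = sample_noise_image(1000, 0.4, 0.6, 0.05, 0.10, rng)
print(mean, std, len(pixels))
```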

Performing SSP on ResNet50 pre-trained by SimCLRv2.

python attacks.py --mode ssp --model_name r50_1x_sk1 --model_arch simclr --data_path your_data_folder --save_path your_save_path

Note: to perform UAP or UAPEPGD on MAE models, you need models that have been linear-probed on ImageNet. Please refer to the MAE repo.

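Testing PAPs on fine-tuned models. The command below uses r50_1x_sk1 (ResNet50); pass r101_1x_sk1 as --model_name for ResNet101.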

python eval.py --model_name r50_1x_sk1 --model_arch simclr --uap_path your_pap_path
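For orientation, the sketch below shows one common way to score a universal perturbation: add it to every test image, clip to the valid pixel range, and report the fraction of perturbed images that the model misclassifies. It is an illustration under assumptions, not the logic of eval.py. The toy classifier, data, and function names are hypothetical, and the exact metric the script reports should be checked in the code.

```python
def apply_perturbation(image, delta):
    """Add the universal perturbation and clip each pixel back to [0, 1]."""
    return [min(1.0, max(0.0, p + d)) for p, d in zip(image, delta)]

def attack_success_rate(classify, images, labels, delta):
    """Fraction of perturbed images whose prediction differs from the label."""
    wrong = sum(classify(apply_perturbation(img, delta)) != y
                for img, y in zip(images, labels))
    return wrong / len(images)

def toy_classifier(image):
    """Toy stand-in model: predicts 1 when the mean pixel exceeds 0.5."""
    return int(sum(image) / len(image) > 0.5)

# Hypothetical two-pixel "images"; all are classified correctly when clean.
images = [[0.45, 0.45], [0.2, 0.2], [0.7, 0.9], [0.48, 0.5]]
labels = [0, 0, 1, 0]
delta = [0.06, 0.06]

clean_rate = attack_success_rate(toy_classifier, images, labels, [0.0, 0.0])
asr = attack_success_rate(toy_classifier, images, labels, delta)
print(clean_rate, asr)
```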

We provide several fine-tuned models off the shelf. Please check the following table.

| SimCLRv2 | SimCLRv2 | MAE |
|---|---|---|
| r50_1x_sk1 | r101_1x_sk1 | vit_base_patch16 |

Due to their size, we have not uploaded the fine-tuned models to the cloud. Please download the models from the link. If you are interested in them, please email me at banyh2000 at gmail.com, and I will send you a copy.

We also provide several perturbations in the perturbations folder.

- This repo is based on the SimCLRv2 repo, the SimCLRv2-Pytorch repo, and the MAE repo.
- Thanks to the UAP repo and the FFF repo. We borrowed many lines of code from them.

banyh2000 at gmail.com

dongyinpeng at gmail.com

Any discussions, suggestions and questions are welcome!