Course project for 02750 Automation of Scientific Research at CMU

This project compares different active learning methods on the MNIST dataset.

git clone https://github.com/baolef/active-learning-mnist.git

cd active-learning-mnist

python main.py

python svm.py # already run, can skip

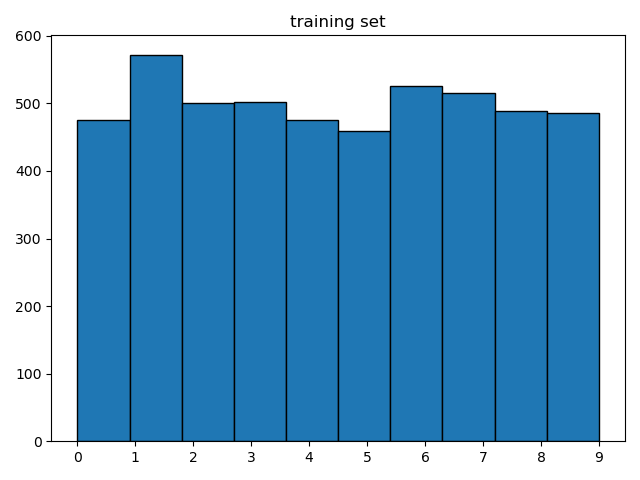

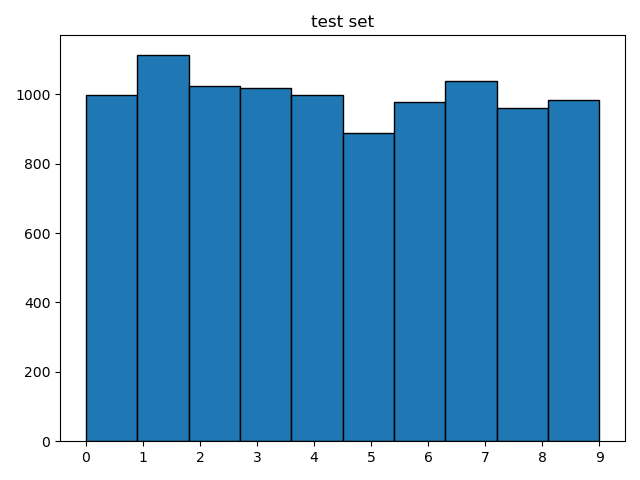

python plot_svm.py

python data.py

python boundary.py

To save computation, our training set contains 5000 images and our test set contains 10000 images.

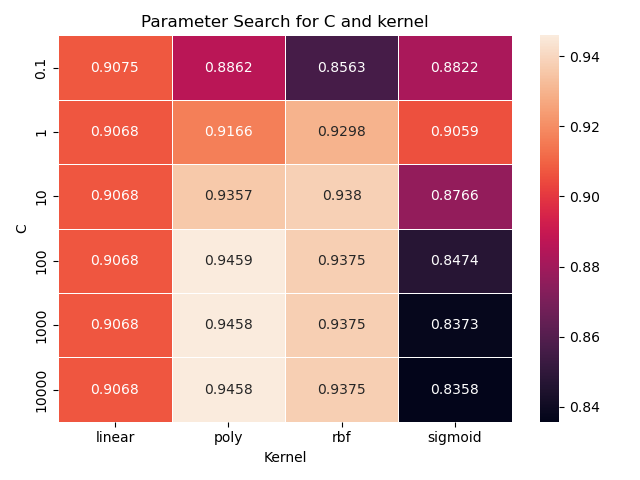

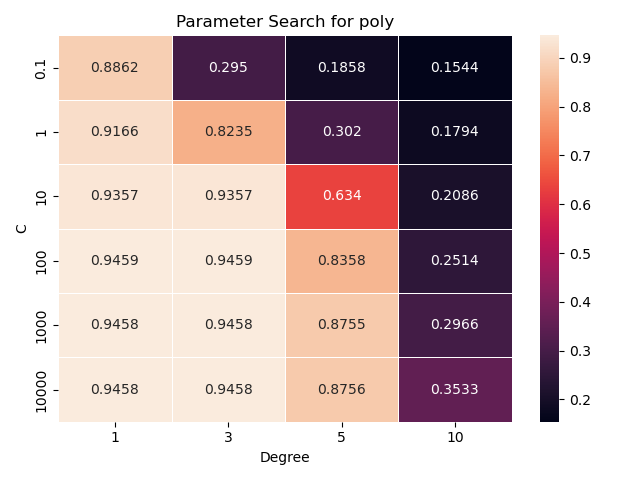

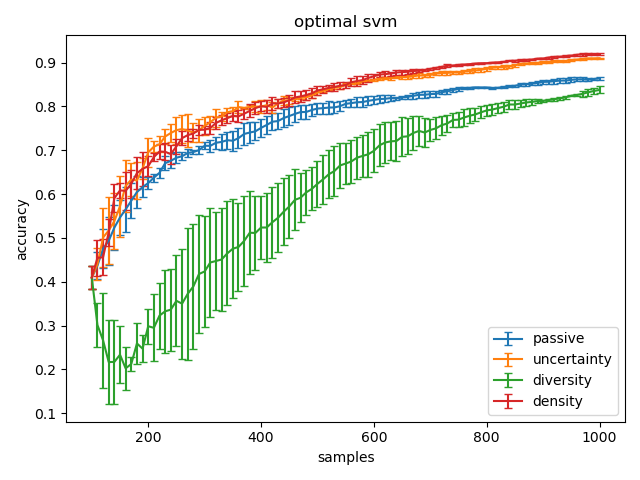

The model we use is an SVM. We tune the hyperparameters of SVC and find the optimal configuration to be {'C': 100, 'kernel': 'poly', 'degree': 3}.
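As a rough illustration of this tuning step, the sketch below runs a small grid search and then builds a model with the reported configuration. It assumes `SVC` refers to scikit-learn's `sklearn.svm.SVC`. The search grid, the 3-fold cross-validation, and the use of scikit-learn's bundled 8x8 digits as stand-in data are all assumptions made for the example, not details taken from this project.

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

# Stand-in data (assumption): scikit-learn's small bundled digits set,
# not the 5000-image MNIST subset used in this project.
X, y = load_digits(return_X_y=True)
X, y = X[:500], y[:500]

# Hypothetical search grid; the grid actually searched is not stated here.
param_grid = {"C": [1, 10, 100], "kernel": ["rbf", "poly"], "degree": [3]}
search = GridSearchCV(SVC(), param_grid, cv=3)
search.fit(X, y)
print(search.best_params_)

# The configuration reported above, passed directly to SVC.
optimal_svm = SVC(C=100, kernel="poly", degree=3)
optimal_svm.fit(X, y)
```

The best parameters found on the stand-in data need not match the configuration reported for MNIST.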

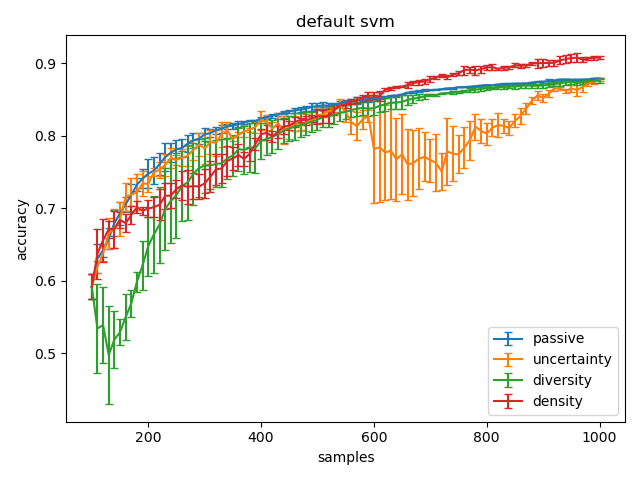

The active learning pipeline starts with 100 labeled samples and queries new samples in batches of 10 until it reaches 1000 samples.
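The schedule above can be sketched as a pool-based loop. This is a minimal sketch, not the code in `main.py`: it assumes scikit-learn's `SVC` with default settings, uses the bundled 8x8 digits as stand-in data, and picks samples by smallest margin between the top two class scores, which is one common form of uncertainty sampling. The names `START`, `END`, and `BATCH` are hypothetical.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.svm import SVC

# Stand-in data (assumption): bundled digits instead of the MNIST subset.
X, y = load_digits(return_X_y=True)
rng = np.random.default_rng(0)
order = rng.permutation(len(X))
pool_idx, test_idx = order[:1400], order[1400:]

START, END, BATCH = 100, 1000, 10  # schedule described above

labeled = list(pool_idx[:START])    # initial random labeled set
unlabeled = list(pool_idx[START:])  # remaining pool to query from
accuracy = []

while True:
    model = SVC().fit(X[labeled], y[labeled])
    accuracy.append(model.score(X[test_idx], y[test_idx]))
    if len(labeled) >= END:
        break
    # Margin between the two highest class scores; a small margin means uncertain.
    scores = model.decision_function(X[unlabeled])
    top2 = np.sort(scores, axis=1)[:, -2:]
    margin = top2[:, 1] - top2[:, 0]
    picked = set(np.argsort(margin)[:BATCH].tolist())
    # Move the queried samples from the pool to the labeled set.
    labeled.extend(u for i, u in enumerate(unlabeled) if i in picked)
    unlabeled = [u for i, u in enumerate(unlabeled) if i not in picked]

print(len(labeled), len(accuracy))
```

With these settings the model is retrained at every labeled-set size from 100 to 1000 in steps of 10, so `accuracy` holds one test score per size.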

In the default SVM, density sampling performs the best, uncertainty sampling is unstable, and diversity sampling performs the worst.

In the optimal SVM, density sampling and uncertainty sampling perform the best, and diversity sampling performs the worst.
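This README does not define the sampling strategies, so the sketch below shows common textbook formulations of two of them and may differ from what the project implements. It assumes density is scored as mean cosine similarity to the rest of the pool and diversity as the distance to the nearest already-labeled point. The function names `density_scores` and `diversity_scores` are hypothetical.

```python
import numpy as np

def density_scores(pool):
    """Mean cosine similarity of each pool point to the whole pool.

    Higher means the point lies in a denser region. Assumes no all-zero rows.
    """
    unit = pool / np.linalg.norm(pool, axis=1, keepdims=True)
    return (unit @ unit.T).mean(axis=1)

def diversity_scores(pool, labeled):
    """Euclidean distance from each pool point to its nearest labeled point.

    Higher means the point is less like anything already labeled.
    """
    diffs = pool[:, None, :] - labeled[None, :, :]
    return np.linalg.norm(diffs, axis=2).min(axis=1)

# Toy data: three points clustered near the x-axis and one outlier on the y-axis.
pool = np.array([[1.0, 0.0], [0.9, 0.1], [1.0, 0.1], [0.0, 5.0]])
labeled = np.array([[1.0, 0.0]])

density = density_scores(pool)
diversity = diversity_scores(pool, labeled)
print(density, diversity)
```

On the toy data the outlier gets the lowest density score and the highest diversity score, which illustrates why the two criteria can pull a query strategy in opposite directions.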

The animation shows how the decision boundaries of uncertainty sampling in the default SVM (left) and density sampling in the optimal SVM (right) change over iterations.