Towards Lighter and Faster: Learning Wavelets Progressively for Image Super-Resolution (accepted by ACMMM2020) [PAPER]

This repository is the official PyTorch implementation of our proposed WSR. The code was developed by supercaoO (Huanrong Zhang) based on SRFBN_CVPR19. Future updates will be released in supercaoO/WSR first.

We propose a lightweight and fast network that learns wavelet coefficients progressively for single image super-resolution (WSR). More specifically, the network comprises two main branches. One predicts the second-level low-frequency wavelet coefficients, and the other, designed in a recurrent way, predicts the remaining wavelet coefficients at the first and second levels. Finally, an inverse wavelet transformation reconstructs the SR images from these coefficients. In addition, we propose a deformable convolution kernel (side window) to construct the side-information multi-distillation block (S-IMDB), which is the basic unit of the recurrent blocks (RBs). Moreover, we train WSR with loss constraints in both the wavelet and spatial domains.
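To make the two-level coefficient layout concrete, here is a minimal pure-Python sketch of a two-level 2D wavelet decomposition and its inverse. It assumes the Haar wavelet, which is an illustrative choice; this README does not state which wavelet WSR uses, and the function names `haar_dwt2` and `haar_idwt2` are hypothetical helpers, not part of this repository. Two levels shrink each side by a factor of 4, which matches the 4× scale.

```python
def haar_dwt2(image):
    """One level of the 2D Haar transform; image is a list of rows with even height and width."""
    rows, cols = len(image) // 2, len(image[0]) // 2
    ll, lh, hl, hh = ([[0.0] * cols for _ in range(rows)] for _ in range(4))
    for i in range(rows):
        for j in range(cols):
            a, b = image[2 * i][2 * j], image[2 * i][2 * j + 1]
            c, d = image[2 * i + 1][2 * j], image[2 * i + 1][2 * j + 1]
            ll[i][j] = (a + b + c + d) / 2  # low-frequency (approximation) band
            lh[i][j] = (a + b - c - d) / 2  # detail band: top row minus bottom row
            hl[i][j] = (a - b + c - d) / 2  # detail band: left column minus right column
            hh[i][j] = (a - b - c + d) / 2  # detail band: diagonal difference
    return ll, (lh, hl, hh)


def haar_idwt2(ll, details):
    """Inverse of haar_dwt2: rebuild the image from one level of coefficients."""
    lh, hl, hh = details
    rows, cols = len(ll), len(ll[0])
    out = [[0.0] * (2 * cols) for _ in range(2 * rows)]
    for i in range(rows):
        for j in range(cols):
            s, p, q, r = ll[i][j], lh[i][j], hl[i][j], hh[i][j]
            out[2 * i][2 * j] = (s + p + q + r) / 2
            out[2 * i][2 * j + 1] = (s + p - q - r) / 2
            out[2 * i + 1][2 * j] = (s - p + q - r) / 2
            out[2 * i + 1][2 * j + 1] = (s - p - q + r) / 2
    return out


# A toy 8x8 "HR image"; for 4x SR the matching LR input would be 2x2.
hr = [[float((3 * i + 5 * j) % 17) for j in range(8)] for i in range(8)]

ll1, details1 = haar_dwt2(hr)   # first level: four 4x4 bands
ll2, details2 = haar_dwt2(ll1)  # second level: four 2x2 bands

# Rebuild from the second-level low-frequency band plus the remaining bands of both levels.
reconstructed = haar_idwt2(haar_idwt2(ll2, details2), details1)
max_error = max(abs(x - y) for row_x, row_y in zip(reconstructed, hr) for x, y in zip(row_x, row_y))
print(len(ll2), len(ll2[0]), max_error)  # band size at level two and the reconstruction error
```

In this sketch, `ll2` plays the role of the second-level low-frequency coefficients and `details1`/`details2` stand for the remaining coefficients. In the actual network these are predicted from the LR image rather than computed from an HR image.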

The RNN-based framework of our proposed 4× WSR. Note that the two recurrent blocks (RBs) share the same set of weights. Details of the proposed S-IMDB can be found in our main paper.

If you find our work useful in your research or publications, please consider citing:

@inproceedings{zhang2020wsr,
  author = {Zhang, Huanrong and Jin, Zhi and Tan, Xiaojun and Li, Xiying},
  title = {Towards Lighter and Faster: Learning Wavelets Progressively for Image Super-Resolution},
  booktitle = {Proceedings of the 28th ACM International Conference on Multimedia},
  year = {2020}
}

- cuda & cudnn

- Python 3

- PyTorch >= 1.0.0

- pytorch_wavelets

- tqdm

- cv2

- pandas

- skimage

- scipy == 1.0.0

- Matlab
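As a quick sanity check of the Python-side requirements above, the following sketch reports which modules can be located in the current environment. The module names are the import names from the list; the helper `check_modules` is a hypothetical convenience, not part of this repository, and it does not check versions, CUDA, cuDNN, or Matlab.

```python
from importlib.util import find_spec

# Import names taken from the requirements list; pip/conda package names can
# differ (for example, cv2 is provided by opencv-python and skimage by scikit-image).
REQUIRED_MODULES = ["torch", "pytorch_wavelets", "tqdm", "cv2", "pandas", "skimage", "scipy"]


def check_modules(names):
    """Return a dict mapping each module name to True if it can be located."""
    return {name: find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, found in check_modules(REQUIRED_MODULES).items():
        print(f"{name:18s} {'ok' if found else 'MISSING'}")
```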

- Clone this repository and cd to `WSR`: `git clone https://github.com/supercaoO/WSR.git`, then `cd WSR`.

- Check if the pre-trained model `WSR_x4_BI.pth` exists in `./models`.

- Then, run the following command for evaluation on Set5: `CUDA_VISIBLE_DEVICES=0 python test.py -opt options/test/test_WSR_Set5.json`

- Finally, the PSNR/SSIM values for Set5 are shown on your terminal, and you can find the reconstructed images in `./results/SR/BI`.
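The quick-test steps above can also be scripted. The sketch below checks for the pre-trained model and then launches `test.py` with a single visible GPU. The paths and the `-opt` flag come from the steps above; the helper names `build_test_command` and `run_quick_test` are hypothetical and not part of this repository.

```python
import os
import subprocess
import sys
from pathlib import Path

MODEL_PATH = Path("models") / "WSR_x4_BI.pth"
OPTION_FILE = "options/test/test_WSR_Set5.json"


def build_test_command(option_file):
    """Command line equivalent to `python test.py -opt <option_file>`."""
    return [sys.executable, "test.py", "-opt", option_file]


def run_quick_test(repo_root=".", gpu_id="0"):
    """Verify the checkpoint exists, then run test.py with one visible GPU."""
    repo_root = Path(repo_root)
    if not (repo_root / MODEL_PATH).is_file():
        raise FileNotFoundError(f"pre-trained model not found: {repo_root / MODEL_PATH}")
    # Same effect as prefixing the shell command with CUDA_VISIBLE_DEVICES=0.
    env = dict(os.environ, CUDA_VISIBLE_DEVICES=gpu_id)
    return subprocess.run(build_test_command(OPTION_FILE), cwd=repo_root, env=env, check=True)
```

Calling `run_quick_test()` from the repository root should behave like the shell command above. It raises `FileNotFoundError` early if the checkpoint is missing.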

- If you have cloned this repository, first download the SR benchmarks (Set5, Set14, B100, Urban100 and Manga109) from GoogleDrive (provided by SRFBN_CVPR19) or BaiduYun (code: p9pf).

- Run `./results/Prepare_TestData_HR_LR.m` in Matlab to generate HR/LR images with the BI degradation model.

- Edit `./options/test/test_WSR_x4_BI.json` for your needs according to `./options/test/README.md`.

- Then, run the commands: `cd WSR`, then `CUDA_VISIBLE_DEVICES=0 python test.py -opt options/test/test_WSR_x4_BI.json`

- Finally, the PSNR/SSIM values are shown on your terminal, and you can find the reconstructed images in `./results/SR/BI`. You can further evaluate the SR results using `./results/Evaluate_PSNR_SSIM.m`.
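For readers unfamiliar with the PSNR metric reported above, here is a minimal sketch of the standard definition, 10·log10(MAX² / MSE), on grayscale images stored as lists of rows. It is an illustration only: the repository's own evaluation code and `Evaluate_PSNR_SSIM.m` may differ, for example by evaluating on the luminance channel or cropping image borders, and neither of those steps is reproduced here.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """PSNR in dB between two equally sized grayscale images (lists of rows)."""
    if len(reference) != len(estimate) or any(len(r) != len(e) for r, e in zip(reference, estimate)):
        raise ValueError("images must have the same shape")
    squared_error = sum(
        (r - e) ** 2 for row_r, row_e in zip(reference, estimate) for r, e in zip(row_r, row_e)
    )
    num_pixels = sum(len(row) for row in reference)
    mse = squared_error / num_pixels
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


hr = [[52, 55, 61], [59, 79, 61], [76, 62, 59]]
sr = [[53, 54, 62], [58, 80, 60], [77, 61, 60]]  # every pixel is off by exactly 1, so MSE = 1
print(f"{psnr(hr, sr):.2f} dB")  # 10 * log10(255^2), about 48.13 dB
```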

- If you have cloned this repository, first place your own images in `./results/LR/MyImage`.

- Edit `./options/test/test_WSR_own.json` for your needs according to `./options/test/README.md`.

- Then, run the commands: `cd WSR`, then `CUDA_VISIBLE_DEVICES=0 python test.py -opt options/test/test_WSR_own.json`

- Finally, you can find the reconstructed images in `./results/SR/MyImage`.
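If your images live elsewhere, the first step above can be automated with a small copy helper. This is a sketch under stated assumptions: the helper `stage_images` is hypothetical, and the set of file extensions is a guess at what the test script accepts, not something this README specifies.

```python
import shutil
from pathlib import Path

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".bmp"}  # assumed set of accepted formats


def stage_images(source_dir, target_dir="results/LR/MyImage"):
    """Copy image files from source_dir into target_dir and return the copied paths."""
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    copied = []
    for path in sorted(Path(source_dir).iterdir()):
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES:
            copied.append(Path(shutil.copy2(path, target / path.name)))
    return copied
```

For example, `stage_images("/path/to/photos")` run from the repository root would populate `./results/LR/MyImage` before launching `test.py`.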

- Download the training set DIV2K from the official link or BaiduYun (code: m84q).

- Run `./scripts/Prepare_TrainData_HR_LR.m` in Matlab to generate HR/LR training pairs with the BI degradation model and the corresponding scale factor.

- Run `./results/Prepare_TestData_HR_LR.m` in Matlab to generate HR/LR test images with the BI degradation model and the corresponding scale factor, and choose one of the SR benchmarks for evaluation during training.

- Edit `./options/train/train_WSR.json` for your needs according to `./options/train/README.md`.

- Then, run the commands: `cd WSR`, then `CUDA_VISIBLE_DEVICES=0 python train.py -opt options/train/train_WSR.json`

- You can monitor the training process in `./experiments`.

- Finally, you can follow the Test Instructions to evaluate your model.

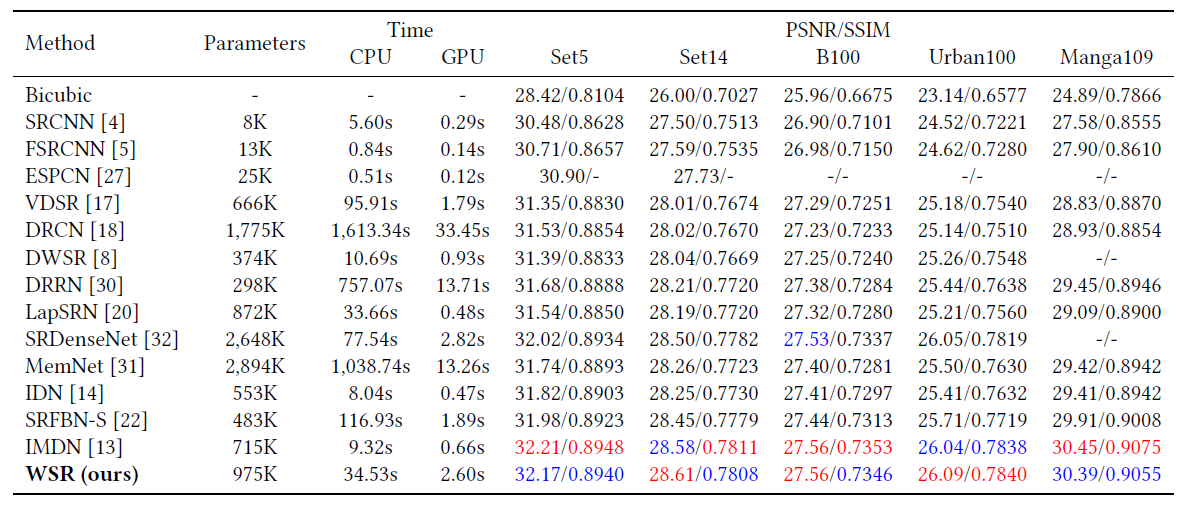

The inference time is measured on the B100 dataset (100 images) using an Intel(R) Xeon(R) Silver 4210 CPU @ 2.20GHz (CPU time) and an NVIDIA TITAN RTX GPU (GPU time).

Comparisons on the number of network parameters, inference time, and PSNR/SSIM of different 4× SR methods. Best and second best PSNR/SSIM results are marked in red and blue, respectively.
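The exact timing protocol behind the reported inference times is not described here. As a rough illustration, per-image time can be estimated by averaging wall-clock time over a set of inputs, as in the sketch below. The function `average_inference_time` and the stand-in workload are hypothetical. When timing a PyTorch model on a GPU, `torch.cuda.synchronize()` should be called before reading the clock, because CUDA kernels are launched asynchronously.

```python
import time


def average_inference_time(run_model, inputs, warmup=1):
    """Average wall-clock seconds per input for the callable run_model."""
    for item in inputs[:warmup]:
        run_model(item)  # warm-up calls are not timed
    start = time.perf_counter()
    for item in inputs:
        run_model(item)
    return (time.perf_counter() - start) / len(inputs)


# Stand-in workload so the sketch runs without a trained network.
fake_images = [list(range(1000)) for _ in range(100)]
seconds = average_inference_time(lambda image: sum(v * v for v in image), fake_images)
print(f"{seconds * 1e3:.3f} ms per image")
```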


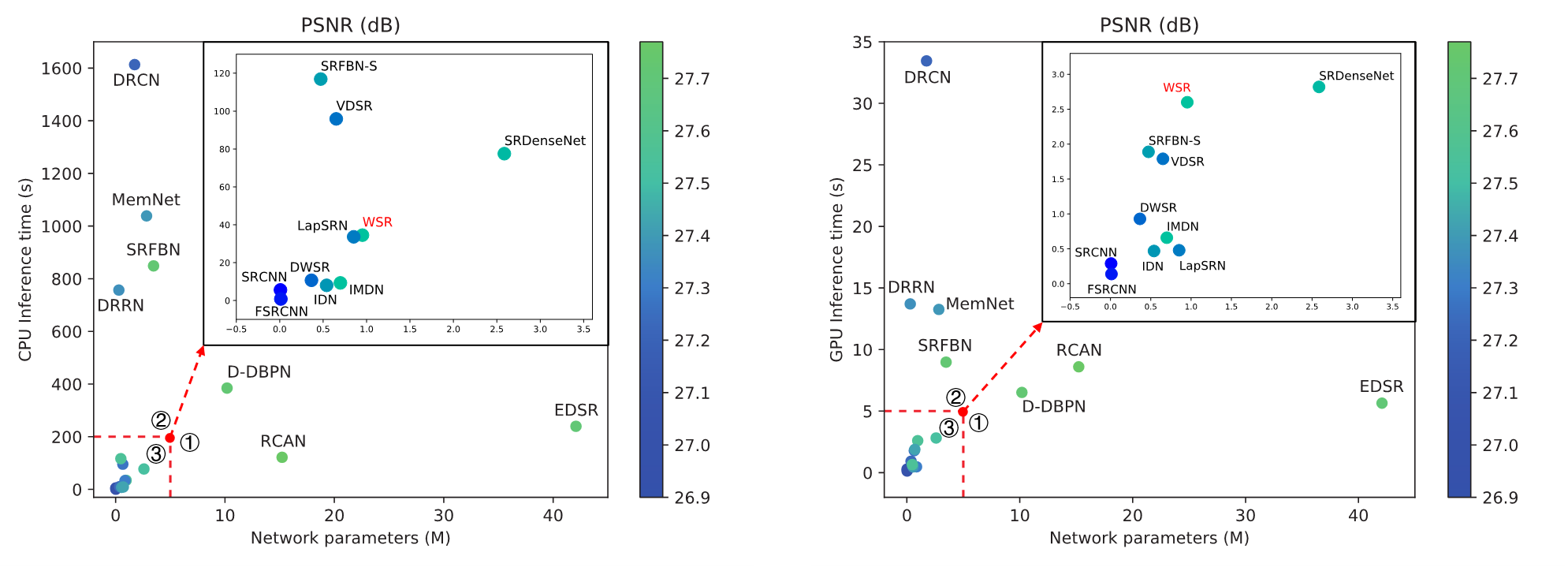

Comparisons on the number of network parameters and inference time of different 4× SR methods. Best results are highlighted. Notice that the compared methods achieve better PSNR/SSIM results than our WSR does.


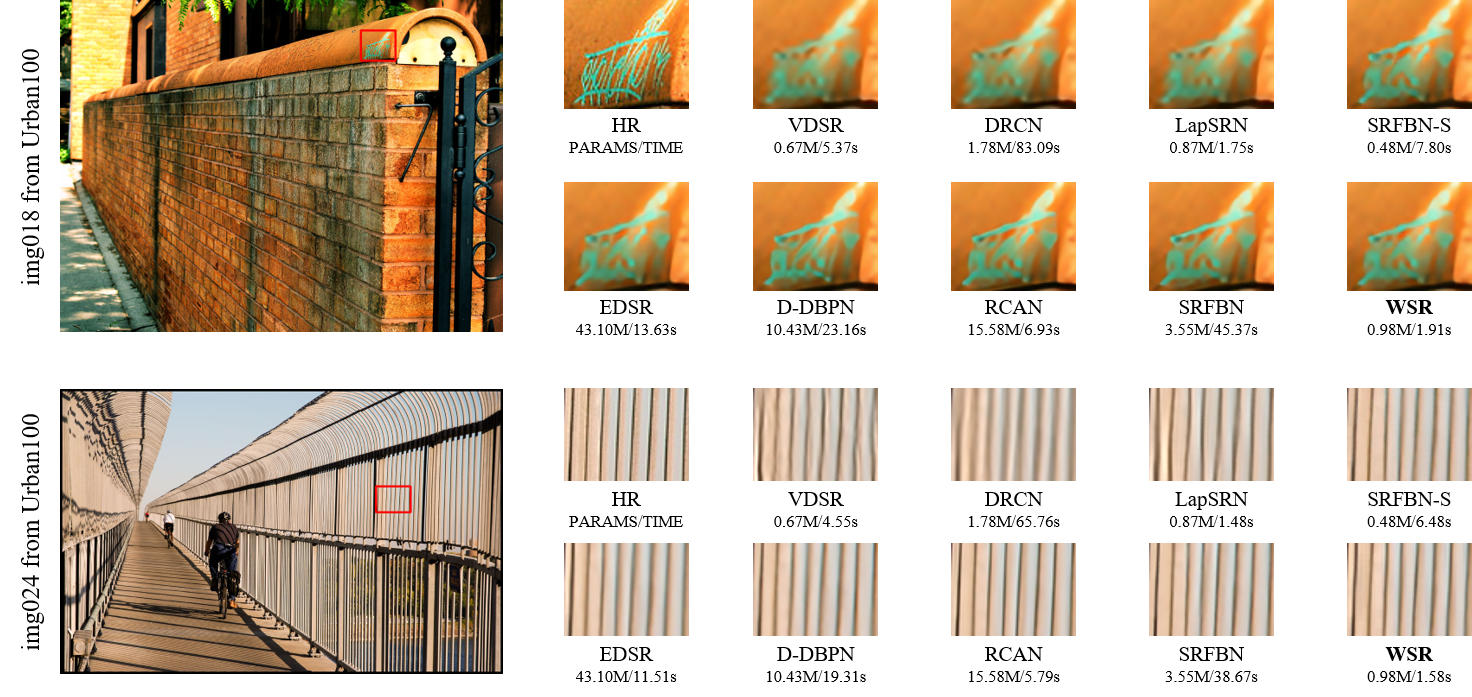

Visual comparisons with different advanced 4× SR methods on “img018” and “img024” from the Urban100 dataset. The inference time is CPU time.


Relationship between the number of network parameters, inference time, and reconstruction performance of different advanced 4× SR methods. The color represents the PSNR achieved by different 4× networks on the B100 dataset. The inference time in the left figure is CPU time and that in the right figure is GPU time.


- Option files for more scale factors (i.e., 2×, 8×, 16×).

- Thanks to Paper99. Our code structure is derived from his repository SRFBN_CVPR19.