!!! MOVED here https://gitlab.com/baptiste-dauphin/doc !!!

- System

- Network

- Security

- Software

- Databases

- Hardware

- Virtualization

- Kubernetes

- Provider

- CentOS

- ArchLinux

- Miscellaneous

- Definitions

- Media / Platform

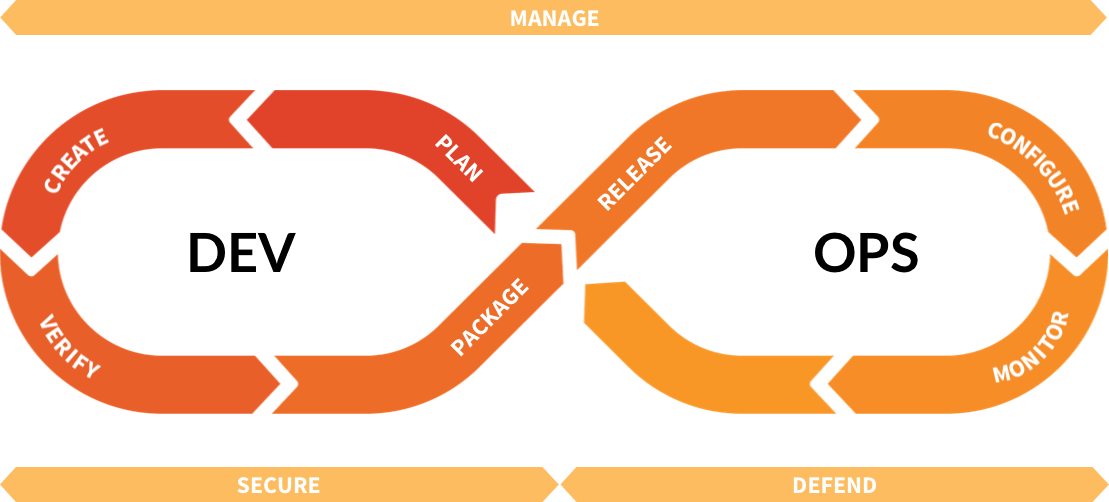

- DevOps

useradd -m -s /bin/bash b.dauphin

-m create home dir

-s shell path

echo 'root:toto' | chpasswd

or get a prompt to change your current user's password

passwd

# prompt...

switch to a user (default root)

su -

su - b.dauphin

To edit the sudoers file, use the proper tool, visudo, because the file is read-only even for root.

visudo -f /var/tmp/sudoers.new

visudo -f /etc/sudoers

visudo -c

/etc/sudoers: parsed OK

/etc/sudoers.d/dev: parsed OK

visudo -f /etc/sudoers.d/qwbind-dev -c

/etc/sudoers.d/qwbind-dev: parsed OK

Add user baptiste to the sudo group

usermod -aG sudo baptiste

usermod -aG wireshark b.dauphin

apt update

apt-cache search sendmail

apt-cache search --names-only 'icedtea?'

apt depends sendmail

apt-get clean

htop

nload

free -g

To sort by memory usage, use either the %MEM or the RSS column.

RSS (Resident Set Size) is the non-swapped physical memory a process uses, in kilobytes. %MEM shows the same information as a percentage of the total memory available.

ps aux --sort=+rss

ps aux --sort=%mem

Empty swap

swapoff -a && swapon -a

How to read memory usage in htop?

htop

- Hide user threads: shift + H

- Hide kernel threads: shift + K

- Close the process tree view: F5

- Then sort the processes of interest by PID and read the RES column

- Sort by MEM% by pressing shift + M, or press F3 to search in the command line

Total memory in kB, MB, and GB

grep MemTotal /proc/meminfo | awk '{print $2}'

grep MemTotal /proc/meminfo | awk '{print $2}' | xargs -I {} echo "scale=4; {}/1024^1" | bc

grep MemTotal /proc/meminfo | awk '{print $2}' | xargs -I {} echo "scale=4; {}/1024^2" | bc

Processing units available to the current process (may be less than all online)

nproc

all installed processors

nproc --all

old-fashioned version

grep -c ^processor /proc/cpuinfo

Default system software (Debian)

update-alternatives maintains the symbolic links that determine default commands.

List existing selections, then list the alternatives for the one you want to see

update-alternatives --get-selections

update-alternatives --list x-www-browser

Modify an existing selection interactively

sudo update-alternatives --config x-terminal-emulator

Create a new selection

update-alternatives --install /usr/bin/x-window-manager x-window-manager /usr/bin/i3 20

Changing the default terminal or browser opens an interactive prompt to choose among the recognized software

sudo update-alternatives --config x-terminal-emulator

sudo update-alternatives --config x-www-browser

- Graphic server (often X11, Xorg, or just X, it's the same software)

- Display Manager (SDDM, lightDM, gnome)

- Window Manager (i3-wm, gnome)

Simple Desktop Display Manager (SDDM) is a display manager for the X11 and Wayland windowing systems. SDDM was written from scratch in C++11 and supports theming via QML.

service sddm status

service sddm restart : restart sddm (to load a new monitor)

update-alternatives --install /usr/bin/x-window-manager x-window-manager /usr/bin/i3 20

https://i3wm.org/docs/userguide.html#_automatically_starting_applications_on_i3_startup

The > operator redirects output, usually to a file, but the target can also be a device. Use >> to append.

If you don't specify a number, the standard output stream is assumed, but you can also redirect errors:

- >file redirects stdout to file

- 1>file redirects stdout to file

- 2>file redirects stderr to file

- &>file redirects stdout and stderr to file

/dev/null is the null device: it accepts any input and throws it away. It can be used to suppress any output.

Is there a difference between > /dev/null 2>&1 and &> /dev/null?

Both send stdout and stderr to /dev/null. &> is a Bash shorthand that is not part of POSIX sh (its appending form &>> arrived in Bash 4); > /dev/null 2>&1 is the traditional, portable way and is easy to remember.
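A minimal sketch of the redirections described above. The helper function `emit` and the scratch directory are assumptions made for illustration; only the portable `>file 2>&1` form is used so the snippet also runs under plain sh.

```sh
# Hypothetical helper: writes one line to stdout and one to stderr.
emit() {
    echo "to stdout"
    echo "to stderr" >&2
}

workdir=$(mktemp -d)

emit >"$workdir/out.txt" 2>/dev/null      # keep stdout, discard stderr
emit 2>"$workdir/err.txt" >/dev/null      # keep stderr, discard stdout
emit >"$workdir/both.txt" 2>&1            # both streams into one file
emit >>"$workdir/out.txt" 2>/dev/null     # append instead of truncating
```

After this runs, out.txt holds the stdout line twice (the second run appended), err.txt holds only the stderr line, and both.txt holds both lines.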

Remove the characters ( and ) if found

.. | tr -d '()'

tar --help

| Command | meaning |

|---|---|

| -c | create (name your file .tar) |

| -(c)z | archive type gzip (name your file .tar.gz) |

| -(c)j | archive type bzip2 |

| -x | extract |

| -f | file |

| -v | verbose |

| -C | Set dir name to extract files |

| --directory | same |
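A small sketch combining the flags from the table: create a gzip archive, list it, and extract it into another directory. The scratch paths are assumptions for illustration; `-t` (list archive content) is not in the table above but is a standard tar flag.

```sh
workdir=$(mktemp -d)
mkdir -p "$workdir/src/dir1" "$workdir/restore"
echo "hello" >"$workdir/src/dir1/a.txt"

# -c create, -z gzip, -f archive name; -C changes directory before adding files
tar -czf "$workdir/myfiles.tar.gz" -C "$workdir/src" dir1

# -t lists the archive content without extracting
tar -tzf "$workdir/myfiles.tar.gz"

# -x extract, -C sets the directory to extract into
tar -xzf "$workdir/myfiles.tar.gz" -C "$workdir/restore"
```

Using -C on creation keeps the paths stored in the archive relative (dir1/a.txt) instead of embedding the full scratch path.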

By default, gunzip replaces file.gz with the decompressed file.

-c : write to standard output (STDOUT), keep original files unchanged

gunzip -c file.gz > file

Start at the end of a file

The + option runs an initial command when the file is opened; G jumps to the end

less +G app.log

Stream editor

| Cmd | meaning |

|---|---|

| sed -n | silent mode: print nothing by default. Use with the p command to print only the lines of interest |

| sed -i | edit the specified file in place instead of writing the result to the output stream |

| sed -f script_file | take instructions from a script file |

Example

Replace pattern 1 with pattern 2

sed -i 's/pattern 1/pattern 2/g' /etc/ssh/sshd_config

Strip the "Not After :" prefix from the input stream and print only the matching lines

... | sed -n 's/ *Not After : *//p'

| cmd | meaning |

|---|---|

| sed -i '342d' ~/.ssh/known_hosts | remove line 342 of the file |

| sed -i '342,342d' ~/.ssh/known_hosts | remove lines 342 to 342, equivalent to the previous command |

| sed -i '1,42d' test.sql | remove the first 42 lines of test.sql |
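A quick way to rehearse a line deletion before running it on a real file: preview the range with -n and p, then delete it with -i. This is a sketch on a throwaway file; the file content is made up, and -i without a suffix assumes GNU sed.

```sh
tmpfile=$(mktemp)
printf 'line1\nline2\nline3\nline4\nline5\n' >"$tmpfile"

sed -n '2,3p' "$tmpfile"     # preview lines 2 to 3, file is left untouched
sed -i '2,3d' "$tmpfile"     # delete lines 2 to 3 in place (GNU sed)

remaining=$(cat "$tmpfile")
echo "$remaining"
```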

common usage

find . -maxdepth 1 -type l -ls

find /opt -type f -mmin -5 -exec ls -ltr {} +

find /var/log/nginx -type f -name "*access*" -mmin +5 -exec ls -ltr {} +

find . -type f -mmin -5 -print0 | xargs -0 /bin/ls -ltr

| cmd | meaning |

|---|---|

| find -mtime n | last DATA MODIFICATION time (day) |

| find -atime n | last ACCESS time (day) |

| find -ctime n | last STATUS MODIFICATION time (day) |

"Modify" is the timestamp of the last time the file's content was modified. This is often called "mtime".

"Change" is the timestamp of the last time the file's inode has been changed, like by changing permissions, ownership, file name, number of hard links. It's often called "ctime".

List all files in the current directory last modified more than 10 days ago (+10), oldest first

find . -type f -mtime +10 -exec ls -ltr {} +

List all files in the current directory last modified less than 10 days ago (-10), oldest first

find . -type f -mtime -10 -exec ls -ltr {} +

List files last modified less than 5 minutes ago

find . -type f -mmin -5 -exec ls -ltr {} +

xargs reads items from the standard input, delimited by blanks (which can be protected with double or single quotes or a backslash) or newlines, and executes the command (default is /bin/echo) one or more times with any initial-arguments followed by items read from standard input. Blank lines on the standard input are ignored.

You can define the name of the received argument (from stdin). In the following example, the chosen name is %.

The following example takes all the .log files and moves them into a directory named 'working_sheet_of_the_day'

ls *.log | xargs -I % mv % ./working_sheet_of_the_day

compress

tar zcvf myfiles.tar.gz /dir1 /dir2 /dir3

extract (use -C to extract in a given directory)

tar zxvf somefilename.tar.gz (or .tgz)

tar jxvf somefilename.tar.bz2

tar xf file.tar -C /path/to/directory

| Command | meaning |

|---|---|

| file | get meta info about that file |

| tail -n 15 -f | print the last 15 lines of a file, then keep following new entries |

| head -n 15 | print the first 15 lines of a file |

| who | info about connected users |

| w | same with more info |

| wall | print on all TTY (for all connected user) |

| sudo updatedb | update the local database of files present in the filesystem |

| locate file_name | search this database |

| echo app.$(date +%Y_%m_%d) | print a string based on subshell return |

| touch app.$(date +%Y_%m_%d) | create empty file named on string based on subshell return |

| mkdir app.$(date +%Y_%m_%d) | create directory named on string based on subshell return |

| sh | run a 'sh' shell, very old shell |

| bash | run a 'bash' shell, classic shell of debian 7,8,9 |

| zsh | run a 'zsh' shell, new shell |

| for i in google.com free.fr wikipedia.de ; do dig $i +short ; done | resolve each domain in a loop |

| Operator | Description |

|---|---|

| ! EXPRESSION | The EXPRESSION is false. |

| -n STRING | The length of STRING is greater than zero. |

| -z STRING | The length of STRING is zero (i.e. it is empty). |

| STRING1 = STRING2 | STRING1 is equal to STRING2 |

| STRING1 != STRING2 | STRING1 is not equal to STRING2 |

| INTEGER1 -eq INTEGER2 | INTEGER1 is numerically equal to INTEGER2 |

| INTEGER1 -gt INTEGER2 | INTEGER1 is numerically greater than INTEGER2 |

| INTEGER1 -lt INTEGER2 | INTEGER1 is numerically less than INTEGER2 |

| -d FILE | FILE exists and is a directory. |

| -e FILE | FILE exists. |

| -f FILE | True if file exists AND is a regular file. |

| -r FILE | FILE exists and the read permission is granted. |

| -s FILE | FILE exists and its size is greater than zero (ie. it is not empty). |

| -w FILE | FILE exists and the write permission is granted. |

| -x FILE | FILE exists and the execute permission is granted. |

| -eq 0 | COMMAND result equal to 0 |

| $? | last exit code |

| $# | Number of parameters |

| $@ | expands to all the parameters |
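As an illustration of how several of these operators combine, here is a sketch of a small helper. The function name `describe_path` and the scratch files are made up for this example.

```sh
# Hypothetical helper: classify a path using the test operators from the table.
describe_path() {
    if [ -z "$1" ]; then
        echo "empty argument"
    elif [ ! -e "$1" ]; then
        echo "missing"
    elif [ -d "$1" ]; then
        echo "directory"
    elif [ -f "$1" ] && [ -s "$1" ]; then
        echo "non-empty file"
    elif [ -f "$1" ]; then
        echo "empty file"
    else
        echo "other"
    fi
}

tmpdir=$(mktemp -d)
: >"$tmpdir/empty"            # create an empty file
echo data >"$tmpdir/full"     # create a non-empty file

describe_path "$tmpdir"
describe_path "$tmpdir/empty"
describe_path "$tmpdir/full"
describe_path "$tmpdir/nope"
```

The four calls print directory, empty file, non-empty file, and missing, in that order.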

if [ -f /tmp/test.txt ];

then

echo "true";

else

echo "false";

fi

$ true && echo howdy!

howdy!

$ false || echo howdy!

howdy!

DIR="$( cd "$( dirname "${BASH_SOURCE[0]}" )" >/dev/null 2>&1 && pwd )"

DIR="$(dirname "$0")"

for i in `seq 1 6`

do

mysql -h 127.0.0.1 -u user --password=password -e "show variables like 'server_id'; select user()"

done

`...` is the legacy syntax required by only the very oldest of non-POSIX-compatible Bourne shells. There are several reasons to always prefer the $(...) syntax:

$ echo "`echo \\a`" "$(echo \\a)"

a \a

$ echo "`echo \\\\a`" "$(echo \\\\a)"

\a \\a

# Note that this is true for *single quotes* too!

$ foo=`echo '\\'`; bar=$(echo '\\'); echo "foo is $foo, bar is $bar"

foo is \, bar is \\

echo "x is $(sed ... <<<"$y")"

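In this example, the quotes around $y are treated as a pair because they are inside $(...). With backticks, the inner quotes have to be escaped: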

echo "x is `sed ... <<<\"$y\"`"

x=$(grep "$(dirname "$path")" file)

x=`grep "\`dirname \"$path\"\`" file`
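To make the nesting point concrete, here is a minimal sketch with a made-up path. Each $(...) level has its own quoting context, so nothing needs escaping even at two levels of nesting.

```sh
path=/var/log/nginx/access.log    # example path, assumed for illustration

# One level of nesting: name of the directory that contains the file.
parent=$(basename "$(dirname "$path")")

# Two levels of nesting: name of the directory above that one.
grandparent=$(basename "$(dirname "$(dirname "$path")")")

echo "$parent $grandparent"
```

This prints nginx log.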

Be very careful about the context in which they are defined

Set a variable for the current shell and its child processes

export http_proxy=http://10.10.10.10:9999

echo $http_proxy

should print the value

Set a variable only for the execution of the current line

http_proxy=http://10.10.10.10:9999 wget -O - https://repo.saltstack.com/apt/debian/9/amd64/latest/SALTSTACK-GPG-KEY.pub

echo $http_proxy

will return nothing because the variable no longer exists
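The three scopes can be checked side by side. This is a sketch using a made-up variable name, `DEMO_PROXY`, so that it does not touch any real proxy setting; each `sh -c` stands in for a child process such as wget.

```sh
unset DEMO_PROXY

# Plain assignment: visible in this shell, not passed to child processes.
DEMO_PROXY=http://10.10.10.10:9999
child_sees_plain=$(sh -c 'echo "${DEMO_PROXY:-unset}"')

# export: child processes inherit the variable.
export DEMO_PROXY
child_sees_exported=$(sh -c 'echo "${DEMO_PROXY:-unset}"')

# Prefix assignment: set only in the environment of that one command.
unset DEMO_PROXY
child_sees_prefix=$(DEMO_PROXY=http://10.10.10.10:9999 sh -c 'echo "${DEMO_PROXY:-unset}"')
after_prefix=${DEMO_PROXY:-unset}

echo "plain: $child_sees_plain"
echo "exported: $child_sees_exported"
echo "prefix: $child_sees_prefix, afterwards: $after_prefix"
```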

Export multiple env vars

export {http,https,ftp}_proxy="http://10.10.10.10:9999"

Useful common usage

export http_proxy=http://10.10.10.10:9999/

export https_proxy=$http_proxy

export ftp_proxy=$http_proxy

export rsync_proxy=$http_proxy

export no_proxy="localhost,127.0.0.1,localaddress,.localdomain.com"

Remove variable

unset http_proxy

unset http_proxy https_proxy HTTP_PROXY HTTPS_PROXY

Debian style (standard UNIX syntax)

ps -ef

ps -o pid,user,%mem,command ax

Get the parent PID of a given PID

ps -o ppid= -p 750

ps -o ppid= -p $(pidof systemd)

RedHat style (BSD syntax)

ps aux

kill sends TERM by default

kill -l : list all signals

kill -l 15 : get the name of a signal

kill -s TERM PID

kill -TERM PID

kill -15 PID

| shortcut | meaning |

|---|---|

| ctrl + \ | SIGQUIT |

| ctrl + C | SIGINT |

| Number (Linux x86/ARM) | Name (short name) | Description | Used for |

|---|---|---|---|

| 0 | SIGNULL (NULL) | Null | Check access to pid |

| 1 | SIGHUP (HUP) | Hangup | Terminate; can be trapped |

| 2 | SIGINT (INT) | Interrupt | Terminate; can be trapped |

| 3 | SIGQUIT (QUIT) | Quit | Terminate with core dump; can be trapped |

| 9 | SIGKILL (KILL) | Kill | Forced termination; cannot be trapped |

| 15 | SIGTERM (TERM) | Terminate | Terminate; can be trapped. This is the default if no signal is provided to the kill command. |

| 19 | SIGSTOP (STOP) | Stop | Pause the process; cannot be trapped |

| 20 | SIGTSTP (TSTP) | Terminal stop | Pause the process; can be trapped |

| 18 | SIGCONT (CONT) | Continue | Run a stopped process |
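To illustrate what "can be trapped" means in practice, here is a sketch in which a child shell installs a handler for TERM and exits cleanly when it receives the signal. The child script, its message, and the temporary file are assumptions made for this example.

```sh
# Child script: installs a TERM handler, then loops until signalled.
child_script='trap "echo got TERM; exit 0" TERM; while :; do sleep 1; done'

result_file=$(mktemp)
sh -c "$child_script" >"$result_file" &
child_pid=$!

sleep 1                        # give the child time to install its trap
kill -s TERM "$child_pid"      # same as kill -15 PID, or plain kill PID
wait "$child_pid"
child_status=$?

echo "child said: $(cat "$result_file"), exit status: $child_status"
```

The handler runs once the current sleep in the child returns, so the wait can take up to a second. Replacing TERM with KILL in the kill command would end the child without running the handler, since SIGKILL cannot be trapped.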

xeyes &

jobs -l

kill -s STOP 3405

jobs -l

kill -s CONT 3405

jobs -l

kill -s TERM 3405

List the PIDs of running ssh-agent processes

ps -ef | grep ssh-agent | awk '{print $2}'

ps -ef | grep ssh-agent | awk '$0=$2'

Print only the process IDs of syslogd:

ps -C syslogd -o pid=

Print only the name of PID 42:

ps -q 42 -o comm=

To see every process running as root (real & effective ID) in user format:

ps -U root -u root u

Get the PID (process identifier) of a running process

pidof iceweasel

pgrep ssh-agent

diff <(cat /etc/passwd) <(cut -f2 /etc/passwd)

<(...) is called process substitution. It converts the output of a command into a file-like object that diff can read from. While process substitution is not POSIX, it is supported by bash, ksh, and zsh.

User's IPC shared memory, semaphores, and message queues

Type of IPC object. Possible values are:

q -- message queue

m -- shared memory

s -- semaphore

USERNAME=$1

TYPE=$2

ipcs -"$TYPE" | grep "$USERNAME" | awk '{ print $2 }' | xargs -I {} ipcrm -"$TYPE" {}

ipcs -s | grep zabbix | awk '{ print $2 }' | xargs -I {} ipcrm -s {}

Unix File types

| Description | symbol |

|---|---|

| Regular file | - |

| Directory | d |

| Special files | (5 sub types in it) |

| block file | b |

| Character device file | c |

| Named pipe file or just a pipe file | p |

| Symbolic link file | l |

| Socket file | s |
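The symbol in the table is the first character of the mode string printed by ls -l. A small sketch that extracts it; the helper name `file_type_symbol` and the scratch files are made up for illustration.

```sh
# Hypothetical helper: print the file type symbol of a path.
file_type_symbol() {
    ls -ld -- "$1" | cut -c1
}

tmp=$(mktemp -d)
: >"$tmp/regular"
ln -s "$tmp/regular" "$tmp/link"
mkfifo "$tmp/pipe"

file_type_symbol "$tmp"            # directory
file_type_symbol "$tmp/regular"    # regular file
file_type_symbol "$tmp/link"       # symbolic link
file_type_symbol "$tmp/pipe"       # named pipe
```

The four calls print d, -, l, and p, matching the table.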

df -h

du -sh --exclude=relative/path/to/uploads --exclude other/path/to/exclude

du -hsx --exclude=/{proc,sys,dev} /*

lsblk

List the physical disks, then mount them on your filesystem

lsblk

fdisk -l

sudo mount /dev/sdb1 /mnt/usb

List filesystems mounted read-only

awk '$4~/(^|,)ro($|,)/' /proc/mounts

umount /mnt

If the device is still in use when you do so, you will get the "umount: /mnt: device is busy." error as shown below.

umount /mnt

umount: /mnt: device is busy.

(In some cases useful info about processes that use

the device is found by lsof(8) or fuser(1))

Use the fuser command to find out which process is accessing the device, along with the user name.

fuser -mu /mnt/

/mnt/: 2677c(sathiya)

- fuser : command used to identify processes using the files / directories

- -m : specify the directory or block device; lists all the processes using it

- -u : show the owner of the process

You have two choices here.

- Ask the owner of the process to properly terminate it, or

- Kill the process with superuser privileges and unmount the device.

When you cannot wait to properly unmount a busy device, use umount -f as shown below.

umount -f /mnt

If it still doesn't work, a lazy unmount should do the trick. Use umount -l as shown below.

umount -l /mnt

When remote access to a machine is lost.

Reboot the system

press e to edit grub

After editing grub, add this at the end of linux line

init=/bin/bash

grub config extract

menuentry 'Debian GNU/Linux, with Linux 4.9.0-8-amd64' {

load_video

insmod gzio

if [ x$grub_platform = xxen ]; then insmod xzio; insmod lzopio; fi

insmod part_gpt

insmod ext2

...

...

...

echo 'Loading Linux 4.9.0-8-amd64 ...'

linux /vmlinuz-4.9.0-8-amd64 root=/dev/mapper/debian--baptiste--vg-root ro quiet

echo 'Loading initial ramdisk ...'

initrd /initrd.img-4.9.0-8-amd64

}

Change this line

linux /vmlinuz-4.9.0-8-amd64 root=/dev/mapper/debian--baptiste--vg-root ro quiet

into this

linux /vmlinuz-4.9.0-8-amd64 root=/dev/mapper/debian--baptiste--vg-root rw quiet init=/bin/bash

Press F10 to boot with the current config

Make the root filesystem writable (unnecessary if you changed 'ro' to 'rw')

mount -n -o remount,rw /

Make your modifications

passwd user_you_want_to_modify

# or

vim /etc/iptables/rules.v4

Exit the prompt and reboot the computer.

exec /sbin/init

fsck.ext4 /dev/mapper/vg_data-lv_data

e2fsck 1.43.4 (31-Jan-2017)

/dev/mapper/VgData-LvData contains a file system with errors, check forced.

Pass 1: Checking inodes, blocks, and sizes

Pass 2: Checking directory structure

Pass 3: Checking directory connectivity

Pass 4: Checking reference counts

Pass 5: Checking group summary information

ln -sfTv /opt/app_$TAG /opt/app_current

List open files, filter by deleted

Very useful when the results of df -h and du -sh /* disagree

It may happen that you remove a file while another process still holds a file descriptor on it. From the filesystem's point of view, the space is then not released.

lsof -nP | grep '(deleted)'

Old system control, replaced by systemd since Debian 8

Also known as SystemV, the old-fashioned way, preferred by some people because of the full control provided by a directly modifiable bash script located under /etc/init.d/

usage

service rsyslog status

change process management

vim /etc/init.d/rsyslog

Introduced in Debian 8

Based on internal, templated management. The only way to interact with systemd is by modifying the instructions (but not the code directly) in the service file.

They can be located in different directories.

Where are Systemd Unit Files Found?

The files that define how systemd will handle a unit can be found in many different locations, each of which have different priorities and implications.

The system’s copy of unit files are generally kept in the /lib/systemd/system directory. When software installs unit files on the system, this is the location where they are placed by default.

Unit files stored here are able to be started and stopped on-demand during a session. This will be the generic, vanilla unit file, often written by the upstream project’s maintainers that should work on any system that deploys systemd in its standard implementation. You should not edit files in this directory. Instead you should override the file, if necessary, using another unit file location which will supersede the file in this location.

If you wish to modify the way that a unit functions, the best location to do so is within the /etc/systemd/system directory. Unit files found in this directory location take precedence over any of the other locations on the filesystem. If you need to modify the system’s copy of a unit file, putting a replacement in this directory is the safest and most flexible way to do this.

If you wish to override only specific directives from the system’s unit file, you can actually provide unit file snippets within a subdirectory. These will append or modify the directives of the system’s copy, allowing you to specify only the options you want to change.

The correct way to do this is to create a directory named after the unit file with .d appended on the end. So for a unit called example.service, a subdirectory called example.service.d could be created. Within this directory a file ending with .conf can be used to override or extend the attributes of the system’s unit file.

There is also a location for run-time unit definitions at /run/systemd/system. Unit files found in this directory have a priority landing between those in /etc/systemd/system and /lib/systemd/system. Files in this location are given less weight than the former location, but more weight than the latter.

The systemd process itself uses this location for dynamically created unit files created at runtime. This directory can be used to change the system’s unit behavior for the duration of the session. All changes made in this directory will be lost when the server is rebooted.

Summary

| Location | override/supersede priority (1 = highest precedence) | Meaning |

|---|---|---|

| /etc/systemd/system directory | 1 | SysAdmin maintained |

| /run/systemd/system | 2 | Run-time only, lost after reboot |

| /lib/systemd/system directory | 3 | Packages vendor maintained (apt, rpm, pacman, ...) |
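A sketch of the drop-in mechanism described above: a .conf snippet placed in a directory named after the unit with .d appended. The unit name example.service comes from the text; the file name override.conf, the Environment value, and the `UNIT_DIR` variable are assumptions for illustration. `UNIT_DIR` defaults to a scratch directory so the snippet can run unprivileged; on a real host it would be /etc/systemd/system.

```sh
# Write a drop-in override for a unit without touching the vendor file.
UNIT_DIR=${UNIT_DIR:-$(mktemp -d)}
unit=example.service
dropin_dir="$UNIT_DIR/$unit.d"

mkdir -p "$dropin_dir"
cat >"$dropin_dir/override.conf" <<'EOF'
[Service]
Environment="HTTP_PROXY=http://10.10.10.10:9999"
EOF

echo "wrote $dropin_dir/override.conf"

# On a real host, reload and restart afterwards:
#   systemctl daemon-reload && systemctl restart example.service
```

Only the directives listed in the snippet are overridden; everything else still comes from the unit file under /lib/systemd/system.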

# show all installed unit files

systemctl list-unit-files --type=service

# loaded

systemctl list-units --type=service --state=loaded

# active

systemctl list-units --type=service --state=active

# running

systemctl list-units --type=service --state=running

# show a specific property (service var value)

systemctl show --property=Environment docker

# print all content

systemctl show docker --no-pager | grep proxy

syslog-ng is a syslog implementation which can take log messages from sources and forward them to destinations, based on powerful filter directives.

Note: With systemd's journal (journalctl), syslog-ng is not needed by most users.

If you wish to use both the journald and syslog-ng files, ensure the following settings are in effect. For systemd-journald, in the /etc/systemd/journald.conf file, Storage= either set to auto or unset (which defaults to auto) and ForwardToSyslog= set to no or unset (defaults to no). For /etc/syslog-ng/syslog-ng.conf, you need the following source stanza:

source src {

# syslog-ng

internal();

# systemd-journald

system();

};

A very good overview, official doc Still a very good ArchLinux tutorial

Starting with syslog-ng version 3.6.1 the default system() source on Linux systems using systemd uses journald as its standard system() source.

Typically

- systemd-journald

  - stores messages from the units that it manages: sshd.service, unit.{service,slice,socket,scope,path,timer,mount,device,swap}

- syslog-ng

  - reads INPUT messages from systemd-journald

  - writes OUTPUT to various files under /var/log/*

Examples from default config:

log { source(s_src); filter(f_auth); destination(d_auth); };

log { source(s_src); filter(f_cron); destination(d_cron); };

log { source(s_src); filter(f_daemon); destination(d_daemon); };

log { source(s_src); filter(f_kern); destination(d_kern); };

journalctl is a command for viewing logs collected by systemd. The systemd-journald service is responsible for systemd’s log collection, and it retrieves messages from the kernel, systemd services, and other sources.

These logs are gathered in a central location, which makes them easy to review. The log records in the journal are structured and indexed, and as a result journalctl is able to present your log information in a variety of useful formats.

journalctl

journalctl -r

Each line starts with the date (in the server’s local time), followed by the server’s hostname, the process name, and the message for the log

journalctl --priority=0..3 --since "12 hours ago"

- -u --unit=UNIT

- --user-unit=UNIT

- --no-pager

- --list-boots

- -b --boot[=ID]

- -e --pager-end

- -f --follow

- -p --priority=RANGE

0: emerg

1: alert

2: crit

3: err

4: warning

5: notice

6: info

7: debug

| Key command | Action |

|---|---|

| down arrow key, enter, e, or j | Move down one line. |

| up arrow key, y, or k | Move up one line. |

| space bar | Move down one page. |

| b | Move up one page. |

| right arrow key | Scroll horizontally to the right. |

| left arrow key | Scroll horizontally to the left. |

| g | Go to the first line. |

| G | Go to the last line. |

| 10g | Go to the 10th line. Enter a different number to go to other lines. |

| 50p or 50% | Go to the line half-way through the output. Enter a different number to go to other percentage positions. |

| /search term | Search forward from the current position for the search term string. |

| ?search term | Search backward from the current position for the search term string. |

| n | When searching, go to the next occurrence. |

| N | When searching, go to the previous occurrence. |

| m<c> | Set a mark, which saves your current position. Enter a single character in place of <c> to label the mark with that character. |

| '<c> | Return to a mark, where <c> is the single character label for the mark. Note that ' is the single-quote. |

| q | Quit less |

journalctl --no-pager

It’s not recommended that you do this without first filtering down the number of logs shown.

journalctl --since "2018-08-30 14:10:10"

journalctl --until "2018-09-02 12:05:50"

journalctl --list-boots

journalctl -b -2

journalctl -b

journalctl -u ssh

journalctl -k

| Format Name | Description |

|---|---|

| short | The default option, displays logs in the traditional syslog format. |

| verbose | Displays all information in the log record structure. |

| json | Displays logs in JSON format, with one log per line. |

| json-pretty | Displays logs in JSON format across multiple lines for better readability. |

| cat | Displays only the message from each log without any other metadata. |

journalctl -o json-pretty

systemd-journald can be configured to persist your systemd logs on disk, and it also provides controls to manage the total size of your archived logs. These settings are defined in /etc/systemd/journald.conf.

To start persisting your logs, uncomment the Storage line in /etc/systemd/journald.conf and set its value to persistent. Your archived logs will be held in /var/log/journal. If this directory does not already exist in your file system, systemd-journald will create it.

systemctl restart systemd-journald

The following settings in journald.conf control how large your logs’ size can grow to when persisted on disk:

| Setting | Description |

|---|---|

| SystemMaxUse | The total maximum disk space that can be used for your logs. |

| SystemKeepFree | The minimum amount of disk space that should be kept free for uses outside of systemd-journald’s logging functions. |

| SystemMaxFileSize | The maximum size of an individual journal file. |

| SystemMaxFiles | The maximum number of journal files that can be kept on disk. |

systemd-journald will respect both SystemMaxUse and SystemKeepFree, and it will set your journals’ disk usage to meet whichever setting results in a smaller size.

journalctl -u systemd-journald

journalctl --disk-usage

journalctl --verify

journalctl offers functions for immediately removing archived journals on disk. Run journalctl with the --vacuum-size option to remove archived journal files until the total size of your journals is less than the specified amount. For example, the following command will reduce the size of your journals to 2GiB:

journalctl --vacuum-size=2G

Run journalctl with the --vacuum-time option to remove archived journal files with dates older than the specified relative time. For example, the following command will remove journals older than one year:

journalctl --vacuum-time=1years

#### Logger

To write into the journal

logger -n syslog.baptiste-dauphin.com --rfc3164 --tcp -P 514 -t 'php95.8-fpm' -p local7.error 'php-fpm error test'

logger -n syslog.baptiste-dauphin.com --rfc3164 --udp -P 514 -t 'sshd' -p local7.info 'sshd error : test '

logger -n syslog.baptiste-dauphin.com --rfc3164 --udp -P 514 -t 'sshd' -p auth.info 'sshd error : test'

for ((i=0; i < 10; ++i)); do logger -n syslog.baptiste-dauphin.com --rfc3164 --tcp -P 514 -t 'php95.8-fpm' -p local7.error 'php-fpm error test' ; done

salt -C 'G@app:api and G@env:production and G@client:mattrunks' \

cmd.run "for ((i=0; i < 10; ++i)); do logger -n syslog.baptiste-dauphin.com --rfc3164 --tcp -P 514 -t 'php95.8-fpm' -p local7.error 'php-fpm error test' ; done" \

shell=/bin/bash

logger '@cim: {"name1":"value1", "name2":"value2"}'

Some good explanations ArchLinux iptables good explanations

iptables-save

iptables-save > /etc/iptables/rules.v4

iptables -L

iptables -nvL

iptables -nvL INPUT

iptables -nvL OUTPUT

iptables -nvL PREROUTING

The default Linux iptables chain policy is ACCEPT for the INPUT, FORWARD, and OUTPUT chains. You can change this default policy to DROP with the commands listed below.

iptables -P INPUT DROP

iptables -P FORWARD DROP

iptables -P OUTPUT DROP

iptables --policy INPUT DROP

iptables -P chain target [options] --policy -P chain target

--append -A chain Append to chain

--check -C chain Check for the existence of a rule

--delete -D chain Delete matching rule from chain

iptables --list Print rules in human readable format

iptables --list-rules Print rules in iptables readable format

iptables -v -L -n

iptables -A OUTPUT -d 10.10.10.10/32 -p tcp -m state --state NEW -m tcp --match multiport --dports 4506:10000 -j ACCEPT

iptables -t raw -I PREROUTING -j NOTRACK

iptables -t raw -I OUTPUT -j NOTRACK

iptables -A INPUT -j LOG --log-prefix "INPUT:DROP:" --log-level 6

iptables -A INPUT -j DROP

iptables -P INPUT DROP

iptables -A OUTPUT -j LOG --log-prefix "OUTPUT:DROP:" --log-level 6

iptables -A OUTPUT -j DROP

iptables -P OUTPUT DROP

To add a new rule, you have to temporarily REMOVE the final LOG and DROP lines; otherwise, your new line is appended after them and never matches.

iptables -D INPUT -j LOG --log-prefix "INPUT:DROP:" --log-level 6

iptables -D INPUT -j DROP

iptables -A INPUT -p udp -m udp --sport 123 -j ACCEPT

iptables -A INPUT -j LOG --log-prefix "INPUT:DROP:" --log-level 6

iptables -A INPUT -j DROP

Debian 8 and earlier: get info about connection tracking, current and max

cat /proc/sys/net/netfilter/nf_conntrack_count

cat /proc/sys/net/netfilter/nf_conntrack_max

Debian 9, with a wrapper, easier to use!

conntrack -L [table] [options] [-z]

conntrack -G [table] parameters

conntrack -D [table] parameters

conntrack -I [table] parameters

conntrack -U [table] parameters

conntrack -E [table] [options]

conntrack -F [table]

conntrack -C [table]

conntrack -S

Packages providing these binaries: ifconfig, netstat, rarp, route, ip, dig

apt install net-tools iproute2 dnsutils

| Command | meaning |

|---|---|

| ip a | get IP of the system |

| ip r | get routes of the system |

| ip route change default via 99.99.99.99 dev ens8 proto dhcp metric 100 | modify default route |

| ip addr add 88.88.88.88/32 dev ens4 | add (failover) IP to a NIC |

New Ubuntu network manager (netplan)

cat /{lib,etc,run}/netplan/*.yaml

(old way)

| command | specification |

|---|---|

| netstat -t | list tcp connections |

| netstat -lt | list listening tcp socket |

| netstat -lu | list listening udp socket |

| netstat -ltu | list listening udp + tcp socket |

| netstat -lx | list listening unix socket |

| netstat -ltup | same as above, with info on process |

| netstat -ltupn | p(PID), l(LISTEN), t(tcp), n(Convert names) |

| netstat -ltpa | all = ESTABLISHED (default) LISTEN |

| netstat -lapute | classic useful usage |

| netstat -salope | same |

| netstat -tupac | same |

(new quicker way)

| command | specification |

|---|---|

| ss -tulipe | more info on listening process |

| ss -tlpn | print listening tcp sockets with their process |

ss -ltpn sport eq 2377

ss -t '( sport = :ssh )'

ss -ltn sport gt 500

ss -ltn sport le 500

Real time, just see what's going on, by looking at all interfaces.

tcpdump -i any -w capturefile.pcap

tcpdump port 80 -w capture_file

tcpdump 'tcp[32:4] = 0x47455420'

tcpdump -n dst host ip

tcpdump -vv -i any port 514

tcpdump -i any -XXXvvv src net 10.0.0.0/8 and dst port 1234 or dst port 4321 | ccze -A

tcpdump -i any port not ssh and port not domain and port not zabbix-agent | ccze -A

https://danielmiessler.com/study/tcpdump/

tcpdump -i lo udp port 123 -vv -X

tcpdump -vv -x -X -s 1500 -i any 'port 25' | ccze -A

https://danielmiessler.com/study/tcpdump/#source-destination

tcpflow -c port 443

lsof -Pan -p $PID -i

# ss version

ss -l -p -n | grep ",1234,"

Debian 9 new network management style

vim /etc/systemd/network/50-default.network

systemctl status systemd-networkd

systemctl restart systemd-networkd

old fashioned network management style

vlan tagging and route add

auto enp61s0f1.3200

iface enp61s0f1.3200 inet static

address 10.10.10.20/22

vlan-raw-device enp61s0f1

post-up ip route add 10.0.0.0/8 via 10.10.10.254

# with package "ifupdown"

auto eth0

iface eth0 inet static

address 192.0.2.7/30

gateway 192.0.2.254

Activate NAT (Network Address Translation)

iptables -t nat -A POSTROUTING -s 10.0.0.0/24 -o eth0 -j MASQUERADE

With OpenVPN

Run OpenVpn client in background, immune to hangups, with output to a non-tty

cd /home/baptiste/.openvpn && \

nohup sudo openvpn /home/baptiste/.openvpn/b_dauphin@vpn.domain.com.ovpn

Netcat (network catch), the TCP/IP Swiss army knife

nc -l 127.0.0.1 -p 80

nc -lvup 514

# listen all ip on tcp port 443

nc -lvtp 443

Only for TCP (obviously); UDP is not a connection-oriented protocol

nc -znv 10.10.10.10 3306

echo '<187>Apr 29 15:26:16 qwarch plop[12458]: baptiste' | nc -u 10.10.10.10 1514

Display your public AND private keys from the gpg-agent keyring

gpg --list-keys

gpg --list-secret-keys

How to generate gpg public/private key pair

vault login -method=ldap username=$USER

Will set up a token under ~/.vault-token

By default, ssh reads from stdin. When ssh runs in the background or in a script, we need to redirect /dev/null into stdin.

Here is what we can do.

ssh shadows.cs.hut.fi "uname -a" < /dev/null

ssh -n shadows.cs.hut.fi "uname -a"

Will generate an output file containing 1 IP / line

for minion in minion1 minion2 database_dev random_id debian minion3; do

ipam "$minion" | tail -n 1 | awk '{print $1}' >> minions.list

done

Run the SSH connection tests in parallel; each one just connects and exits

while read -r minion_ip; do

(ssh -n "$minion_ip" exit &&

echo Success ||

echo CONNECTION_ERROR) &

done < minions.list
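The parallel loop above prints only Success or CONNECTION_ERROR, without saying which host it refers to, and it returns before the background jobs have finished. A sketch of a variant that labels each result and waits for all checks; `check_hosts` and `probe` are hypothetical helper names, and the `BatchMode` and `ConnectTimeout` ssh options are an assumption about what you want in a non-interactive test:

```shell
# check_hosts FILE CMD...: run "CMD... host" for every host listed in FILE,
# in parallel, and print one labelled result line per host.
check_hosts() {
    list=$1
    shift
    while read -r host; do
        [ -n "$host" ] || continue          # skip empty lines
        (
            if "$@" "$host" </dev/null >/dev/null 2>&1; then
                echo "$host : connection succeeded"
            else
                echo "$host : connection failed"
            fi
        ) &
    done < "$list"
    wait                                    # block until every check is done
}

# Probe used for real hosts: connect, run "exit", never prompt for a password.
probe() {
    ssh -n -q -o BatchMode=yes -o ConnectTimeout=5 "$1" exit
}

# Usage: check_hosts minions.list probe
```

Because the checks run concurrently, the result lines come back in no particular order; pipe the output through `sort` if you need it stable.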

Test the sshd config before reloading (avoids a failed restart/reload that would cut off our own access)

sshd = ssh daemon

sshd -t

Test connection to multiple servers

for outscale_instance in 10.10.10.1 10.10.10.2 10.10.10.3 10.10.10.4; do

ssh "$outscale_instance" -q exit &&

echo "$outscale_instance : connection succeeded" ||

echo "$outscale_instance : connection failed"

done

10.10.10.1 : connection succeeded

10.10.10.2 : connection succeeded

10.10.10.3 : connection failed

10.10.10.4 : connection succeeded

Quickly copy your SSH public key to a remote server

cat ~/.ssh/id_ed25519.pub | ssh pi@192.168.1.41 "mkdir -p ~/.ssh && chmod 700 ~/.ssh && cat >> ~/.ssh/authorized_keys"

-a : archive mode

-u : update mode, not full copy

rsync -au --progress -e "ssh -i path/to/private_key" user@10.10.10.10:~/remote_path /output/path

| Keyword | Meaning |
|---|---|
| SSL | |
| TLS | |
| Private key | |
| Public key | |
| RSA | |
| ECDSA | |

openssl s_client -connect www.qwant.com:443 -servername www.qwant.com < /dev/null | openssl x509 -text

openssl s_client -connect qwant.com:443 -servername qwant.com < /dev/null | openssl x509 -noout -fingerprint

openssl s_client -connect qwantjunior.fr:443 -servername qwantjunior.fr < /dev/null | openssl x509 -text -noout -dates

Useful use case

openssl x509 --text --noout --in ./dev.bdauphin.io.pem -subject -issuer

(.pem)

openssl x509 --text --noout --in /etc/ssl/private/sub.domain.tld.pem

# debian 7, openssl style

openssl x509 -text -in /etc/ssl/private/sub.domain.tld.pem

OpenSSL verify with -CAfile

openssl verify ./dev.bdauphin.io.pem

CN = dev.bdauphin.io.pem

error 20 at 0 depth lookup: unable to get local issuer certificate

error ./dev.bdauphin.io: verification failed

openssl verify -CAfile ./bdauphin.io_intermediate_certificate.pem ./dev.bdauphin.io.pem

./dev.bdauphin.io: OK

Test certificate validation + right addresses

for certif in * ; do openssl verify -CAfile ../baptiste-dauphin.io_intermediate_certificate.pem $certif ; done

dev.baptiste-dauphin.io.pem: OK

plive.baptiste-dauphin.io.pem: OK

www.baptiste-dauphin.io.pem: OK

for certif in * ; do openssl x509 -in $certif -noout -text | egrep '(Subject|DNS):' ; done

Subject: CN = dev.baptiste-dauphin.com

DNS:dev.baptiste-dauphin.com, DNS:dav-dev.baptiste-dauphin.com, DNS:provisionning-dev.baptiste-dauphin.com, DNS:share-dev.baptiste-dauphin.com

Subject: CN = plive.baptiste-dauphin.com

DNS:plive.baptiste-dauphin.com, DNS:dav-plive.baptiste-dauphin.com, DNS:provisionning-plive.baptiste-dauphin.com, DNS:share-plive.baptiste-dauphin.com

Subject: CN = www.baptiste-dauphin.com

DNS:www.baptiste-dauphin.com, DNS:dav.baptiste-dauphin.com, DNS:provisionning.baptiste-dauphin.com, DNS:share.baptiste-dauphin.com

| args | comments |

|---|---|

| -host host | use -connect instead |

| -port port | use -connect instead |

| -connect host:port | who to connect to (default is localhost:4433) |

| -verify_hostname host | check peer certificate matches "host" |

| -verify_email email | check peer certificate matches "email" |

| -verify_ip ipaddr | check peer certificate matches "ipaddr" |

| -verify arg | turn on peer certificate verification |

| -verify_return_error | return verification errors |

| -cert arg | certificate file to use, PEM format assumed |

| -certform arg | certificate format (PEM or DER) PEM default |

| -key arg | Private key file to use, in cert file if not specified but cert file is. |

| -keyform arg | key format (PEM or DER) PEM default |

| -pass arg | private key file pass phrase source |

| -CApath arg | PEM format directory of CA's |

| -CAfile arg | PEM format file of CA's |

| -trusted_first | Use trusted CA's first when building the trust chain |

| -no_alt_chains | only ever use the first certificate chain found |

| -reconnect | Drop and re-make the connection with the same Session-ID |

| -pause | sleep(1) after each read(2) and write(2) system call |

| -prexit | print session information even on connection failure |

| -showcerts | show all certificates in the chain |

| -debug | extra output |

| -msg | Show protocol messages |

| -nbio_test | more ssl protocol testing |

| -state | print the 'ssl' states |

| -nbio | Run with non-blocking IO |

| -crlf | convert LF from terminal into CRLF |

| -quiet | no s_client output |

| -ign_eof | ignore input eof (default when -quiet) |

| -no_ign_eof | don't ignore input eof |

| -psk_identity arg | PSK identity |

| -psk arg | PSK in hex (without 0x) |

| -ssl3 | just use SSLv3 |

| -tls1_2 | just use TLSv1.2 |

| -tls1_1 | just use TLSv1.1 |

| -tls1 | just use TLSv1 |

| -dtls1 | just use DTLSv1 |

| -fallback_scsv | send TLS_FALLBACK_SCSV |

| -mtu | set the link layer MTU |

| -no_tls1_2/-no_tls1_1/-no_tls1/-no_ssl3/-no_ssl2 | turn off that protocol |

| -bugs | Switch on all SSL implementation bug workarounds |

| -cipher | preferred cipher to use, use the 'openssl ciphers' command to see what is available |

| -starttls prot | use the STARTTLS command before starting TLS for those protocols that support it, where 'prot' defines which one to assume. Currently, only "smtp", "pop3", "imap", "ftp", "xmpp", "xmpp-server", "irc", "postgres", "lmtp", "nntp", "sieve" and "ldap" are supported. |

| -xmpphost host | Host to use with "-starttls xmpp[-server]" |

| -name host | Hostname to use for "-starttls lmtp" or "-starttls smtp" |

| -krb5svc arg | Kerberos service name |

| -engine id | Initialise and use the specified engine -rand file:file:... |

| -sess_out arg | file to write SSL session to |

| -sess_in arg | file to read SSL session from |

| -servername host | Set TLS extension servername in ClientHello |

| -tlsextdebug | hex dump of all TLS extensions received |

| -status | request certificate status from server |

| -no_ticket | disable use of RFC4507bis session tickets |

| -serverinfo types | send empty ClientHello extensions (comma-separated numbers) |

| -curves arg | Elliptic curves to advertise (colon-separated list) |

| -sigalgs arg | Signature algorithms to support (colon-separated list) |

| -client_sigalgs arg | Signature algorithms to support for client certificate authentication (colon-separated list) |

| -nextprotoneg arg | enable NPN extension, considering named protocols supported (comma-separated list) |

| -alpn arg | enable ALPN extension, considering named protocols supported (comma-separated list) |

| -legacy_renegotiation | enable use of legacy renegotiation (dangerous) |

| -use_srtp profiles | Offer SRTP key management with a colon-separated profile list |

| -keymatexport label | Export keying material using label |

| -keymatexportlen len | Export len bytes of keying material (default 20) |

ls -l /usr/local/share/ca-certificates

ls -l /etc/ssl/certs/

sudo update-ca-certificates

Generates both the private key and the CSR (certificate signing request)

# DOMAIN must hold the FQDN of the certificate, e.g. DOMAIN=sub.mydomain.tld

openssl req -nodes -newkey rsa:4096 -sha256 -keyout "${DOMAIN}.key" -out "${DOMAIN}.csr" -subj "/C=FR/ST=France/L=PARIS/O=My Company/CN=${DOMAIN}"

# generate private key

openssl ecparam -out "${DOMAIN}.key" -name sect571r1 -genkey

# generate csr

openssl req -new -sha256 -key "${DOMAIN}.key" -nodes -out "${DOMAIN}.csr" -subj "/C=FR/ST=France/L=PARIS/O=My Company/CN=${DOMAIN}"

You can verify the content of your CSR here: DigiCert Tool

Print jails

fail2ban-client status

Get banned IPs and other info about a specific jail

fail2ban-client status ssh

Ban an IP (banip triggers an email)

fail2ban-client set ssh banip 10.10.10.10

Unban an IP

fail2ban-client set ssh unbanip 10.10.10.10

Check a specific fail2ban chain

iptables -nvL f2b-sshd

fail2ban-client get dbpurgeage

fail2ban-client get dbfile

fail2ban will send mail using the MTA (mail transfer agent)

grep "mta =" /etc/fail2ban/jail.conf

mta = sendmail

Global default config:

- /etc/fail2ban/jail.conf

Its parameters are overridden by the centralized control file below, which is where jails are enabled:

- /etc/fail2ban/jail.local

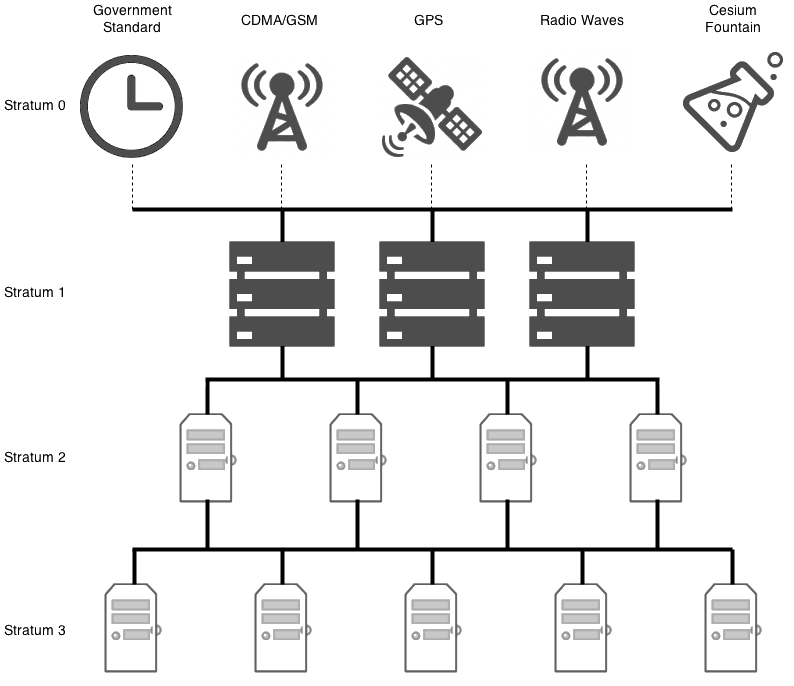

NTP stands for Network Time Protocol.

Debian, Ubuntu, Fedora, CentOS, and most operating system vendors, don't package NTP into client and server packages separately. When you install NTP, you've made your computer both a server, and a client simultaneously.

As a client, rather than pointing your servers to static IP addresses, you may want to consider using the NTP pool project. Various people all over the world have donated their stratum 1 and stratum 2 servers to the pool; Microsoft, XMission, and even myself have offered servers to the project. As such, clients can point their NTP configuration to the pool, which will round robin and load balance which server you will be connecting to.

There are a number of different domains that you can use for the round robin. For example, if you live in the United States, you could use:

- 0.us.pool.ntp.org

- 1.us.pool.ntp.org

- 2.us.pool.ntp.org

- 3.us.pool.ntp.org

There are round robin domains for each continent, minus Antarctica, and for many countries in each of those continents. There are also round robin servers for projects, such as Ubuntu and Debian:

- 0.debian.pool.ntp.org

- 1.debian.pool.ntp.org

- 2.debian.pool.ntp.org

- 3.debian.pool.ntp.org

On my public NTP stratum 2 server, I run the following command to see its status:

ntpq -pn

remote refid st t when poll reach delay offset jitter

------------------------------------------------------------------------------

*198.60.22.240 .GPS. 1 u 912 1024 377 0.488 -0.016 0.098

+199.104.120.73 .GPS. 1 u 88 1024 377 0.966 0.014 1.379

-155.98.64.225 .GPS. 1 u 74 1024 377 2.782 0.296 0.158

-137.190.2.4 .GPS. 1 u 1020 1024 377 5.248 0.194 0.371

-131.188.3.221 .DCFp. 1 u 952 1024 377 147.806 -3.160 0.198

-217.34.142.19 .LFa. 1 u 885 1024 377 161.499 -8.044 5.839

-184.22.153.11 .WWVB. 1 u 167 1024 377 65.175 -8.151 0.131

+216.218.192.202 .CDMA. 1 u 66 1024 377 39.293 0.003 0.121

-64.147.116.229 .ACTS. 1 u 62 1024 377 16.606 4.206 0.216

We need to understand each of the columns, so we understand what this is saying:

| Column | Meaning |

|---|---|

| remote | The remote server you wish to synchronize your clock with |

| refid | The upstream stratum to the remote server. For stratum 1 servers, this will be the stratum 0 source. |

| st | The stratum level, 0 through 16. |

| t | The type of connection. Can be "u" for unicast or manycast, "b" for broadcast or multicast, "l" for local reference clock, "s" for symmetric peer, "A" for a manycast server, "B" for a broadcast server, or "M" for a multicast server |

| when | The last time when the server was queried for the time. Default is seconds, or "m" will be displayed for minutes, "h" for hours and "d" for days. |

| poll | How often the server is queried for the time, with a minimum of 16 seconds to a maximum of 36 hours. It's also displayed as a value from a power of two. Typically, it's between 64 seconds and 1024 seconds. |

| reach | This is an 8-bit left shift octal value that shows the success and failure rate of communicating with the remote server. Success means the bit is set, failure means the bit is not set. 377 is the highest value. |

| delay | This value is displayed in milliseconds, and shows the round trip time (RTT) of your computer communicating with the remote server. |

| offset | This value is displayed in milliseconds, using root mean squares, and shows how far off your clock is from the reported time the server gave you. It can be positive or negative. |

| jitter | This number is an absolute value in milliseconds, showing the root mean squared deviation of your offsets. |
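The `reach` column is easier to read once the octal value is expanded into its 8 bits: each bit is one poll, shifted in from the right, so the rightmost bit is the most recent poll. A minimal sketch; `reach_bits` is a hypothetical helper name:

```shell
# Expand the octal "reach" value from ntpq into its 8 poll results
# (1 = reply received, 0 = no reply; rightmost bit = most recent poll).
reach_bits() {
    value=$(( 0$1 ))          # the leading 0 makes the shell read it as octal
    bits=""
    i=7
    while [ "$i" -ge 0 ]; do
        bits="${bits}$(( (value >> i) & 1 ))"
        i=$(( i - 1 ))
    done
    printf '%s\n' "$bits"
}

reach_bits 377   # all of the last eight polls succeeded
reach_bits 357   # one failed poll, five polls ago
```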

Next to the remote server, you'll notice a single character. This character is referred to as the "tally code", and indicates whether or not NTP is or will be using that remote server in order to synchronize your clock. Here are the possible values:

| remote single character | Meaning |

|---|---|

| whitespace | Discarded as not valid. Could be that you cannot communicate with the remote machine (it's not online), this time source is a ".LOCL." refid time source, it's a high stratum server, or the remote server is using this computer as an NTP server. |

| x | Discarded by the intersection algorithm. |

| . | Discarded by table overflow (not used). |

| - | Discarded by the cluster algorithm. |

| + | Included in the combine algorithm. This is a good candidate if the current server we are synchronizing with is discarded for any reason. |

| # | Good remote server to be used as an alternative backup. This is only shown if you have more than 10 remote servers. |

| * | The current system peer. The computer is using this remote server as its time source to synchronize the clock |

| o | Pulse per second (PPS) peer. This is generally used with GPS time sources, although any time source delivering a PPS will do. This tally code and the previous tally code "*" will not be displayed simultaneously. |
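Since the tally code is glued to the front of the `remote` column, the current system peer can be pulled out of `ntpq -pn` output by looking for the line that starts with `*`. A small sketch, assuming the output layout shown above; `system_peer` is a hypothetical helper name:

```shell
# Read ntpq peer lines on stdin and print the address of the system peer,
# i.e. the line whose tally code is "*".
system_peer() {
    awk '/^\*/ { print substr($1, 2) }'
}

# Usage: ntpq -pn | system_peer
```

It prints nothing when no peer has been selected yet, for example right after the daemon has restarted.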

apt-get install ntp

ntpq -p

vim /etc/ntp.conf

sudo service ntp restart

ntpq -p

ntpstat

unsynchronised

time server re-starting

polling server every 64 s

ntpstat

synchronised to NTP server (10.10.10.10) at stratum 4

time correct to within 323 ms

polling server every 64 s

ntpq -c peers

remote refid st t when poll reach delay offset jitter

======================================================================

hamilton-nat.nu .INIT. 16 u - 64 0 0.000 0.000 0.001

ns2.telecom.lt .INIT. 16 u - 64 0 0.000 0.000 0.001

fidji.daupheus. .INIT. 16 u - 64 0 0.000 0.000 0.001

#### Drift

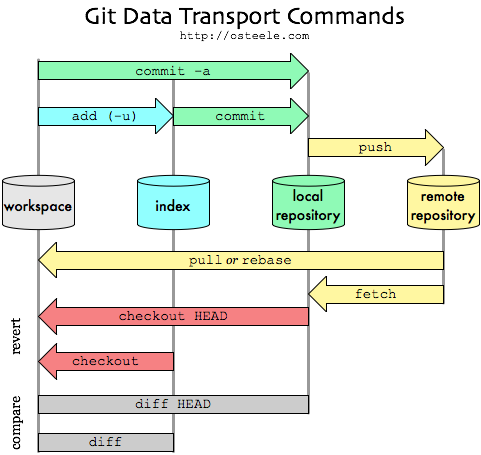

git remote -v

git branch -v

git remote set-url origin git@git.baptiste-dauphin.com:GROUP/SUB_GROUP/project_name

Create a tag at your current commit

git tag temp_tag_2

By default, tags are neither pushed nor pulled

git push origin tag_1 tag_2

List tags

git tag -l

Delete a tag

git tag -d temp_tag_2

Get the current tag

git describe --tags --exact-match HEAD

git checkout dev

git checkout master

git checkout branch

git checkout v_0.9

git checkout ac92da0124997377a3ee30f3159cdee838bd5b0b

Get the current branch name

git branch | grep \* | cut -d ' ' -f2

Specific file

git diff -- human/lvm.md

Global diff between your unstaged changes (workspace) and the index

git diff

Global diff between your staged changes (index) and the local repository

git diff --staged

To list the stashed modifications

git stash list

To show the files changed in the last stash

git stash show

So, to view the content of the most recent stash, run

git stash show -p

To view the content of an arbitrary stash, run something like

git stash show -p stash@{1}

In case of conflict when pulling, git by default keeps both versions of the file(s)

git pull origin master

git status

You have unmerged paths.

(fix conflicts and run "git commit")

(use "git merge --abort" to abort the merge)

...

...

Unmerged paths:

(use "git add <file>..." to mark resolution)

both modified: path/to/file

git checkout --theirs /path/to/file

git checkout --ours /path/to/file

With git reset and git stash: go back to the previous commit. This will uncommit your last changes

git reset --soft HEAD^

Temporarily hide your work in a dirty magic directory (the stash) and verify its content

git stash

git stash show

Try a fast-forward merge without any conflict

git pull

Put your work back by reapplying your modifications

git stash pop

And then commit again

git commit -m "copy-paste history commit message :)"

Alternatively, you can tell git that your modifications take precedence. In that case, first cancel the conflicted merge,

git merge --abort

Then pull again, telling git to prefer YOUR local changes where they conflict

git pull -X ours origin master

Or, if you want the REMOTE work to win the conflicts

git pull -X theirs origin master

git log --author="b.dauphin" --since="2 week ago"

git log --author="b.dauphin" -3

git log --since="2 week ago" --pretty=format:"%an"

git log --author="b.dauphin" --since="2 week ago" --pretty=format:"%h - %an, %ar : %s"

git show 01624bc338d4a89c09ba2915ff25ce08174b8e93 3d9228fa99eab6c208590df91eb2af05daad8b40

git log --follow -p -- file

git --no-pager log --follow -p -- file

The git revert command can be considered an 'undo' type command, however, it is not a traditional undo operation. INSTEAD OF REMOVING the commit from the project history, it figures out how to invert the changes introduced by the commit and appends a new commit with the resulting INVERSE CONTENT. This prevents Git from losing history, which is important for the integrity of your revision history and for reliable collaboration.

Reverting should be used when you want to apply the inverse of a commit from your project history. This can be useful, for example, if you’re tracking down a bug and find that it was introduced by a single commit. Instead of manually going in, fixing it, and committing a new snapshot, you can use git revert to automatically do all of this for you.

git revert <commit hash>

git revert c6c94d459b4e1ed81d523d53ef81b6a4744eac12

Find a specific commit

git log --pretty=format:"%h - %an, %ar : %s"

The git reset command is a complex and versatile tool for undoing changes. It has three primary forms of invocation. These forms correspond to the command line arguments --soft, --mixed, --hard. The three arguments each correspond to one of Git's three internal state management mechanisms: the Commit Tree (HEAD), the Staging Index, and the Working Directory.

Git reset & three trees of Git

To properly understand git reset usage, we must first understand Git's internal state management systems. Sometimes these mechanisms are called Git's "three trees".
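A throwaway repository makes the difference between the three forms visible. The sketch below is only an illustration: the temporary directory, the file name `f`, the `g` wrapper and the demo identity are all made up for the example.

```shell
# Build a scratch repo with two commits, then undo the second one in three ways.
repo=$(mktemp -d)
cd "$repo" || exit 1
git init -q

# Wrapper so the demo does not depend on a configured git identity.
g() { git -c user.name=demo -c user.email=demo@example.com "$@"; }

echo one > f
g add f
g commit -qm first
echo two > f
g commit -qam second

g reset -q --soft HEAD^   # moves HEAD only
g status --short          # "M  f": the change is kept and still staged

g reset -q --mixed        # also resets the index
g status --short          # " M f": the change is kept but unstaged

g reset -q --hard         # also resets the working directory
g status --short          # prints nothing: the change is gone
```

Each form resets one more tree than the previous one: `--soft` touches only HEAD, `--mixed` also the index, and `--hard` also the working directory.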

git commit ...

git reset --soft HEAD^

edit

git commit -a -c ORIG_HEAD

First time, clone a repo including its submodules

git clone --recurse-submodules -j8 git@github.com:FataPlex/documentation.git

Update an existing local repository after adding or updating submodules

git pull --recurse-submodules

git submodule update --init --recursive

To remove a submodule:

- Delete the relevant section from the .gitmodules file

- Stage the .gitmodules changes git add .gitmodules

- Delete the relevant section from .git/config

- Run git rm --cached path_to_submodule (no trailing slash)

- Run rm -rf .git/modules/path_to_submodule (no trailing slash).

- Commit git commit -m "Removed submodule"

- Delete the now untracked submodule files rm -rf path_to_submodule

From the shell, before entering a tmux session

tmux ls

tmux new

tmux new -s session

tmux attach

tmux attach -t session_name

tmux kill-server : kill all sessions

:setw synchronize-panes on

:setw synchronize-panes off

:set-window-option xterm-keys on

set-window-option -g xterm-keys on

Ctrl + B : (to press each time before another command)

| Command | Meaning |
|---|---|
| Arrow keys | move between the panes of a split window |
| n | next window |
| p | previous window |
| z | toggle zoom of the current pane |
| d | detach from the current session and leave it running in the background (to be reattached later) |
| x | kill pane |
| % | vertical split |
| " | horizontal split |
| o | go to the next pane |
| q | show pane numbers (while the numbers are shown, type one to go to that pane) |
| + | break pane into window (e.g. to select text by mouse to copy) |
| - | restore pane from window |
| Space | toggle between layouts |
| { | move the current pane left |
| } | move the current pane right |
| `:setw synchronize-panes on` | synchronize all panes in the current window (to execute parallel tasks like multiple iperf clients) |

There are three main functions that make up an e-mail system.

- First there is the Mail User Agent (MUA) which is the program a user actually uses to compose and read mails.

- Then there is the Mail Transfer Agent (MTA) that takes care of transferring messages from one computer to another.

- And last there is the Mail Delivery Agent (MDA) that takes care of delivering incoming mail to the user's inbox.

| Function | Name | Tools that do this |
|---|---|---|
| Compose and read | MUA (User Agent) | mutt, thunderbird |
| Transferring | MTA (Transfer Agent) | msmtp, exim4, thunderbird |
| Delivering incoming mail to user's inbox | MDA (Delivery Agent) | exim4, thunderbird |

There are two types of MTA (Mail Transfer Agent):

- Mail server: like postfix, or sendmail-server

- SMTP client, which only forwards to an SMTP relay: like ssmtp (deprecated since 2013); use msmtp instead

which sendmail

/usr/sbin/sendmail

ls -l /usr/sbin/sendmail

lrwxrwxrwx 1 root root 5 Jul 15 2014 /usr/sbin/sendmail -> ssmtp

In this case, ssmtp is my mail sender

msmtp is a very simple, easy-to-configure SMTP client for sending email. By default, it forwards messages to the SMTP server specified in its configuration, which then takes care of delivering them to their recipients. It is fully compatible with sendmail and supports TLS secure transport, multiple accounts, various authentication methods, and delivery notifications.

Installation

apt install msmtp msmtp-mta

vim /etc/msmtprc

# Default values for all accounts.

defaults

auth on

tls on

tls_trust_file /etc/ssl/certs/ca-certificates.crt

logfile ~/.msmtp.log

# Example for a Gmail account

account gmail

host smtp.gmail.com

port 587

from username@gmail.com

user username

password plain-text-password

# Set the default account

account default : gmail

Test email sending

printf 'Subject: hello\n\nDo see my mail\n' | sendmail baptistedauphin76@gmail.com

You run the command... and, oops: sendmail: Cannot open mailhub:25. The reason for this is that we didn't provide mailhub settings at all. In order to forward messages, you need an SMTP server configured. That's where SSMTP performs really well: you just need to edit its configuration file once, and you are good to go.

Note that it also works with netcat

nc smtp.free.fr 25

telnet smtp.free.fr 25

Trying 212.27.48.4...

Connected to smtp.free.fr.

Escape character is '^]'.

220 smtp4-g21.free.fr ESMTP Postfix

HELO test.domain.com

250 smtp4-g21.free.fr

MAIL FROM:<test@domain.com>

250 2.1.0 Ok

RCPT TO:<toto@domain.fr>

250 2.1.5 Ok

DATA

354 End data with <CR><LF>.<CR><LF>

Subject: test message

This is the body of the message!

.

250 2.0.0 Ok: queued as 2D8FD4C80FF

quit

221 2.0.0 Bye

Connection closed by foreign host.

It just plugs into

tshark -f "udp port 53" -Y "dns.qry.type == A and dns.flags.response == 0"

Count total DNS queries

tshark -f "udp port 53" -n -T fields -e dns.qry.name | wc -l

tshark -i wlan0 -Y http.request -T fields -e http.host -e http.user_agent

tshark -r example.pcap -Y http.request -T fields -e http.host -e http.user_agent | sort | uniq -c | sort -n

tshark -r example.pcap -Y http.request -T fields -e http.host -e ip.dst -e http.request.full_uri

Search into LDAP

ldapsearch --help

-H URI LDAP Uniform Resource Identifier(s)

-x Simple authentication

-W prompt for bind password

-D binddn bind DN

-b basedn base dn for search

SamAccountName SINGLE-VALUE attribute that is the logon name used to support clients and servers from a previous version of Windows.

ldapsearch -H ldap://10.10.10.10 \

-x \

-W \

-D "user@fqdn" \

-b "ou=ou,dc=sub,dc=under,dc=com" "(sAMAccountName=b.dauphin)"

Modify an account (remotely)

apt install ldap-utils

ldapmodify -H ldaps://ldap.company.tld -D "cn=b.dauphin,ou=people,c=fr,dc=company,dc=fr" -W -f b.gates.ldif

(the .ldif file must contain the modification data)

slapcat -f b.gates.ldif

Prompts for the string you want to hash, and prints the hash to stdout

slappasswd -h {SSHA}

dn: cn=b.dauphin@github.com,ou=people,c=fr,dc=company,dc=fr

changetype: modify

replace: userPassword

userPassword: {SSHA}0mBz0/OyaZqOqXvzXW8TwE8O/Ve+YmSl

| --list=$ARG | definition |
|---|---|
| pre,un,unaccepted | list unaccepted/unsigned keys |
| acc or accepted | list accepted/signed keys |
| rej or rejected | list rejected keys |
| den or denied | list denied keys |
| all | list all above keys |

salt -S 192.168.40.20 test.version

salt -S 192.168.40.0/24 test.version

Compound match

salt -C 'S@10.0.0.0/24 and G@os:Debian' test.version

salt -C '( G@environment:staging or G@environment:production ) and G@soft:redis*' test.ping

salt '*' network.ip_addrs

salt '*' cmd.run

salt '*' state.apply

salt '*' test.ping

salt '*' test.version

salt '*' grains.get

salt '*' grains.item

salt '*' grains.items

salt '*' grains.ls

salt-run survey.diff '*' cmd.run "ls /home"

Forcibly removes all caches on a minion.

WARNING: The safest way to clear a minion cache is by first stopping the minion and then deleting the cache files before restarting it.

Soft way

salt '*' saltutil.clear_cache

Sure way

systemctl stop salt-minion && rm -rf /var/cache/salt/minion/ && systemctl start salt-minion

SaltStack - pillar, custom modules, states, beacons, grains, returners, output modules, renderers, and utils

Signal the minion to refresh the pillar data.

salt '*' saltutil.refresh_pillar

Synchronizes custom modules, states, beacons, grains, returners, output modules, renderers, and utils.

salt '*' saltutil.sync_all

- SSDs

- biosreleasedate

- biosversion

- cpu_flags

- cpu_model

- cpuarch

- disks

- dns

- domain

- fqdn

- fqdn_ip4

- fqdn_ip6

- gid

- gpus

- groupname

- host

- hwaddr_interfaces

- id

- init

- ip4_gw

- ip4_interfaces

- ip6_gw

- ip6_interfaces

- ip_gw

- ip_interfaces

- ipv4

- ipv6

- kernel

- kernelrelease

- kernelversion

- locale_info

- localhost

- lsb_distrib_codename

- lsb_distrib_id

- machine_id

- manufacturer

- master

- mdadm

- mem_total

- nodename

- num_cpus

- num_gpus

- os

- os_family

- osarch

- oscodename

- osfinger

- osfullname

- osmajorrelease

- osrelease

- osrelease_info

- path

- pid

- productname

- ps

- pythonexecutable

- pythonpath

- pythonversion

- saltpath

- saltversion

- saltversioninfo

- selinux

- serialnumber

- server_id

- shell

- swap_total

- systemd

- uid

- username

- uuid

- virtual

- zfs_feature_flags

- zfs_support

- zmqversion

    os:
        Debian
    os_family:
        Debian
    osarch:
        amd64
    oscodename:
        stretch
    osfinger:
        Debian-9
    osfullname:
        Debian
    osmajorrelease:
        9
    osrelease:
        9.5
    osrelease_info:
        - 9
        - 5

Upgrade salt-minion state

    Upgrade Salt-Minion:
      cmd.run:
        - name: |
            exec 0>&- # close stdin
            exec 1>&- # close stdout
            exec 2>&- # close stderr
            nohup /bin/sh -c 'salt-call --local pkg.install salt-minion && salt-call --local service.restart salt-minion' &
        - onlyif: "[[ $(salt-call --local pkg.upgrade_available salt-minion 2>&1) == *'True'* ]]"

Upgrade salt-minion bash script

{% set ipaddr = grains['fqdn_ip4'][0] %}

{% if (key | regex_match('.*dyn.company.tld.*', ignorecase=True)) != None %}

salt -C "minion.local or minion2.local" cmd.run "docker run debian /bin/bash -c 'http_proxy=http://10.100.100.100:1598 apt update ; http_proxy=http://10.100.100.100:1598 apt install netcat -y ; nc -zvn 10.3.3.3 3306' | grep open"

minion.local:

WARNING: apt does not have a stable CLI interface. Use with caution in scripts.

WARNING: apt does not have a stable CLI interface. Use with caution in scripts.

debconf: delaying package configuration, since apt-utils is not installed

(UNKNOWN) [10.3.3.3] 3306 (?) open

minion2.local:

WARNING: apt does not have a stable CLI interface. Use with caution in scripts.

WARNING: apt does not have a stable CLI interface. Use with caution in scripts.

debconf: delaying package configuration, since apt-utils is not installed

(UNKNOWN) [10.3.3.3] 3306 (?) open

Prints the GRANTS for the user

echo "enter your password" ; read -s password ; salt "*" cmd.run "docker pull imega/mysql-client ; docker run --rm imega/mysql-client mysql --host=10.10.10.10 --user=b.dauphin --password=$password --database=db1 --execute='SHOW GRANTS FOR CURRENT_USER();'" env='{"http_proxy": "http://10.10.10.10:9999"}'

Validate config before reload/restart

apachectl configtest

Nginx is pronounced 'Engine X'

Various HTTP variables

example redirect HTTP to HTTPS

server {

listen 80;

return 301 https://$host$request_uri;

}

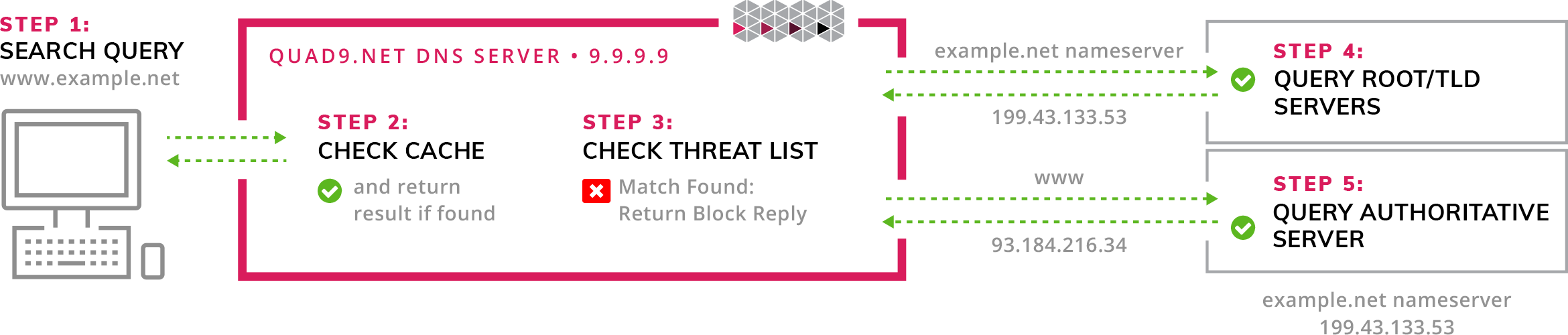

The Domain Name System is a hierarchical and decentralized naming system for computers, services, or other resources connected to the Internet or a private network.

It associates various information with domain names assigned to each of the participating entities.

https://wiki.csnu.org/index.php/Installation_et_configuration_de_bind9

the process name of bind9 is "named"

name server control utility

Write (dump) the cache of named to the default file (/var/cache/bind/named_dump.db)

dumpdb [-all|-cache|-zones|-adb|-bad|-fail] [view ...]

rndc dumpdb -cache default_any

Enable query logging in the default location (/var/log/bind9/query.log)

rndc querylog [on|off]

Toggle querylog mode

rndc querylog

flush: flushes all of the server's caches.

rndc flush

flush [view]: flushes the server's cache for a view.

rndc flush default_any

Get the unique master zones loaded

named-checkconf -z 2> /dev/null | grep 'zone' | sort -u | awk '{print $2}' | rev | cut --delimiter=/ -f2 | rev | sort -u

named-checkconf -z 2> /dev/null | grep 'zone' | grep -v 'bad\|errors' | sort -u | awk '{print $2}' | rev | cut --delimiter=/ -f2 | rev | sort -u

Keep cache

systemctl reload bind9

Empty cache

systemctl restart bind9

dig @8.8.8.8 +short www.qwant.com +nodnssec

dig @8.8.8.8 +short google.com +notcp

dig @8.8.8.8 +noall +answer +tcp www.qwant.com A

dig @8.8.8.8 +noall +answer +notcp www.qwant.com A

Other options

- +short

- +(no)tcp

- +(no)dnssec

- +noall

- +answer

- type

Verify your url

https://zabbix.company/zabbix.php?action=dashboard.view

https://zabbix.company/zabbix/zabbix.php?action=dashboard.view

### Test a given item

zabbix_agentd -t system.hostname

zabbix_agentd -t system.swap.size[all,free]

zabbix_agentd -t vfs.file.md5sum[/etc/passwd]

zabbix_agentd -t vm.memory.size[pavailable]

### print all known items

zabbix_agentd -p

curl \

-d '{

"jsonrpc":"2.0",

"method":"apiinfo.version",

"id":1,

"auth":null,

"params":{}

}' \

-H "Content-Type: application/json-rpc" \

-X POST https://zabbix.company/api_jsonrpc.php | jq .

curl \

-d '{

"jsonrpc": "2.0",

"method": "user.login",

"params": {

"user": "b.dauphin",

"password": "toto"

},

"id": 1,

"auth": null

}' \

-H "Content-Type: application/json-rpc" \

-X POST https://zabbix.company/api_jsonrpc.php | jq .

Replace $host and $token
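The payloads in these calls are wrapped in single quotes, so the shell does not expand `$host` or `$token`; they have to be substituted by hand. One way around that is to build the JSON-RPC body with `printf`. This is only a sketch: `zbx_payload`, the host name `web01` and the token value are hypothetical, and the helper does no JSON escaping of its arguments.

```shell
# zbx_payload METHOD PARAMS_JSON [TOKEN]: print a JSON-RPC 2.0 request body.
# Without a token, "auth" is null, as in the apiinfo.version and user.login calls.
zbx_payload() {
    method=$1
    params=$2
    token=${3:-}
    if [ -n "$token" ]; then auth="\"$token\""; else auth=null; fi
    printf '{"jsonrpc":"2.0","method":"%s","params":%s,"id":1,"auth":%s}\n' \
        "$method" "$params" "$auth"
}

host=web01       # hypothetical host name
token=0123abcd   # hypothetical session token returned by user.login
zbx_payload host.get "{\"filter\":{\"host\":[\"$host\"]}}" "$token"
```

The output can be piped to curl with `-d @-`, which reads the request body from stdin, keeping the same `Content-Type` header and URL as in the calls here.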

curl \

-d '{

"jsonrpc": "2.0",

"method": "host.get",

"params": {

"filter": {

"host": [

"$host"

]

},

"with_triggers": "82567"

},

"id": 2,

"auth": "$token"

}' \

-H "Content-Type: application/json-rpc" \

-X POST https://zabbix.company/api_jsonrpc.php | jq .

Replace $hostname1, $hostname2 and $token

curl \

-d '{

"jsonrpc": "2.0",

"method": "host.get",

"params": {

"output": ["hostid"],

"filter": {

"host": [

"$hostname1","$hostname2"

]

}

},

"id": 2,

"auth": "$token"

}' \

-H "Content-Type: application/json-rpc" \

-X POST https://zabbix.tld/api_jsonrpc.php | jq '.result'

Replace $hostname1, $hostname2 and $token

curl \

-d '{

"jsonrpc": "2.0",

"method": "host.get",

"params": {

"output": ["hostid"],

"selectGroups": "extend",

"filter": {

"host": [

"$hostname1","$hostname2"

]

}

},

"id": 2,

"auth": "$token"

}' \

-H "Content-Type: application/json-rpc" \

-X POST https://zabbix.tld/api_jsonrpc.php | jq .

curl \

-d '{

"jsonrpc": "2.0",

"method": "host.get",

"params": {

"output": ["name"],

"selectTags": "extend",

"tags": [

{

"tag": "environment",

"value": "dev",

"operator": 1

}

]

},

"id": 2,

"auth": "$token"

}' \

-H "Content-Type: application/json-rpc" \

-X POST https://zabbix.company/api_jsonrpc.php | jq .

Output hostid, host and name

curl \

-d '{

"jsonrpc": "2.0",

"method": "host.get",

"params": {

"output": ["hostid","host","name"],

"tags": [

{

"tag": "environment",

"value": "dev",

"operator": 1

}

]

},

"id": 2,

"auth": "$token"

}' \

-H "Content-Type: application/json-rpc" \

-X POST https://zabbix.company/api_jsonrpc.php | jq .

curl \

-d '{

"jsonrpc": "2.0",

"method": "host.get",

"params": {

"output": ["name"],

"tags": [

{

"tag": "app",

"value": "swarm",

"operator": "1"

},

{

"tag": "environment",

"value": "dev",

"operator": "1"

}

]

},

"id": 2,

"auth": "$token"

}' \

-H "Content-Type: application/json-rpc" \

-X POST https://zabbix.tld/api_jsonrpc.php | jq .

Warning: host.update erases all the other tags of the host, and I could set only one tag this way, so I do not recommend using this feature.

curl \

-d '{

"jsonrpc": "2.0",

"method": "host.update",

"params": {

"hostid": "12345",

"tags": [

{

"tag": "environment",

"value": "staging"

}

]

},

"id": 2,

"auth": "$token"

}' \

-H "Content-Type: application/json-rpc" \

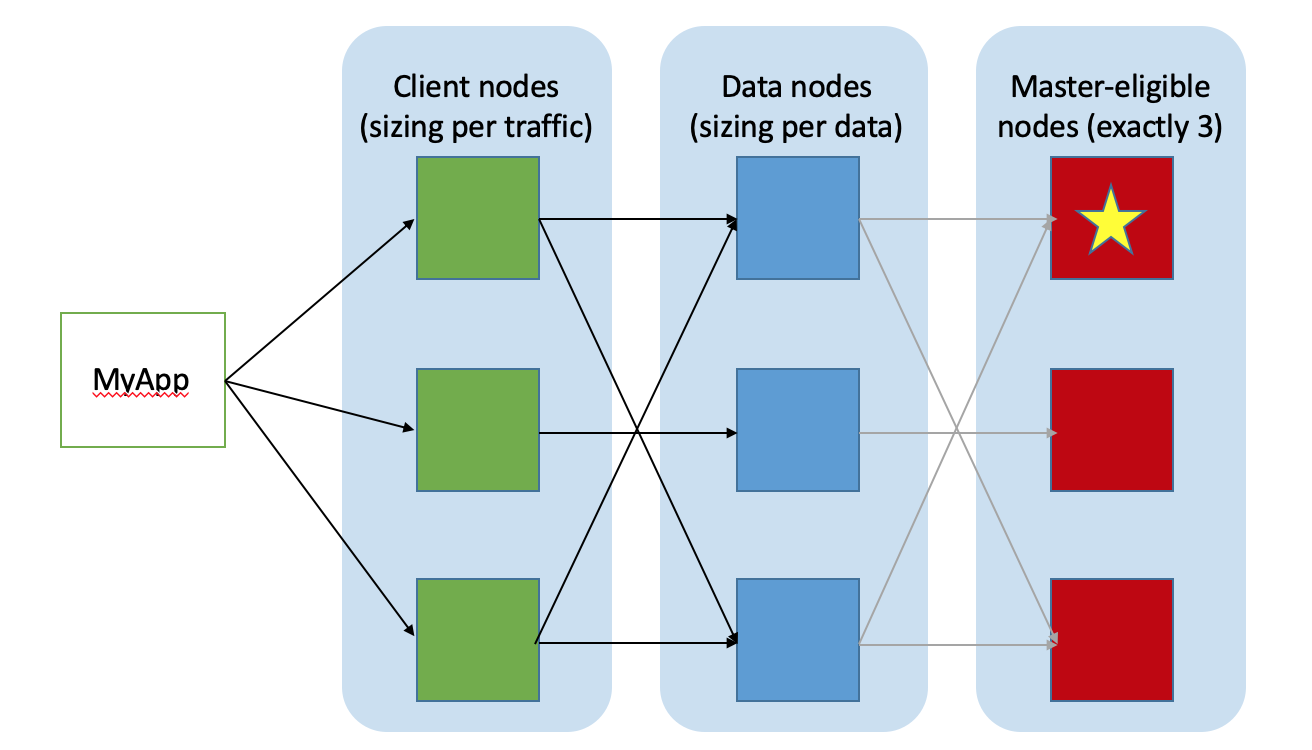

-X POST https://zabbix.tld/api_jsonrpc.php | jq '.result'By default, each index in Elasticsearch is allocated 5 primary shards and 1 replica which means that if you have at least two nodes in your cluster, your index will have 5 primary shards and another 5 replica shards (1 complete replica) for a total of 10 shards per index.
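The shard count above is simply primaries × (1 + replicas). A small sketch of that arithmetic; total_shards is a hypothetical helper name, not an Elasticsearch command.

```shell
# Hypothetical helper: total shards of one index.
# $1 = number of primary shards, $2 = number of replicas.
total_shards() {
  echo $(( $1 * (1 + $2) ))
}

total_shards 5 1   # prints 10 (5 primaries + 5 replica shards)
total_shards 1 0   # prints 1  (single-node setup: no replica, one shard)
```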

| Meaning | Endpoint (http://ip:9200) |
|---|---|
| Node names, load, heap, disk used, segments, JDK version | /_cat/nodes?v&h=name,ip,load_1m,heapPercent,disk.used_percent,segments.count,jdk |
| More detailed information about an index | /_cat/indices/INDEX/?v&h=index,health,pri,rep,docs.count,store.size,search.query_current,segments,memory.total |
| Count the number of documents | /_cat/count/INDEX/?v&h=dc |
| Cluster health at a given moment | /_cat/health |
| Full index stats | /INDEX/_stats?pretty=true |
| Kopf plugin | /_plugin/kopf |

Very good tutorial https://blog.ruanbekker.com/blog/2017/11/22/using-elasticdump-to-backup-elasticsearch-indexes-to-json/

Warning

DO NOT BACK UP with wildcard matching

I tested backing up indexes with a wildcard. It works, but when you restore the data, elasticdump takes ALL the data from ALL the indexes in the JSON file and feeds it into the single index given in the URL. Example:

elasticdump --input=es_test-index-wildcard.json --output=http://localhost:9200/test-index-1 --type=data

In this example, the file es_test-index-wildcard.json was produced by the following command, which matches 2 indexes (test-index-1 and test-index-2)

elasticdump --input=http://localhost:9200/test-index-* --output=es_test-index-wildcard.json --type=data

So I have to expand the wildcard manually and back up each index separately.
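One way to expand the wildcard by hand is to generate one elasticdump command per index. A minimal sketch: dump_commands is a hypothetical helper, the es_INDEX.json file naming is an assumption, and the commands are only printed, not executed.

```shell
# Hypothetical helper: print one elasticdump command per index.
# $1 = Elasticsearch base URL, remaining arguments = index names.
dump_commands() {
  es_url=$1
  shift
  for index in "$@"; do
    printf 'elasticdump --input=%s/%s --output=es_%s.json --type=data\n' \
      "$es_url" "$index" "$index"
  done
}

# Each index gets its own JSON file, so a restore feeds exactly one index.
dump_commands http://localhost:9200 test-index-1 test-index-2
```

Review the printed commands, then pipe them to sh to run them. The index names could come from /_cat/indices?h=index rather than being typed by hand.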

Elasticsearch Cluster Topology

Change the sharding, replicas and other settings of future indexes.

For example, on a single-node cluster you want neither replicas nor multiple shards.

curl -X POST '127.0.0.1:9200/_template/default' \

-H 'Content-Type: application/json' \

-d '

{

"index_patterns": ["*"],

"order": -1,

"settings": {

"number_of_shards": "1",

"number_of_replicas": "0"

}

}

' \

| jq .

Check config

php-fpm7.2 -t

haproxy -f /etc/haproxy/haproxy.cfg -c -V

Meaning of various status codes

version

java -version

openjdk version "1.8.0_222"

OpenJDK Runtime Environment (build 1.8.0_222-b10)

OpenJDK 64-Bit Server VM (build 25.222-b10, mixed mode)

Java doesn't use the system CA store but its own keystore

You can manage the keystore with keytool

keytool -list -v -keystore /usr/jdk64/jdk1.7.0_62/jre/lib/security/cacerts

keytool -import -alias dolphin_ltd_root_ca -file /etc/pki/ca-trust/source/anchors/dolphin_ltd_root_ca.crt -keystore /usr/jdk64/jdk1.7.0_62/jre/lib/security/cacerts

keytool -import -alias dolphin_ltd_subordinate_ca -file /etc/pki/ca-trust/source/anchors/dolphin_ltd_subordinate_ca.crt -keystore /usr/jdk64/jdk1.7.0_62/jre/lib/security/cacerts

keytool -delete -alias dolphin_ltd_root_ca -keystore /usr/jdk64/jdk1.7.0_62/jre/lib/security/cacerts

keytool -delete -alias dolphin_ltd_subordinate_ca -keystore /usr/jdk64/jdk1.7.0_62/jre/lib/security/cacerts

{% %}

{%- %}

{% -%}

{%- -%}

(Default) keep the whitespace, newline included, both before and after the tag

{% set site_url = 'www.' + domain %}

Strip the whitespace before the tag and keep it after

{%- set site_url = 'www.' + domain %}

Keep the whitespace before the tag and strip it after

{% set site_url = 'www.' + domain -%}

Strip the whitespace both before and after the tag

{%- set site_url = 'www.' + domain -%}

| Symbol | Meaning |
|---|---|
| () | tuple |
| [] | list |
| {} | dictionary |

Working with a variable when you don't know whether it exists (Jinja2 example)

{% if min_verbose_level is defined

and min_verbose_level %}

and level({{ min_verbose_level }} .. emerg);

{% endif %}

List all versions of python (system wide)

ls -ls /usr/bin/python*

Install pip3

apt-get install build-essential python3-dev python3-pip

Install a package

pip install virtualenv

pip --proxy http://10.10.10.10:5000 install docker

Install without TLS verification (not recommended)

pip install --trusted-host pypi.python.org \

--trusted-host github.com \

https://github.com/Exodus-Privacy/exodus-core/releases/download/v1.0.13/exodus_core-1.0.13.tar.gz

Show information about one or more installed packages

pip3 show $package_name

pip3 show virtualenv

Print all installed packages (depends on your environment: venv or system-wide)

pip3 freeze

Install from local sources (setup.py required)

python setup.py install --record files.txt

Print the dependency tree of a specified package

pipdeptree -p uwsgi

Global site-packages ("dist-packages") directories

python3 -m site

More concise list

python3 -c "import site; print(site.getsitepackages())"

Note: in a virtualenv, getsitepackages may not be available; sys.path, as printed by python3 -m site above, still lists the virtualenv's site-packages directory correctly.

Create a python package (to be installed in the local site-packages dir)

-----------------------------

some_root_dir/

|-- README

|-- setup.py

|-- an_example_pypi_project

|   |-- __init__.py

|   |-- useful_1.py

|   |-- useful_2.py

|-- tests

|   |-- __init__.py

|   |-- runall.py

|   |-- test0.py

----------------------------

Utility function to read the README file.

Used for the long_description.

It's nice, because now

- we have a top level README file

- it's easier to type in the README file than to put a raw string in below ...

import os

from setuptools import setup

def read(fname):

    # Return the content of a file located next to setup.py

    return open(os.path.join(os.path.dirname(__file__), fname)).read()

setup(

    name = "an_example_pypi_project",

    version = "0.0.4",

    author = "Andrew Carter",

    author_email = "andrewjcarter@gmail.com",

    description = ("A demonstration of how to create, document, and publish "

                   "to the cheese shop at pypi.org."),

    license = "BSD",

    keywords = "example documentation tutorial",

    url = "http://packages.python.org/an_example_pypi_project",

    packages=['an_example_pypi_project', 'tests'],

    long_description=read('README'),

    classifiers=[

        "Development Status :: 3 - Alpha",

        "Topic :: Utilities",

        "License :: OSI Approved :: BSD License",

    ],

)

Run the build from within the root directory

Your package will be built in ./dist/$(package-name)-$(version)-$(python-tag)-$(abi-tag)-$(platform-tag).whl

python setup.py sdist bdist_wheel
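The name of the resulting .whl file is a dash-separated list of fields: package name, version, Python tag, ABI tag and platform tag. A minimal sketch of splitting such a name: wheel_fields is a hypothetical helper and it assumes the optional build tag is absent, so there are exactly five fields.

```shell
# Hypothetical helper: split a wheel file name into its five fields.
# Runs in a subshell so the IFS and globbing changes do not leak out.
wheel_fields() (
  file=${1##*/}       # drop any leading directory
  file=${file%.whl}   # drop the extension
  IFS=-
  set -f              # no globbing while splitting
  set -- $file        # split on "-" into $1..$5
  printf 'name=%s version=%s python=%s abi=%s platform=%s\n' \
    "$1" "$2" "$3" "$4" "$5"
)

wheel_fields ./dist/dns_admin-1.0.0-py2-none-any.whl
# prints: name=dns_admin version=1.0.0 python=py2 abi=none platform=any
```

Here py2 means the wheel targets Python 2, none means it does not depend on a specific ABI, and any means it is not tied to a platform.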

Example: ./dist/dns_admin-1.0.0-py2-none-any.whl

apt install python-pip python3-pip

pip install pipenv

Usage Examples:

Create a new project using Python 3.7, specifically:

$ pipenv --python 3.7

Remove project virtualenv (inferred from current directory):

$ pipenv --rm

Install all dependencies for a project (including dev):

$ pipenv install --dev

Create a lockfile containing pre-releases:

$ pipenv lock --pre

Show a graph of your installed dependencies:

$ pipenv graph

Check your installed dependencies for security vulnerabilities:

$ pipenv check

Install a local setup.py into your virtual environment/Pipfile:

$ pipenv install -e .