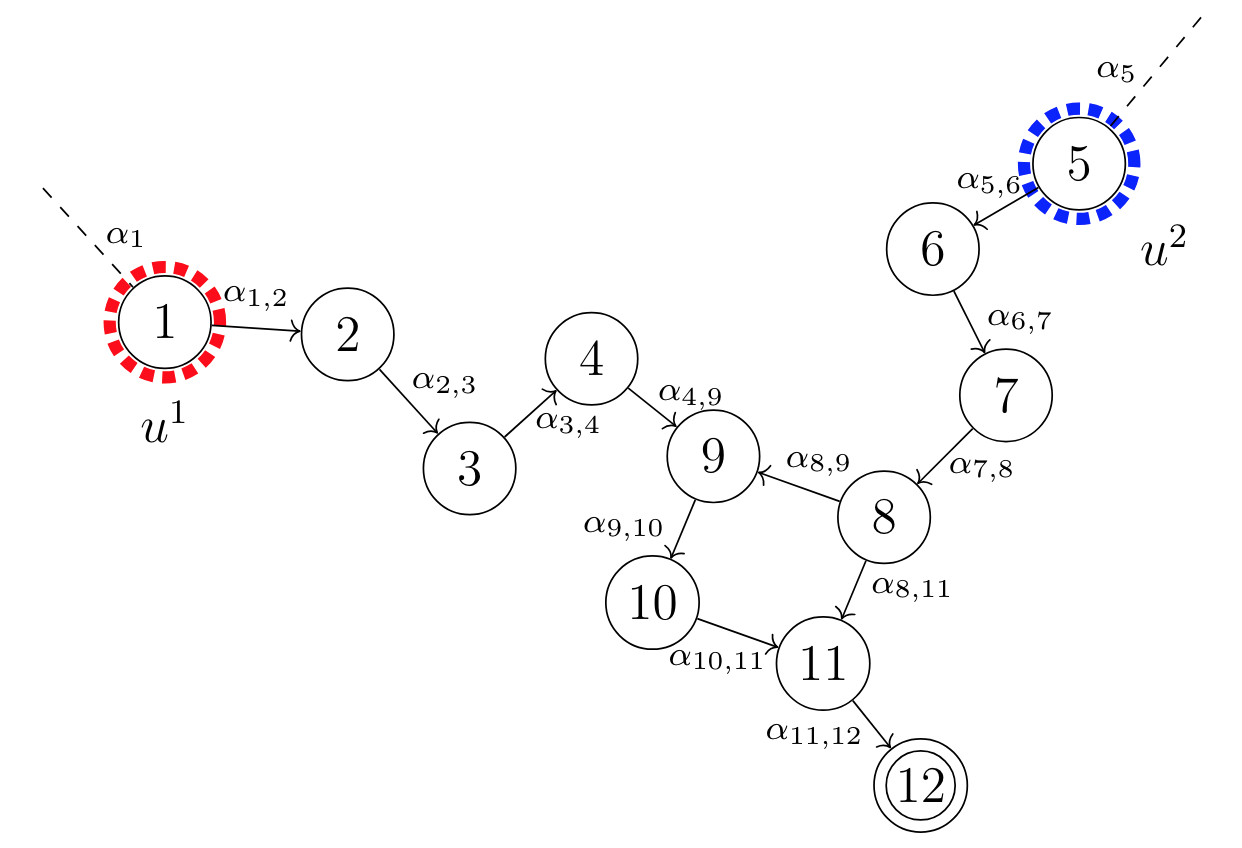

The Threat Defense environment is an OpenAI Gym implementation of the toy example defined in Optimal Defense Policies for Partially Observable Spreading Processes on Bayesian Attack Graphs by Miehling, E., Rasouli, M., & Teneketzis, D. (2015). It is a POMDP defense problem with 29 states and observations and 4 actions.

The figure above shows the Threat Defense environment. The notation and definitions from the paper are not explained here; instead, this README states the benchmark parameters of the toy example. For the notation and definitions, refer to the paper by Miehling, E., Rasouli, M., & Teneketzis, D. (2015).

The toy example is built from 12 attributes, of which two are leaf attributes (1 and 5) and one is a critical attribute (12). To make the network more realistic, the paper interprets the 12 attributes as:

1. Vulnerability in WebDAV on machine 1

2. User access on machine 1

3. Heap corruption via SSH on machine 1

4. Root access on machine 1

5. Buffer overflow on machine 2

6. Root access on machine 2

7. Squid portscan on machine 2

8. Network topology leakage from machine 2

9. Buffer overflow on machine 3

10. Root access on machine 3

11. Buffer overflow on machine 4

12. Root access on machine 4
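As a minimal sketch (not the package's own code), the attack graph behind these attributes can be written down in Python. The edge list is read off the spread probabilities alpha_(i,j) listed further down in this README; the names ATTRIBUTES, EDGES, parents and children are hypothetical. The check at the end confirms that, in this graph, the leaf attributes 1 and 5 are exactly the ones without parents and the critical attribute 12 is the only one without children.

```python
# Hypothetical encoding of the toy example's attack graph (not the environment's code).
ATTRIBUTES = [
    "Vulnerability in WebDAV on machine 1",     # 1
    "User access on machine 1",                 # 2
    "Heap corruption via SSH on machine 1",     # 3
    "Root access on machine 1",                 # 4
    "Buffer overflow on machine 2",             # 5
    "Root access on machine 2",                 # 6
    "Squid portscan on machine 2",              # 7
    "Network topology leakage from machine 2",  # 8
    "Buffer overflow on machine 3",             # 9
    "Root access on machine 3",                 # 10
    "Buffer overflow on machine 4",             # 11
    "Root access on machine 4",                 # 12
]

# Directed edges (parent, child), 1-indexed, taken from the spread probabilities alpha_(i,j).
EDGES = [(1, 2), (2, 3), (3, 4), (4, 9), (5, 6), (6, 7), (7, 8),
         (8, 9), (8, 11), (9, 10), (10, 11), (11, 12)]


def parents(i):
    """Return the attributes that have an edge into attribute i."""
    return [p for p, c in EDGES if c == i]


def children(i):
    """Return the attributes that attribute i can spread to."""
    return [c for p, c in EDGES if p == i]


nodes = range(1, len(ATTRIBUTES) + 1)
leaves = [i for i in nodes if not parents(i)]  # entry points of the attacker
sinks = [i for i in nodes if not children(i)]  # attributes with no successors
print(leaves)  # [1, 5]
print(sinks)   # [12]
```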

The defender has access to the following two binary actions:

- u_1: Block WebDAV service

- u_2: Disconnect machine 2

Combining them gives four possible countermeasures, i.e. U = {none, u_1, u_2, u_1 & u_2}.
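The four countermeasures are all combinations of the two binary actions. The sketch below enumerates them in Python; the index order it produces (and therefore how an integer action would map to a countermeasure) is an assumption for illustration, not necessarily the ordering used by the environment.

```python
from itertools import product

ACTION_NAMES = ["Block WebDAV service", "Disconnect machine 2"]

# Each countermeasure is a pair (u_1 active?, u_2 active?).
COUNTERMEASURES = list(product([False, True], repeat=2))


def describe(u):
    """Human-readable name of a countermeasure given as a (u_1, u_2) pair."""
    names = [name for on, name in zip(u, ACTION_NAMES) if on]
    return " and ".join(names) if names else "none"


for index, u in enumerate(COUNTERMEASURES):
    print(index, describe(u))
# 0 none
# 1 Disconnect machine 2
# 2 Block WebDAV service
# 3 Block WebDAV service and Disconnect machine 2
```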

The cost function is defined as C(x,u) = C(x) + D(u).

C(x) is the state cost: it is 1 if the critical attribute is enabled in state x, and 0 otherwise.

D(u) is the availability cost of countermeasure u: it is 0 for none, 1 for u_1 or u_2 alone, and 5 for u_1 and u_2 together.
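A minimal sketch of C(x,u) = C(x) + D(u) in Python, assuming a state x is a 0/1 vector over the 12 attributes (so the critical attribute 12 sits at index 11) and a countermeasure u is a (u_1, u_2) pair of booleans. The function names are hypothetical.

```python
CRITICAL_ATTRIBUTE = 12  # the critical attribute, 1-indexed


def state_cost(x):
    """C(x): 1 if the critical attribute is enabled in state x, else 0."""
    return 1 if x[CRITICAL_ATTRIBUTE - 1] == 1 else 0


def availability_cost(u):
    """D(u): 0 for no countermeasure, 1 for u_1 or u_2 alone, 5 for both."""
    u1, u2 = u
    if u1 and u2:
        return 5
    if u1 or u2:
        return 1
    return 0


def cost(x, u):
    """C(x, u) = C(x) + D(u)."""
    return state_cost(x) + availability_cost(u)


x = [1, 1, 0, 0, 1, 1, 1, 0, 0, 0, 0, 0]  # critical attribute not enabled
print(cost(x, (True, True)))              # 0 + 5 = 5
print(cost([1] * 12, (False, True)))      # 1 + 1 = 2
```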

The parameters of the problem are:

- Probabilities of detection: beta = [0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.7, 0.6, 0.7, 0.85, 0.95]

- Attack probabilities: alpha_1 = alpha_5 = 0.5

- Spread probabilities: alpha_(1,2) = alpha_(2,3) = alpha_(4,9) = alpha_(5,6) = alpha_(7,8) = alpha_(8,9) = alpha_(8,11) = alpha_(10,11) = 0.8, and alpha_(3,4) = alpha_(6,7) = alpha_(9,10) = alpha_(11,12) = 0.9

- Discount factor: gamma = 0.85

- Initial belief vector: pi_0 = [1,0,...,0]

The environment depends on:

- OpenAI Gym

- NumPy
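A minimal sketch of how the benchmark parameters above could be collected with NumPy, one of the listed dependencies. All names are hypothetical. Two helpers are added under loudly stated simplifying assumptions that are not taken from this README: enable_probability treats every attribute as an OR attribute whose enabled parents attempt to spread independently, attacks each leaf with its alpha_i, and ignores countermeasures; sample_observation assumes no false alarms, so only enabled attributes can be detected, each independently with probability beta_i. This illustrates how the parameters enter the dynamics; it is not a description of how the environment implements them.

```python
import numpy as np

N = 12  # number of attributes

# Probabilities of detection beta_i (attribute i stored at index i - 1).
beta = np.array([0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.7, 0.6, 0.7, 0.85, 0.95])

# Attack probabilities for the leaf attributes.
alpha_leaf = {1: 0.5, 5: 0.5}

# Spread probabilities alpha_(i,j) for each edge i -> j.
alpha_edge = {(1, 2): 0.8, (2, 3): 0.8, (4, 9): 0.8, (5, 6): 0.8,
              (7, 8): 0.8, (8, 9): 0.8, (8, 11): 0.8, (10, 11): 0.8,
              (3, 4): 0.9, (6, 7): 0.9, (9, 10): 0.9, (11, 12): 0.9}

gamma = 0.85  # discount factor

# Initial belief over the 29 states: all mass on the first state, pi_0 = [1, 0, ..., 0].
pi_0 = np.zeros(29)
pi_0[0] = 1.0


def enable_probability(i, x):
    """Probability that attribute i is enabled after one step from state x.

    ASSUMPTION: OR semantics with independent spread attempts from enabled
    parents, leaves attacked with alpha_i, enabled attributes stay enabled,
    and countermeasures are ignored.
    """
    if x[i - 1] == 1:
        return 1.0
    fail = 1.0  # probability that every attempt to enable i fails
    if i in alpha_leaf:
        fail *= 1.0 - alpha_leaf[i]
    for (p, c), a in alpha_edge.items():
        if c == i and x[p - 1] == 1:
            fail *= 1.0 - a
    return 1.0 - fail


def sample_observation(x, rng):
    """Detect each enabled attribute i independently with probability beta_i.

    ASSUMPTION: no false alarms, so disabled attributes are never observed.
    """
    detected = rng.random(N) < beta
    return (np.asarray(x).astype(bool) & detected).astype(int)


rng = np.random.default_rng(0)
x = np.zeros(N, dtype=int)
x[[0, 1, 4, 5, 6]] = 1            # attributes 1, 2, 5, 6 and 7 enabled
print(enable_probability(8, x))   # parent 7 is enabled, alpha_(7,8) = 0.8
print(sample_observation(x, rng))
```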

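To install the environment, change into the repository directory and install the package in editable mode with pip:

cd gym-threat-defense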

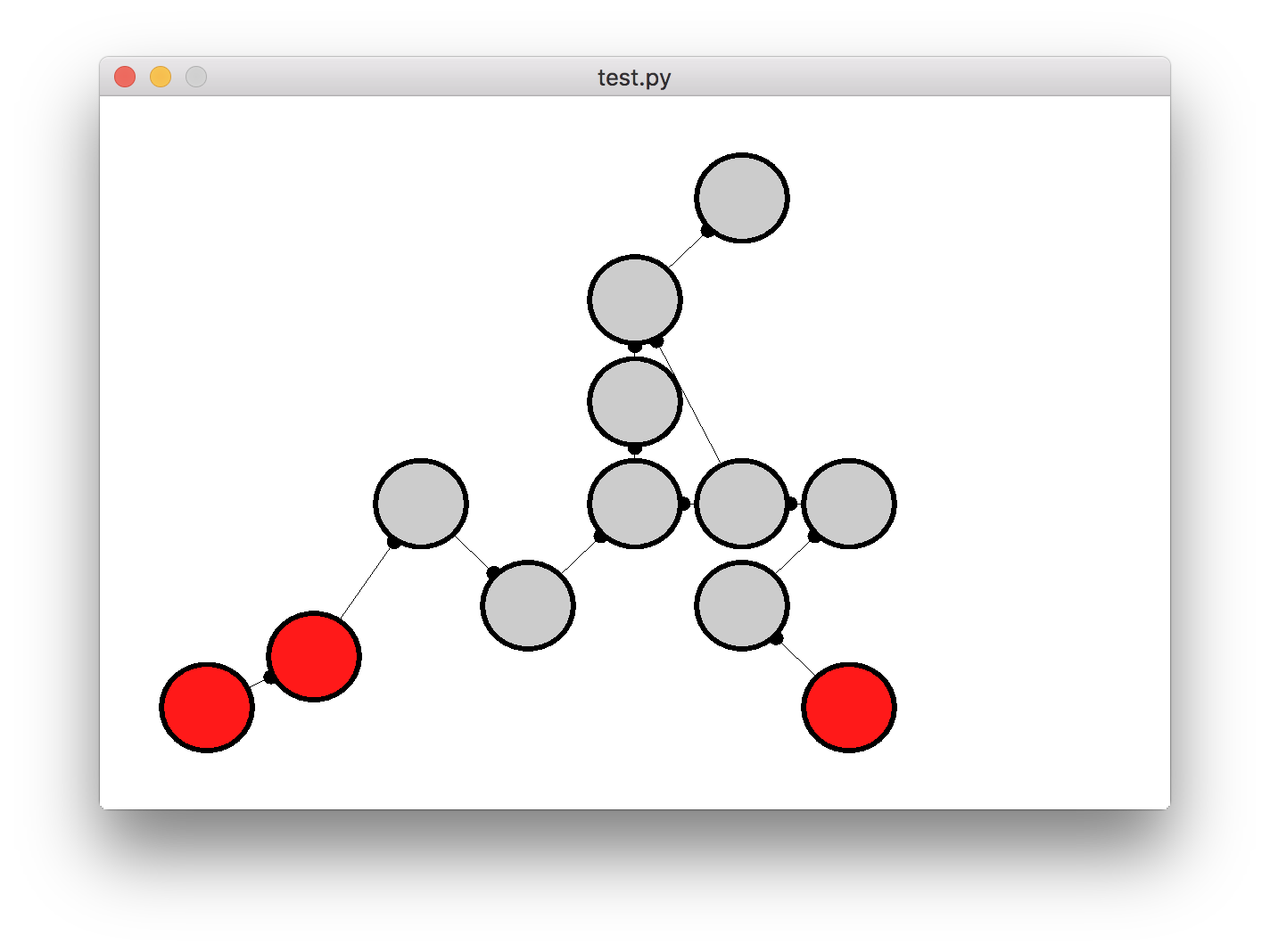

pip install -e .

There are two rendering modes available when running the environment:

- Render to stdout

- A visual mode, which draws the graph and indicates which nodes the attacker has taken over

For a visual rendering, pass 'rgb_array' to the render function:

env.render('rgb_array')

Otherwise, for an ASCII representation printed to stdout, pass 'human':

env.render('human')

Below is an example of the stdout output, in which the agent took both the block and the disconnect action. The attacker has enabled five attributes (nodes), shown as ones; attributes that are not enabled are shown as zeros. A node in parentheses is a leaf node (an entry point), a node in square brackets is a normal non-leaf node, and a node in double square brackets is a critical node.

Action: Block WebDAV service and Disconnect machine 2

(1) --> [1] --> [0] --> [0]

\--> [0] <-- [0] <-- [1] <-- [1] <-- (1)

\--> [0] <---/

\--> [0] --> [[0]]

By default, the environment renders to stdout.
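The node notation used in the stdout rendering can be illustrated with a small helper. This is a hypothetical sketch of the notation only, not the environment's render code: a leaf is wrapped in parentheses, the critical attribute in double square brackets, and any other attribute in single square brackets. The state used here is just one state with five enabled attributes.

```python
LEAF_ATTRIBUTES = {1, 5}
CRITICAL_ATTRIBUTES = {12}


def format_node(i, value):
    """Format attribute i (1-indexed) with its 0/1 value in the rendering notation."""
    if i in LEAF_ATTRIBUTES:
        return f"({value})"
    if i in CRITICAL_ATTRIBUTES:
        return f"[[{value}]]"
    return f"[{value}]"


# A state with attributes 1, 2, 5, 6 and 7 enabled.
state = [1, 1, 0, 0, 1, 1, 1, 0, 0, 0, 0, 0]

# Chain of attributes 1 -> 2 -> 3 -> 4.
print(" --> ".join(format_node(i, state[i - 1]) for i in range(1, 5)))
# (1) --> [1] --> [0] --> [0]
print(format_node(12, state[11]))
# [[0]]
```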

As examples of how to use the Threat Defense environment, we provide a couple of algorithms that use both configurations of the environment. See the README in the examples/ directory for information on which algorithm works with which configuration.


- Johan Backman johback@student.chalmers.se

- Hampus Ramström hampusr@student.chalmers.se