GREATER and CARLA-4D are two synthetic datasets released alongside our CVPR 2022 paper "Revealing Occlusions with 4D Neural Fields".

See the main repository here.

Click here to access the pre-generated data that we used to train and evaluate our models. The archives are hosted on Google Drive and split into multiple files, so a tool like gshell may be useful for downloading them efficiently from a terminal.

This repository contains the video generation code, which may be useful if you want to tweak the simulators or environments from which our datasets are sourced.

GREATER is based on CATER, which is in turn based on CLEVR.

Please see greater_gen_commands.sh, which runs render_videos.py and postprocess_dataset.py.

I recommend parallelizing the data generation via multiple tmux panes.
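One way to divide the work between panes is to give each pane its own contiguous range of video indices. The following is a minimal sketch of that split only; the helper name `split_ranges` and the totals in the example are hypothetical, and how a range is passed to render_videos.py depends on the arguments used in greater_gen_commands.sh.

```python
def split_ranges(total, workers):
    """Split range(total) into `workers` contiguous (start, stop) chunks.

    Chunk sizes differ by at most one; `stop` is exclusive.
    This helper is illustrative and not part of the repository.
    """
    base, extra = divmod(total, workers)
    ranges = []
    start = 0
    for i in range(workers):
        # The first `extra` workers each take one additional item.
        size = base + (1 if i < extra else 0)
        ranges.append((start, start + size))
        start += size
    return ranges


if __name__ == "__main__":
    # Hypothetical numbers: 1000 videos spread over 4 tmux panes.
    for pane, (start, stop) in enumerate(split_ranges(1000, 4)):
        print(f"pane {pane}: video indices [{start}, {stop})")
```

Each pane would then run the generation command restricted to its own range.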

CARLA-4D uses the CARLA simulator.

After installing CARLA (see this guide), first run:

./CarlaUE4.sh -carla-world-port=$PORT -RenderOffScreen -graphicsadapter=2 -nosound -quality-level=Epic

where 2 is your 0-based GPU index. No display is needed if you run the command this way (also called headless), so it is suited for Linux machines that you may be operating remotely.
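If you have several GPUs, you will want one CARLA instance per GPU, each on its own port. The sketch below only assembles the command shown above for each GPU index; the function names, the base port of 2000, and the port spacing of 10 are assumptions for illustration, not values taken from this repository.

```python
import shlex


def carla_command(gpu_index, port, carla_root="."):
    """Build the headless CARLA launch command for one GPU (flags as shown above)."""
    return [
        f"{carla_root}/CarlaUE4.sh",
        f"-carla-world-port={port}",
        "-RenderOffScreen",
        f"-graphicsadapter={gpu_index}",
        "-nosound",
        "-quality-level=Epic",
    ]


def carla_commands(gpu_indices, base_port=2000, port_stride=10):
    """Map each GPU index to a launch command on a distinct port.

    base_port and port_stride are assumed values; the stride leaves a gap
    between instances in case a server also opens neighbouring ports.
    """
    return {
        gpu: carla_command(gpu, base_port + i * port_stride)
        for i, gpu in enumerate(gpu_indices)
    }


if __name__ == "__main__":
    # Print one command per GPU, e.g. to paste into separate tmux panes.
    for gpu, cmd in carla_commands([0, 1, 2]).items():
        print(f"GPU {gpu}: {shlex.join(cmd)}")
```

The script prints the commands rather than launching them, so you can inspect them before starting each server in its own pane.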

Then, see carla_gen_commands/. These scripts execute the Python files record_simulation.py, capture_sensor_data.py, and video_scene.py in that order, parallelizing across separate CARLA instances running on different GPUs (if you have multiple).
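The ordering matters because each stage consumes the output of the previous one. As a rough sketch of that sequencing (not the actual contents of carla_gen_commands/), the stages can be run one after another, stopping at the first failure. The `run_pipeline` helper and its `extra_args` parameter are hypothetical; the real command-line arguments for each script are in the provided shell scripts.

```python
import subprocess
import sys

# Stage order as described above.
STAGES = ["record_simulation.py", "capture_sensor_data.py", "video_scene.py"]


def run_pipeline(extra_args=(), runner=subprocess.run):
    """Run the three stages in order and stop at the first failure.

    extra_args is a placeholder for whatever arguments the scripts need;
    runner is injectable so the sequencing can be checked without CARLA.
    Returns the list of stages that completed successfully.
    """
    completed = []
    for script in STAGES:
        cmd = [sys.executable, script, *extra_args]
        result = runner(cmd)
        if result.returncode != 0:
            raise RuntimeError(f"{script} failed with exit code {result.returncode}")
        completed.append(script)
    return completed
```

Calling `run_pipeline()` once per CARLA instance, each pointed at a different server port, would mirror the per-GPU parallelism described above.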

I used the following eight maps: Town01, Town02, Town03, Town04, Town05, Town06, Town07, Town10HD.
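With fewer GPUs than maps, the maps have to be shared out among the running CARLA instances. A simple round-robin assignment is sketched below; the `assign_maps` helper is illustrative and is not necessarily how the provided scripts distribute the maps.

```python
# The eight maps listed above.
MAPS = [
    "Town01", "Town02", "Town03", "Town04",
    "Town05", "Town06", "Town07", "Town10HD",
]


def assign_maps(maps, num_instances):
    """Distribute maps over CARLA instances in round-robin order."""
    buckets = [[] for _ in range(num_instances)]
    for i, name in enumerate(maps):
        buckets[i % num_instances].append(name)
    return buckets


if __name__ == "__main__":
    # Hypothetical setup with 3 CARLA instances (one per GPU).
    for instance, names in enumerate(assign_maps(MAPS, 3)):
        print(f"instance {instance}: {', '.join(names)}")
```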

An optional final step is to run meas_interest.py to generate occlusion_rate files, which are intended specifically for training the model in our paper.

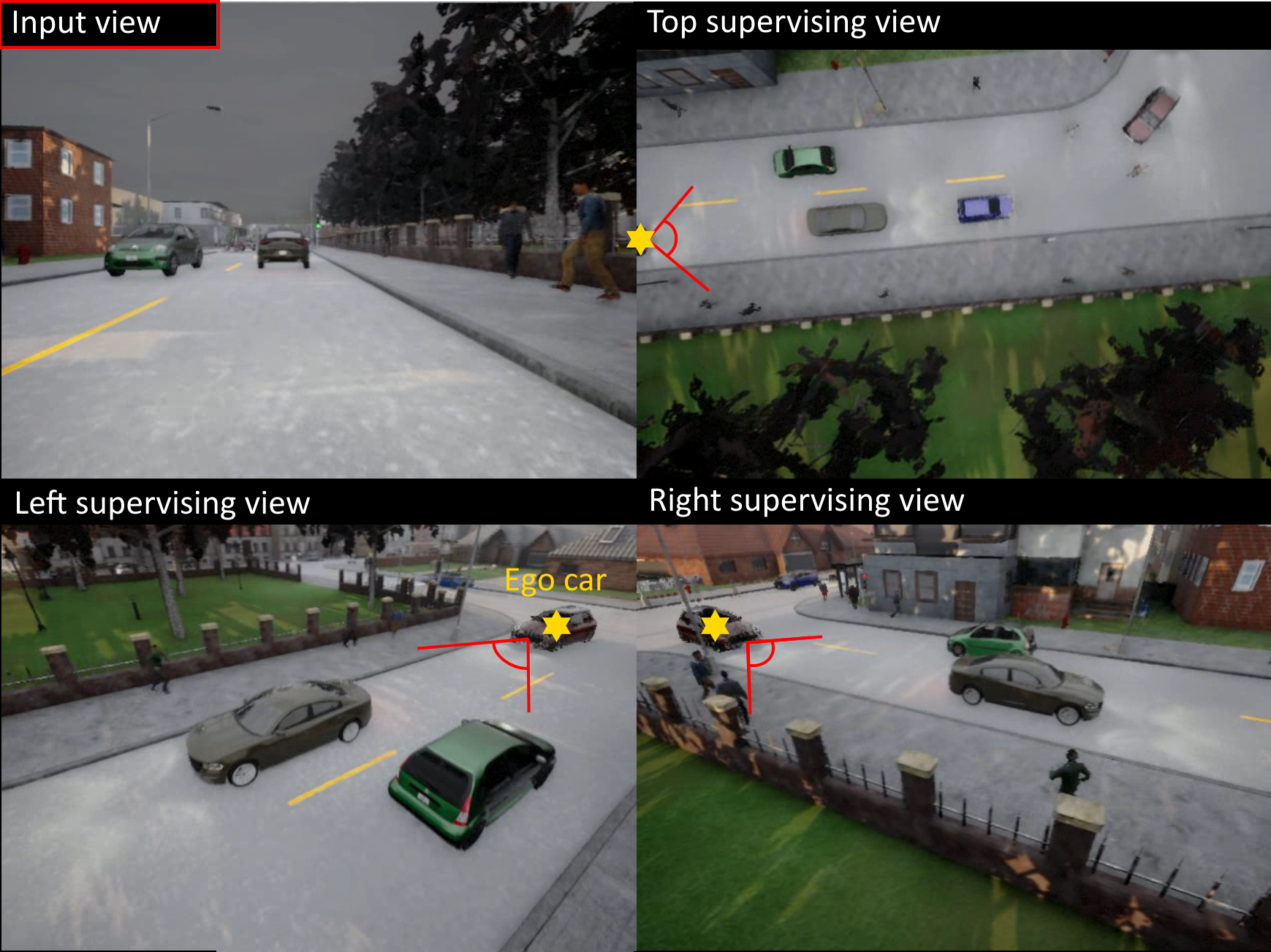

Some examples of the raw RGB views are shown here:

This is the dynamic merged point cloud, colored according to semantic category:

carla_merged_semantic_example.mp4

Note that while our paper simply calls the dataset CARLA, we refer to it as CARLA-4D from here on to avoid ambiguity.