Implementation for the paper Improved Soccer Action Spotting Using Both Audio and Video Streams.

This repository shows that adding the audio stream to a video-based model improves soccer action spotting performance.

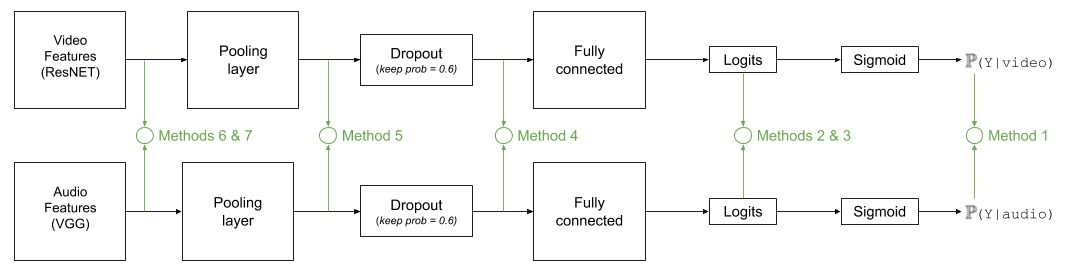

We tried several methods of merging visual and audio features within the baseline model provided by S. Giancola [1], as illustrated in the figure above.
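To make the idea of merging the two streams concrete, here is a minimal sketch of two generic fusion strategies: concatenating features before the model (early fusion) and blending class scores after it (late fusion). It is not the code behind the architectures in this repository. The function names, the `audio_weight` parameter, and the feature sizes (512-d video, 128-d audio, 40 time steps) are assumptions chosen for illustration.

```python
import numpy as np


def early_fusion(video_feats, audio_feats):
    """Concatenate video and audio features along the feature axis."""
    if video_feats.shape[0] != audio_feats.shape[0]:
        raise ValueError("both streams must have the same number of time steps")
    return np.concatenate([video_feats, audio_feats], axis=1)


def late_fusion(video_scores, audio_scores, audio_weight=0.5):
    """Blend class scores predicted separately from each stream."""
    return (1.0 - audio_weight) * video_scores + audio_weight * audio_scores


rng = np.random.default_rng(0)
# Hypothetical shapes: 40 time steps, 512-d video and 128-d audio features.
video = rng.normal(size=(40, 512))
audio = rng.normal(size=(40, 128))

fused = early_fusion(video, audio)
print(fused.shape)  # (40, 640)

# Hypothetical per-class scores from two single-stream models.
video_scores = np.array([0.2, 0.6, 0.2])
audio_scores = np.array([0.1, 0.8, 0.1])
blended = late_fusion(video_scores, audio_scores, audio_weight=0.5)
```

Early fusion lets the model learn interactions between the two modalities, while late fusion keeps the two streams independent until the final decision. The paper compares several merge points between these two extremes.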

In this paper, we propose a study on multi-modal (audio and video) action spotting and classification in soccer videos. Action spotting and classification are the tasks of finding the temporal anchors of events in a video and determining which events they are. This is an important application of general activity understanding. Here, we propose an experimental study on combining audio and video information at different stages of deep neural network architectures. We used the SoccerNet benchmark dataset, which contains annotated events for 500 soccer game videos from the Big Five European leagues. Through this work, we evaluated several ways to integrate the audio stream into video-only architectures. We observed an average absolute improvement in the mean Average Precision (mAP) metric of 7.43% for the action classification task and of 4.19% for the action spotting task.

- Bastien Vanderplaetse (bastien.vanderplaetse@umons.ac.be) - GitHub

- Stéphane Dupont (stephane.dupont@umons.ac.be) - GitHub

The SoccerNet dataset [1] and visual features were downloaded by following the instructions here.

Audio features were extracted by using the VGGish [2] implementation available here and can be downloaded here.

The VGGish weights used can be downloaded here.

- Python-3.6+

- Tensorflow-gpu-1.14

- Numpy

To train the network (action classification task):

python ClassificationMinuteBased.py --architecture AudioVideoArchi5 --training listgame_Train_300.npy --validation listgame_Valid_100.npy --testing listgame_Test_100.npy --featuresVideo ResNET --featuresAudio VGGish --PCA --network VLAD --tflog Model --VLAD_k 512 --WindowSize 20 --outputPrefix vlad-archi5-20sec --formatdataset 1

To test the network (action spotting task):

python ClassificationSecondBased.py --testing listgame_Test_100.npy --featuresVideo ResNET --featuresAudio VGGish --architecture AudioVideoArchi5 --network VLAD --VLAD_k 512 --WindowSize 20 --PCA --output VLAD-Archi5-20sec-Spotting

To compute average-mAP:

python compute_mAP.py

Our best model's weights will soon be available to download.
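For readers unfamiliar with the metric, the sketch below shows how a mean Average Precision can be computed from ranked class scores. It is a generic illustration and not the contents of `compute_mAP.py`. The function names are hypothetical, and the matching of predicted spots to ground-truth events within a temporal tolerance, which the spotting metric also needs, is not shown.

```python
import numpy as np


def average_precision(scores, labels):
    """AP for one class: mean precision at the rank of each true positive."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=int)
    if labels.sum() == 0:
        return float("nan")  # AP is undefined without positives
    order = np.argsort(-scores, kind="stable")  # highest score first
    hits = labels[order]
    precision_at_k = np.cumsum(hits) / np.arange(1, len(hits) + 1)
    return float((precision_at_k * hits).sum() / hits.sum())


def mean_average_precision(score_matrix, label_matrix):
    """Average the per-class AP over columns, skipping undefined classes."""
    score_matrix = np.asarray(score_matrix, dtype=float)
    label_matrix = np.asarray(label_matrix, dtype=int)
    aps = [
        average_precision(score_matrix[:, c], label_matrix[:, c])
        for c in range(score_matrix.shape[1])
    ]
    return float(np.nanmean(aps))


# Toy example: 4 samples, 2 classes (values are made up for illustration).
toy_scores = [[0.9, 0.1], [0.8, 0.7], [0.7, 0.2], [0.6, 0.9]]
toy_labels = [[1, 0], [0, 1], [1, 0], [0, 1]]
print(mean_average_precision(toy_scores, toy_labels))
```

In the toy example, the second class is ranked perfectly and the first class has one negative ranked above a positive, so the mean falls between the two per-class values.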

[1] Silvio Giancola, Mohieddine Amine, Tarek Dghaily, and Bernard Ghanem. SoccerNet: A scalable dataset for action spotting in soccer videos. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, June 2018. - [Paper] - [GitHub]

[2] Karen Simonyan and Andrew Zisserman. Very deep convolutional networks for large-scale image recognition. arXiv:1409.1556, September 2014. - [Paper]