A collection of Terraform modules that creates a generic data processing pipeline. This is a recurring infrastructure pattern in both academic and commercial settings. The codebase is designed to be modular, but care is needed when only a subset of the modules is used.

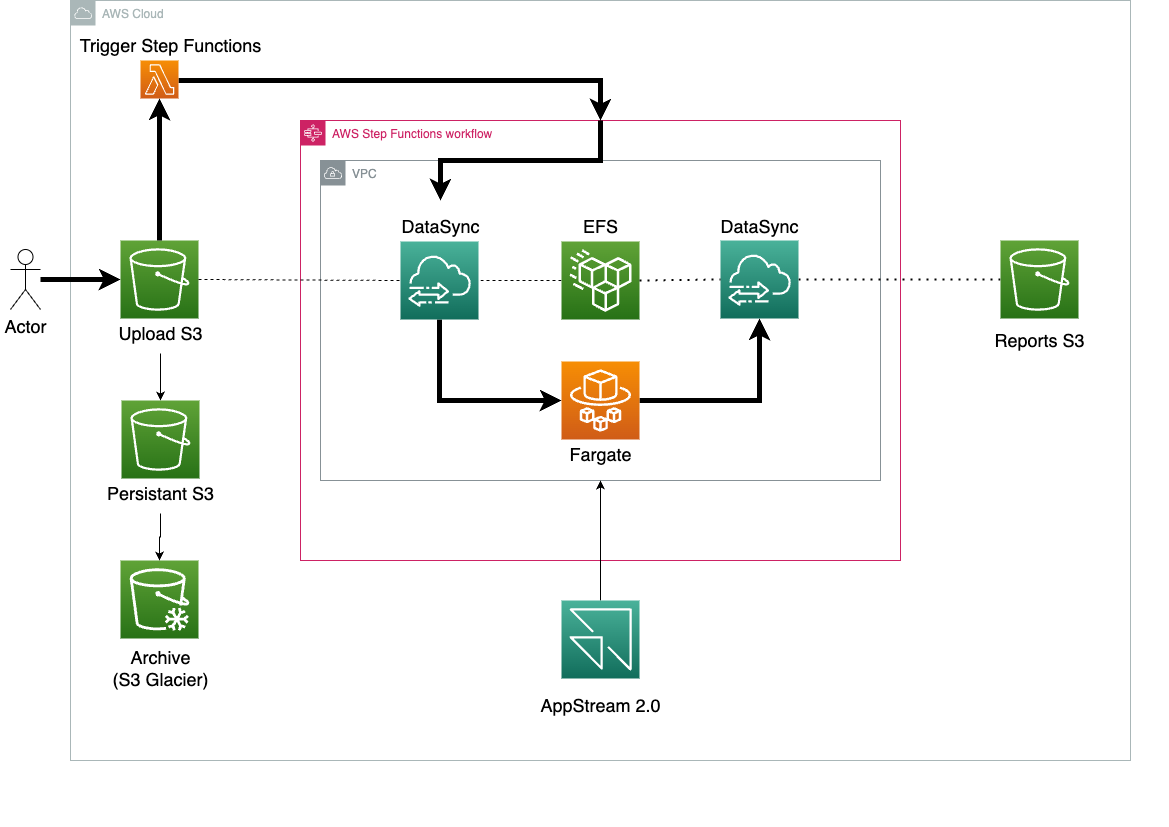

The containerised workflow consists of three high-level steps: upload a file (image or text-based), trigger an automatic workflow that processes the uploaded data, and return the resulting output. To capture this workflow in a modern serverless design, the following architecture was developed.

- An S3 bucket with intelligent storage options and a Lambda trigger is set up to receive the uploaded data. When data (file, image, etc.) arrives in the S3 bucket, a Lambda function is triggered which starts an AWS Step Functions execution.

- AWS Step Functions consists of three states:

  - A DataSync task for copying the contents of the upload S3 bucket to EFS.

  - AWS Batch, which launches an AWS Fargate containerized task.

  - A DataSync task to copy the resulting output of the Batch job from EFS to a reports S3 bucket.

- An S3 bucket, denoted as Reports S3 in the schematic figure, provides storage for the processed output from the container.

- AppStream 2.0 gives a user a virtual desktop within the VPC. This service is included so that quality control can be carried out on the containerized workflow.

- An AWS account.

- The AWS CLI installed.

- The Terraform CLI (1.3.9+) installed.

Ensure that your IAM credentials can be used to authenticate the Terraform AWS provider. Details can be found in the Terraform AWS provider documentation.

1. To enable AppStream 2.0, first create the appropriate stacks and fleets. This can be done through the management console. Make a note of the AppStream 2.0 image name.

2. Clone this GitHub repo.

3. Navigate to the cloned repo in the terminal and run the following commands:

   ```
   terraform init
   terraform validate
   terraform apply
   ```

4. When prompted for `as2_image_name`, pass the value from Step 1.

5. A complete list of the AWS services which will be deployed appears. Check this list before agreeing to the deployment (please be advised that this will incur a cost).

If this is the first deployment, please note that it will take some time.
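As an alternative to answering the interactive prompt, the required `as2_image_name` input can be supplied in a `terraform.tfvars` file in the repo root, which Terraform loads automatically. The following is a minimal sketch; the file is not part of the repository as described here, and the image name is a placeholder to be replaced with the value noted when setting up AppStream 2.0.

```hcl
# terraform.tfvars (hypothetical file, loaded automatically by Terraform)

# Placeholder value: replace with the AppStream 2.0 image name
# noted during the console set-up.
as2_image_name = "my-appstream-image"
```

With this file in place, `terraform apply` should no longer prompt for the image name.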

As stated, the Step Functions state machine consists of three main tasks:

- A DataSync task between the S3 uploads bucket and EFS.

- An AWS Batch job which launches a Fargate task.

- A DataSync task to copy data from EFS to the S3 reports bucket.

However, to ensure that each DataSync task finishes before the state machine moves to the next state, a polling and wait pattern has been implemented.
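To illustrate what a polling and wait pattern can look like, the sketch below defines a small state machine that starts a DataSync task, waits, checks the task execution status, and loops until the status is `SUCCESS` or `ERROR`. It is an illustration under stated assumptions, not the definition used in this repository: the resource name, both variables, the 30-second wait, and the choice of Step Functions AWS SDK integrations (rather than, say, Lambda functions) are all assumptions.

```hcl
# Hypothetical inputs; these names do not come from the repository.
variable "state_machine_role_arn" {
  type        = string
  description = "IAM role the state machine assumes (assumed to already exist)"
}

variable "upload_datasync_task_arn" {
  type        = string
  description = "ARN of the DataSync task copying the uploads bucket to EFS"
}

resource "aws_sfn_state_machine" "polling_sketch" {
  name     = "datasync-polling-sketch"
  role_arn = var.state_machine_role_arn

  definition = jsonencode({
    Comment = "Start a DataSync task, then poll until it finishes"
    StartAt = "StartDataSync"
    States = {
      # Kick off the DataSync task; the result contains TaskExecutionArn.
      StartDataSync = {
        Type       = "Task"
        Resource   = "arn:aws:states:::aws-sdk:datasync:startTaskExecution"
        Parameters = { TaskArn = var.upload_datasync_task_arn }
        Next       = "WaitForDataSync"
      }
      # Pause before each status check (interval is an assumption).
      WaitForDataSync = {
        Type    = "Wait"
        Seconds = 30
        Next    = "CheckDataSync"
      }
      # Look up the execution status, keeping only the fields the loop needs.
      CheckDataSync = {
        Type       = "Task"
        Resource   = "arn:aws:states:::aws-sdk:datasync:describeTaskExecution"
        Parameters = { "TaskExecutionArn.$" = "$.TaskExecutionArn" }
        ResultSelector = {
          "TaskExecutionArn.$" = "$.TaskExecutionArn"
          "Status.$"           = "$.Status"
        }
        Next = "IsDataSyncDone"
      }
      # Leave the loop on a terminal status, otherwise wait again.
      IsDataSyncDone = {
        Type = "Choice"
        Choices = [
          { Variable = "$.Status", StringEquals = "SUCCESS", Next = "DataSyncSucceeded" },
          { Variable = "$.Status", StringEquals = "ERROR", Next = "DataSyncFailed" }
        ]
        Default = "WaitForDataSync"
      }
      DataSyncSucceeded = { Type = "Succeed" }
      DataSyncFailed = {
        Type  = "Fail"
        Error = "DataSyncError"
        Cause = "The DataSync task execution reported an ERROR status"
      }
    }
  })
}
```

In a full pipeline the `DataSyncSucceeded` state would be replaced by the next step (for example, submitting the Batch job), and the same wait, check and choice loop would be repeated for the second DataSync task.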

The best way to test the deployment is to navigate to the uploads S3 bucket in the management console. From here you can upload an example file to the bucket. This action should automatically trigger the Step Functions state machine. To see this, open Step Functions in the management console, where a running execution should have started. Once the workflow has completed, the processed file will appear in the reports S3 bucket.

Cleaning up

To avoid incurring future charges, delete the resources, for example by running `terraform destroy` from the cloned repo.

Please be advised that to use AppStream 2.0 you will need to follow the console set-up at https://eu-west-2.console.aws.amazon.com/appstream2 before deploying the Terraform.

| Name | Version |
|---|---|
| terraform | >= 1.3.9 |
| aws | >= 4.9.0 |
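Expressed as Terraform configuration, the version requirements in the table above correspond to a block along the following lines. This is a sketch of the standard syntax; the repository's own file layout may differ.

```hcl
terraform {
  # Minimum Terraform CLI version, as listed in the requirements table.
  required_version = ">= 1.3.9"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = ">= 4.9.0" # Minimum AWS provider version from the table.
    }
  }
}
```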

No providers.

| Name | Source | Version |
|---|---|---|
| appstream | ./modules/appstream | n/a |
| batch | ./modules/batch | n/a |
| datasync | ./modules/datasync | n/a |
| efs | ./modules/efs | n/a |
| s3_reports | ./modules/s3_reports | n/a |
| s3_upload | ./modules/s3_upload | n/a |
| step_function | ./modules/step_function | n/a |
| vpc | ./modules/vpc | n/a |

No resources.

| Name | Description | Type | Default | Required |
|---|---|---|---|---|
| as2_desired_instance_num | Desired number of AS2 instances | number | 1 | no |
| as2_fleet_description | Fleet description | string | "ARC batch process fleet" | no |
| as2_fleet_display_name | Fleet display name | string | "ARC batch process fleet" | no |
| as2_fleet_name | Fleet name | string | "ARC-batch-fleet" | no |
| as2_image_name | AS2 image to deploy | string | n/a | yes |
| as2_instance_type | AS2 instance type | string | "stream.standard.medium" | no |
| as2_stack_description | Stack description | string | "ARC batch process stack" | no |
| as2_stack_display_name | Stack display name | string | "ARC batch process stack" | no |
| as2_stack_name | Stack name | string | "ARC-batch-stack" | no |
| compute_environments | Compute environments | string | "fargate" | no |
| compute_resources_max_vcpus | Maximum vCPU resources | number | 1 | no |
| container_image_url | Container image URL | string | "public.ecr.aws/docker/library/busybox:latest" | no |
| container_memory | Container memory resources | number | 2048 | no |
| container_vcpu | Container vCPU resources | number | 1 | no |
| efs_throughput_in_mibps | EFS provisioned throughput in MiB/s | number | 1 | no |
| efs_transition_to_ia_period | Lifecycle policy transition period to IA | string | "AFTER_7_DAYS" | no |
| region | The region to deploy into. | string | "eu-west-2" | no |
| solution_name | Overall name for the solution | string | "arc-batch" | no |
| vpc_cidr_block | The CIDR block for the VPC | string | "10.0.0.0/25" | no |
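
Any of the optional inputs above can be overridden without editing the modules. One way, sketched below, is a file ending in `.auto.tfvars` in the repo root, which Terraform loads automatically. The file name and every value shown are illustrative assumptions, not recommendations from the repository; in particular the container image URL is a placeholder.

```hcl
# overrides.auto.tfvars (hypothetical file with illustrative values)

# Run a custom workflow container instead of the default busybox image.
# Placeholder URL: replace with a real image.
container_image_url = "public.ecr.aws/example/my-workflow:latest"

# Give the Fargate task more resources than the defaults (1 vCPU, 2048).
container_vcpu   = 2
container_memory = 4096

# Allow the Batch compute environment to scale beyond one vCPU.
compute_resources_max_vcpus = 4

# Move EFS files to Infrequent Access later than the 7-day default.
efs_transition_to_ia_period = "AFTER_30_DAYS"
```

Inputs that are not listed in the file keep the defaults shown in the table.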

No outputs.