Rundeck Pro on EKS

About

This workshop is a walkthrough of deploying Rundeck Pro. After working through this lab you will have a web accessable deployment of Rundeck running on Amazon EKS Kubernetes, backed by MySQL DB and EFS. This is a workable architecture that can easily be used as a basis for deploying a fully HA production Rundeck cluster with further consideration for cluster scaling and object storage.

Assumptions

-

You already have an AWS account with typical permissions to create EC2 resources, EKS clusters, EFS file systems

-

A rundeck pro license is currently issued to you, or you expect to receive one while completing this workshop.

Part 1 - Requirements

If you are currently already developing with EKS and Kubernetes, feel free to skip to Part 2. Otherwise, visit Setting up your EKS Dev Environment for instructions on how to set up your machine with the proper tools needed to continue this lab.

Part 2 - Deploy EKS Cluster

In order to deploy the Docker image for Rundeck Pro, we need an EKS cluster to work with. There are two options for us for backing an EKS cluster, Fargate, or your own managed VPC nodes. Since Rundeck Pro needs file persistence in order to write logs and configuration data, we are opting for a managed EC2 backend deployment to allow persistence with EFS.

-

Download or copy your AWS public SSH key to your current directory and make sure the permissions are set securely.

$ cp ~/eks.pub . && chmod 600 eks.pub

-

Create an EKS cluster in your region, with a node size capable of running Rundeck Pro’s recommended resources

$ eksctl create cluster \ --name rundeckpro \ --version 1.18 \ --region us-east-2 \ --nodegroup-name linux-nodes \ --node-type t2.medium \ --nodes 1 \ --nodes-min 1 \ --nodes-max 1 \ --ssh-access \ --ssh-public-key eks.pub \ --managed

-

After 10-15 minutes, test that Kubectl has been configured and see the ready status of your cluster nodes.

$ kubectl get nodes NAME STATUS ROLES AGE VERSION ip-192-168-10-182.us-east-2.compute.internal Ready <none> 3m46s v1.17.9-eks-4c6976

-

Install the AWS EFS CSI Driver for mounting EFS volumes in pods

$ kubectl apply -k "github.com/kubernetes-sigs/aws-efs-csi-driver/deploy/kubernetes/overlays/stable/ecr/?ref=release-1.0"

-

Verifying the EFS CSI Driver

$kubectl get pod -n kube-system NAME READY STATUS RESTARTS AGE efs-csi-node-cnv8j 3/3 Running 0 49m

Part 3 - Setup Cluster Ingress

To have access to Rundeck via the browser, we need to load balance to the running container within Kubernetes. To do this we create an Application Load Balancer. Fortunetly, AWS provides an ALB controller to make this easy for us which integrates nicely with EKS.

-

Create an IAM OIDC provider and associate it with your cluster

$ eksctl utils associate-iam-oidc-provider \ --region us-east-2 \ --cluster rundeckpro \ --approve -

Download an IAM policy for the ALB Ingress Controller pod that allows it to make calls to AWS APIs on your behalf

curl -o iam-policy.json https://raw.githubusercontent.com/kubernetes-sigs/aws-alb-ingress-controller/v1.1.8/docs/examples/iam-policy.json

-

Create an IAM policy called ALBIngressControllerIAMPolicy using the policy downloaded in the previous step. Take note of the ARN policy string that was created for a following step.

$ aws iam create-policy \ --policy-name ALBIngressControllerIAMPolicy \ --policy-document file://iam-policy.json -

Create a Kubernetes service account named alb-ingress-controller in the kube-system namespace, a cluster role, and a cluster role binding for the ALB Ingress Controller to use with the following command

kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/aws-alb-ingress-controller/v1.1.8/docs/examples/rbac-role.yaml

-

Create an IAM role for the ALB Ingress Controller and attach the role to the service account created in the previous step. The command that follows only works for clusters that were created with eksctl. Note use your ARN from the previous step.

eksctl create iamserviceaccount \ --region us-east-2 \ --name alb-ingress-controller \ --namespace kube-system \ --cluster rundeckpro \ --attach-policy-arn arn:aws:iam::111122223333:policy/ALBIngressControllerIAMPolicy \ --override-existing-serviceaccounts \ --approve -

Deploy your ALB Controller, note that initially it will error until the subsequent steps

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/aws-alb-ingress-controller/v1.1.8/docs/examples/alb-ingress-controller.yaml

-

Edit the ALB Deployment with your cluster name, your EKS VPC, and region

kubectl edit deployment.apps/alb-ingress-controller -n kube-system ... spec: containers: - args: - --ingress-class=alb - --cluster-name=rundeckpro - --aws-vpc-id=vpc-03468a8157edca5bd - --aws-region=us-east-2 -

Confirm that the ALB Ingress Controller is running with the following command.

$ kubectl get pods -n kube-system NAME READY STATUS RESTARTS AGE alb-ingress-controller-646d767ccf-4h624 1/1 Running 0 12s

-

Create the Rundeck Service

$ kubectl apply -f rundeck-service.yml service/service-rundeck created

-

Create the ALB Ingress

$ kubectl apply -f rundeck-ingress.yml ingress.extensions/rundeck-ingress created

-

Obtain the ALB Ingress address

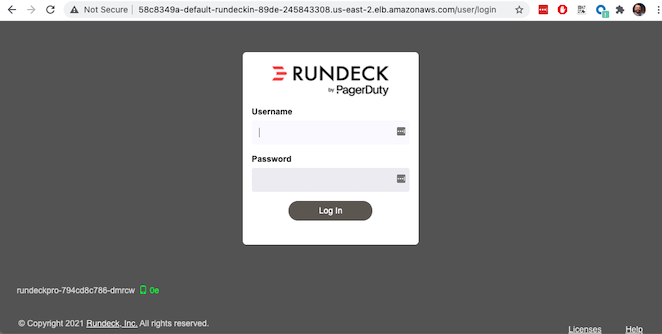

$ kubectl get ingress NAME CLASS HOSTS ADDRESS PORTS AGE rundeck-ingress <none> * 58c8349a-default-rundeckin-89de-245843308.us-east-2.elb.amazonaws.com 80 3d1h

-

Add the Ingress URL to the Rundeck Deployment manifest replacing RUNDECK_INGRESS_ELB_ADDRESS with the value above

- name: RUNDECK_GRAILS_URL value: "http://RUNDECK_INGRESS_ELB_ADDRESS"

Part 4 - Setup EFS

-

Get your VPC ID for your cluster (again)

$ aws eks describe-cluster --name rundeckpro --query "cluster.resourcesVpcConfig.vpcId" --output text vpc-015b916167f38076a

-

Locate the CIDR range for your cluster

$ aws ec2 describe-vpcs --vpc-ids vpc-015b916167f38076a --query "Vpcs[].CidrBlock" --output text 192.168.0.0/16

-

Create an Amazon EFS file system for your Amazon EKS cluster following Steps 3 & 4 on AWS Docs

-

Verify your File system ID

$ aws efs describe-file-systems --query "FileSystems[*].FileSystemId" --output text fs-41dd9839

-

The container runs as user 'rundeck' with uid=1000(rundeck) gid=0(root) groups=0(root),27(sudo), and this impacts writing to the EFS volume. Create an EFS Application access point for the 'rundeck' user to write to our file mount. Make note of the "AccessPointId" for each execution of the command for use in the PV deployment.

$ aws efs create-access-point --file-system-id fs-41dd9839 --posix-user Uid=1000,Gid=0 --root-directory "Path=/home/rundeck/server/logs, CreationInfo={OwnerUid=1000,OwnerGid=0,Permissions=775}" $ aws efs create-access-point --file-system-id fs-41dd9839 --posix-user Uid=1000,Gid=0 --root-directory "Path=/home/rundeck/server/data, CreationInfo={OwnerUid=1000,OwnerGid=0,Permissions=775}" -

Create a file systems access point for the MySQL container. Make note of the "AccessPointId" for use in the PV deployment.

$ aws efs create-access-point --file-system-id fs-9481c6ec --posix-user Uid=0,Gid=0 --root-directory "Path=/var/lib/mysql, CreationInfo={OwnerUid=0,OwnerGid=0,Permissions=775}" -

Deploy the StorageClass

$ kubectl apply -f efs-storageclass.yml storageclass.storage.k8s.io/efs-sc created

-

View Storage Classes

$ kubectl get storageclass NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE efs-sc efs.csi.aws.com Delete Immediate false 13m gp2 (default) kubernetes.io/aws-ebs Delete WaitForFirstConsumer false 4h26m

Part 5 - Deploy Volumes

-

Update the rundeck-pv.yml csi:volumeHandles with your coresponding EFS Filesystem ID and EFS Accesspoint IDs, RUNDECK_EFS_ID::RUNDECK_EFS_ACCESSPOINT_DATA, RUNDECK_EFS_ID::RUNDECK_EFS_ACCESSPOINT_LOGS:

apiVersion: "v1" kind: "PersistentVolume" metadata: name: "rundeck-pv-data" spec: capacity: storage: "5Gi" accessModes: - "ReadWriteMany" persistentVolumeReclaimPolicy: Retain storageClassName: efs-sc csi: driver: efs.csi.aws.com volumeHandle: fs-41dd9839::fsap-06c7a7f26e8436dc8 ... apiVersion: "v1" kind: "PersistentVolume" metadata: name: "rundeck-pv-logs" spec: capacity: storage: "5Gi" accessModes: - "ReadWriteMany" persistentVolumeReclaimPolicy: Retain storageClassName: efs-sc csi: driver: efs.csi.aws.com volumeHandle: fs-41dd9839::fsap-0df507dac9b21a7a4 ... -

Deploy the Rundeck PV and PVCs

$ kubectl apply -f rundeck-pv.yml persistentvolume/rundeck-pv-data created persistentvolumeclaim/rundeck-pv-claim-data created persistentvolume/rundeck-pv-logs created persistentvolumeclaim/rundeck-pv-claim-logs created

-

Deploy the MySQL PV and PVCs

$ kubectl apply -f mysql-pv.yml persistentvolume/mysql-pv created persistentvolumeclaim/mysql-pv-claim created

-

Check the status of your Volumes and Claims

$ kubectl get pv NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE rundeck-pv-data 5Gi RWX Retain Bound default/rundeck-pv-claim-data efs-sc 2d14h rundeck-pv-logs 5Gi RWX Retain Bound default/rundeck-pv-claim-logs efs-sc 2d14h rundeck-pv-mysql 3Gi RWX Retain Bound default/rundeck-pv-claim-mysql efs-sc 2d19h $ kubectl get pvc NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE rundeck-pv-claim-data Bound rundeck-pv-data 5Gi RWX efs-sc 2d14h rundeck-pv-claim-logs Bound rundeck-pv-logs 5Gi RWX efs-sc 2d14h rundeck-pv-claim-mysql Bound rundeck-pv-mysql 3Gi RWX efs-sc 2d19h

Part 6 - Deploy MySQL

-

Create MySQL Database Password

$ echo -n 'rundeck123.' > ./password $ kubectl create secret generic mysql-rundeckuser --from-file=./password

-

Deploy MySQL

$ kubectl apply -f mysql-deployment.yaml

Part 7 - Deploy Rundeck

-

Add rundeck License key as secret

$ kubectl create secret generic rundeckpro-license --from-file=./data/rundeckpro-license.key

-

Create Default Rundeck ACL from secrets

$ kubectl create secret generic rundeckpro-admin-acl --from-file=./data/admin-role.aclpolicy

-

Deploy Rundeck

$ kubectl apply -f rundeck-deployment.yaml

-

If all goes well you should see output as such:

$ kubectl get all NAME READY STATUS RESTARTS AGE pod/mysql-7575f75b59-wkh48 1/1 Running 0 2d14h pod/rundeckpro-794cd8c786-dmrcw 1/1 Running 0 2d14h NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE service/kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 3d3h service/mysql ClusterIP 10.100.214.8 <none> 3306/TCP 2d23h service/service-rundeck NodePort 10.100.199.86 <none> 80:31983/TCP 3d1h NAME READY UP-TO-DATE AVAILABLE AGE deployment.apps/mysql 1/1 1 1 2d20h deployment.apps/rundeckpro 1/1 1 1 2d14h NAME DESIRED CURRENT READY AGE replicaset.apps/mysql-7575f75b59 1 1 1 2d20h replicaset.apps/rundeckpro-794cd8c786 1 1 1 2d14h

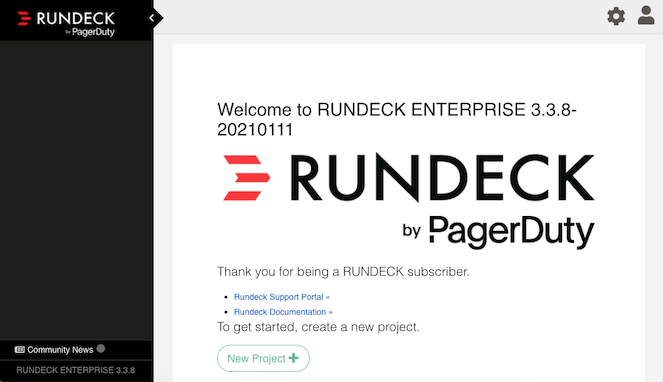

After a few minutes, open the browser and connect to your instance on the ALB address, if prompted for a password, use the default for admin.

Congratulations! You have deployed a stateful Rundeck cluster with HA on EKS backed by EFS

Next Steps: Learn how to use Rundeck