rfvs-regression

The goal of rfvs-regression is to compare random forest variable selection techniques for continuous outcomes.

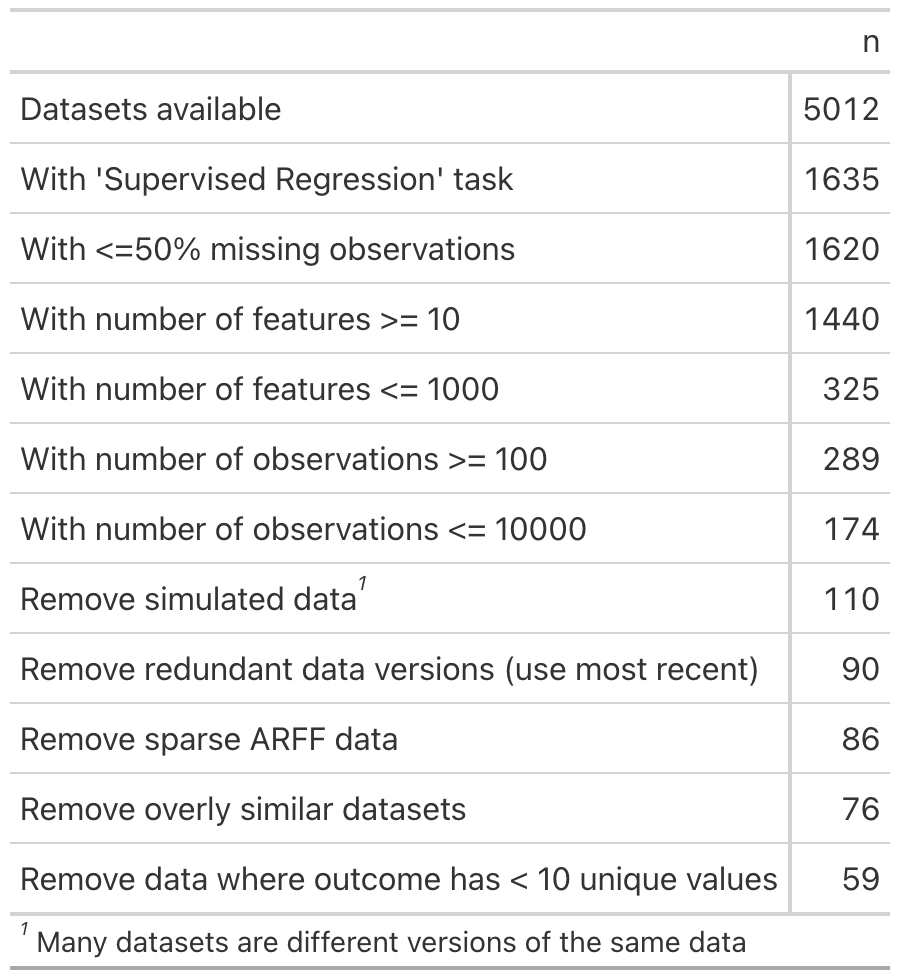

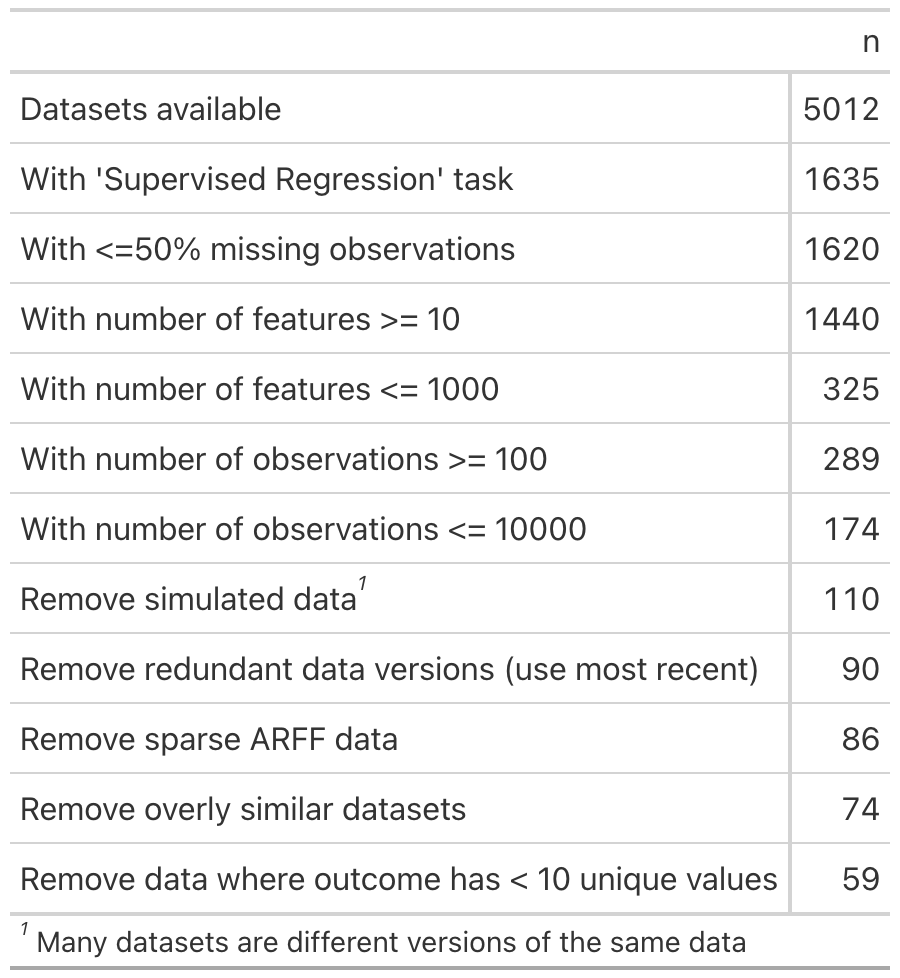

Datasets included

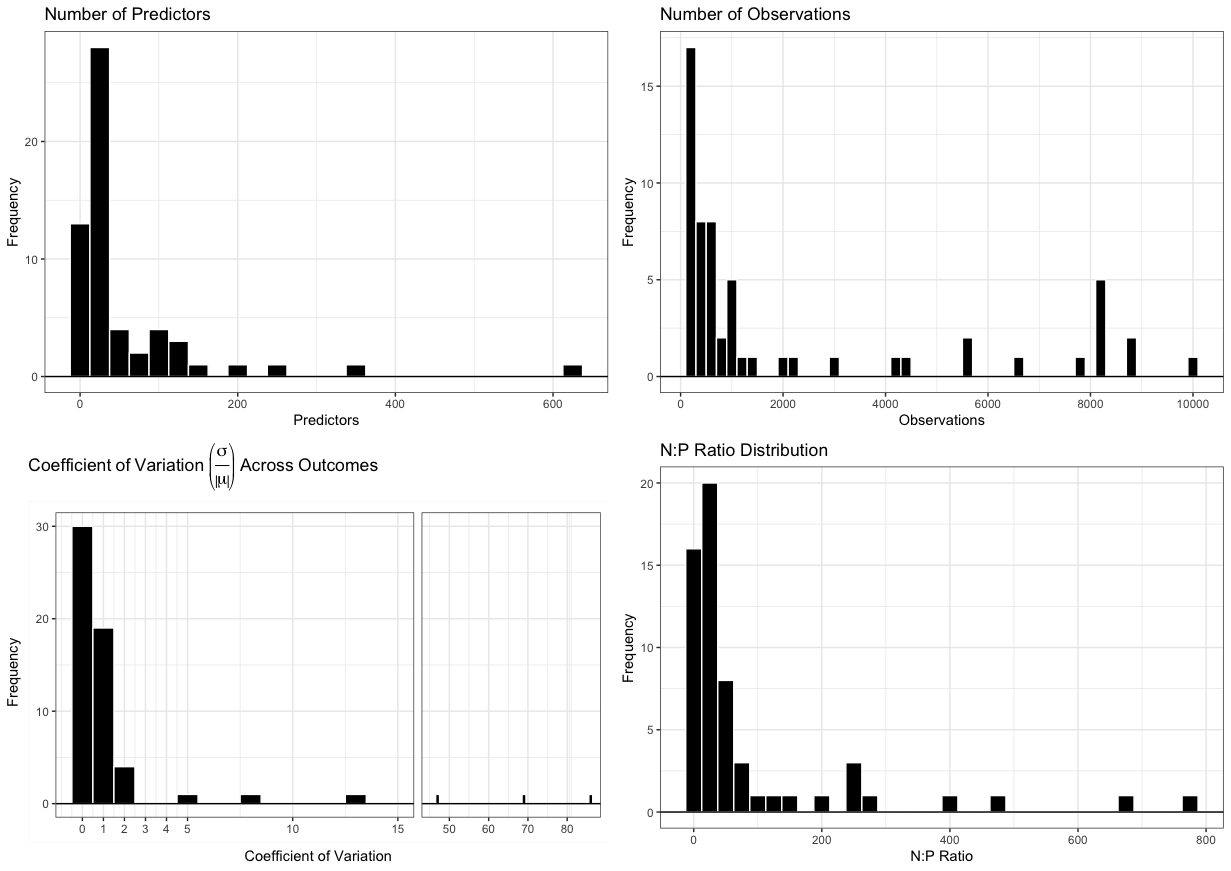

Central illustration

The experiment we ran involves three steps:

- Select variables with a given method.

- Fit a random forest to the data, including only selected variables.

- Evaluate prediction accuracy of the forest in held-out data.

Note: We use both axis-based and oblique random forests for regression in step 2.
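The three steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the code used in the experiment: the data are simulated, the selector is a simple correlation filter standing in for methods such as jiang or aorsf, and the forest is a small axis-based toy (no oblique splits). All function names here (`select_variables`, `fit_forest`, `r_squared`, and so on) are hypothetical.

```python
import random
from statistics import mean

def pearson(a, b):
    """Pearson correlation between two equal-length numeric lists."""
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5

def select_variables(X, y, threshold=0.3):
    """Step 1 (stand-in selector): keep columns with |correlation to y| > threshold."""
    return [j for j in range(len(X[0]))
            if abs(pearson([row[j] for row in X], y)) > threshold]

def grow_tree(X, y, rng, depth=4, min_leaf=5):
    """Grow one regression tree; each split considers a random subset of columns."""
    if depth == 0 or len(y) < 2 * min_leaf:
        return mean(y)  # leaf: predict the mean outcome
    p = len(X[0])
    best = None  # (sum of squared errors, column, threshold)
    for j in rng.sample(range(p), max(1, p // 3)):
        for t in rng.sample([row[j] for row in X], min(10, len(X))):
            left = [yi for row, yi in zip(X, y) if row[j] <= t]
            right = [yi for row, yi in zip(X, y) if row[j] > t]
            if len(left) < min_leaf or len(right) < min_leaf:
                continue
            ml, mr = mean(left), mean(right)
            sse = sum((v - ml) ** 2 for v in left) + sum((v - mr) ** 2 for v in right)
            if best is None or sse < best[0]:
                best = (sse, j, t)
    if best is None:
        return mean(y)
    _, j, t = best
    lo = [(row, yi) for row, yi in zip(X, y) if row[j] <= t]
    hi = [(row, yi) for row, yi in zip(X, y) if row[j] > t]
    return (j, t,
            grow_tree([r for r, _ in lo], [v for _, v in lo], rng, depth - 1, min_leaf),
            grow_tree([r for r, _ in hi], [v for _, v in hi], rng, depth - 1, min_leaf))

def predict_tree(node, row):
    """Walk from the root to a leaf and return the leaf's prediction."""
    while isinstance(node, tuple):
        j, t, left, right = node
        node = left if row[j] <= t else right
    return node

def fit_forest(X, y, n_trees=50, seed=0):
    """Step 2: fit a toy axis-based forest on bootstrap samples; returns a predict function."""
    rng = random.Random(seed)
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(y)) for _ in y]  # bootstrap sample of rows
        trees.append(grow_tree([X[i] for i in idx], [y[i] for i in idx], rng))
    return lambda rows: [mean(predict_tree(t, r) for t in trees) for r in rows]

def r_squared(y_true, y_pred):
    """Step 3: R-squared of predictions on held-out data."""
    ybar = mean(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - ybar) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot

# Simulated data (an assumption for illustration): columns 0 and 1 carry signal, 2-5 are noise.
data_rng = random.Random(1)

def simulate(n):
    X = [[data_rng.uniform(-1, 1) for _ in range(6)] for _ in range(n)]
    y = [3 * row[0] - 2 * row[1] + data_rng.gauss(0, 0.1) for row in X]
    return X, y

X_train, y_train = simulate(200)
X_test, y_test = simulate(100)

selected = select_variables(X_train, y_train)             # step 1
keep = lambda X: [[row[j] for j in selected] for row in X]
predict = fit_forest(keep(X_train), y_train)              # step 2
r2 = r_squared(y_test, predict(keep(X_test)))             # step 3
print("selected columns:", selected, "held-out R^2:", round(r2, 3))
```

Swapping a different function in for `select_variables` while keeping steps 2 and 3 fixed is what makes the held-out accuracy comparable across selection methods.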

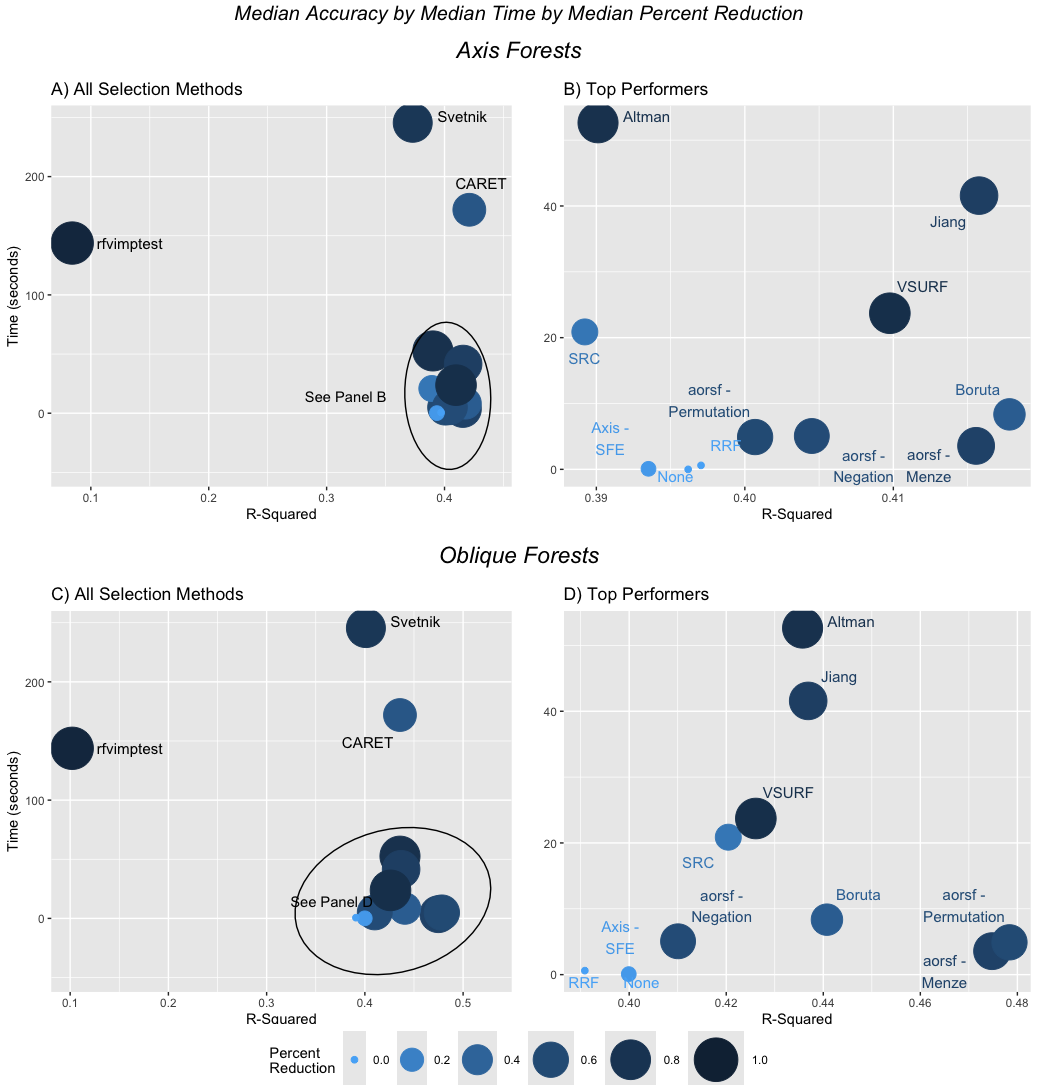

We assume that better variable selection leads to better prediction accuracy. Results from the experiment are below.

Similar results, but using mean and standard error

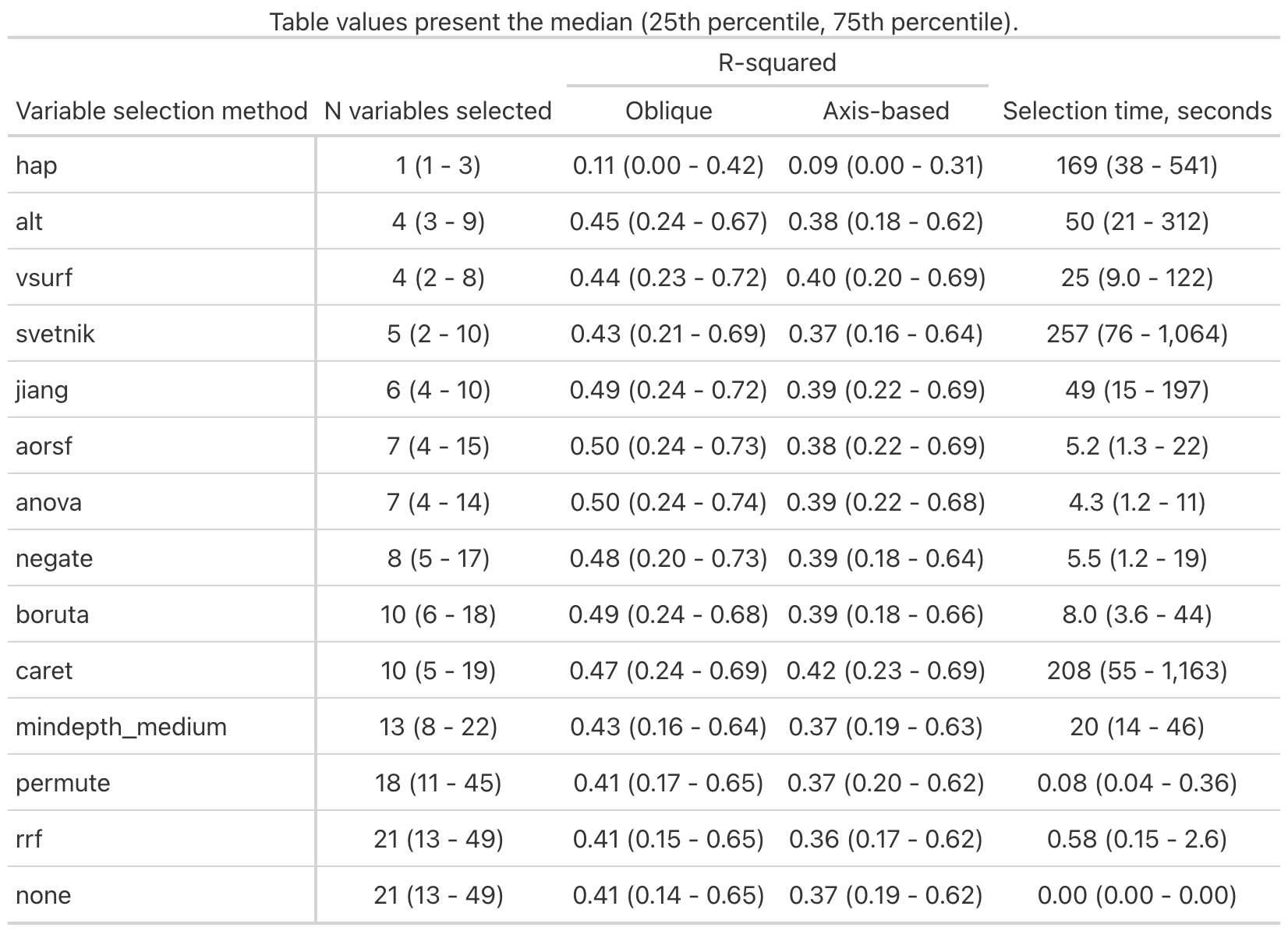
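For reference, a mean and standard error summary of this kind can be computed as sketched below. The scores are made-up values for illustration only, not results from the experiment, and `mean_and_se` is a hypothetical helper name.

```python
from statistics import mean, stdev

def mean_and_se(scores):
    """Return the mean and its standard error (sample SD divided by sqrt(n))."""
    n = len(scores)
    return mean(scores), stdev(scores) / n ** 0.5

# Made-up held-out accuracy values from three hypothetical replicates.
example_scores = [0.5, 0.6, 0.7]
avg, se = mean_and_se(example_scores)
print(round(avg, 3), round(se, 3))
```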

Takeaways:

- Variable selection method `jiang` has consistently high prediction accuracy but scales poorly to large datasets in terms of computational efficiency. E.g., the max time that this method required was 2 days, 23 hours, 40 minutes, 9 seconds.

- Variable selection method `aorsf` has very high prediction accuracy when the downstream model is an oblique random forest, and does not become computationally intractable with larger data. E.g., the max time this method required was 1 hour, 18 minutes, 26 seconds.