[ACM MM-23] Deep Image Harmonization in Dual Color Spaces

This is the official repository for the following paper:

Deep Image Harmonization in Dual Color Spaces [arXiv]

Linfeng Tan, Jiangtong Li, Li Niu, Liqing Zhang

Accepted by ACM MM 2023.

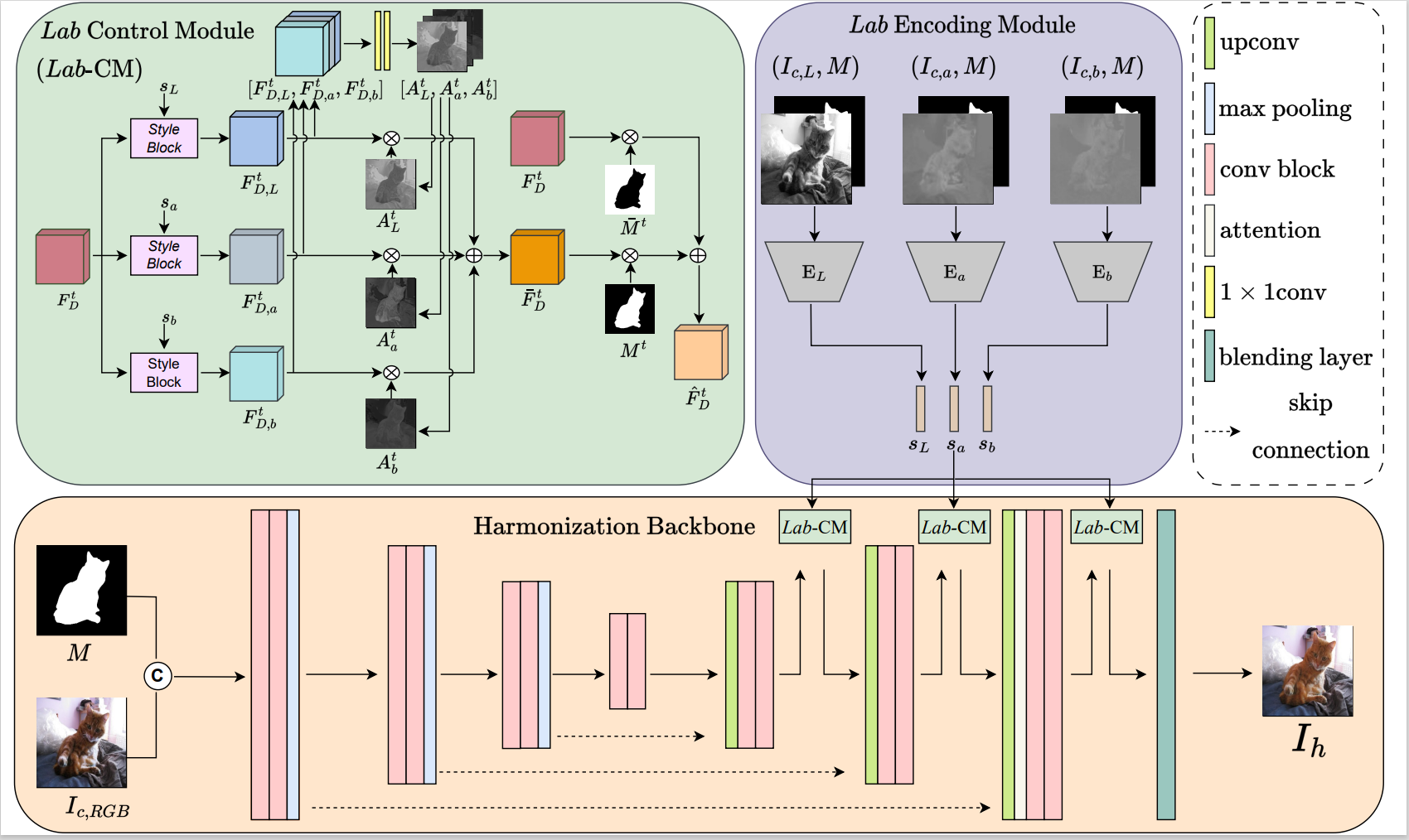

Image harmonization is an essential step in image composition that adjusts the appearance of the composite foreground to address the inconsistency between foreground and background. Existing methods primarily operate in the correlated $RGB$ color space, leading to entangled features and limited representation ability. In contrast, a decorrelated color space ($Lab$) has decorrelated channels that provide disentangled color and illumination statistics. In this paper, we explore image harmonization in dual color spaces, which supplements entangled $RGB$ features with disentangled $L$, $a$, $b$ features to alleviate the workload in the harmonization process. The network comprises an $RGB$ harmonization backbone, an $Lab$ encoding module, and an $Lab$ control module. The backbone is a U-Net that translates the composite image into the harmonized image. Three encoders in the $Lab$ encoding module extract three control codes independently from the $L$, $a$, $b$ channels, which are used to manipulate the decoder features in the harmonization backbone via the $Lab$ control module.
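To make the contrast between the two color spaces concrete, here is a minimal sketch of a per-pixel sRGB to $Lab$ conversion using the standard sRGB (D65) formulas. It is not taken from this repository: the function name `srgb_to_lab` is hypothetical, and the actual code may rely on a library conversion instead. The example shows that changing only the brightness of a gray pixel moves all three $RGB$ channels at once, while in $Lab$ only $L$ changes and $a$, $b$ stay near zero.

```python
def srgb_to_lab(r, g, b):
    """Convert one sRGB pixel (components in [0, 1]) to CIE Lab, D65 white point.

    Hypothetical helper for illustration; not part of the DucoNet code base.
    """
    def to_linear(c):
        # Undo the sRGB gamma encoding.
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = to_linear(r), to_linear(g), to_linear(b)

    # Linear RGB -> CIE XYZ (sRGB primaries, D65).
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl

    def f(t):
        # Piecewise cube-root function from the CIE Lab definition.
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    # Normalize by the D65 reference white before applying f.
    fx, fy, fz = f(x / 0.95047), f(y / 1.0), f(z / 1.08883)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


# Two gray pixels that differ only in brightness.
dark_gray = srgb_to_lab(0.2, 0.2, 0.2)
light_gray = srgb_to_lab(0.8, 0.8, 0.8)
print("dark gray  (L, a, b):", dark_gray)
print("light gray (L, a, b):", light_gray)
```

In this sketch the illumination change is carried by a single channel ($L$), which is the kind of disentangled statistic the abstract refers to.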

Please refer to iSSAM for guidance on setting up the environment.

- Clone this repo:

git clone https://github.com/bcmi/DucoNet-Image-Harmonization.git

cd ./DucoNet-Image-Harmonization

- Download the iHarmony4 dataset, and configure the paths to the datasets in config.yml.

- Install PyTorch and dependencies from http://pytorch.org.

- Install Python requirements:

pip install -r requirements.txt

If you want to train DucoNet on the iHarmony4 dataset, you can run this command:

## for low-resolution

python train.py models/DucoNet_256.py --workers=8 --gpus=0,1 --exp-name=DucoNet_256 --batch-size=64

## for high-resolution

python train.py models/DucoNet_1024.py --workers=8 --gpus=2,3 --exp-name=DucoNet_1024 --batch-size=4

We have also provided some commands in "train.sh" for your convenience.

You can download the pretrained models we released from Google Drive or Baidu Cloud, then run the following command to test them:

python scripts/evaluate_model.py DucoNet ./checkpoints/last_model/DucoNet256.pth \
--resize-strategy Fixed256 \
--gpu 0

#python scripts/evaluate_model.py DucoNet ./checkpoints/last_model/DucoNet1024.pth \
#--resize-strategy Fixed1024 \
#--gpu 1 \
#--datasets HAdobe5k1

We have also provided some commands in "test.sh" for your convenience.

We test DucoNet on the iHarmony4 dataset at image size 256×256 and on the HAdobe5k dataset at image size 1024×1024. We report results on three evaluation metrics: MSE, fMSE, and PSNR. We have also released the pretrained models corresponding to these results; you can download them from the corresponding links.

| Image Size | fMSE | MSE | PSNR | Google Drive | Baidu Cloud |
|---|---|---|---|---|---|
| 256 | 212.53 | 18.47 | 39.17 | Google Drive | Baidu Cloud |
| 1024 | 80.69 | 10.94 | 41.37 | Google Drive | Baidu Cloud |
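For readers unfamiliar with the three metrics, the sketch below shows how they are commonly defined in image harmonization work, assuming pixel values in the 0 to 255 range and a binary foreground mask. The function names are hypothetical, images are flattened to plain lists for brevity, and the repository's evaluation script may differ in details such as per-image averaging.

```python
import math


def mse(pred, target):
    """Mean squared error over all pixels (values in 0..255)."""
    diffs = [(p - t) ** 2 for p, t in zip(pred, target)]
    return sum(diffs) / len(diffs)


def fmse(pred, target, mask):
    """Foreground MSE: squared error averaged over masked pixels only."""
    diffs = [(p - t) ** 2 for p, t, m in zip(pred, target, mask) if m]
    return sum(diffs) / len(diffs)


def psnr(pred, target, peak=255.0):
    """Peak signal-to-noise ratio in dB, derived from the full-image MSE."""
    err = mse(pred, target)
    return float("inf") if err == 0 else 10 * math.log10(peak ** 2 / err)


# Toy 4-pixel image: only the single foreground pixel differs from the target.
target = [100, 100, 100, 100]
pred = [110, 100, 100, 100]
mask = [1, 0, 0, 0]

print("MSE :", mse(pred, target))
print("fMSE:", fmse(pred, target, mask))
print("PSNR:", psnr(pred, target))
```

Because the error is concentrated in the foreground while MSE averages over the whole image, fMSE is typically larger than MSE, which is consistent with the numbers in the table.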

Our code borrows heavily from iSSAM and a PyTorch implementation of StyleGAN2.

If you are interested in our work, please consider citing the following:

@article{tan2023deep,
  title={Deep Image Harmonization in Dual Color Spaces},
  author={Tan, Linfeng and Li, Jiangtong and Niu, Li and Zhang, Liqing},
  journal={arXiv preprint arXiv:2308.02813},
  year={2023}
}