This is an unofficial implementation of the paper "ObjectStitch: Object Compositing with Diffusion Model", CVPR 2023.

Following ObjectStitch, our implementation takes masked foregrounds as input and uses both class tokens and patch tokens as conditional embeddings. Since ObjectStitch did not release its training dataset, we train our models on the large-scale public dataset Open-Images.

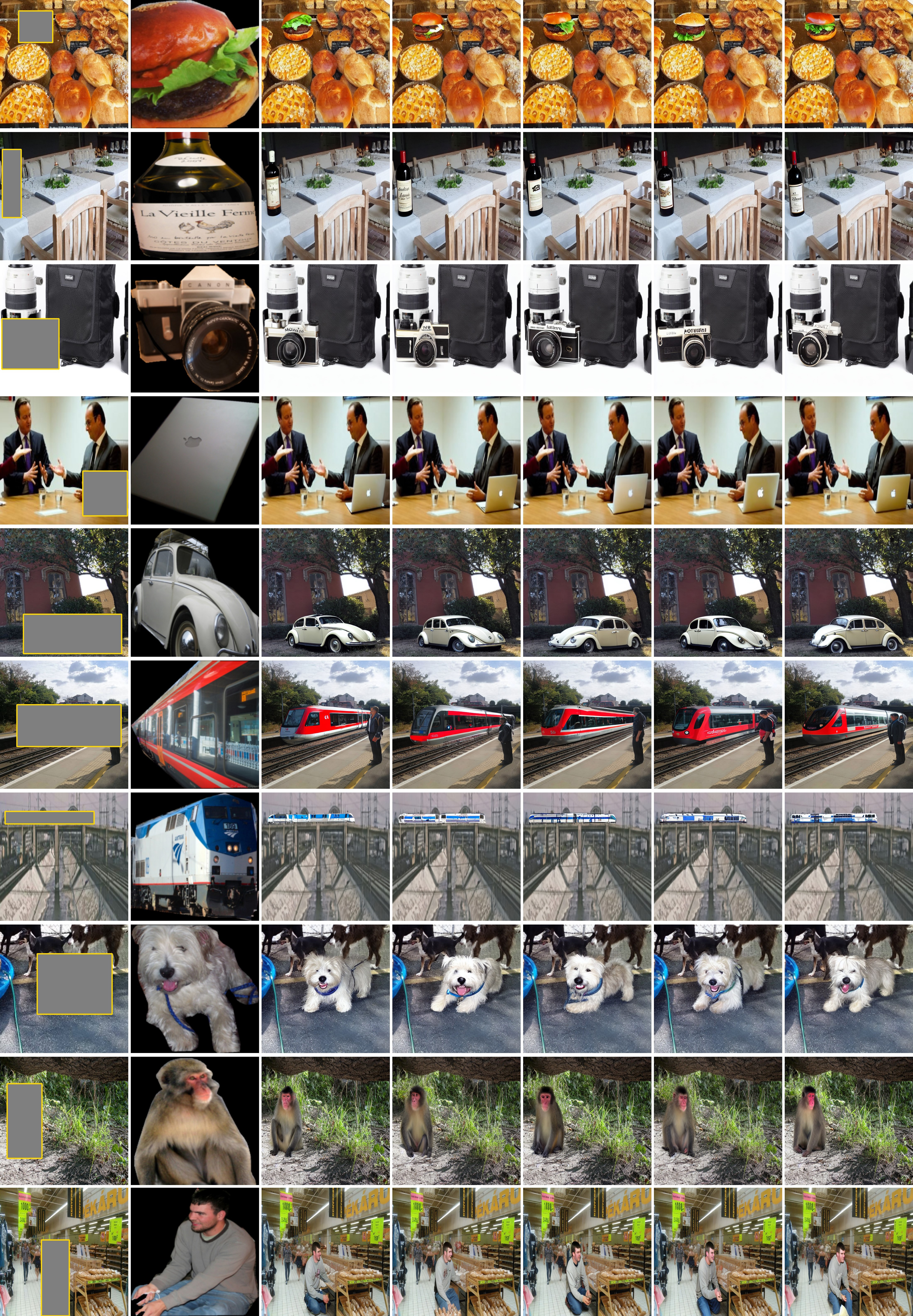

ObjectStitch is very robust and adept at adjusting the pose/viewpoint of the inserted foreground object to suit the background. However, details may be lost or altered for complex or rare objects.

For better detail preservation and controllability, please refer to our ControlCom and MureObjectStitch, both of which have been integrated into our image composition toolbox libcom (https://github.com/bcmi/libcom). You are welcome to visit and try it \(^▽^)/

Note that the foreground object should fill the provided foreground image: its width and height should extend to the image borders (see our examples). Otherwise, performance will be severely affected.
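If your foreground object does not already fill its image, one way to prepare it is to crop both the image and its mask to the tight bounding box of the mask. The sketch below assumes the image is an `H x W x C` NumPy array and the mask an `H x W` array where nonzero pixels belong to the object; the helper name `crop_to_object` is hypothetical and not part of this repository.

```python
import numpy as np


def crop_to_object(image: np.ndarray, mask: np.ndarray):
    """Hypothetical helper: crop image (H, W, C) and mask (H, W) to the mask's tight bounding box.

    After cropping, the object touches all four borders of the returned image.
    """
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        raise ValueError("mask is empty; no foreground object to crop")
    y1, y2 = int(ys.min()), int(ys.max()) + 1  # exclusive upper bounds
    x1, x2 = int(xs.min()), int(xs.max()) + 1
    return image[y1:y2, x1:x2], mask[y1:y2, x1:x2]


# Usage: a 3x4 object placed inside a 10x10 canvas.
img = np.zeros((10, 10, 3), dtype=np.uint8)
msk = np.zeros((10, 10), dtype=np.uint8)
msk[2:5, 3:7] = 1
fg, fg_mask = crop_to_object(img, msk)
print(fg.shape, fg_mask.shape)  # (3, 4, 3) (3, 4)
```

Any resizing to the model's input resolution would happen after this crop; that step is left out here.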

Our implementation is based on Paint-by-Example, using masked foreground images and all class and patch tokens from the foreground image as conditional embeddings. The model is trained with the same hyperparameters as Paint-by-Example. Foreground masks for training images are generated using Segment-Anything.
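To make "all class and patch tokens" concrete: a ViT-style image encoder such as CLIP ViT-L/14 splits a 224x224 input into 14x14-pixel patches, giving 16 x 16 = 256 patch tokens plus one class token, i.e. 257 conditioning tokens instead of the single token you would get from the class token alone. The toy NumPy sketch below reproduces only that token layout: it masks the foreground, patchifies it, applies a random linear patch embedding and prepends a class token. It is not CLIP (no transformer layers, random weights), and zeroing the background pixels is an assumption about how the masking is done.

```python
import numpy as np


def patchify(img: np.ndarray, patch: int) -> np.ndarray:
    """Split an (H, W, C) image into non-overlapping patches, one flattened patch per row."""
    h, w, c = img.shape
    if h % patch or w % patch:
        raise ValueError("image size must be divisible by the patch size")
    gh, gw = h // patch, w // patch
    x = img.reshape(gh, patch, gw, patch, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(gh * gw, patch * patch * c)


def toy_condition_tokens(fg, mask, embed, cls_token, patch=14):
    """Toy stand-in for the conditioning sequence [class token; patch tokens].

    fg: (H, W, 3) float image, mask: (H, W) in {0, 1},
    embed: (patch*patch*3, D) projection matrix, cls_token: (D,).
    """
    masked = fg * mask[..., None]                    # assumption: background set to 0
    patch_tokens = patchify(masked, patch) @ embed   # (N, D) linear patch embedding
    return np.concatenate([cls_token[None, :], patch_tokens], axis=0)  # (1 + N, D)


rng = np.random.default_rng(0)
D = 1024  # hidden size of the CLIP ViT-L/14 vision encoder
fg_img = rng.random((224, 224, 3))
fg_msk = np.ones((224, 224))
embed = rng.standard_normal((14 * 14 * 3, D))
tokens = toy_condition_tokens(fg_img, fg_msk, embed, np.zeros(D))
print(tokens.shape)  # (257, 1024)
```

In a real model the class token and patch tokens are outputs of the full encoder and are typically projected to the cross-attention dimension of the diffusion UNet; this sketch only shows why the sequence length becomes 257.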

In total, our model is trained on approximately 1.8 million pairs of foreground and background images from both the train and validation sets of Open-Images. Training runs for 40 epochs on 16 A100 GPUs with a batch size of 16 per GPU.
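The training scale above implies roughly the following step counts. This back-of-the-envelope calculation assumes plain data-parallel training (global batch = GPUs x per-GPU batch), no gradient accumulation, and that the last partial batch of each epoch is kept; since "1.8 million" is itself approximate, the results are estimates.

```python
import math


def training_steps(num_pairs: int, gpus: int, batch_per_gpu: int, epochs: int):
    """Return (global batch size, optimizer steps per epoch, total steps).

    Assumes data-parallel training without gradient accumulation and that the
    final partial batch of each epoch is not dropped.
    """
    global_batch = gpus * batch_per_gpu
    steps_per_epoch = math.ceil(num_pairs / global_batch)
    return global_batch, steps_per_epoch, steps_per_epoch * epochs


print(training_steps(1_800_000, gpus=16, batch_per_gpu=16, epochs=40))
# (256, 7032, 281280)
```

So the reported setup corresponds to a global batch of 256 and on the order of 280k optimizer steps.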

- torch==1.11.0
- pytorch_lightning==1.8.1
- install dependencies:
  ```bash
  cd ObjectStitch-Image-Composition
  pip install -r requirements.txt
  cd src/taming-transformers
  pip install -e .
  ```
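After installing, you may want to confirm that the pinned versions are the ones actually active in your environment. A minimal sketch using the standard-library `importlib.metadata` (Python 3.8+); the package names and versions come from the list above, while the helper `check_pins` is hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version

PINNED = {"torch": "1.11.0", "pytorch_lightning": "1.8.1"}


def check_pins(pins: dict) -> dict:
    """Return {package: installed_version or None} for every package not matching its pin."""
    problems = {}
    for name, wanted in pins.items():
        try:
            installed = version(name)
        except PackageNotFoundError:
            problems[name] = None  # not installed at all
            continue
        # torch wheels may carry a local suffix such as "+cu113"; compare the public part only
        if installed.split("+")[0] != wanted:
            problems[name] = installed
    return problems


if __name__ == "__main__":
    mismatches = check_pins(PINNED)
    print("all pins satisfied" if not mismatches else f"mismatched or missing: {mismatches}")
```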

- Please download the following files to the `checkpoints` folder to create the following file tree:
  ```
  checkpoints/
  ├── ObjectStitch.pth
  └── openai-clip-vit-large-patch14
      ├── config.json
      ├── merges.txt
      ├── preprocessor_config.json
      ├── pytorch_model.bin
      ├── tokenizer_config.json
      ├── tokenizer.json
      └── vocab.json
  ```
  - openai-clip-vit-large-patch14 (Huggingface | ModelScope).
  - ObjectStitch.pth (Huggingface | ModelScope).
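Before running inference, a quick check that every file in the tree above is in place can save a failed model load. The expected paths below are copied from that tree; the function name `missing_checkpoint_files` is hypothetical, and the default root assumes you run it from the repository root.

```python
from pathlib import Path

# Relative paths copied from the checkpoints/ file tree in this README.
EXPECTED = [
    "ObjectStitch.pth",
    "openai-clip-vit-large-patch14/config.json",
    "openai-clip-vit-large-patch14/merges.txt",
    "openai-clip-vit-large-patch14/preprocessor_config.json",
    "openai-clip-vit-large-patch14/pytorch_model.bin",
    "openai-clip-vit-large-patch14/tokenizer_config.json",
    "openai-clip-vit-large-patch14/tokenizer.json",
    "openai-clip-vit-large-patch14/vocab.json",
]


def missing_checkpoint_files(root="checkpoints"):
    """Return the expected checkpoint files that do not exist under root."""
    root = Path(root)
    return [rel for rel in EXPECTED if not (root / rel).is_file()]


if __name__ == "__main__":
    missing = missing_checkpoint_files()
    print("checkpoints complete" if not missing else f"missing: {missing}")
```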

- To perform image composition using our model, you can use `scripts/inference.py`. For example,
  ```bash
  python scripts/inference.py \
    --outdir results \
    --testdir examples \
    --num_samples 3 \
    --sample_steps 50 \
    --gpu 0
  ```
  or simply run:
  ```bash
  sh test.sh
  ```
  The images under the `examples` folder are taken from the COCOEE dataset.
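If you prefer to launch inference from Python (for example, to sweep `--num_samples` or `--sample_steps`), a thin wrapper can build the same argument list as the command above. The flags are exactly those shown above; the helpers `build_inference_cmd` and `run_inference` are hypothetical and assume the current working directory is the repository root.

```python
import shlex
import subprocess
import sys


def build_inference_cmd(outdir="results", testdir="examples", num_samples=3,
                        sample_steps=50, gpu=0):
    """Build the argv for scripts/inference.py using the flags from the README example."""
    return [
        sys.executable, "scripts/inference.py",
        "--outdir", outdir,
        "--testdir", testdir,
        "--num_samples", str(num_samples),
        "--sample_steps", str(sample_steps),
        "--gpu", str(gpu),
    ]


def run_inference(**kwargs):
    """Run inference from the repository root; raises CalledProcessError on failure."""
    subprocess.run(build_inference_cmd(**kwargs), check=True)


if __name__ == "__main__":
    # Only print the command here; call run_inference() from the repo root to execute it.
    print(shlex.join(build_inference_cmd(num_samples=5)))
```

Passing a list to `subprocess.run` (rather than a shell string) avoids quoting problems when `outdir` or `testdir` contain spaces.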

- Please refer to the `examples` folder for data preparation:
  - Keep the same filenames for each pair of data.
  - Either the `mask_bbox` folder or the `bbox` folder is sufficient.
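The two rules above (matching filenames across folders, with `mask_bbox` and `bbox` being interchangeable) can be checked before running inference. In this sketch, folder contents are passed as plain filename lists so it runs without touching disk. Only `bbox` and `mask_bbox` are named in this README; the other folder names, matching by filename without extension, and the `(x1, y1, x2, y2)` pixel box format used by `bbox_to_mask` are all assumptions, so adjust them to what you find in `examples`.

```python
from pathlib import PurePath

import numpy as np

# Assumed folder names; only "bbox" and "mask_bbox" are named in the README.
REQUIRED = ("background", "foreground", "foreground_mask")
BOX_FOLDERS = ("bbox", "mask_bbox")  # either one is sufficient


def check_pairs(folders: dict) -> dict:
    """Return {stem: [missing folders]} for every incomplete sample.

    folders maps a folder name to the filenames it contains; files are
    matched across folders by filename without extension (assumption).
    """
    stems = {name: {PurePath(f).stem for f in files} for name, files in folders.items()}
    problems = {}
    for stem in sorted(set().union(*stems.values())):
        missing = [d for d in REQUIRED if stem not in stems.get(d, set())]
        if not any(stem in stems.get(d, set()) for d in BOX_FOLDERS):
            missing.append("bbox or mask_bbox")
        if missing:
            problems[stem] = missing
    return problems


def bbox_to_mask(box, height, width):
    """Rasterize an assumed (x1, y1, x2, y2) pixel box, x2/y2 exclusive, into an 8-bit mask."""
    x1, y1, x2, y2 = box
    mask = np.zeros((height, width), dtype=np.uint8)
    mask[max(y1, 0):min(y2, height), max(x1, 0):min(x2, width)] = 255
    return mask


example = {
    "background": ["0001.png", "0002.png"],
    "foreground": ["0001.png", "0002.png"],
    "foreground_mask": ["0001.png"],
    "bbox": ["0001.txt"],
    "mask_bbox": ["0002.png"],
}
print(check_pairs(example))  # {'0002': ['foreground_mask']}
```

`bbox_to_mask` illustrates why either folder suffices: a box and a box-shaped mask carry the same information.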

- Download link:

Notes: certain sensitive information has been removed since the model training was conducted within a company. To start training, you'll need to prepare your own training data and make necessary modifications to the code according to your requirements.
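As a starting point for preparing your own data, here is one hedged sketch of how a single training sample could be assembled from an image and an instance mask (for example, a Segment-Anything mask), in the spirit of Paint-by-Example's self-supervised setup: the object is cut out as the masked foreground, and its bounding-box region in the original image becomes the area to regenerate. This is not the authors' training code; the function `make_training_sample`, the zero fill for masked pixels and the dictionary keys are all assumptions.

```python
import numpy as np


def make_training_sample(image: np.ndarray, inst_mask: np.ndarray) -> dict:
    """Hypothetical sketch: build one (background, bbox mask, foreground, target) sample.

    image: (H, W, 3) array; inst_mask: (H, W) binary instance mask of one object.
    """
    ys, xs = np.nonzero(inst_mask)
    if ys.size == 0:
        raise ValueError("empty instance mask")
    y1, y2 = int(ys.min()), int(ys.max()) + 1
    x1, x2 = int(xs.min()), int(xs.max()) + 1

    # Region the diffusion model must fill in: the object's bounding box.
    bbox_mask = np.zeros(inst_mask.shape, dtype=bool)
    bbox_mask[y1:y2, x1:x2] = True
    background = image.copy()
    background[bbox_mask] = 0  # assumption: masked pixels are zero-filled

    # Conditioning input: tight object crop with non-object pixels zeroed,
    # so the object touches the crop borders (see the note on foreground images).
    crop_mask = inst_mask[y1:y2, x1:x2].astype(bool)
    foreground = image[y1:y2, x1:x2] * crop_mask[..., None]

    return {"background": background, "bbox_mask": bbox_mask,
            "foreground": foreground, "target": image, "bbox": (x1, y1, x2, y2)}


img = np.full((8, 8, 3), 200, dtype=np.uint8)
m = np.zeros((8, 8), dtype=np.uint8)
m[2:6, 3:5] = 1
m[2, 3] = 0  # a non-object pixel inside the box
s = make_training_sample(img, m)
print(s["bbox"], s["foreground"].shape)  # (3, 2, 5, 6) (4, 2, 3)
```

A real pipeline would typically also resize and augment the foreground so the model cannot simply copy pixels; that is omitted here.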

We showcase several results generated by the released model on the FOSCom dataset. In each example, we display the background image with a bounding box (yellow), the foreground image, and 5 randomly sampled images.

We also provide the full results of 640 foreground-background pairs on the FOSCom dataset, which can be downloaded from Baidu Cloud (9g1p). These results give a quick sense of the capabilities and limitations of ObjectStitch.

- We summarize the papers and codes of generative image composition: Awesome-Generative-Image-Composition

- We summarize the papers and codes of image composition from all aspects: Awesome-Image-Composition

- We summarize all possible evaluation metrics to evaluate the quality of composite images: Composite-Image-Evaluation

- We have written a comprehensive survey on image composition: the latest version