Data Collection Utilities for GDQStatus

(Successor to sgdq-collector)

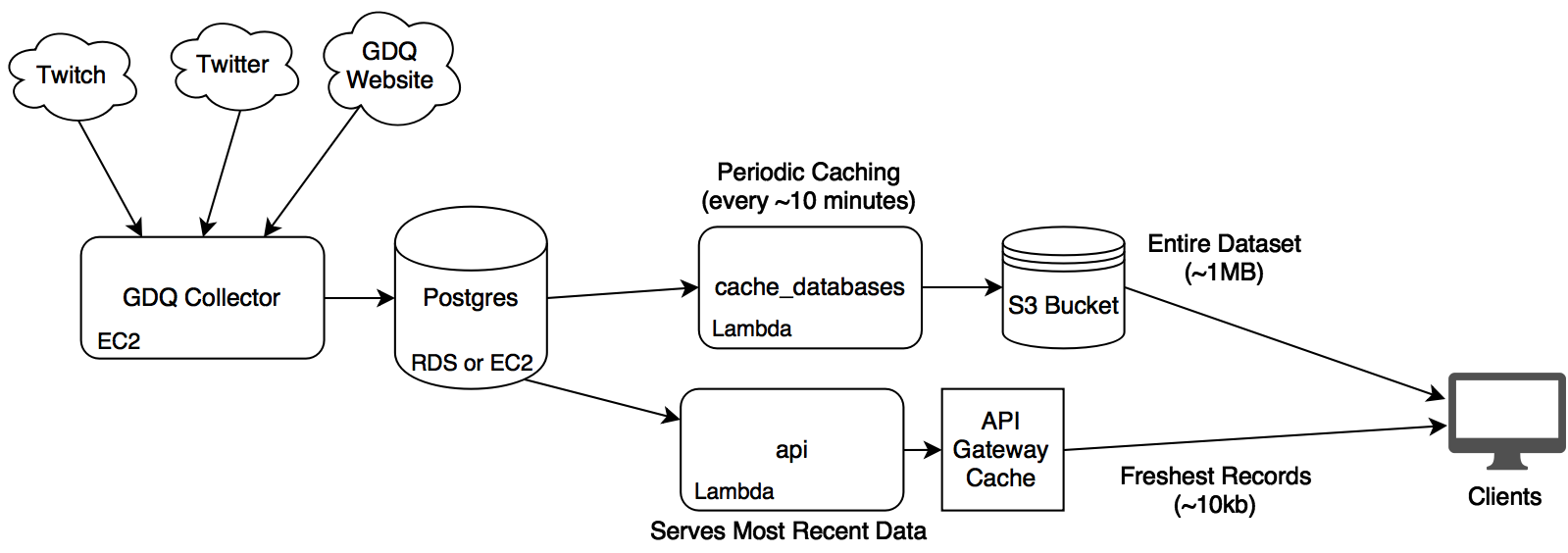

gdq-collector is an amalgamation of services and utilities designed to be a serverless-ish backend for gdq-stats. There are 3 distinct components of the project:

- `gdq_collector` (the Python module) - Python scraping module designed to be run constantly on a compute platform like EC2, which updates a Postgres database with new timeseries and GDQ schedule data.
- `lambda_suite` - Lambda application that caches the Postgres database to a JSON file in S3 (to reduce the load on the database). It also includes a simple API to query recent timeseries data that doesn't appear in the cached JSON. The Lambda Suite has 3 stages (three separate configurations that are deployed independently):
  - The API stage (`dev`/`prod`) - Serves recent data to a publicly facing REST endpoint
  - The Caching stage (`cache_databases`) - Queries the Postgres database and stores query results in S3 as a cache
  - The Monitoring stage (`monitoring`) - Queries the API stage to do periodic health checks on the system
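The caching stage's core job (query Postgres, write the results to S3 as JSON) can be sketched as below. This is a minimal sketch under stated assumptions, not the project's code: the function name `cache_rows_to_s3`, the bucket/key names, and the row shape are hypothetical, and the S3 client is passed in (in practice a `boto3.client("s3")`, whose `put_object` method is used here) so the logic can run without AWS.

```python
import json
from datetime import datetime


def _json_default(value):
    # Postgres timestamp columns come back as datetime objects, which json can't encode natively
    if isinstance(value, datetime):
        return value.isoformat()
    raise TypeError(f"Cannot serialize {type(value).__name__}")


def cache_rows_to_s3(s3_client, bucket, key, columns, rows):
    """Serialize query rows to a JSON array of objects and upload it to S3.

    Hypothetical helper: `s3_client` is assumed to expose boto3's
    put_object(Bucket=..., Key=..., Body=..., ContentType=...) method.
    Returns the number of records written.
    """
    # Pair each row tuple with the column names so the JSON is self-describing
    records = [dict(zip(columns, row)) for row in rows]
    body = json.dumps(records, default=_json_default).encode("utf-8")
    s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=body,
        ContentType="application/json",
    )
    return len(records)
```

With a DB-API cursor, `columns` can be taken from `[d[0] for d in cursor.description]` and `rows` from `cursor.fetchall()`. Serving this one JSON object from S3 means repeated page loads never touch the database, which is the load reduction the caching stage is there for.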

`gdq_collector` uses APScheduler to schedule and execute the scraping/refreshing tasks.
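As an illustration of how periodic tasks are registered with APScheduler, here is a minimal sketch. The task functions, job IDs, and intervals are hypothetical placeholders, not the collector's real tasks or settings; `register_jobs` only relies on APScheduler's `add_job(func, "interval", seconds=..., id=..., ...)` form, so it can be exercised with any object that has a compatible `add_job`.

```python
def register_jobs(scheduler, jobs):
    """Register each (name, func, seconds) tuple as an interval job.

    `scheduler` is assumed to be an APScheduler 3.x scheduler such as
    BlockingScheduler. Returns the registered job IDs in order.
    """
    for name, func, seconds in jobs:
        scheduler.add_job(
            func,
            "interval",
            seconds=seconds,
            id=name,
            max_instances=1,  # don't start a new run while the previous one is still going
            coalesce=True,    # collapse missed runs into one instead of running a backlog
        )
    return [name for name, _, _ in jobs]


def scrape_timeseries():
    # Hypothetical task: fetch current viewer/donation counts and insert a row into Postgres
    pass


def refresh_schedule():
    # Hypothetical task: re-scrape the GDQ schedule and update it in Postgres
    pass


# Illustrative intervals only, not the collector's actual configuration
JOBS = [
    ("timeseries", scrape_timeseries, 60),
    ("schedule", refresh_schedule, 600),
]
```

With APScheduler installed, the wiring would be `scheduler = BlockingScheduler()` (from `apscheduler.schedulers.blocking`), then `register_jobs(scheduler, JOBS)` and `scheduler.start()`, which blocks and runs the jobs until the process is interrupted. A blocking scheduler suits a long-running process like the one described above for EC2.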

The Lambda applications use Zappa for deployment.
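One possible shape for the monitoring stage's health check is sketched below: treat the collector as unhealthy when the newest timeseries point reported by the API is older than a threshold, and publish an alert to SNS. This is a hypothetical sketch, not the project's monitoring logic; the 10-minute threshold, the function names, and the message text are assumptions. The SNS client is passed in (in practice `boto3.client("sns")`, whose `publish(TopicArn=..., Subject=..., Message=...)` method is used).

```python
from datetime import datetime, timedelta, timezone

MAX_AGE = timedelta(minutes=10)  # hypothetical staleness threshold


def is_stale(latest, now, max_age=MAX_AGE):
    """True if the newest data point is older than max_age."""
    return now - latest > max_age


def alert_if_stale(sns_client, topic_arn, latest, now=None, max_age=MAX_AGE):
    """Publish an SNS alert when the collector appears to have stopped.

    `latest` must be timezone-aware when `now` is left as the default
    (current UTC time); mixing naive and aware datetimes raises TypeError.
    Returns True if an alert was sent.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    if not is_stale(latest, now, max_age):
        return False
    sns_client.publish(
        TopicArn=topic_arn,
        Subject="gdq-collector: stale data",
        Message=(
            f"Newest data point is from {latest.isoformat()}, "
            f"{now - latest} ago (threshold {max_age})."
        ),
    )
    return True
```

Zappa can run plain functions on a schedule via the `events` list in `zappa_settings.json`, calling them with `(event, context)`; such a function could fetch the latest timestamp from the API stage and pass it, along with the `sns_arn` value from `credentials.py`, to `alert_if_stale`.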

Note: If you're running this on an Ubuntu EC2 instance, `bootstrap_aws.sh` will be more useful than the following bullet list for platform-specific setup.

To set up the collector:

- Clone the repo and `cd` into the root project directory.
- Pull down the dependencies with `pip install -r requirements.txt --user`.
  - You may wish to run `aws/install.sh`, as some of the Python packages need system dependencies installed first.
- Copy `credentials_template.py` to `credentials.py`. Fill in your credentials for Twitter, your Postgres server, and Twitch.
- Ensure your Postgres server is running and that your credentials are valid. Create the necessary tables by executing the SQL commands in `schema.sql`.
- Run `python -m gdq_collector` to start the collector.
  - You can run `python -m gdq_collector --help` to learn about the optional command-line arguments.
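If you'd rather create the tables from Python than from `psql`, a minimal sketch follows. It assumes a psycopg2 connection (psycopg2 is not named in this README), whose `cursor.execute()` accepts a string containing several semicolon-separated statements when no query parameters are passed. The helper name `apply_schema` is hypothetical.

```python
from pathlib import Path


def apply_schema(conn, schema_path="schema.sql"):
    """Run every statement in schema_path against an open DB-API connection.

    Assumes a psycopg2-style connection whose cursor can execute a
    multi-statement string in one call.
    """
    sql = Path(schema_path).read_text()
    cur = conn.cursor()
    try:
        cur.execute(sql)
        conn.commit()  # psycopg2 opens a transaction implicitly; commit to persist the tables
    except Exception:
        conn.rollback()  # leave the database unchanged if any statement fails
        raise
    finally:
        cur.close()
```

Usage would look like `apply_schema(psycopg2.connect(host=..., dbname=..., user=..., password=...))`, filling in the same Postgres values you put in `credentials.py`. Running `psql -f schema.sql` against the database is an equivalent route.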

To set up the Lambda Suite:

- Clone the repo and `cd` into the root project directory.
- Pull down the dependencies with `pip install -r requirements.txt --user`.
  - You may wish to run `aws/install.sh`, as some of the Python packages need system dependencies installed first.
- Copy `credentials_template.py` to `credentials.py`. Fill in your credentials for your Postgres database. If you want the Lambda functions to send you notifications when monitoring alarms occur, add the ARN of the monitoring SNS topic to `sns_arn`.
- Update `zappa_settings.json` to fit your AWS configuration. In particular, you'll need to update your `vpc_config`. You'll also need to create an S3 bucket that matches your `S3_CACHE_BUCKET` config.
- Run `zappa deploy dev` to deploy the application and schedule the caching operations.
- Run `zappa deploy cache_databases` to deploy the Lambdas that cache the Postgres data to JSON blobs in S3.
- Run `zappa deploy monitoring` to deploy the Lambdas that check the output of the APIs to detect problems with the collector.
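Before running the `zappa deploy` commands, a quick pre-flight check of `zappa_settings.json` can catch an unfilled `vpc_config`. The sketch below is hypothetical (not part of the project): it checks the three stages deployed above, resolves Zappa's `"extends"` stage inheritance as a shallow merge (an approximation of Zappa's behavior), and verifies that `vpc_config.SubnetIds` and `vpc_config.SecurityGroupIds` are non-empty. It does not check `S3_CACHE_BUCKET`, since where that setting lives isn't specified here.

```python
import json

REQUIRED_STAGES = ("dev", "cache_databases", "monitoring")


def resolve_stage(settings, stage, _seen=None):
    """Return a stage's settings with any `extends` parent merged in (shallow)."""
    if _seen is None:
        _seen = set()
    if stage in _seen:
        raise ValueError(f"circular 'extends' at stage {stage!r}")
    _seen.add(stage)
    own = settings[stage]
    parent = own.get("extends")
    base = resolve_stage(settings, parent, _seen) if parent else {}
    merged = {**base, **own}  # the child stage's keys override the parent's
    merged.pop("extends", None)
    return merged


def check_zappa_settings(settings, stages=REQUIRED_STAGES):
    """List VPC settings that still need filling in for the given stages."""
    problems = []
    for stage in stages:
        if stage not in settings:
            problems.append(f"{stage}: stage missing")
            continue
        vpc = resolve_stage(settings, stage).get("vpc_config", {})
        for key in ("SubnetIds", "SecurityGroupIds"):
            if not vpc.get(key):
                problems.append(f"{stage}: vpc_config.{key} is empty")
    return problems


def load_and_check(path="zappa_settings.json"):
    with open(path) as f:
        return check_zappa_settings(json.load(f))
```

An empty list from `load_and_check()` means every deployed stage has a VPC configuration. The Lambdas need one to reach a Postgres server that isn't publicly reachable.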