This is a self-paced, hands-on lab that strives to demystify using Terraform to provision Data Analytics services on GCP. Not all Data Analytics services are covered. This lab does not feature any dazzling demos; the focus is purely on automating environment provisioning and configuration.

Terraform noobs

~90 minutes, plus additional time to destroy the environment

Self-paced, fully scripted, no research is required.

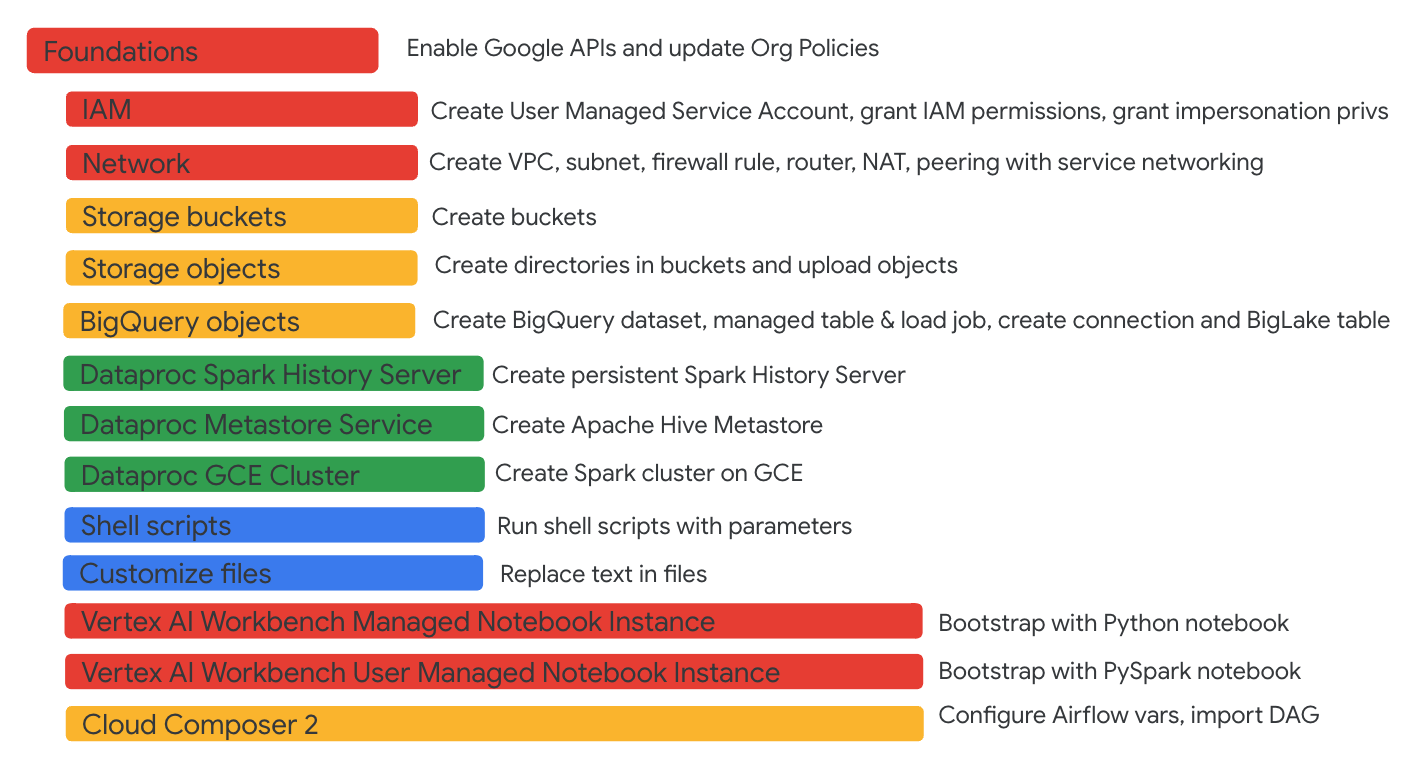

The following is the subset of the Google Data Analytics portfolio covered. It's not all-encompassing. Contributions are welcome.

Applicable to Google Customer Engineers working in Argolis:

Grant yourself the Organization Policy Administrator role from the Cloud Console, at the organization scope.

(If you have created VPC-native services in Argolis, you have likely already granted yourself this permission.)

Don't forget to set the context in the Cloud console back to the project you created.
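For readers who prefer to see the role grant above expressed as code, the following is a minimal sketch of the same grant in Terraform. It is not part of the lab, which performs this step in the Cloud Console. The variable names `org_id` and `admin_email` are hypothetical, and applying it assumes your credentials are already allowed to modify IAM at the organization level.

```hcl
# Hypothetical inputs; neither variable comes from the lab's scripts.
variable "org_id" {
  description = "Numeric ID of the organization"
  type        = string
}

variable "admin_email" {
  description = "Email of the user who should receive the role"
  type        = string
}

# Grants the Organization Policy Administrator role at organization scope.
# This is additive: other members holding the same role are left untouched.
resource "google_organization_iam_member" "org_policy_admin" {
  org_id = var.org_id
  role   = "roles/orgpolicy.policyAdmin"
  member = "user:${var.admin_email}"
}
```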

- Understanding of core Terraform concepts

- Knowledge of creating a set of Data Analytics services

- Terraform scripts that can be repurposed for your trials, PoCs, projects and such

HashiCorp docs: https://registry.terraform.io/providers/hashicorp/google/4.41.0
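The docs link above points at version 4.41.0 of the Google provider. As a minimal sketch, assuming you want your own configuration to match that version, a provider pin could look like the following. The variable names `project_id` and `region` are hypothetical, and the lab's own scripts may declare the provider differently.

```hcl
terraform {
  required_providers {
    google = {
      source  = "hashicorp/google"
      # Assumption: pinned to match the docs version linked above.
      version = "4.41.0"
    }
  }
}

# Hypothetical inputs; substitute the names your own scripts use.
variable "project_id" {
  description = "ID of the project to provision into"
  type        = string
}

variable "region" {
  description = "Default region for regional resources"
  type        = string
}

provider "google" {
  project = var.project_id
  region  = var.region
}
```

Pinning an exact version keeps resource arguments consistent with the linked documentation; a looser constraint such as `~> 4.41` would allow newer 4.x releases instead.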

Production hardened Terraform Blueprints: https://github.com/GoogleCloudPlatform/cloud-foundation-fabric

| # | Google Cloud Collaborators | Contribution |
|---|---|---|
| 1. | Anagha Khanolkar | Vision, author |
| 2. | Rick Chen | Terraform expertise, feedback |
| 3. | Jay O' Leary | Testing and feedback |

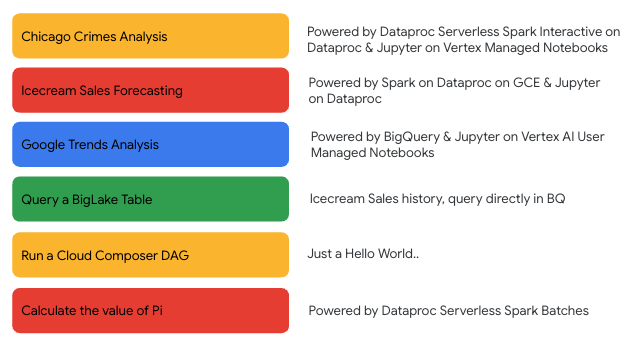

Optional exercises:

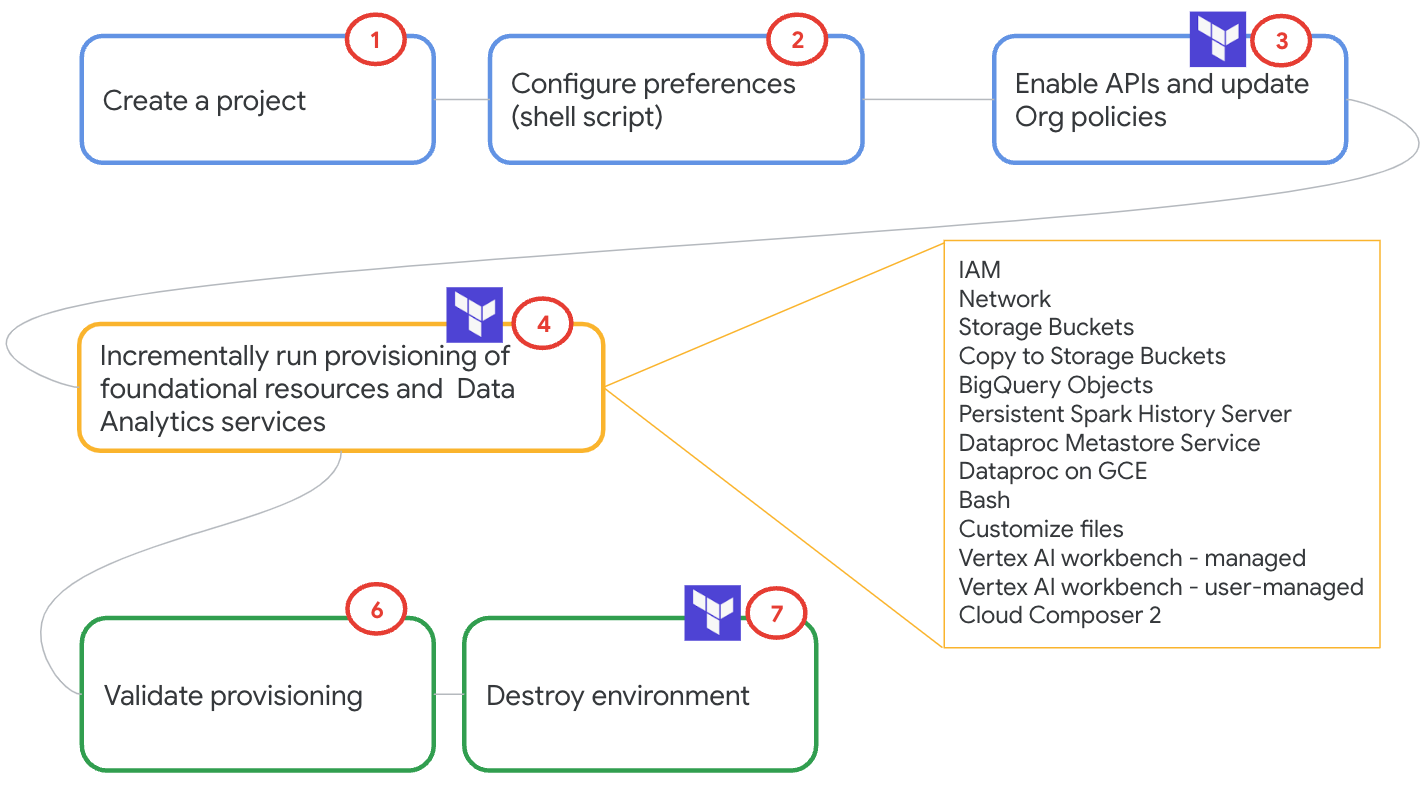

The lab modules listed are best run sequentially to avoid dependency issues.

Each module has an explanation of exactly what is covered, and covers one service at a time to make it bite-sized.

Shut down the environment when you are done. Module 19 covers how to do this.

Community contribution to improve the lab is very much appreciated.

If you have any questions or find any problems with this repository, please report them through GitHub issues.

Log an entry in GitHub issues