Our work proposes a graph-based semi-supervised fake news detection method.

This repository is a Python implementation of the paper

Semi-Supervised Learning and Graph Neural Networks for Fake News Detection by Adrien Benamira, Benjamin Devillers, Etienne Lesot, Ayush K., Manal Saadi and Fragkiskos D. Malliaros

published at ASONAM '19, August 27-30, 2019, Vancouver, Canada. Copyright 2019 Association for Computing Machinery. ACM ISBN 978-1-4503-6868-1/19/08. http://dx.doi.org/10.1145/3341161.3342958

Requires Python >= 3.6.

Copy config/config.default.yaml and name the copy config/config.yaml.

This file contains the configuration of the project.
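If you prefer to script this step, here is a minimal sketch using only the standard library. The helper name ensure_config is hypothetical and not part of the repository; it only copies the default file when config.yaml does not exist yet, so local edits are never overwritten.

```python
import shutil
from pathlib import Path


def ensure_config(config_dir="config"):
    """Hypothetical helper: create config.yaml from config.default.yaml if missing.

    Returns the path of the configuration file the project should read.
    """
    directory = Path(config_dir)
    default = directory / "config.default.yaml"
    target = directory / "config.yaml"
    if not target.exists():
        # Only copy when there is no config.yaml yet, so local edits are kept.
        shutil.copyfile(default, target)
    return target
```

Calling ensure_config() from the project root creates config/config.yaml on the first run and leaves it untouched afterwards.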

The project uses the latest (not yet released) sparse features of TensorLy.

Install the master version of sparse:

$ git clone https://github.com/pydata/sparse/ && cd sparse

$ pip install .

Then use this version of TensorLy:

$ git clone https://github.com/jcrist/tensorly.git tensorly-sparse && cd tensorly-sparse

$ git checkout sparse-take-2

Then place the tensorly-sparse/tensorly folder in our project structure.

Install the PyTorch implementation of the OpenAI Transformer:

$ git clone https://github.com/huggingface/pytorch-openai-transformer-lm.git

Then place the pytorch-openai-transformer-lm/ folder in our project structure under the name transformer.

There are several embedding choices: decomposition of the co-occurrence matrix, embedding with GloVe (mean or RNN), Transformer, and LDA-idf.

method_decomposition_embedding can be parafac, GloVe, LDA or Transformer.
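To make the co-occurrence method more concrete, here is a minimal sketch of how a sparse (news, word, word) co-occurrence tensor could be built, in the spirit of the size_word_co_occurrence_window and use_frequency options. The function name and the exact window semantics (two tokens co-occur when they are fewer than window positions apart) are assumptions, not the repository's code. In the project the tensor is then decomposed with PARAFAC at rank rank_parafac_decomposition using TensorLy; treating the news-mode factor matrix as the article embedding is our reading of the method.

```python
from collections import defaultdict


def cooccurrence_tensor(documents, window=5, use_frequency=False):
    """Hypothetical sketch: sparse (document, word, word) co-occurrence entries.

    documents: list of token lists.
    window: tokens co-occur when fewer than `window` positions apart (assumed).
    use_frequency: count co-occurrences if True, else store a binary 1.
    Returns (entries, vocab) where entries maps (doc, i, j) -> value.
    """
    vocab = {}
    entries = defaultdict(int)
    for d, tokens in enumerate(documents):
        # Assign a new integer id to each unseen word.
        ids = [vocab.setdefault(tok, len(vocab)) for tok in tokens]
        for pos, i in enumerate(ids):
            # Pair the token with the following tokens inside the window.
            for j in ids[pos + 1:pos + window]:
                if i == j:
                    continue  # ignore a word co-occurring with itself
                # The matrix of each document is kept symmetric.
                for key in ((d, i, j), (d, j, i)):
                    if use_frequency:
                        entries[key] += 1
                    else:
                        entries[key] = 1
    return dict(entries), vocab
```

The returned coordinates and values could then be turned into a sparse tensor (for instance with the pydata sparse package installed above) before the decomposition.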

embedding:

# Parafac - LDA - GloVe - Transformer -

method_decomposition_embedding: parafac

method_embedding_glove: mean

rank_parafac_decomposition: 10

size_word_co_occurrence_window: 5

use_frequency: No # If No, only a binary co-occurrence matrix is used.

vocab_size: -1

For the GloVe embedding, download GloVe from nlp.stanford.edu/data/glove.6B.zip.

There are two embedding methods: mean or RNN.
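The mean method can be illustrated with a short sketch: each word of an article is looked up in the GloVe file (one word followed by its vector components per line) and the article embedding is the average of the vectors found. The function names are hypothetical, and returning a zero vector when no word is known is an assumption; the repository may handle that case differently.

```python
def load_glove(lines):
    """Parse GloVe text lines ("word v1 v2 ...") into a dict of float lists."""
    vectors = {}
    for line in lines:
        parts = line.rstrip().split(" ")
        if len(parts) < 2:
            continue  # skip empty or malformed lines
        vectors[parts[0]] = [float(x) for x in parts[1:]]
    return vectors


def mean_embedding(tokens, vectors, dim):
    """Average the GloVe vectors of known tokens (zero vector if none is known)."""
    known = [vectors[t] for t in tokens if t in vectors]
    if not known:
        return [0.0] * dim
    # zip(*known) iterates over the embedding dimensions.
    return [sum(column) / len(known) for column in zip(*known)]
```

For example, with open("../glove6B/glove.6B.100d.txt", encoding="utf-8") as f: vectors = load_glove(f) loads the 100-dimensional vectors; the glove.6B vocabulary is lowercased, so tokens should be lowercased too.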

paths:

GloVe_adress: ../glove6B/glove.6B.100d.txt

embedding:

# Parafac - LDA - GloVe - Transformer -

method_decomposition_embedding: GloVe

method_embedding_glove: mean # mean or RNN

use_frequency: No

vocab_size: -1

For the Transformer embedding, clone the Hugging Face pytorch-openai-transformer-lm project, rename the folder transformer and download OpenAI's pre-trained model. Then set the config paths:

paths:

encoder_path: transformer/model/encoder_bpe_40000.json

bpe_path: transformer/model/vocab_40000.bpe

embedding:

# Parafac - LDA - GloVe - Transformer -

method_decomposition_embedding: Transformer

use_frequency: No

vocab_size: -1

For the LDA embedding:

embedding:

# Parafac - LDA - GloVe - Transformer -

method_decomposition_embedding: LDA

use_frequency: No

vocab_size: -1

The idea is that, instead of using the Euclidean distance, we can use the Word Mover's Distance (WMD).

Install Python packages:

- spacy

- wmd
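To illustrate the idea, here is a sketch of the relaxed WMD (RWMD) from Kusner et al. (2015), a cheap lower bound on the WMD: each word sends all its mass to the closest word of the other document instead of solving the full transport problem. This is a swapped-in approximation, not the exact distance computed by the wmd package, and the function names are hypothetical.

```python
import math


def euclidean(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def nbow(tokens, vectors):
    """Normalized bag-of-words weights over the tokens that have an embedding."""
    counts = {}
    for t in tokens:
        if t in vectors:
            counts[t] = counts.get(t, 0) + 1
    total = sum(counts.values())
    return {t: c / total for t, c in counts.items()}


def relaxed_wmd(doc_a, doc_b, vectors):
    """Relaxed Word Mover's Distance: a lower bound on the exact WMD."""
    wa, wb = nbow(doc_a, vectors), nbow(doc_b, vectors)
    if not wa or not wb:
        raise ValueError("both documents need at least one known word")

    def one_sided(src, dst):
        # Every source word moves all its weight to its nearest target word.
        return sum(
            w * min(euclidean(vectors[s], vectors[t]) for t in dst)
            for s, w in src.items()
        )

    # The tighter bound is the larger of the two one-sided relaxations.
    return max(one_sided(wa, wb), one_sided(wb, wa))
```

The RWMD is often used to prune candidates before computing the exact WMD, which is much more expensive on long articles.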

The pygcn library used is the PyTorch implementation by tkipf.
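For reference, here is a plain-Python sketch of the GCN propagation rule from Kipf and Welling, H' = ReLU(D^-1/2 (A + I) D^-1/2 H W), on a dense adjacency matrix. It only illustrates the formula; the pygcn code works on PyTorch sparse tensors and its own preprocessing may differ (for example, row normalization), and the function names here are hypothetical.

```python
import math


def normalized_adjacency(adj):
    """Return D^-1/2 (A + I) D^-1/2 for a symmetric adjacency matrix."""
    n = len(adj)
    # Add self-loops so that each node keeps its own features.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def matmul(x, y):
    """Dense matrix product of two lists of lists."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def gcn_layer(adj_norm, features, weight):
    """One GCN layer: ReLU(A_norm @ H @ W)."""
    out = matmul(matmul(adj_norm, features), weight)
    return [[max(0.0, v) for v in row] for row in out]
```

Stacking two such layers, with a softmax over the output of the last one, gives the semi-supervised node classifier described in the GCN paper.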

The pyagnn library used is based on the PyTorch implementation by dawnrange.
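As a rough illustration of what an attention-based propagation step does, here is a sketch of the AGNN rule: each node aggregates itself and its neighbours, weighted by a softmax of beta times the cosine similarity of their features. In the AGNN paper beta is a learned scalar per layer; here it is a fixed argument, and the function names are hypothetical rather than taken from pyagnn.

```python
import math


def cosine(u, v):
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    if nu == 0 or nv == 0:
        return 0.0  # treat zero vectors as dissimilar
    return sum(a * b for a, b in zip(u, v)) / (nu * nv)


def agnn_propagation(adj, features, beta=1.0):
    """One attention-guided step: H'_i = sum_j P_ij H_j over j in N(i) plus i.

    P_ij is a softmax over those j of beta * cos(H_i, H_j).
    """
    n = len(features)
    out = []
    for i in range(n):
        neigh = [j for j in range(n) if j == i or adj[i][j]]
        scores = [beta * cosine(features[i], features[j]) for j in neigh]
        m = max(scores)  # subtract the max for a numerically stable softmax
        exps = [math.exp(s - m) for s in scores]
        z = sum(exps)
        row = [0.0] * len(features[i])
        for e, j in zip(exps, neigh):
            for k, value in enumerate(features[j]):
                row[k] += (e / z) * value
        out.append(row)
    return out
```

Unlike the fixed GCN weights, these attention weights depend on the current features, so similar articles influence each other more.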

Our pipeline is described in our report.

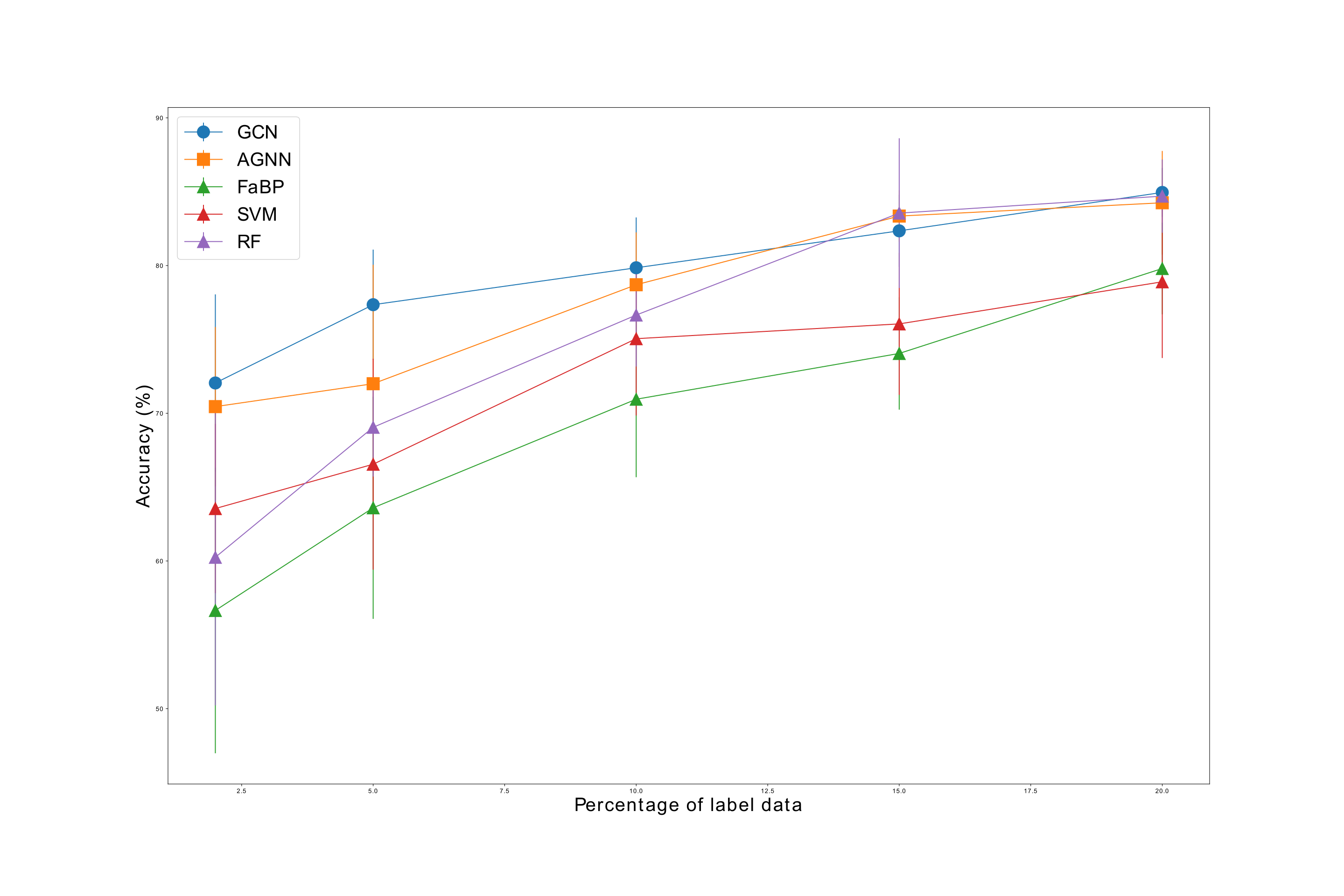

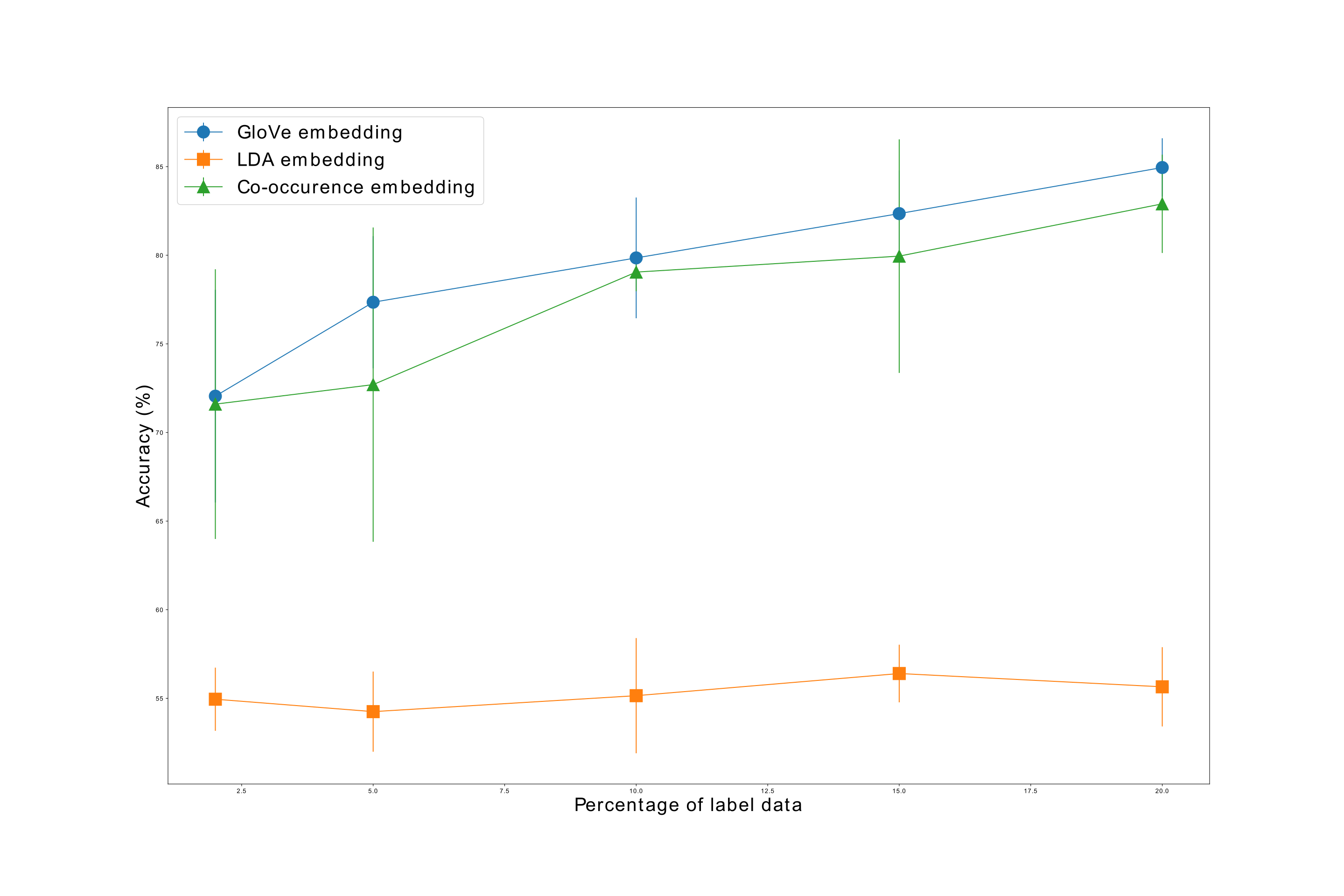

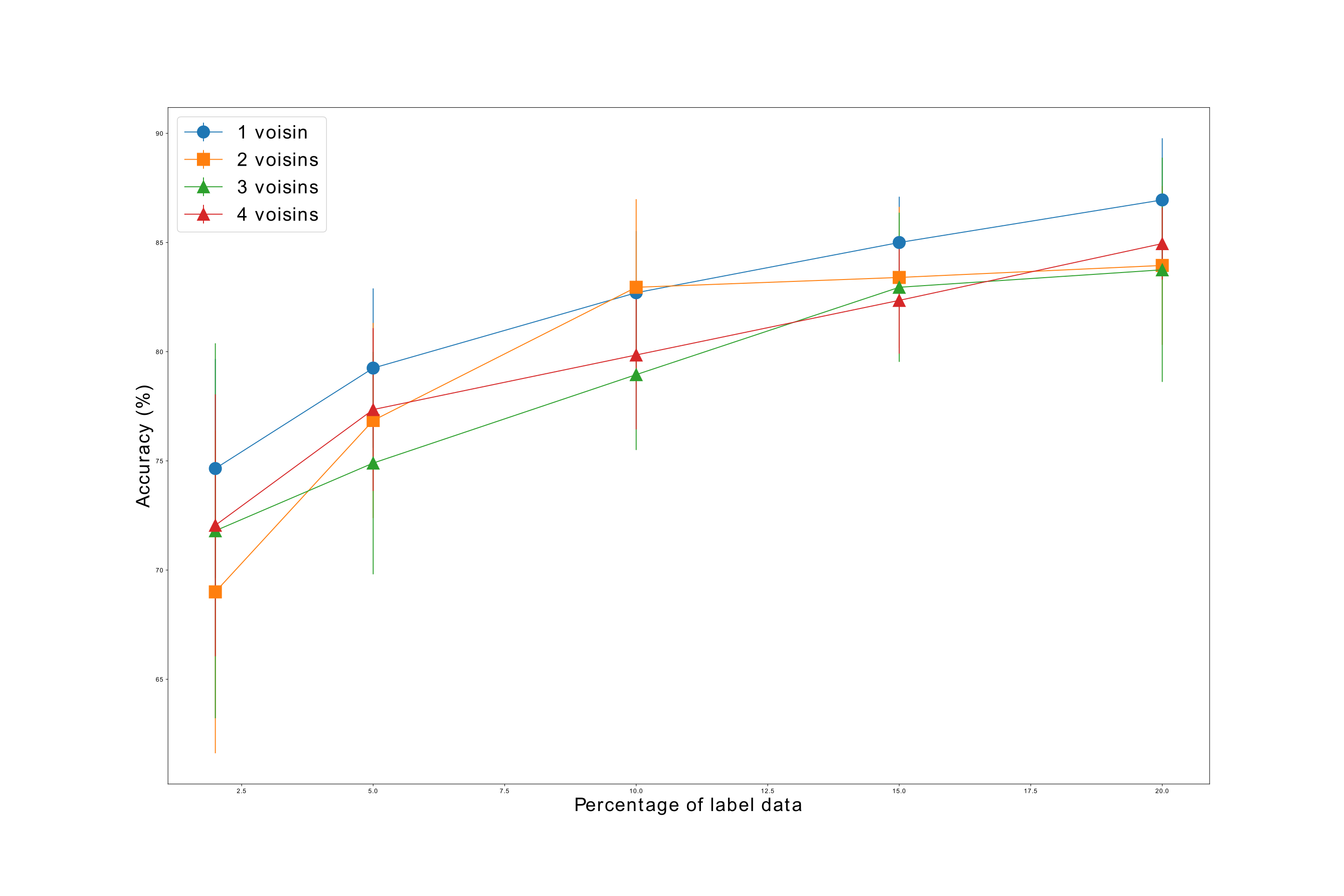

Here are our results on the dataset.

- Kipf, Thomas N and Welling, Max, Semi-Supervised Classification with Graph Convolutional Networks. https://github.com/tkipf/pygcn.

- Kossaifi, Jean and Panagakis, Yannis and Anandkumar, Anima and Pantic, Maja, TensorLy: Tensor Learning in Python.

- Niculae, Vlad and Kusner, Matt, for the word mover's distance kNN.

- Attention-based Graph Neural Network for semi-supervised learning.