Accepted to ICDAR 2019 (arXiv)

Authors: He Guo, Xiameng Qin, Jiaming Liu, Junyu Han, Jingtuo Liu and Errui Ding

This repository is designed to provide an open-source dataset for Visual Text Extraction.

The dataset can be downloaded through the following link:

baiduyun, PASSWORD: e4z1

Some details:

| scenes | number | size | Google Drive link |

|---|---|---|---|

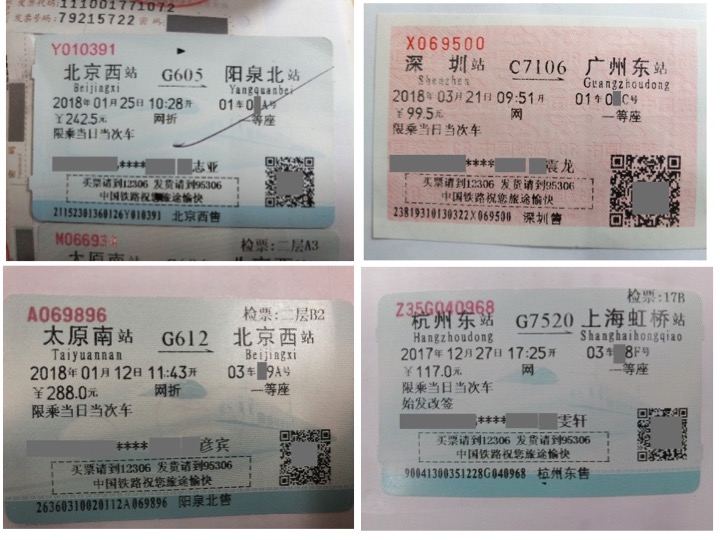

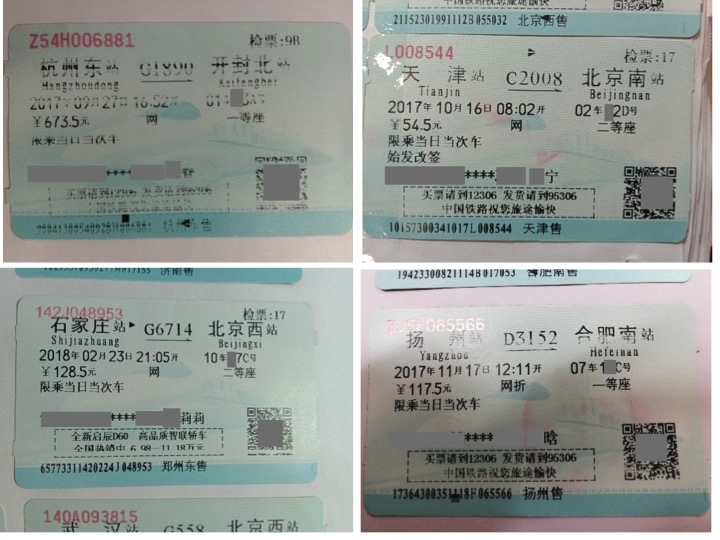

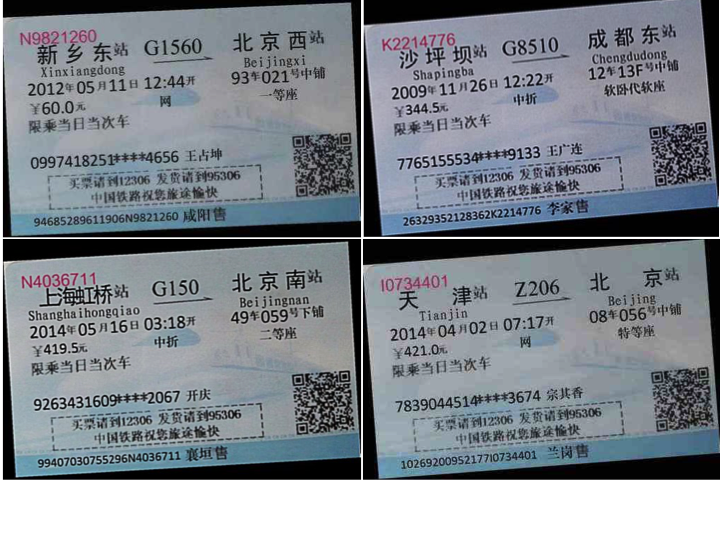

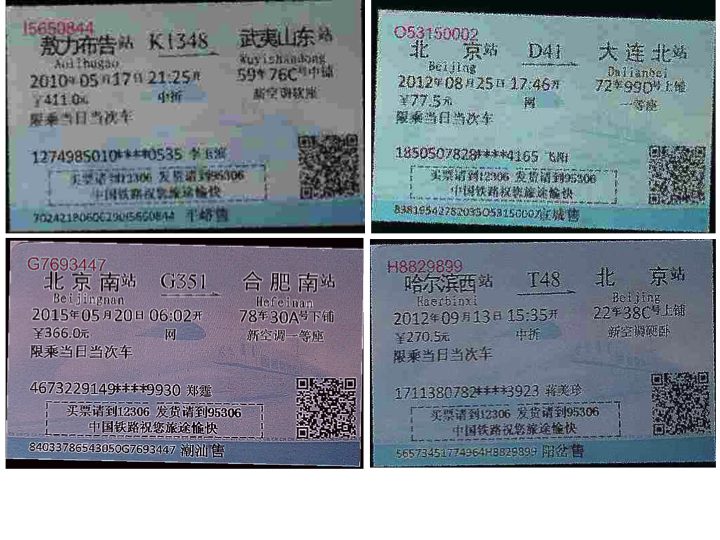

| train ticket | 300k synth + 1.9 real | 13G | dataset_trainticket.tar |

| passport | 100k synth | 5.8G | dataset_passport.tar |

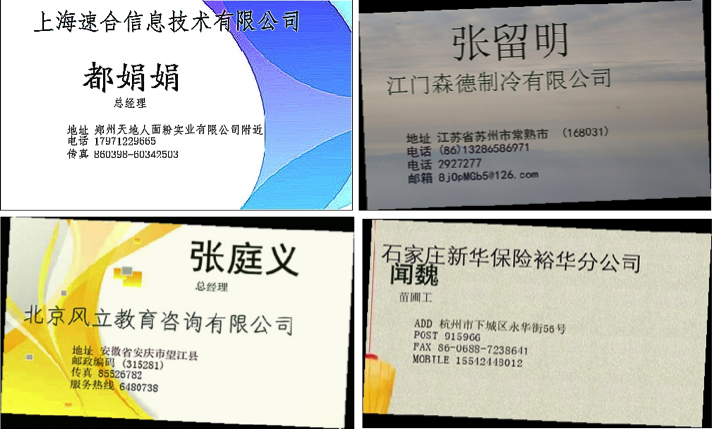

| business card | 200k synth | 19G | dataset_business.tar.0 dataset_business.tar.1 dataset_business.tar.2 dataset_business.tar.3 |
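The business card set is listed as four files, `dataset_business.tar.0` to `dataset_business.tar.3`. The following is a minimal sketch for reassembling and unpacking it, assuming the parts are plain byte-wise pieces of a single tar archive (for example, produced by `split`); this README does not state how they were created, so verify this before relying on it. The helper names `join_parts` and `extract` are hypothetical and not part of this repository.

```python
import shutil
import tarfile
from pathlib import Path


def join_parts(parts, out_path):
    """Concatenate archive parts, in the order given, into one file.

    Assumption: the parts are raw byte slices of a single tar archive.
    """
    with open(out_path, "wb") as out:
        for part in parts:
            with open(part, "rb") as src:
                shutil.copyfileobj(src, out)
    return Path(out_path)


def extract(archive, dest):
    """Extract a tar archive into dest and return its member names."""
    with tarfile.open(archive) as tar:
        names = tar.getnames()
        tar.extractall(dest)
    return names


# Example usage (file names taken from the table above):
#   parts = [f"dataset_business.tar.{i}" for i in range(4)]
#   joined = join_parts(parts, "dataset_business.tar")
#   extract(joined, "dataset_business")
```

The single-file archives (`dataset_trainticket.tar`, `dataset_passport.tar`) can be passed to `extract` directly. Joining needs roughly the archive size again in free disk space, about 19G for the business card set.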

Todo:

- [A large amount of training data]
  - Use CycleGAN or domain adaptation to synthesize data for training EATEN.
  - Introduce STR datasets to EATEN.
- [Generalization to complex scenes]
  - Add bounding box annotations of ToIs to EATEN, as in the ICCV 2019 oral paper "Towards Unconstrained End-to-End Text Spotting".
- [Engineering]
  - Merge several decoders into one.
  - Parallel decoding.