In this code pattern you will create music based on the movement of your arms in front of a webcam.

It is based on the Veremin, but modified to use the Human Pose Estimator model from the Model Asset eXchange (MAX). The Human Pose Estimator model is trained to detect humans and their poses in a given image, and it has been converted to the TensorFlow.js web-friendly format.

The web application streams video from your web camera and uses the Human Pose Estimator model to predict the location of your wrists within the video. The application takes the predictions and converts them either to tones in the browser or to MIDI values, which are sent to a connected MIDI device.

Your browser must allow access to the webcam and support the Web Audio API. Optionally, to integrate with a MIDI device, the browser must also support the Web MIDI API (e.g., Chrome version 43 or later).

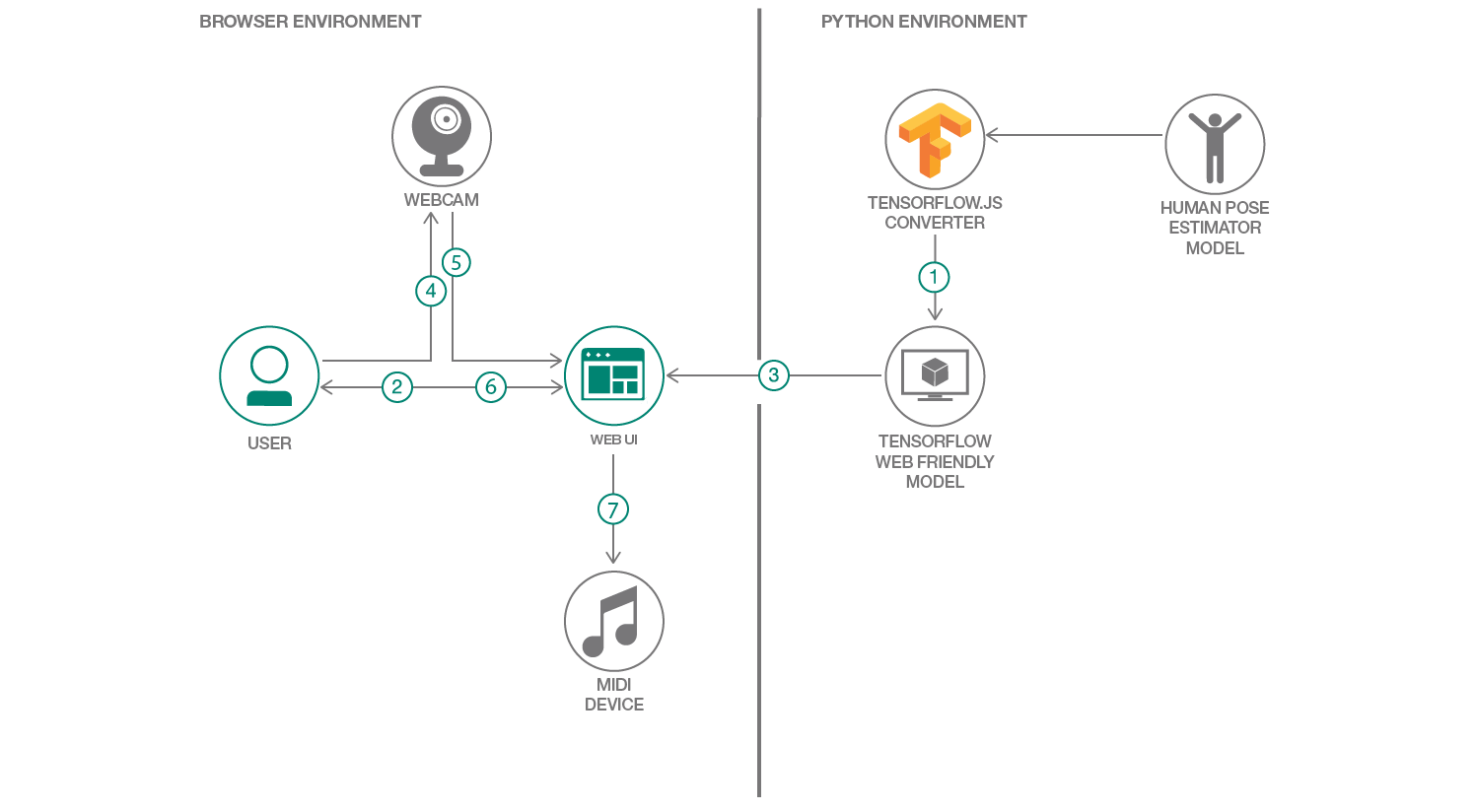

- Human Pose Estimator model is converted to the TensorFlow.js web format using the TensorFlow.js converter.

- User launches the web application.

- Web application loads the TensorFlow.js model.

- User stands in front of webcam and moves arms.

- Web application captures a video frame and sends it to the TensorFlow.js model. Model returns a prediction of the estimated poses in the frame.

- Web application processes the prediction and overlays the skeleton of the estimated pose on the Web UI.

- Web application converts the position of the user’s wrists from the estimated pose to a MIDI message, and the message is sent to a connected MIDI device or sound is played in the browser.
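The processing step in the flow above has to turn the model's raw output into wrist coordinates. OpenPose-style models typically output a confidence heatmap per keypoint alongside part affinity fields. As a minimal sketch, assume the heatmap for one keypoint (for example, a wrist) has already been extracted as a 2D grid of scores; the `findKeypoint` function, the `Keypoint` type, and the 0.1 confidence threshold are hypothetical, and the app's actual post-processing may differ.

```typescript
// Hypothetical result type for illustration; not part of the Veremax code base.
interface Keypoint {
  x: number; // normalized horizontal position, 0 (left) to 1 (right)
  y: number; // normalized vertical position, 0 (top) to 1 (bottom)
  score: number; // peak confidence value from the heatmap
}

// Find the highest-scoring cell in a keypoint heatmap (rows x cols) and
// return the cell center in normalized image coordinates, or null when the
// peak is below the assumed confidence threshold.
function findKeypoint(heatmap: number[][], minScore = 0.1): Keypoint | null {
  let bestRow = -1;
  let bestCol = -1;
  let bestScore = -Infinity;
  for (let r = 0; r < heatmap.length; r++) {
    for (let c = 0; c < heatmap[r].length; c++) {
      if (heatmap[r][c] > bestScore) {
        bestScore = heatmap[r][c];
        bestRow = r;
        bestCol = c;
      }
    }
  }
  if (bestRow < 0 || bestScore < minScore) {
    return null; // no confident detection, e.g. the wrist is out of frame
  }
  const rows = heatmap.length;
  const cols = heatmap[bestRow].length;
  return {
    x: (bestCol + 0.5) / cols,
    y: (bestRow + 0.5) / rows,
    score: bestScore,
  };
}
```

Normalized coordinates make it easy to scale the result back to the video size, since the heatmaps are usually smaller than the input frame. Taking the single highest peak only works with one person in view; multi-person parsing also uses the part affinity fields, which this sketch ignores.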

- MAX Human Pose Estimator: A machine learning model which detects human poses

- TensorFlow.js: A JavaScript library for training and deploying ML models in the browser and on Node.js

- Web MIDI API: An API supporting the MIDI protocol, enabling web applications to enumerate and select MIDI input and output devices on the client system and send and receive MIDI messages

- Web Audio API: A high-level Web API for processing and synthesizing audio in web applications

- Tone.js: A framework for creating interactive music in the browser

To try this application without installing anything, simply visit ibm.biz/veremax in a web browser that has access to a web camera and support for the Web Audio API.

There are two ways to run your own Veremax: deploy it to IBM Cloud, or run it locally.

Prerequisites for deploying to IBM Cloud:

- Get an IBM Cloud account

- Install/Update the IBM Cloud CLI

- Configure and log in to IBM Cloud using the CLI

To deploy to the IBM Cloud, from a terminal run:

- Clone the `max-human-pose-estimator-tfjs` repo locally:

  `$ git clone https://github.com/IBM/max-human-pose-estimator-tfjs`

- Change to the directory of the cloned repo:

  `$ cd max-human-pose-estimator-tfjs`

- Log in to your IBM Cloud account:

  `$ ibmcloud login`

- Target a Cloud Foundry org and space:

  `$ ibmcloud target --cf`

- Push the app to IBM Cloud:

  `$ ibmcloud cf push`

  Deploying can take a few minutes.

- View the app with a browser at the URL listed in the output.

Note: Depending on your browser, you may need to access the app using the `https` protocol instead of `http`.

To run the app locally:

- From a terminal, clone the `max-human-pose-estimator-tfjs` repo locally:

  `$ git clone https://github.com/IBM/max-human-pose-estimator-tfjs`

- Point your web server to the cloned repo directory (`/max-human-pose-estimator-tfjs`). For example:

  - using the Web Server for Chrome extension (available from the Chrome Web Store)

    - Go to your Chrome browser's Apps page (chrome://apps)

    - Click on the Web Server

    - From the Web Server, click CHOOSE FOLDER and browse to the cloned repo directory

    - Start the Web Server

    - Make note of the Web Server URL(s) (e.g., http://127.0.0.1:8887)

  - using the Python HTTP server module

    - From a terminal shell, go to the cloned repo directory

    - Depending on your Python version, enter one of the following commands:

      - Python 2.x: `python -m SimpleHTTPServer 8080`

      - Python 3.x: `python -m http.server 8080`

    - Once started, the Web Server URL should be http://127.0.0.1:8080

- From your browser, go to the Web Server's URL

For best results, use the app in a well-lit area with good contrast between you and the background, and stand back from the webcam so that at least half of your body appears in the video.

At a minimum, your browser must allow access to the web camera and support the Web Audio API.

In addition, if your browser supports the Web MIDI API, you can connect a MIDI synthesizer to your computer. If you do not have a MIDI synthesizer, you can download and run a software synthesizer such as SimpleSynth.

If your browser does not support the Web MIDI API or no (hardware or software) synthesizer is detected, the app defaults to using the Web Audio API to generate tones in the browser.
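The fallback described above comes down to a small decision, plus converting a MIDI note number to a frequency when tones are generated with the Web Audio API instead of a synthesizer. A minimal sketch: the `AudioCapabilities` flags and both function names are hypothetical (in a browser you might derive the flags from `navigator.requestMIDIAccess` and the size of `MIDIAccess.outputs`), and the frequency formula is the standard equal-temperament mapping with A4 (MIDI note 69) at 440 Hz.

```typescript
type OutputMode = "midi" | "webaudio";

// Hypothetical summary of what the browser and system provide.
interface AudioCapabilities {
  hasWebMidi: boolean; // e.g. typeof navigator.requestMIDIAccess === "function"
  midiOutputCount: number; // e.g. midiAccess.outputs.size in a browser
}

// Prefer a connected MIDI synthesizer; otherwise fall back to Web Audio tones.
function chooseOutputMode(caps: AudioCapabilities): OutputMode {
  return caps.hasWebMidi && caps.midiOutputCount > 0 ? "midi" : "webaudio";
}

// Equal-tempered frequency in hertz of a MIDI note number (A4 = 69 = 440 Hz).
function midiNoteToFrequency(note: number): number {
  return 440 * Math.pow(2, (note - 69) / 12);
}
```

Each semitone multiplies the frequency by 2^(1/12), so note 81 (A5) comes out at exactly 880 Hz. Tone.js synths accept a frequency in hertz, so a value like this could be handed to a method such as `triggerAttackRelease`; whether the app does it that way is an assumption.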

Open your browser and go to the app URL. Depending on your browser, you may need to access the app using the `https` protocol instead of `http`. You may also have to accept the browser's prompt to allow access to the web camera. Once access is allowed, the Human Pose Estimator model is loaded.
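The `https` requirement comes from browsers exposing webcam access (`getUserMedia`) only in secure contexts. Pages served from `localhost` or `127.0.0.1` over plain `http` are generally treated as secure as well, which is why the local web server URLs above work. In a browser you would simply read `window.isSecureContext`; the hypothetical helper below approximates that rule from a URL string so it can run outside a browser, and it is a simplification of the W3C Secure Contexts rules rather than a full implementation.

```typescript
// Hypothetical helper approximating whether a page URL is a secure context.
// Simplified: real browsers apply the full W3C Secure Contexts algorithm.
function isLikelySecureContext(pageUrl: string): boolean {
  const url = new URL(pageUrl);
  if (url.protocol === "https:") {
    return true;
  }
  // Loopback hosts are treated as potentially trustworthy even over http.
  const host = url.hostname;
  return (
    host === "localhost" ||
    host.endsWith(".localhost") ||
    host === "[::1]" ||
    /^127(\.\d{1,3}){3}$/.test(host)
  );
}
```

For example, `http://127.0.0.1:8080` passes this check, while the same app opened from another machine at `http://192.168.1.20:8080` does not, which is a common reason the camera prompt never appears.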

After the model is loaded, the video stream from the web camera appears with an overlay of the skeletal information detected by the model. The overlay also includes two adjacent zones (boxes). When each of your wrists is detected within its zone, you should hear some sound.

- Move your right hand/arm up and down (in the right zone) to generate different notes

- Move your left hand/arm left and right (in the left zone) to adjust the velocity of the note.
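The two zones and hand movements above can be sketched as a pure function from wrist positions to a note and a velocity, followed by the three-byte MIDI note-on message that would be sent to a synthesizer. This is an illustration under assumptions, not the app's actual code: the zone rectangles, the note range (MIDI notes 48 to 84), the linear mappings, and all function names are hypothetical. Coordinates are normalized to 0..1 with y increasing downward.

```typescript
// Normalized frame coordinates: 0..1 on both axes, y = 0 at the top.
interface Point {
  x: number;
  y: number;
}

// Axis-aligned zone in normalized coordinates (hypothetical layout).
interface Zone {
  left: number;
  top: number;
  right: number;
  bottom: number;
}

// Assumed playable range: MIDI notes 48 (C3) to 84 (C6).
const LOWEST_NOTE = 48;
const HIGHEST_NOTE = 84;

function inZone(p: Point, z: Zone): boolean {
  return p.x >= z.left && p.x <= z.right && p.y >= z.top && p.y <= z.bottom;
}

// Position of `value` between `start` and `end`, clamped to 0..1.
function fraction(value: number, start: number, end: number): number {
  return Math.min(1, Math.max(0, (value - start) / (end - start)));
}

// Right wrist height picks the note (higher hand, higher note); left wrist
// horizontal position picks the velocity. Returns null unless both wrists
// are inside their zones.
function wristsToNote(
  rightWrist: Point,
  leftWrist: Point,
  rightZone: Zone,
  leftZone: Zone,
): { note: number; velocity: number } | null {
  if (!inZone(rightWrist, rightZone) || !inZone(leftWrist, leftZone)) {
    return null;
  }
  const height = 1 - fraction(rightWrist.y, rightZone.top, rightZone.bottom);
  const note = Math.round(LOWEST_NOTE + height * (HIGHEST_NOTE - LOWEST_NOTE));
  const across = fraction(leftWrist.x, leftZone.left, leftZone.right);
  // Velocity 0 in a note-on message means "note off", so keep it in 1..127.
  const velocity = Math.max(1, Math.round(across * 127));
  return { note, velocity };
}

// Three-byte MIDI note-on message: status 0x90 | channel (0-15), note, velocity.
function noteOnMessage(channel: number, note: number, velocity: number): number[] {
  return [0x90 | (channel & 0x0f), note & 0x7f, velocity & 0x7f];
}
```

In a browser, the array returned by `noteOnMessage` is the kind of data a Web MIDI `MIDIOutput.send()` call accepts. Clamping the wrist position to its zone keeps the note and velocity inside valid MIDI ranges even when a keypoint sits right on a zone edge.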

Click on the Controls icon (top right) to open the control panel. In the control panel you are able to change MIDI devices (if more than one is connected), configure post-processing settings, set what is shown in the overlay, and configure additional options.

The converted MAX Human Pose Estimator model can be found in the model directory. To convert the model to the TensorFlow.js web-friendly format yourself, follow the steps below.

Note: The Human Pose Estimator model is a frozen graph. The current version of the `tensorflowjs_converter` no longer supports frozen graph models. To convert frozen graphs, it is recommended to use an older version of the TensorFlow.js converter (0.8.0) and then run the `pb2json` script from TensorFlow.js converter 1.x.

- Install the tensorflowjs 0.8.0 Python module

- Download and extract the pre-trained Human Pose Estimator model

- From a terminal, run the `tensorflowjs_converter`:

  `tensorflowjs_converter --input_format=tf_frozen_model --output_node_names='Openpose/concat_stage7' {model_path} {pb_model_dir}`

  where

  - {model_path} is the path to the extracted model

  - {pb_model_dir} is the directory to save the converted model artifacts

  - `output_node_names` (`Openpose/concat_stage7`) is obtained by inspecting the model's graph. One useful and easy-to-use visual tool for viewing machine learning models is Netron.

- Clone and install the `tfjs-converter` TypeScript scripts:

  `$ git clone git@github.com:tensorflow/tfjs-converter.git`

  `$ cd tfjs-converter`

  `$ yarn`

- Run the `pb2json` script:

  `yarn ts-node tools/pb2json_converter.ts {pb_model_dir}/ {json_model_dir}/`

  where

  - {pb_model_dir} is the directory containing the converted model artifacts from the `tensorflowjs_converter` step

  - {json_model_dir} is the directory to save the updated model artifacts

When the conversion is complete, the contents of {json_model_dir} will be the web-friendly format of the Human Pose Estimator model for TensorFlow.js 1.x, and the contents of {pb_model_dir} will be the web-friendly format for TensorFlow.js 0.15.x.
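Before serving the TensorFlow.js 1.x artifacts, it can help to confirm that every weight shard referenced by `model.json` actually exists in {json_model_dir}, since a missing `.bin` file only shows up as a failed request when the browser loads the model. A minimal sketch, assuming the TensorFlow.js 1.x `model.json` layout with a top-level `weightsManifest` array whose groups list shard file names under `paths`; the `missingWeightShards` function is hypothetical.

```typescript
// Minimal subset of the TensorFlow.js model.json layout used here.
interface WeightsGroup {
  paths: string[]; // shard file names, relative to model.json
}

interface ModelJson {
  weightsManifest?: WeightsGroup[];
}

// Return the shard files referenced by model.json that are not in the list
// of files actually present in the output directory.
function missingWeightShards(modelJsonText: string, presentFiles: string[]): string[] {
  const model = JSON.parse(modelJsonText) as ModelJson;
  const present = new Set(presentFiles);
  const missing: string[] = [];
  for (const group of model.weightsManifest ?? []) {
    for (const shard of group.paths) {
      if (!present.has(shard)) {
        missing.push(shard);
      }
    }
  }
  return missing;
}
```

In Node, the inputs could come from `fs.readFileSync(path.join(dir, "model.json"), "utf8")` and `fs.readdirSync(dir)`. The 0.15.x artifacts in {pb_model_dir} use a different layout, so this check applies only to the 1.x output.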

- Bring Machine Learning to the Browser With TensorFlow.js - Part I, Part II, Part III

- Model Asset eXchange

- IBM Cloud

- Getting started with the IBM Cloud CLI

- Prepare the app for deployment - IBM Cloud

- Playing with MIDI in JavaScript

- Introduction to Web Audio API

- Human pose estimation using OpenPose with TensorFlow - Part I, Part II

This code pattern is licensed under the Apache Software License, Version 2. Separate third party code objects invoked within this code pattern are licensed by their respective providers pursuant to their own separate licenses. Contributions are subject to the Developer Certificate of Origin, Version 1.1 (DCO) and the Apache Software License, Version 2.