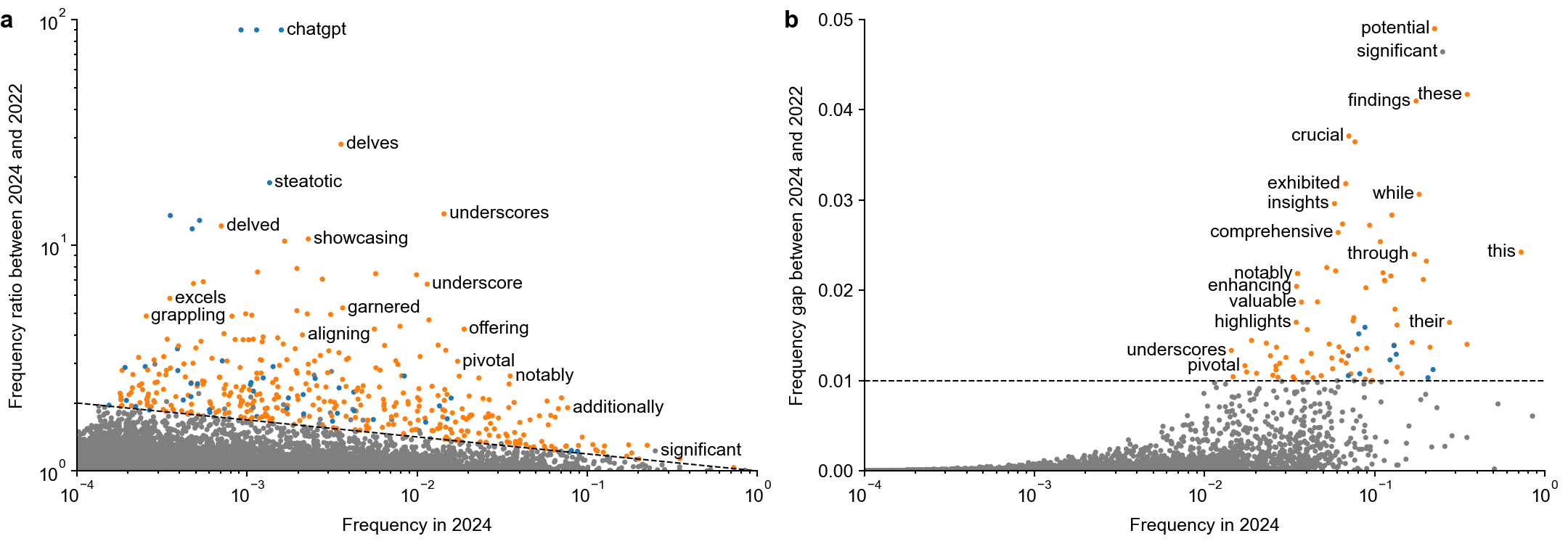

Analysis code for the paper Kobak et al. 2024, Delving into ChatGPT usage in academic writing through excess vocabulary.

How to cite:

    @article{kobak2024delving,
      title={Delving into {ChatGPT} usage in academic writing through excess vocabulary},
      author={Kobak, Dmitry and Gonz\'alez-M\'arquez, Rita and Horv\'at, Em\H{o}ke-\'Agnes and Lause, Jan},
      journal={arXiv preprint arXiv:2406.07016},
      year={2024}
    }

All excess words that we identified from 2013 to 2024 are listed in results/excess_words.csv together with our annotations.

- All excess frequency analysis and all figures shown in the paper (and provided in the `figures/` folder) are produced by the `scripts/figures.ipynb` Python notebook. This notebook takes as input a Pickle file with yearly counts of each word (which is too large to be provided here) and several other files with yearly counts of word groups (`yearly-counts*`). The notebook only takes a minute to run.
- These yearly word count files are produced by the `scripts/preprocess-and-count.py` script, which takes a few hours to run and needs a lot of memory. This script takes CSV files with abstract texts as input, cleans the abstracts via regular expressions (~1 hour), then runs `sklearn.feature_extraction.text.CountVectorizer(binary=True).fit_transform(df.AbstractText.values)` (~0.5 hours), and then does yearly aggregation.
- The inputs to the `scripts/preprocess-and-count.py` script are three files:
  - `pubmed_landscape_data_2024_v2.zip` and `pubmed_landscape_abstracts_2024.zip`, containing PubMed data from the end-of-2023 baseline, available at the repository associated with our Patterns paper "The landscape of biomedical research";
  - and `pubmed_daily_updates_2024_v2.zip`, containing PubMed data from January to June 2024.
- This last file is constructed by the `scripts/process-daily-updates.ipynb` notebook, which takes as input all daily XML files from https://ftp.ncbi.nlm.nih.gov/pubmed/updatefiles/ until 2024-06-30 (from `pubmed24n1220.xml.gz` to `pubmed24n1456.xml.gz`). These files have to be downloaded from the link above beforehand, unzipped, and stored in a directory. The notebook then reads them from that directory, combines them, and saves the result as a single dataframe (`pubmed_daily_updates_2024_v2.zip`).
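
The counting step described above (binary per-abstract word counts followed by yearly aggregation) can be illustrated with a minimal sketch. This is not the code from `scripts/preprocess-and-count.py`: it is a pure-Python stand-in for `CountVectorizer(binary=True)` that only shows the idea on a toy scale. The function names and the toy abstracts are hypothetical and invented for illustration; the tokenization assumes scikit-learn's default settings (lowercasing, tokens of two or more word characters).

```python
import re
from collections import Counter, defaultdict

# Same default token pattern as scikit-learn's CountVectorizer:
# tokens of two or more word characters (text is lowercased first).
TOKEN_PATTERN = re.compile(r"(?u)\b\w\w+\b")


def yearly_document_frequencies(records):
    """Count, for each year, how many abstracts contain each word.

    `records` is an iterable of (year, abstract_text) pairs. Each word is
    counted at most once per abstract, which is the effect of binary=True.
    """
    word_counts = defaultdict(Counter)  # year -> word -> number of abstracts
    abstracts_per_year = Counter()      # year -> total number of abstracts
    for year, text in records:
        unique_words = set(TOKEN_PATTERN.findall(text.lower()))
        word_counts[year].update(unique_words)
        abstracts_per_year[year] += 1
    return word_counts, abstracts_per_year


def yearly_frequency(word, word_counts, abstracts_per_year):
    """Fraction of abstracts in each year that contain `word`."""
    return {
        year: word_counts[year][word] / total
        for year, total in sorted(abstracts_per_year.items())
    }


# Toy data, invented for illustration only (not from PubMed).
toy_records = [
    (2022, "We study protein folding. Folding is important."),
    (2022, "A study of gene expression."),
    (2024, "We delve into protein folding and delve deeper."),
    (2024, "These findings underscore the role of gene expression."),
]

word_counts, abstracts_per_year = yearly_document_frequencies(toy_records)
delve_frequency = yearly_frequency("delve", word_counts, abstracts_per_year)
folding_frequency = yearly_frequency("folding", word_counts, abstracts_per_year)
```

Because counts are binary per abstract, a word repeated within one abstract contributes only once, so each yearly value is the share of abstracts that use the word at least once. On the real data, a sparse matrix from `CountVectorizer` is far more memory-efficient than Python dictionaries; the sketch trades that efficiency for readability.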