Set Up Kubernetes the Hard Way on AWS

This guide is intended for readers who want to understand how the pieces of Kubernetes fit together on AWS before going to production.

In this tutorial, I deployed the infrastructure as code on AWS using AWS CloudFormation. I configured all the needed packages using Ansible for Configuration as Code.

- kubernetes v1.23.9

- containerd v1.6.8

- coredns v1.9.3

- cni v1.1.1

- etcd v3.4.20

- weavenetwork 1.23

- All the provisioned instances run the same OS

ubuntu@ip-10-192-10-110:~$ cat /etc/os-release

NAME="Ubuntu"

VERSION="20.04.4 LTS (Focal Fossa)"

ID=ubuntu

ID_LIKE=debian

PRETTY_NAME="Ubuntu 20.04.4 LTS"

VERSION_ID="20.04"

HOME_URL="https://www.ubuntu.com/"

SUPPORT_URL="https://help.ubuntu.com/"

BUG_REPORT_URL="https://bugs.launchpad.net/ubuntu/"

PRIVACY_POLICY_URL="https://www.ubuntu.com/legal/terms-and-policies/privacy-policy"

VERSION_CODENAME=focal

UBUNTU_CODENAME=focal

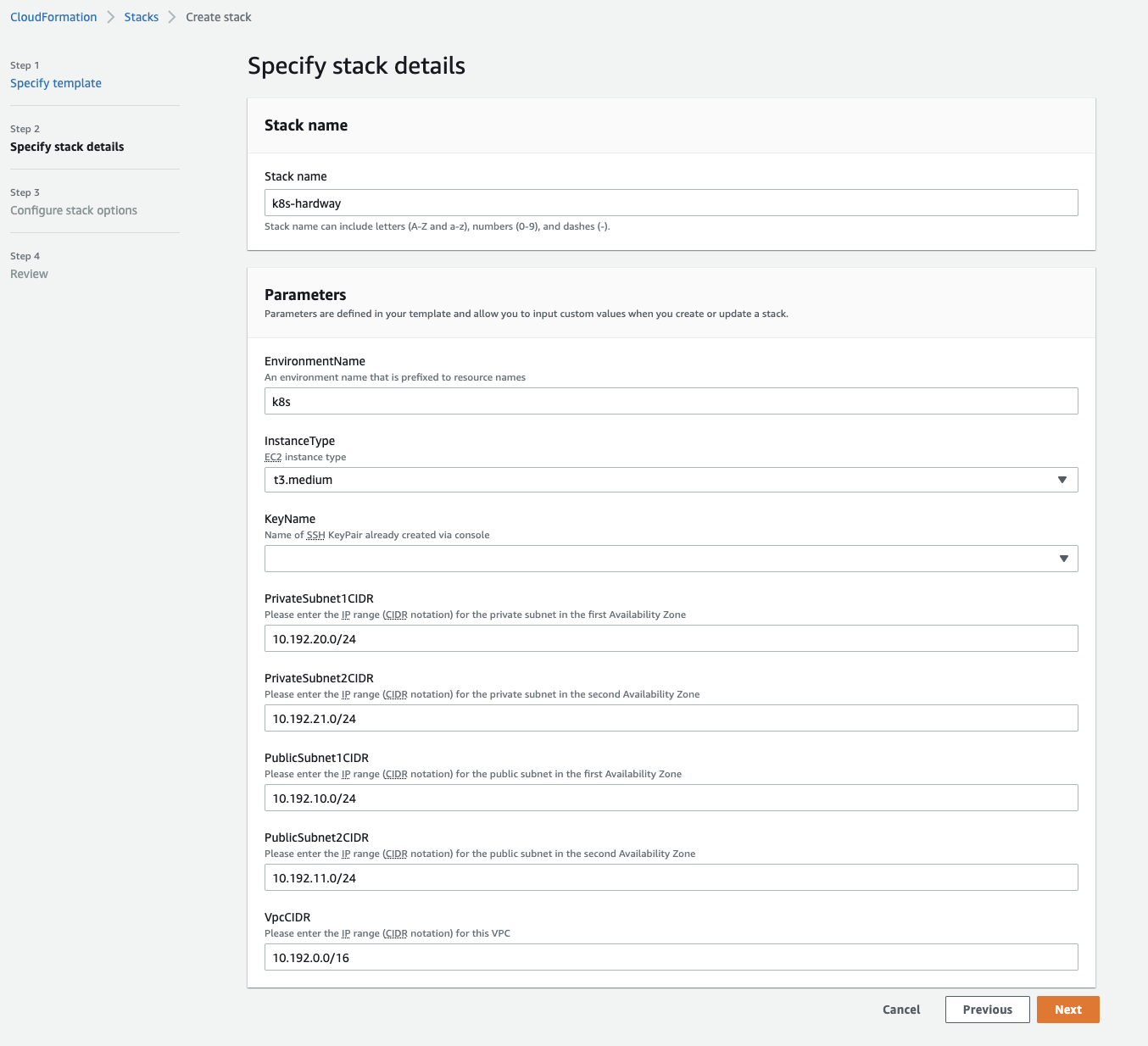

- Go to AWS Console > Choose Region (e.g. eu-west-1) > CloudFormation > Create Stack

- Use the CloudFormation YAML template in infrastructure/k8s_aws_instances.yml

- See image below:

- Define your global variables

export LOCAL_SSH_KEY_FILE="${HOME}/.ssh/key.pem"

export REGION="eu-west-1"
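Note that the shell does not expand a `~` inside double quotes, so a key path written as "~/.ssh/key.pem" is stored literally. A minimal sketch of a helper that normalizes such a path is shown below; `normalize_key_path` is a hypothetical name used only for illustration, not something shipped with this repository.

```shell
#!/usr/bin/env bash
# normalize_key_path PATH
# Prints PATH with a leading literal "~" replaced by $HOME.
# Hypothetical helper for illustration only.
normalize_key_path() {
  local path="$1"
  case "$path" in
    "~/"*) path=${HOME}/${path#"~/"} ;;  # "~/x" -> "$HOME/x"
    "~")   path=${HOME} ;;               # bare "~" -> "$HOME"
  esac
  printf '%s\n' "$path"
}

# Example:
#   export LOCAL_SSH_KEY_FILE="$(normalize_key_path '~/.ssh/key.pem')"
```

Paths that do not start with `~` are printed unchanged, so the helper can be applied to any value.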

- Confirm the instances created and the Public IP of the Ansible controller server

aws ec2 describe-instances --filters "Name=tag:project,Values=k8s-hardway" --query 'Reservations[*].Instances[*].[Placement.AvailabilityZone, State.Name, InstanceId, PrivateIpAddress, PublicIpAddress, [Tags[?Key==`Name`].Value] [0][0]]' --output text --region ${REGION}

- Define your Ansible server environment variable

export ANSIBLE_SERVER_PUBLIC_IP=""
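Instead of copying the IP by hand, the value can be picked out of the describe-instances output. The sketch below assumes the tab-separated column order produced by the query above (availability zone, state, instance ID, private IP, public IP, Name tag). The function name `pick_public_ip` and the Name value `ansible-controller` are assumptions; use whatever Name tag your CloudFormation stack actually assigns.

```shell
#!/usr/bin/env bash
# pick_public_ip NAME
# Reads describe-instances rows (tab-separated) on stdin and prints the
# public IP (column 5) of the first running instance whose Name tag
# (column 6) equals NAME. Prints nothing if there is no match.
pick_public_ip() {
  awk -F '\t' -v name="$1" \
    '$2 == "running" && $6 == name { print $5; exit }'
}

# Example with the real CLI (the Name value is an assumption):
#   export ANSIBLE_SERVER_PUBLIC_IP="$(aws ec2 describe-instances \
#     <same arguments as above> | pick_public_ip "ansible-controller")"
```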

- You can use SSH or AWS SSM to access the Ansible Controller Server or any other nodes that were created with the CloudFormation Template

- Connecting via AWS SSM e.g.

aws ssm start-session --target <instance-id> --region ${REGION}

- Transfer your SSH key to the Ansible Server. It will be needed by the Ansible inventory file.

echo "scp -i ${LOCAL_SSH_KEY_FILE} ${LOCAL_SSH_KEY_FILE} ubuntu@${ANSIBLE_SERVER_PUBLIC_IP}:~/.ssh/"

Inspect and execute the output.

- To create the inventory file, edit inventory.sh, update the SSH_KEY_FILE and REGION variables accordingly, and run the script

vi deployments/inventory.sh

chmod +x deployments/inventory.sh

bash deployments/inventory.sh

- Transfer all playbooks in deployments/playbooks to the Ansible Server

cd kubernetes-the-hard-way-on-aws/deployments

scp -i ${LOCAL_SSH_KEY_FILE} *.yml *.yaml ../inventory *.cfg ubuntu@${ANSIBLE_SERVER_PUBLIC_IP}:~

scp -i ${LOCAL_SSH_KEY_FILE} ../easy_script.sh ubuntu@${ANSIBLE_SERVER_PUBLIC_IP}:~

- Connect to the Ansible Server

ssh -i ${LOCAL_SSH_KEY_FILE} ubuntu@${ANSIBLE_SERVER_PUBLIC_IP}

chmod +x easy_script.sh

LOCAL_SSH_KEY_FILE="${HOME}/.ssh/key.pem" # your ssh key

chmod 400 "${LOCAL_SSH_KEY_FILE}" # a quoted "~" is not expanded, so use the variable

- After building the inventory file, test if all hosts are reachable

- List all hosts to confirm that the inventory file is properly configured

ansible all --list-hosts -i inventory

hosts (5):

controller1

controller2

worker1

worker2

controller_api_server_lb

- Test ping on all the hosts

ansible -i inventory k8s -m ping

worker1 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3"

},

"changed": false,

"ping": "pong"

}

controller_api_server_lb | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3"

},

"changed": false,

"ping": "pong"

}

controller2 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3"

},

"changed": false,

"ping": "pong"

}

controller1 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3"

},

"changed": false,

"ping": "pong"

}

worker2 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3"

},

"changed": false,

"ping": "pong"

}

ubuntu@ip-10-192-10-137:~$
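With more hosts, scanning the ping output by eye gets tedious. A minimal sketch of a filter that prints only the hosts whose status is not SUCCESS is shown below. It assumes the default `host | STATUS => {` header lines shown above; `failed_hosts` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# failed_hosts
# Reads ad-hoc Ansible output on stdin and prints the name of every host
# whose status line is anything other than SUCCESS (for example
# UNREACHABLE! or FAILED!). Indented JSON lines are ignored.
failed_hosts() {
  awk '$2 == "|" && $4 == "=>" && $3 != "SUCCESS" { print $1 }'
}

# Example:
#   ansible -i inventory k8s -m ping | failed_hosts
```

Empty output means every host answered the ping.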

From the Ansible server, run the Ansible playbooks in the following order. For an easier one-click deployment option, see the instructions.

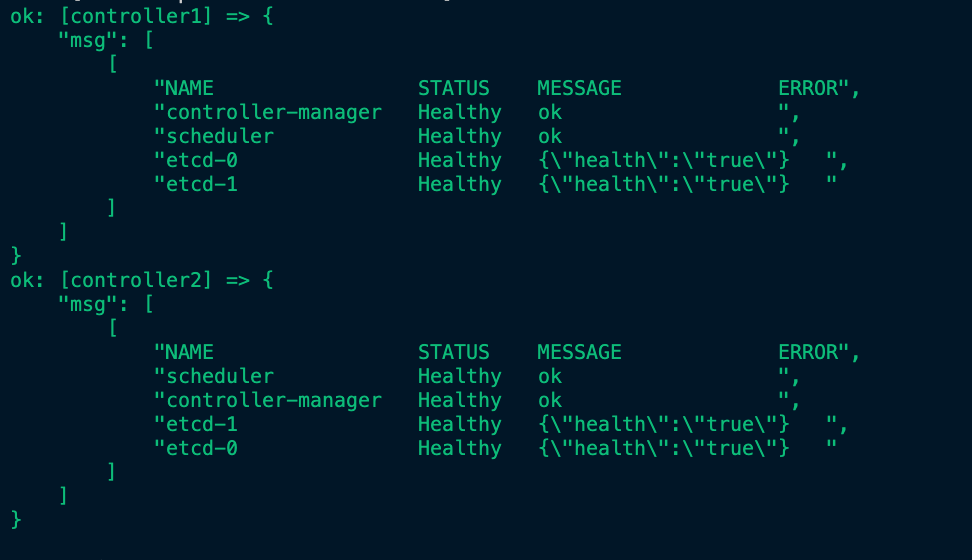

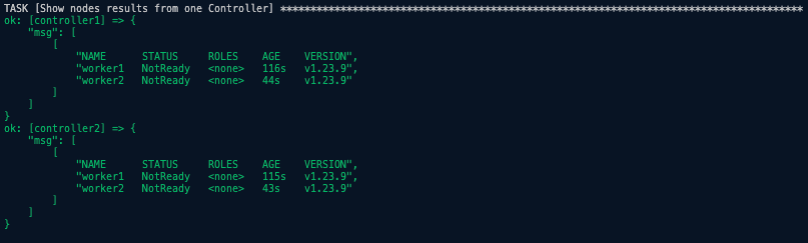

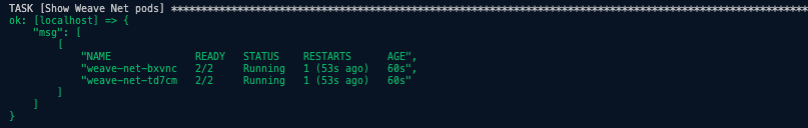

ansible-playbook -i inventory -v client_tools.yml

ansible-playbook -i inventory -v cert_vars.yml

cat variables.text >> env.yaml

ansible-playbook -i inventory -v create_ca_certs.yml

ansible-playbook -i inventory -v create_kubeconfigs.yml

ansible-playbook -i inventory -v distribute_k8s_files.yml

ansible-playbook -i inventory -v deploy_etcd_cluster.yml

ansible-playbook -i inventory -v deploy_api-server.yml

See API Server Bootstrap results below.

ansible-playbook -i inventory -v rbac_authorization.yml

ansible-playbook -i inventory -v deploy_nginx.yml

ansible-playbook -i inventory -v workernodes.yml

See Worker Nodes Bootstrap results below.

ansible-playbook -i inventory -v kubectl_remote.yml

ansible-playbook -i inventory -v deploy_weavenet.yml

See Weavenetwork pods results below.

- Setup coreDNS

ansible-playbook -i inventory -v smoke_test.yml
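Because each playbook depends on the ones before it, it helps to stop at the first failure rather than continue. The sketch below runs the same commands in the same order. `run_playbooks` is a hypothetical helper and is not the repository's easy_script.sh. The optional first argument exists only so the runner can be swapped out for a dry run.

```shell
#!/usr/bin/env bash
# run_playbooks [RUNNER]
# Runs the playbooks in the documented order and returns non-zero at the
# first failure. RUNNER defaults to ansible-playbook.
run_playbooks() {
  local runner="${1:-ansible-playbook}"
  local pb

  for pb in client_tools.yml cert_vars.yml; do
    "$runner" -i inventory -v "$pb" || { echo "failed: $pb" >&2; return 1; }
  done

  # Same append step as in the documented sequence.
  cat variables.text >> env.yaml || return 1

  # The CoreDNS step is left out because its playbook is not named here.
  for pb in create_ca_certs.yml create_kubeconfigs.yml \
            distribute_k8s_files.yml deploy_etcd_cluster.yml \
            deploy_api-server.yml rbac_authorization.yml deploy_nginx.yml \
            workernodes.yml kubectl_remote.yml deploy_weavenet.yml \
            smoke_test.yml; do
    "$runner" -i inventory -v "$pb" || { echo "failed: $pb" >&2; return 1; }
  done
}

# Example (from the directory that holds the playbooks and inventory):
#   run_playbooks
```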

Worker Nodes Bootstrap Results. Nodes are in NotReady state because we haven't configured networking.

Delete the AWS CloudFormation stack

aws cloudformation delete-stack --stack-name k8s-hardway --region ${REGION}

Check whether the AWS CloudFormation stack still exists to confirm deletion. No output means the stack is no longer listed in the CREATE_COMPLETE state.

aws cloudformation list-stacks --stack-status-filter CREATE_COMPLETE --region ${REGION} --query 'StackSummaries[*].{Name:StackName,Date:CreationTime,Status:StackStatus}' --output text | grep k8s-hardway
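A stack leaves the CREATE_COMPLETE state as soon as deletion starts, so the check above does not by itself show that deletion has finished. One alternative is the AWS CLI waiter `aws cloudformation wait stack-delete-complete`, which blocks until the stack is gone or the waiter gives up. The wrapper below is a minimal sketch; `wait_for_stack_deletion` is a hypothetical name, and the optional third argument only exists so the CLI can be replaced by a stub.

```shell
#!/usr/bin/env bash
# wait_for_stack_deletion STACK REGION [AWS_CMD]
# Blocks until CloudFormation reports the stack as deleted. Returns the
# exit status of the waiter. AWS_CMD defaults to aws.
wait_for_stack_deletion() {
  local stack="$1"
  local region="$2"
  local aws_cmd="${3:-aws}"
  "$aws_cmd" cloudformation wait stack-delete-complete \
    --stack-name "$stack" --region "$region"
}

# Example:
#   wait_for_stack_deletion k8s-hardway "${REGION}" && echo "stack deleted"
```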