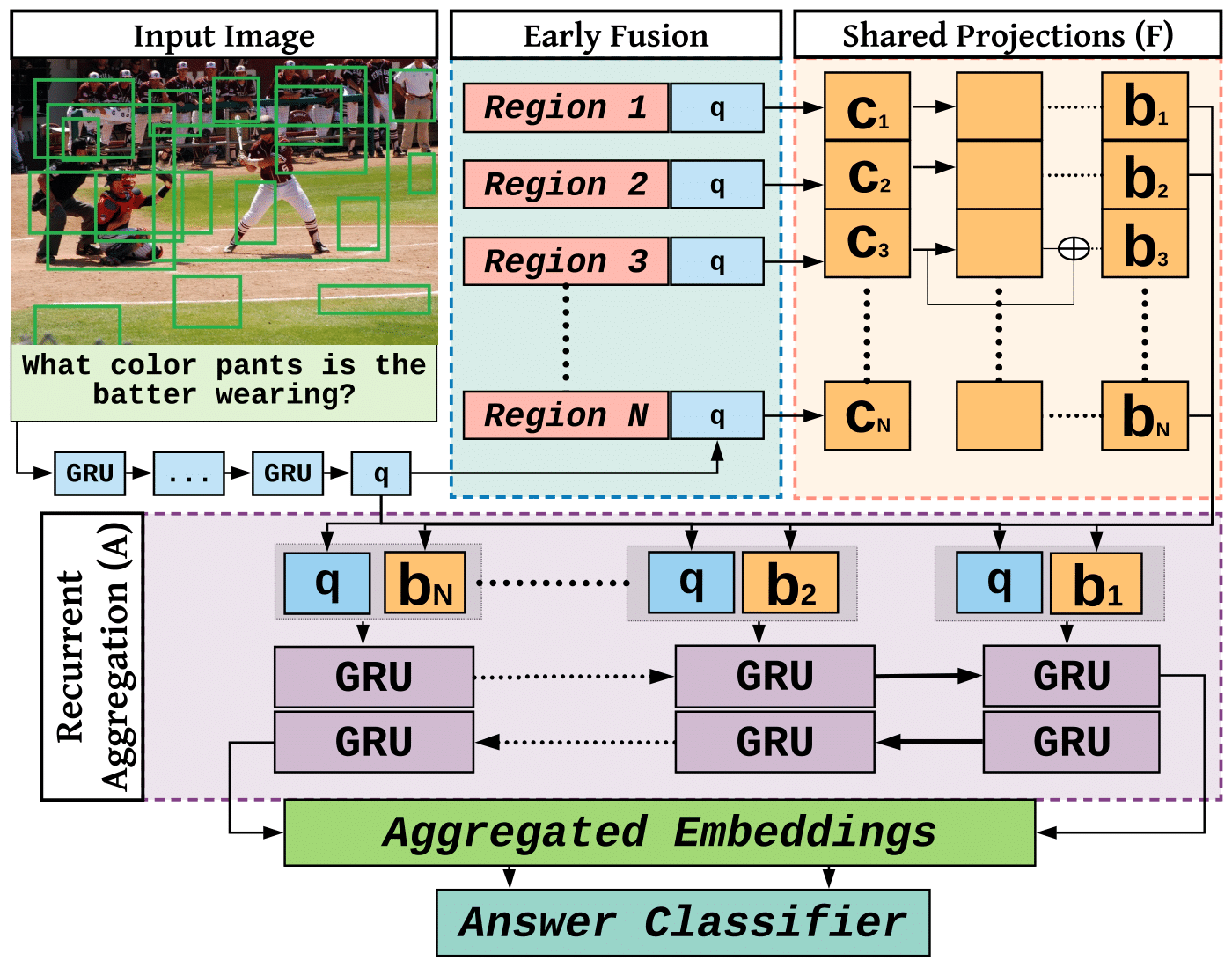

This is a PyTorch implementation of our Recurrent Aggregation of Multimodal Embeddings Network (RAMEN) from our CVPR 2019 paper.

I added support for RUBi, which can wrap any VQA model and mitigate question biases. To use it, first install `block.bootstrap` by following the instructions in this repo.

If you do not need to run RUBi, please comment out the following two lines from `run_network.py`:

from models.rubi import RUBiNet

from criterion.rubi_criterion import RUBiCriterion
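As an alternative to commenting the two imports out by hand, they could be made optional. The following is a minimal sketch and not code from this repository: `optional_import` is a hypothetical helper, and only the module and class names (`models.rubi.RUBiNet`, `criterion.rubi_criterion.RUBiCriterion`) are taken from the lines above.

```python
import importlib


def optional_import(module_name, attr_name):
    """Return module_name.attr_name, or None if the module cannot be imported.

    Hypothetical helper for illustration; it is not part of this repository.
    """
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        # Raised, for example, when a dependency such as block.bootstrap is missing.
        return None
    return getattr(module, attr_name, None)


# Both names are None when the RUBi modules or their dependencies are unavailable.
RUBiNet = optional_import("models.rubi", "RUBiNet")
RUBiCriterion = optional_import("criterion.rubi_criterion", "RUBiCriterion")
```

Code that uses RUBi would then need to check for `None` before constructing the model or the criterion.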

Let `ROOT` be the directory where all data, code and results will be placed. `DATA_ROOT=${ROOT}/${DATASET}`, where `DATASET` is one of the following values: `VQA2`, `CVQA` or `VQACP`.
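The directory convention above can be written down as a small helper. This is a minimal sketch: `data_root` and `VALID_DATASETS` are hypothetical names used for illustration, and `/path/to/root` is a placeholder for your own `ROOT`.

```python
from pathlib import Path

# Dataset names listed in this README.
VALID_DATASETS = ("VQA2", "CVQA", "VQACP")


def data_root(root, dataset):
    """Return ROOT/DATASET, rejecting dataset names this README does not list."""
    if dataset not in VALID_DATASETS:
        raise ValueError(f"DATASET must be one of {VALID_DATASETS}, got {dataset!r}")
    return Path(root) / dataset


# Placeholder ROOT; replace it with the directory you actually use.
print(data_root("/path/to/root", "VQA2"))
```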

- Download train+val features into `${DATA_ROOT}` using this link so that you have this file: `${DATA_ROOT}/trainval_36.zip`
- Extract the zip file, so that you have `${DATA_ROOT}/trainval_36/trainval_resnet101_faster_rcnn_genome_36.tsv`
- Download test features into `${DATA_ROOT}` using this link
- Extract the zip file, so that you have `${DATA_ROOT}/test2015_36/test2015_resnet101_faster_rcnn_genome_36.tsv`
- Execute the following script to extract the zip files and create hdf5 files: `./tsv_to_h5.sh`
  - Train and val features are extracted to `${DATA_ROOT}/features/trainval.hdf5`. The script will create softlinks `train.hdf5` and `val.hdf5`, pointing to `trainval.hdf5`.
  - Test features are extracted to `${DATA_ROOT}/features/test.hdf5`
- Create symbolic links for the `test_dev` split, which point to the `test` files:
  - `${DATA_ROOT}/features/test_dev.hdf5`, pointing to `${DATA_ROOT}/features/test.hdf5`
  - `${DATA_ROOT}/features/test_dev_ids_map.json`, pointing to `${DATA_ROOT}/features/test_ids_map.json`
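The two `test_dev` links described above could be created with a few lines of Python. This is a minimal sketch under stated assumptions: `link_test_dev` is a hypothetical helper, it uses relative link targets (equivalent to the absolute paths above as long as both files stay in the same directory), and it assumes a platform where symbolic links are permitted.

```python
import os
from pathlib import Path


def link_test_dev(features_dir):
    """Create test_dev.hdf5 and test_dev_ids_map.json as links to the test files.

    Hypothetical helper; features_dir corresponds to ${DATA_ROOT}/features.
    """
    features_dir = Path(features_dir)
    for suffix in (".hdf5", "_ids_map.json"):
        target = features_dir / f"test{suffix}"
        link = features_dir / f"test_dev{suffix}"
        if link.is_symlink() or link.exists():
            continue  # leave any existing file or link untouched
        # A relative target keeps the link valid if the directory is moved.
        os.symlink(target.name, link)
```

Calling `link_test_dev` with the path of your features directory is safe to repeat, since existing links are skipped.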

- Download questions and annotations from this link.
- Rename question and annotation files to `${SPLIT}_questions.json` and `${SPLIT}_annotations.json` and copy them to `$ROOT/VQA2/questions`.
  - You should have `train_questions.json`, `train_annotations.json`, `val_questions.json`, `val_annotations.json`, `test_questions.json` and `test_dev_questions.json` inside `$ROOT/VQA2/questions`
- Download glove.6B.zip, extract it and copy `glove.6B.300d.txt` to `${DATA_ROOT}/glove/`
- Execute `./ramen_VQA2.sh`. This will first preprocess all questions+annotations files and then start training the model.
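Before launching training, it may help to confirm that the files listed above are in place. The following is a minimal sketch: `missing_vqa2_files` is a hypothetical helper, and the file names are exactly the ones given in the steps above.

```python
from pathlib import Path

# Question and annotation files this README expects under ROOT/VQA2/questions.
EXPECTED_QUESTION_FILES = [
    "train_questions.json",
    "train_annotations.json",
    "val_questions.json",
    "val_annotations.json",
    "test_questions.json",
    "test_dev_questions.json",
]


def missing_vqa2_files(root):
    """Return the expected VQA2 input files that do not exist under root."""
    data_root = Path(root) / "VQA2"
    expected = [data_root / "questions" / name for name in EXPECTED_QUESTION_FILES]
    expected.append(data_root / "glove" / "glove.6B.300d.txt")
    return [path for path in expected if not path.is_file()]
```

An empty return value means every listed file was found; otherwise the returned paths show what still needs to be downloaded or renamed.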

We have provided a pre-trained FasterRCNN model and feature extraction script in a separate repository to extract bottom-up features for CLEVR.

Please refer to the README file of that repository for detailed instructions to extract the features.

- Download the question files from this link
- Copy all of the question files to `${ROOT}/CLEVR/questions`.
  - You should now have the following files: `CLEVR_train_questions.json`, `CLEVR_val_questions.json` inside `$ROOT/CLEVR/questions/`
- Download glove.6B.zip, extract it and copy `glove.6B.300d.txt` to `${ROOT}/CLEVR/glove/`
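For orientation, `glove.6B.300d.txt` is a plain-text file in which each line holds a word followed by its space-separated vector components. The sketch below shows one way such a file could be read; `load_glove` is a hypothetical helper and is not necessarily how this repository's preprocessing loads the embeddings.

```python
def load_glove(path, vocabulary=None):
    """Read GloVe text vectors into a dict mapping word -> list of floats.

    If vocabulary is given, only words contained in it are kept, which
    avoids holding all embeddings in memory.
    """
    vectors = {}
    with open(path, encoding="utf-8") as f:
        for line in f:
            parts = line.rstrip().split(" ")
            if len(parts) < 2:
                continue  # skip blank or malformed lines
            word, values = parts[0], parts[1:]
            if vocabulary is not None and word not in vocabulary:
                continue
            vectors[word] = [float(v) for v in values]
    return vectors
```

Passing the set of words that occur in the questions as `vocabulary` keeps the result small.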

- This script first converts the CLEVR files into VQA2-like format.

- Then it creates dictionaries for the dataset.

- Finally it starts the training.
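To make the first step concrete, here is a rough sketch of what converting CLEVR questions into a VQA2-like layout can look like. It is an illustration, not the repository's converter: `clevr_to_vqa2` is a hypothetical function, the input keys (`question_index`, `image_index`, `question`, `answer`) follow the public CLEVR question files, and the exact output fields written by the actual script may differ.

```python
def clevr_to_vqa2(clevr):
    """Split a loaded CLEVR question file into VQA2-style questions and annotations."""
    questions, annotations = [], []
    for q in clevr["questions"]:
        ids = {"question_id": q["question_index"], "image_id": q["image_index"]}
        questions.append({**ids, "question": q["question"]})
        if "answer" in q:  # entries without an answer produce no annotation
            annotations.append({
                **ids,
                "multiple_choice_answer": q["answer"],
                # CLEVR has one ground-truth answer per question.
                "answers": [{"answer": q["answer"], "answer_id": 1}],
            })
    return {"questions": questions}, {"annotations": annotations}
```

The two returned dictionaries mirror the separate questions and annotations files used for VQA2, so each could be written out with `json.dump`.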

- Download the pre-trained model and put it into `${ROOT}/RAMEN_CKPTS`
- Execute `./scripts/ramen_CLEVR_test.sh`

@InProceedings{shrestha2019answer,

author = {Shrestha, Robik and Kafle, Kushal and Kanan, Christopher},

title = {Answer Them All! Toward Universal Visual Question Answering Models},

booktitle = {CVPR},

year = {2019}

}