Steps and Explorations in Interpretable / Explainable AI

- Feature Manipulations

- Individual Conditional Expectations (ICE)

- Partial Dependence Plots (PDP)

- Shapley Values

- Local Interpretable Model-Agnostic Explanations (LIME)

The trained machine learning model will be referred to as the underlying model.

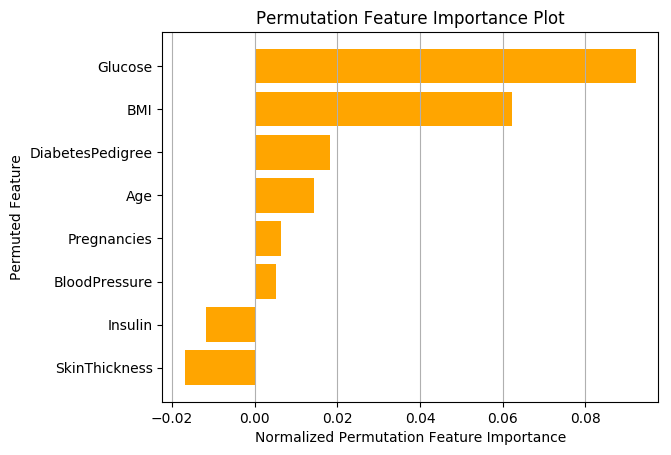

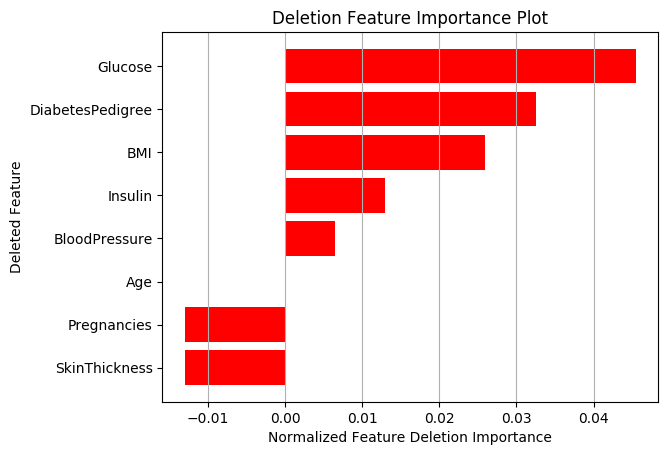

In feature manipulation, the underlying model is run multiple times with different feature combinations to estimate the contribution of each feature.
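
One possible reading of "different feature combinations" is a leave-one-feature-out experiment: retrain the model without each feature in turn and record how much the test accuracy drops. The sketch below is an illustration under that assumption, not necessarily the procedure used for the figures; the synthetic data and the helper name `leave_one_feature_out` are hypothetical.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split


def leave_one_feature_out(X, y, seed=0):
    """Return baseline test accuracy and the accuracy drop when each feature is removed."""
    X_tr, X_te, y_tr, y_te = train_test_split(
        X, y, test_size=0.3, random_state=seed
    )

    def accuracy(cols):
        # Retrain the model using only the selected feature columns.
        model = RandomForestClassifier(n_estimators=50, random_state=seed)
        model.fit(X_tr[:, cols], y_tr)
        return model.score(X_te[:, cols], y_te)

    all_cols = list(range(X.shape[1]))
    baseline = accuracy(all_cols)
    drops = []
    for j in all_cols:
        kept = [c for c in all_cols if c != j]
        drops.append(baseline - accuracy(kept))
    return baseline, np.array(drops)


# Synthetic data (an assumption for illustration): only feature 0 determines the label.
rng = np.random.default_rng(0)
X = rng.normal(size=(400, 3))
y = (X[:, 0] > 0).astype(int)

baseline, drops = leave_one_feature_out(X, y)
print("baseline accuracy:", baseline)
print("accuracy drop per removed feature:", drops)
```

A large drop suggests the model relies on that feature; drops close to zero suggest the feature is redundant or uninformative for this model.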

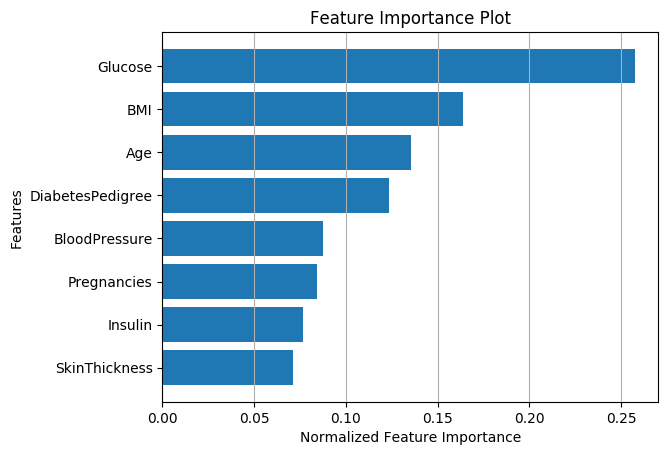

A RandomForestClassifier (from sklearn.ensemble) is used as the underlying model for experiments on direct feature manipulations.

Feature importance is read from the `feature_importances_` attribute of the tree-based ensemble estimators in scikit-learn, which is computed as the Mean Decrease in Impurity (MDI).
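
As a minimal sketch of reading MDI importances, the snippet below fits a `RandomForestClassifier` and prints its `feature_importances_`. The synthetic dataset is an assumption made for illustration and is not one of the datasets used in the experiments.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

# Synthetic data (an assumption): features 0 and 1 carry signal, 2 and 3 are noise.
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 4))
y = (X[:, 0] + 0.5 * X[:, 1] > 0).astype(int)

model = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)

# MDI importances are normalized so that they sum to 1 across features.
importances = model.feature_importances_
for j in np.argsort(importances)[::-1]:
    print(f"feature {j}: {importances[j]:.3f}")
```

MDI is computed from the training data only, so it can overstate the importance of high-cardinality features; it is best read as a quick first look rather than a final ranking.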

Here, for each feature in

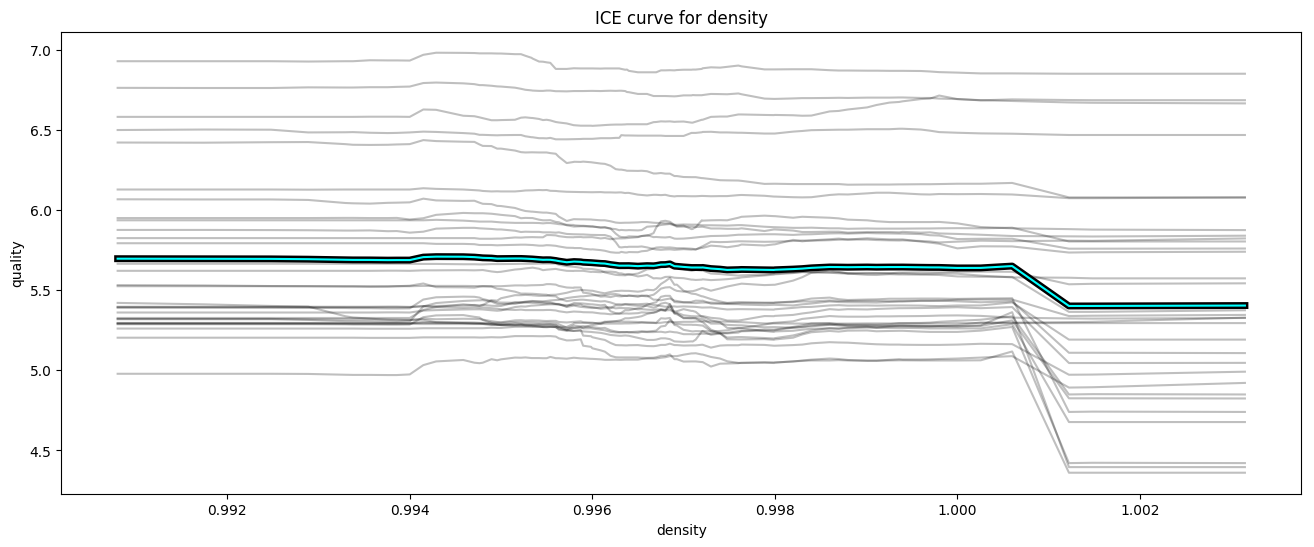

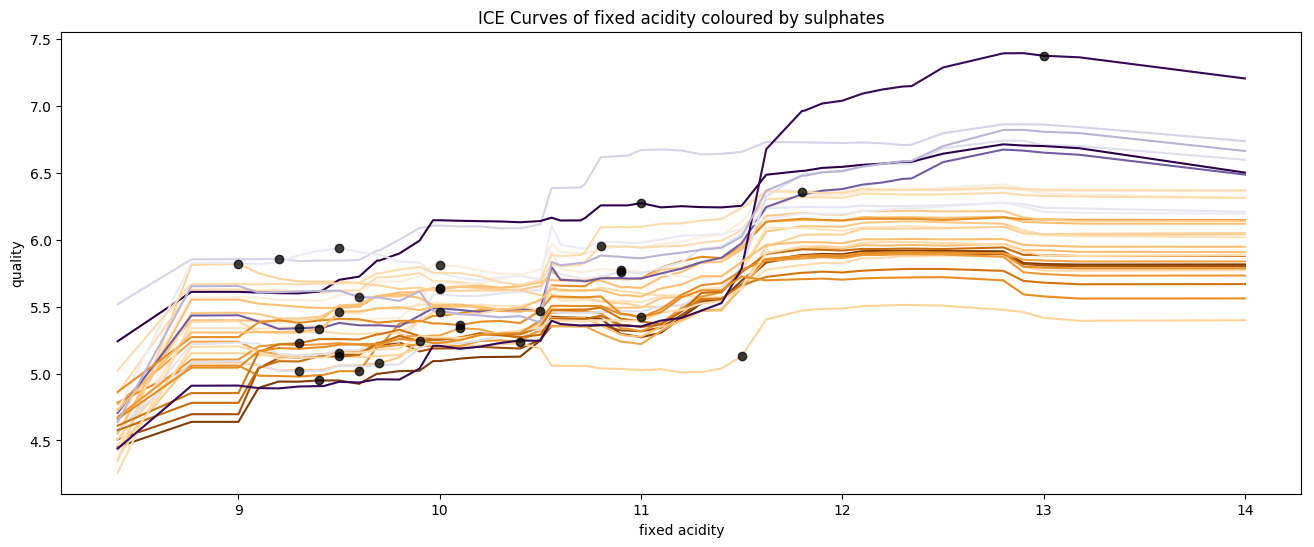

Individual Conditional Expectation (ICE) plots are a local, per-instance method that is useful for revealing feature interactions. They display one line per instance, showing how that instance's prediction changes as a feature changes.
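
The idea can be sketched in a few lines: for every instance, sweep one feature over a grid of values while keeping the instance's other features fixed, and record the prediction at each grid point. The function `ice_curves` and the stand-in model `toy_predict` are hypothetical names used only for illustration; any model's `predict` method could be passed instead.

```python
import numpy as np


def ice_curves(predict, X, feature, grid):
    """Return an array of shape (n_instances, len(grid)): one ICE curve per row."""
    curves = np.empty((X.shape[0], len(grid)))
    for k, value in enumerate(grid):
        X_mod = X.copy()
        X_mod[:, feature] = value  # overwrite the chosen feature, keep the rest fixed
        curves[:, k] = predict(X_mod)
    return curves


# Hypothetical stand-in for the underlying model, with an x0 * x1 interaction.
def toy_predict(X):
    return X[:, 0] * X[:, 1]


X = np.array([[0.0, 1.0], [0.0, -1.0], [0.0, 2.0]])
grid = np.array([0.0, 1.0, 2.0])

curves = ice_curves(toy_predict, X, feature=0, grid=grid)
print(curves)
```

Because of the interaction term, the three curves have different slopes (one even decreases). This is the kind of heterogeneity that ICE plots expose and that an average over instances would hide.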

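Formally, in ICE plots, for each instance in $\{(x_S^{(i)}, x_C^{(i)})\}_{i=1}^{N}$, the curve $\hat{f}_S^{(i)}$ is plotted against $x_S^{(i)}$ while $x_C^{(i)}$ remains fixed.

The $x_S$ are the features whose effect is being plotted, and $x_C$ are the other features used by the underlying model $\hat{f}$.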

In these examples, the underlying model is a RandomForestRegressor.

Examples from the Wine Quality Dataset are shown below.

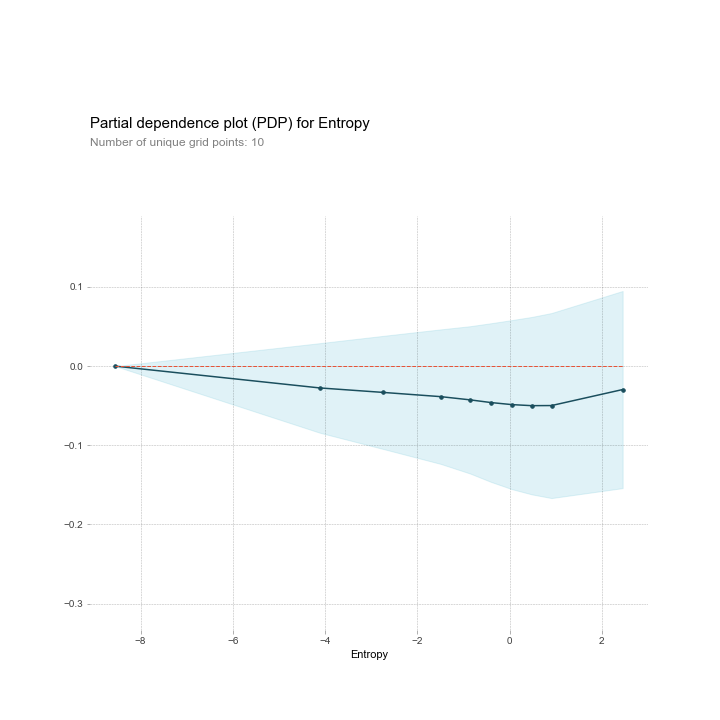

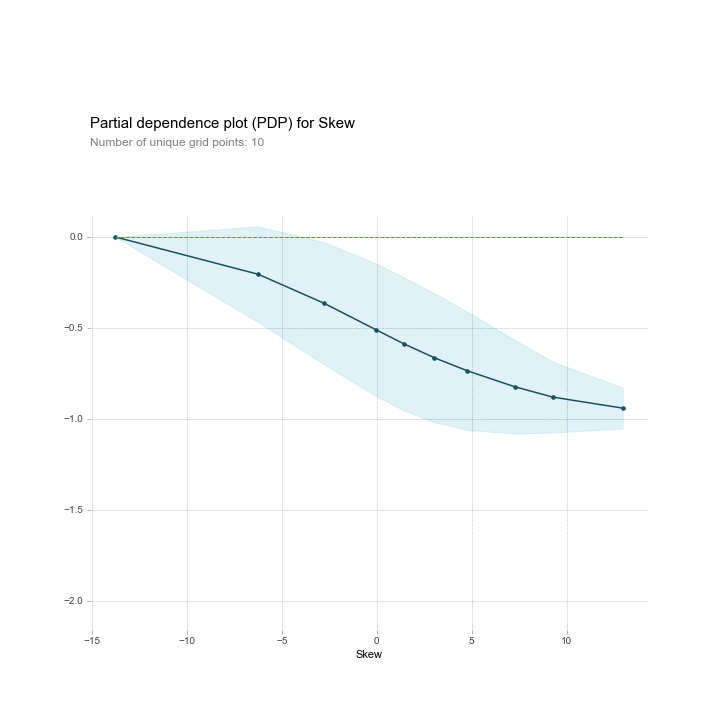

Partial dependence plots (PDP or PD plots for short) show the marginal effect that one or two features have on the predicted outcome of a machine learning model. A partial dependence plot can show whether the relationship between the target and a feature is linear, monotonic or more complex.

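For regression, the partial dependence function is:

$$\hat{f}_{x_S}(x_S) = E_{x_C}\left[\hat{f}(x_S, x_C)\right] = \int \hat{f}(x_S, x_C)\, d\mathbb{P}(x_C)$$

Again, $x_S$ are the features for which the partial dependence function is plotted and $x_C$ are the other features used by the underlying model $\hat{f}$.

The partial function $\hat{f}_{x_S}$ is estimated by averaging over the training data (the Monte Carlo method):

$$\hat{f}_{x_S}(x_S) = \frac{1}{N}\sum_{i=1}^{N} \hat{f}(x_S, x_C^{(i)})$$

The partial function tells us, for given value(s) of features $S$, what the average marginal effect on the prediction is.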
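
A Monte Carlo estimate of the partial dependence function can be sketched as follows: fix the feature of interest at a grid value for every training instance, predict, and average. The names `partial_dependence_1d` and `toy_model` are hypothetical and used only for illustration; the toy model stands in for the underlying model.

```python
import numpy as np


def partial_dependence_1d(predict, X, feature, grid):
    """Average prediction over the data with `feature` forced to each grid value."""
    pd_values = []
    for value in grid:
        X_mod = X.copy()
        X_mod[:, feature] = value  # set x_S for all instances, keep x_C as observed
        pd_values.append(predict(X_mod).mean())
    return np.array(pd_values)


# Hypothetical stand-in for the underlying model.
def toy_model(X):
    return 2.0 * X[:, 0] + X[:, 1] ** 2


X = np.array([[5.0, -1.0], [6.0, 0.0], [7.0, 1.0], [8.0, 2.0]])
grid = np.array([0.0, 1.0, 2.0])

pd_values = partial_dependence_1d(toy_model, X, feature=0, grid=grid)
print(pd_values)  # [1.5 3.5 5.5]
```

The result is the pointwise average of the per-instance ICE curves. For fitted scikit-learn estimators, `sklearn.inspection.PartialDependenceDisplay.from_estimator` can draw averaged curves, individual curves, or both through its `kind` parameter.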

The underlying model is an MLPClassifier with around 25 neurons in the hidden layer, and the dataset used is the BankNotes Authentication Dataset.

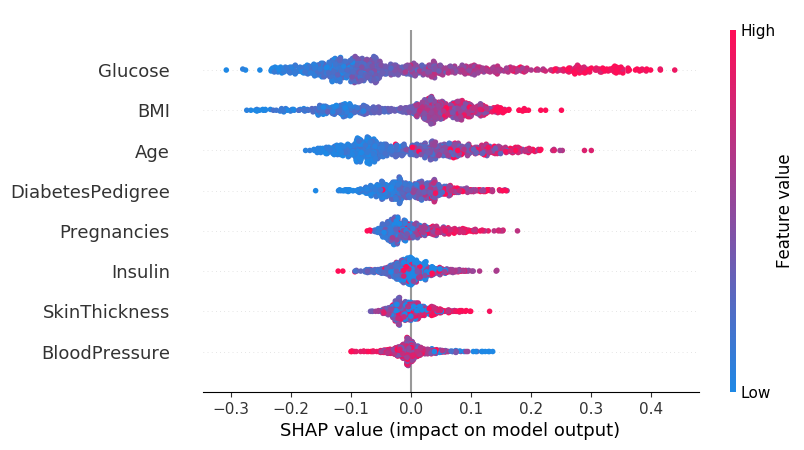

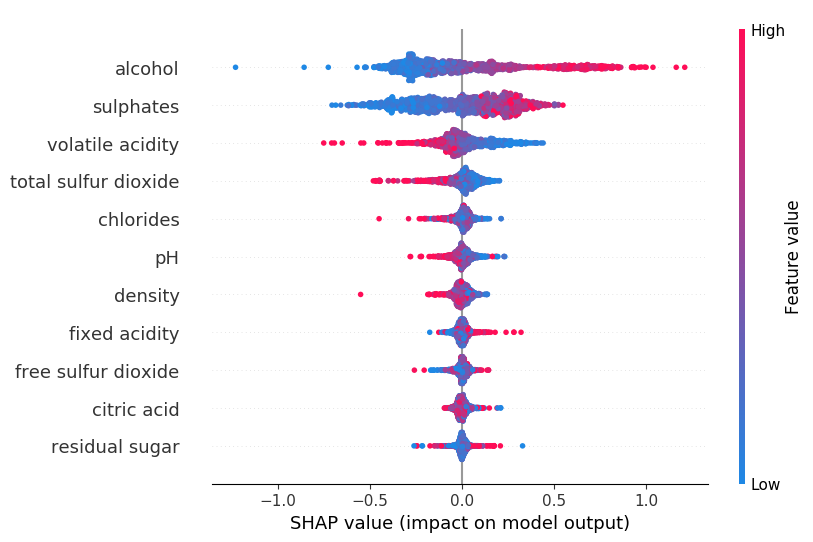

Shapley values come from cooperative game theory. In contrast to the empirical methods above, they give a theoretically grounded estimate of each feature's contribution to a prediction, and they also account for the order in which features are introduced.

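The contribution of feature $j$ to the prediction is its Shapley value:

$$\phi_j(val) = \sum_{S \subseteq \{1,\ldots,p\} \setminus \{j\}} \frac{|S|!\,(p - |S| - 1)!}{p!}\left(val(S \cup \{j\}) - val(S)\right)$$

where $S$ is a subset of the model's features that excludes $j$, $p$ is the number of features, and $val(S)$ is the prediction for the feature values in $S$, marginalized over the features not in $S$.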
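
For a handful of features, Shapley values can be computed exactly by enumerating every subset of the remaining features and weighting each marginal contribution. The sketch below does this for a hypothetical three-feature model. As a simplifying assumption, features outside a coalition are replaced by a baseline of zero rather than being marginalized over the data; `shapley_values`, `toy_model` and `value` are illustrative names.

```python
from itertools import combinations
from math import factorial


def shapley_values(value, n_features):
    """Exact Shapley values for a coalition value function `value(frozenset)`."""
    players = range(n_features)
    phi = [0.0] * n_features
    for j in players:
        others = [k for k in players if k != j]
        for size in range(len(others) + 1):
            # Weight |S|! (p - |S| - 1)! / p! for coalitions of this size.
            weight = (
                factorial(size)
                * factorial(n_features - size - 1)
                / factorial(n_features)
            )
            for subset in combinations(others, size):
                S = frozenset(subset)
                phi[j] += weight * (value(S | {j}) - value(S))
    return phi


# Hypothetical model and instance to explain.
def toy_model(z):
    return 2.0 * z[0] + 1.0 * z[1] + z[0] * z[2]


x = [1.0, 2.0, 3.0]


def value(S):
    # Assumption: features outside the coalition are set to a baseline of 0.
    z = [x[k] if k in S else 0.0 for k in range(len(x))]
    return toy_model(z)


phi = shapley_values(value, n_features=3)
print(phi)  # approximately [3.5, 2.0, 1.5]
```

The contributions sum to the difference between the full prediction (7.0) and the baseline prediction (0.0), and the interaction term is split evenly between features 0 and 2. The enumeration grows exponentially with the number of features, so practical tools rely on sampling or model-specific shortcuts.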

The underlying model is a RandomForestRegressor, trained on the Diabetes and the Wine Quality datasets.

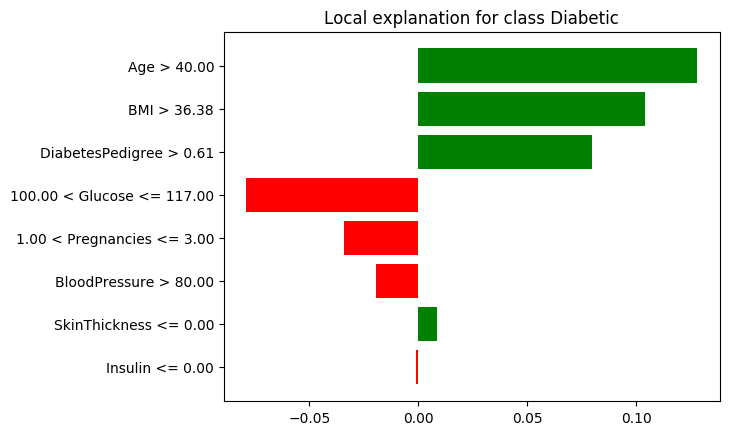

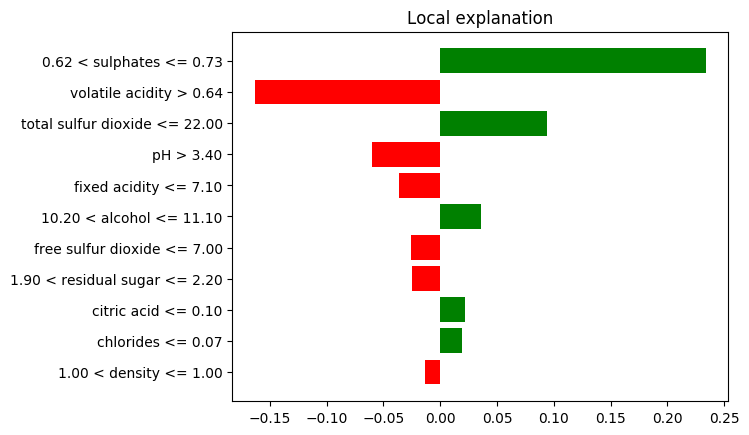

Local Interpretable Model-Agnostic Explanations (LIME) is a black-box, model-agnostic technique, meaning it is independent of the underlying model used. It is, however, local in nature: it generates an approximation and an explanation for each individual example/instance of the data. The explainer perturbs the model inputs in a representation that is more interpretable to humans and then fits a linear approximation locally, in the neighbourhood of the prediction.

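In general, the overall objective function is given by

$$\text{explanation}(x) = \arg\min_{g \in G} L(\hat{f}, g, \pi_x) + \Omega(g)$$

where $\hat{f}$ is the underlying model, $g$ is an interpretable model (for example a linear model) drawn from the family $G$ of possible explanations, $L$ is a loss measuring how closely $g$ approximates $\hat{f}$ in the neighbourhood of $x$, $\pi_x$ is the proximity measure that defines that neighbourhood, and $\Omega(g)$ penalizes the complexity of $g$.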
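
The core of this procedure can be sketched with NumPy alone: sample perturbations around the instance, weight them by proximity, and fit a weighted linear model. This is a simplified illustration and not the `lime` package itself. The Gaussian perturbations, the exponential kernel, the kernel width, and the names `lime_linear` and `black_box` are all assumptions, and the complexity penalty is omitted.

```python
import numpy as np


def lime_linear(predict, x, n_samples=2000, scale=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear surrogate around x; return (intercept, slopes)."""
    rng = np.random.default_rng(seed)
    Z = x + rng.normal(scale=scale, size=(n_samples, x.size))  # perturbed inputs
    y = predict(Z)                                             # black-box outputs

    # Proximity weights: closer samples count more (exponential kernel, an assumption).
    dist = np.linalg.norm(Z - x, axis=1)
    weights = np.exp(-(dist ** 2) / kernel_width ** 2)

    # Weighted least squares via row scaling by sqrt(weight).
    A = np.hstack([np.ones((n_samples, 1)), Z - x])
    sw = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return coef[0], coef[1:]


# Hypothetical black-box model: nonlinear in x0, linear in x1.
def black_box(Z):
    return Z[:, 0] ** 2 + 3.0 * Z[:, 1]


x = np.array([1.0, 0.0])
intercept, slopes = lime_linear(black_box, x)
print(intercept, slopes)
```

Near `x = (1, 0)` the fitted slopes should be close to the local gradient of the black box, roughly 2 for the first feature and 3 for the second, which is what makes the surrogate a local explanation.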

These are better illustrated by examples on the Diabetes and the Wine Quality datasets:

Probability of being Diabetic: 0.56

Prediction: Diabetic

Predicted Wine Quality: 5.8 / 10